Multi-threaded computing across multiple processors demoed — promises big gains in AI performance and efficiency

It's a proof-of-concept so far.

Simultaneous and Heterogeneous Multithreading (SHMT) may be the solution that can harness the power of a device's CPU, GPU, and AI accelerator all at once, according to a research paper from the University of California, Riverside. The paper claims that this new multithreading technique can double performance and halve power consumption, which works out to four times the efficiency. However, this is only a proof-of-concept in its early stages, so don't get excited too fast.

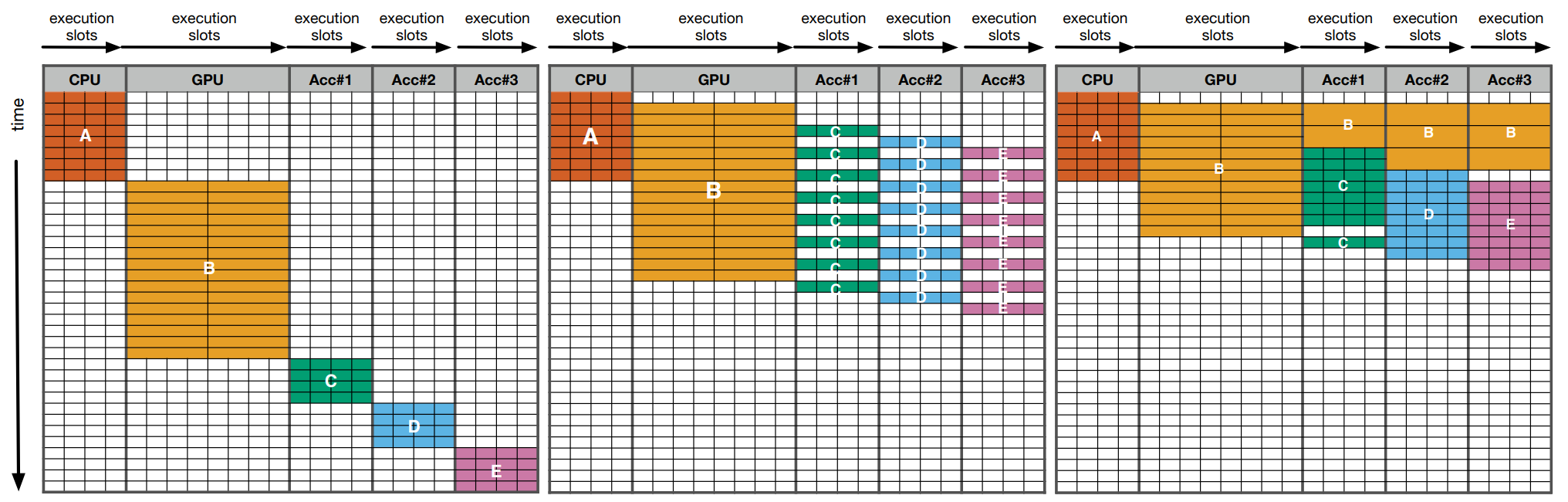

Many devices already use multithreading techniques like Simultaneous Multithreading (SMT), which divides a processor core into two threads for more efficient computing. However, SHMT spans multiple devices: a CPU, a GPU, and at least one AI-powered accelerator. The idea is to get each processor working on separate things simultaneously and even spread GPU and AI resources across multiple tasks.

According to the paper, authored by Hung-Wei Tseng and Kuan-Chieh Hsu, SHMT can improve performance by 1.95 times and reduce power consumption by 51%. These results were recorded on Nvidia's Maxwell-era Jetson Nano, which features a quad-core Arm Cortex-A57 CPU, 4GB of LPDDR4, and a 128-core GPU. Additionally, the researchers installed a Google Edge TPU into the Jetson's M.2 slot to provide the AI accelerator, as the Jetson doesn't come with one.
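The "four times the efficiency" headline figure follows from those two numbers. Here is a quick back-of-the-envelope check in Python, assuming the 51% describes a cut in average power draw (as the article words it) and that efficiency means performance per watt. If the 51% instead described total energy per task, the arithmetic would come out differently. The function and constant names are made up for this sketch.

```python
# Figures quoted in the article. Treating the 51% as a reduction in average
# power draw is an assumption made for this sketch.
SPEEDUP = 1.95          # performance relative to the baseline
POWER_REDUCTION = 0.51  # fraction of baseline power saved


def efficiency_gain(speedup: float, power_reduction: float) -> float:
    """Return the performance-per-watt gain relative to the baseline."""
    relative_power = 1.0 - power_reduction  # 0.49 of baseline power
    return speedup / relative_power


gain = efficiency_gain(SPEEDUP, POWER_REDUCTION)
print(f"Performance per watt: {gain:.2f}x the baseline")  # prints 3.98x
```

So the rounded claim of "double the performance at half the power" lands at just under 4x under these assumptions.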

The researchers achieved this result by creating a quality-aware work-stealing (QAWS) scheduler. Essentially, the scheduler is tuned to avoid high error rates and to balance the workload evenly among all components. Under QAWS policies, tasks that require high precision and accuracy won't be assigned to sometimes error-prone AI accelerators, and tasks will be dynamically reassigned to other components if one isn't meeting performance expectations.
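To make the idea more concrete, here is a toy Python sketch of quality-aware work stealing. It is not the paper's scheduler: the `Task` and `Device` classes, the `needs_high_precision` flag, and the "steal from the most loaded queue" rule are all assumptions chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    needs_high_precision: bool  # hypothetical flag, not taken from the paper


@dataclass
class Device:
    name: str
    approximate: bool  # True for an accelerator that may lose accuracy
    queue: list[Task] = field(default_factory=list)

    def can_run(self, task: Task) -> bool:
        # Quality rule: precision-critical work stays off approximate devices.
        return not (self.approximate and task.needs_high_precision)


def steal(thief: Device, devices: list[Device]) -> Task | None:
    """Move one eligible task from the most loaded other device to `thief`."""
    victims = sorted(
        (d for d in devices if d is not thief and d.queue),
        key=lambda d: len(d.queue),
        reverse=True,
    )
    for victim in victims:
        # Scan from the back of the victim's queue, the end its owner reaches last.
        for i in range(len(victim.queue) - 1, -1, -1):
            if thief.can_run(victim.queue[i]):
                task = victim.queue.pop(i)
                thief.queue.append(task)
                return task
    return None  # nothing the thief is allowed to run


cpu = Device("cpu", approximate=False)
gpu = Device("gpu", approximate=False)
tpu = Device("tpu", approximate=True)
cpu.queue = [Task("fft", True), Task("blur", False), Task("solve", True)]

stolen = steal(tpu, [cpu, gpu, tpu])
print(f"{tpu.name} stole {stolen.name}")  # the TPU skips "solve" and takes "blur"
```

In this sketch an idle TPU passes over the precision-critical `solve` task and picks up `blur` instead, while an idle GPU would be free to take either.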

With double the performance, half the power, and four times the efficiency, you might be wondering what the catch is. According to the paper, "the limitation of SHMT is not the model itself but more on whether the programmer can revisit the algorithm to exhibit the type of parallelism that makes SHMT easy to exploit." In other words, software must be written specifically to take advantage of SHMT, and not all software can use it to maximum effect.

Rewriting software is known to be a pain; for instance, Apple had to do lots of legwork when it switched its Macs from Intel to its in-house Arm chips. Regarding multithreading specifically, it can take a while for developers to adjust. It took several years for software to take advantage of multi-core CPUs, and we may be looking at a similar timeline for developers to utilize multiple components for the same task.

Additionally, the paper details how SHMT's performance uplift hinges on problem size. The figure of 1.95 times faster comes from the largest problem size the paper tested, and smaller problem sizes see lower performance gains. At the smallest problem size, there was essentially no performance benefit, since smaller problems offer fewer opportunities for all components to work in parallel.
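A toy model shows why small problems leave little room for gains. It assumes, purely for illustration, that a problem is cut into equal-cost chunks and that three equally fast devices each process one chunk at a time. Neither assumption comes from the paper, and the real devices are not equally fast.

```python
import math


def ideal_speedup(num_chunks: int, num_devices: int = 3) -> float:
    """Speedup over a single device when equal-cost chunks are spread out."""
    # Number of time steps needed when all devices work in parallel.
    rounds = math.ceil(num_chunks / num_devices)
    return num_chunks / rounds


for chunks in (1, 3, 4, 30):
    print(f"{chunks:>2} chunks -> {ideal_speedup(chunks):.2f}x")
```

With a single chunk there is nothing to share, so the speedup is 1.00x. Only larger problems produce enough chunks to keep every device busy.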


As computers of all sorts are increasingly shipped with multiple computing devices like AI processors, it's probably inevitable that developers will want to use more hardware to speed things up. Even if SHMT doesn't live up to the best-case scenario that the paper outlines, it could still boost PCs and smartphones if and when it or a similar technology gains mainstream momentum.

Matthew Connatser is a freelancing writer for Tom's Hardware US. He writes articles about CPUs, GPUs, SSDs, and computers in general.

-

slightnitpick

CPUs already have multiple "accelerators" such as floating point units. I'm kind of wondering whether this technology would allow more fractionation of a CPU into its components, or whether the already existing components of a CPU have been optimized enough to work together over the decades that this would result in no gains.

-

bit_user

> The researchers achieved this result by creating a quality-aware work-stealing (QAWS) scheduler.

The article skipped a fundamental step that's needed to appreciate what is being scheduled.

The way this works is that the authors propose that programs be re-factored into a set of high-level Virtual Operations (aka VOPs). These are sub-divided into one or more High-Level Ops (HLOPs), which are the basic scheduling atoms, in this system. Each HLOP would have an equivalent implementation compiled for each type of hardware engine (CPU, GPU, AI engine, etc.) that you want it to be able to utilize. The runtime scheduler is then deciding which engine should execute a given HLOP, based on the state of those queues.
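The structure described above can be sketched roughly as follows. This is a simplified Python illustration, not the paper's runtime: the `HLOP` class, `split_vop`, `dispatch`, `execute`, and the engine names are hypothetical, and plain Python functions stand in for per-engine compiled kernels. Picking the shortest queue at submission time is also an assumption standing in for the real run-time scheduling.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# One implementation of the same operation per engine type.
Impl = Callable[[list], list]


@dataclass
class HLOP:
    index: int              # position of this chunk within the VOP
    data: list
    impls: dict[str, Impl]  # engine name -> implementation


def split_vop(data: list, impls: dict[str, Impl], chunk_size: int) -> list[HLOP]:
    """Sub-divide one virtual operation into HLOPs, the scheduling atoms."""
    return [
        HLOP(n, data[i:i + chunk_size], impls)
        for n, i in enumerate(range(0, len(data), chunk_size))
    ]


def dispatch(hlops: list[HLOP], queues: dict[str, list[HLOP]]) -> None:
    """Send each HLOP to the supported engine with the shortest queue."""
    for hlop in hlops:
        candidates = [engine for engine in queues if engine in hlop.impls]
        target = min(candidates, key=lambda engine: len(queues[engine]))
        queues[target].append(hlop)


def execute(queues: dict[str, list[HLOP]]) -> list:
    """Run every queued HLOP with its engine's implementation, then reassemble."""
    done = []
    for engine, queue in queues.items():
        for hlop in queue:
            done.append((hlop.index, hlop.impls[engine](hlop.data)))
    return [value for _, chunk in sorted(done) for value in chunk]


def double(xs: list) -> list:
    return [2 * x for x in xs]


impls = {"cpu": double, "gpu": double, "tpu": double}  # stand-ins for real kernels
queues = {"cpu": [], "gpu": [], "tpu": []}
data = list(range(8))

dispatch(split_vop(data, impls, chunk_size=2), queues)
result = execute(queues)
print({engine: len(q) for engine, q in queues.items()}, result)
```

The point of the sketch is only that the application sees one operation, while the runtime is free to place each chunk on whichever engine has room.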

For further details, see Section 3 of the paper:

https://dl.acm.org/doi/pdf/10.1145/3613424.3614285

As the article suggests, this is very invasive to how software is written. The paper suggests that application code might not have to change, so long as major runtime libraries can incorporate the changes. Some examples of places where you might see benefits are:

* programs using AI, where work can be flexibly divided up between CPU, GPU, and AI accelerator.
* portions of video game code, such as physics, where you have similar flexibility over where some parts of the computation are processed.

Key limitations are:

* The main control still happens on the CPU.
* Some code must be restructured to use HLOPs and they must compile for the various accelerators.
* Code which is mostly serial won't benefit from being able to run on the GPU, so these operations must be things that are already good candidates for (and likely sometimes already subject to) GPU implementations.

Finally, the paper prototypes this on an old Nvidia SoC, similar to the one powering the Nintendo Switch. This is a shared-memory architecture, which is probably why the paper makes almost no mention of data movement or data locality. In such a system as they describe, you could account for data movement overhead when making scheduling decisions, but that would put a lot more burden on the scheduler and ideally require applications to queue up operations far enough in advance that the scheduler has adequate visibility.

In the end, I'd say I prefer a pipeline-oriented approach, where you define an entire processing graph and let the runtime system assign portions of it to the various execution engines (either statically or dynamically). This gives the scheduler greater visibility into both processing requirements and data locality. It's also a good fit for how leading AI frameworks decompose the problem. In fact, I know OpenVINO has support for hybrid computation, and probably does something like this.
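As a rough illustration of that pipeline-oriented idea, the Python sketch below statically assigns the nodes of a small processing graph to engines, preferring the engine where an input was already produced (a crude stand-in for data locality). The graph, the node and engine names, and the placement rule are all hypothetical. This is not how OpenVINO or any specific framework works.

```python
from graphlib import TopologicalSorter

# node -> set of nodes it depends on (a made-up image pipeline)
graph = {
    "decode": set(),
    "resize": {"decode"},
    "detect": {"resize"},
    "overlay": {"resize", "detect"},
}

# node -> engines able to run it, in order of preference (assumed values)
supported = {
    "decode": ["cpu"],
    "resize": ["gpu", "cpu"],
    "detect": ["npu", "gpu"],
    "overlay": ["gpu", "cpu"],
}


def assign(graph: dict, supported: dict) -> dict:
    """Statically place each node, favoring the engine that holds its inputs."""
    placement = {}
    for node in TopologicalSorter(graph).static_order():
        # Engines of already-placed predecessors that can also run this node.
        local = [
            placement[dep]
            for dep in sorted(graph[node])
            if placement[dep] in supported[node]
        ]
        placement[node] = local[0] if local else supported[node][0]
    return placement


placement = assign(graph, supported)
print(placement)
```

Because the whole graph is known up front, the placement step can see every dependency before anything runs, which is the visibility advantage the comment describes.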

It's an interesting paper, but I don't know if it's terribly ground-breaking or if it will be very consequential. But it's always possible that Direct3D or some other framework decides to utilize this approach to provide the benefits of hybrid computation. This could be important as we move into a world where AI (inferencing) workloads are expected to run on a variety of PCs, including some with AI accelerators and many without.

-

bit_user

> slightnitpick said: CPUs already have multiple "accelerators" such as floating point units.

These are very tightly-integrated. Floating point instructions are intermingled directly in the instruction stream of a program.

In the backend of a CPU, there is often some decoupling between the integer & floating point pipelines, since they tend to have separate registers and relatively little data movement between them. The best way to learn about this stuff is probably to just start reading some deep analysis of modern microarchitectures. For instance:

https://chipsandcheese.com/2021/12/02/popping-the-hood-on-golden-cove/
https://chipsandcheese.com/2022/12/25/golden-coves-lopsided-vector-register-file/
https://chipsandcheese.com/2022/11/05/amds-zen-4-part-1-frontend-and-execution-engine/
https://chipsandcheese.com/2022/11/08/amds-zen-4-part-2-memory-subsystem-and-conclusion/
https://chipsandcheese.com/2023/10/27/cortex-x2-arm-aims-high/

Going further back in history, you can find some worthwhile analysis on Anandtech and RealWorldTech:

https://www.anandtech.com/show/5057/the-bulldozer-aftermath-delving-even-deeper
https://www.anandtech.com/show/3922/intels-sandy-bridge-architecture-exposed
https://www.realworldtech.com/sandy-bridge/

You can sometimes find lots of details at WikiChip. It varies quite a bit, though.

https://en.wikichip.org/wiki/amd/microarchitectures/zen

> slightnitpick said: I'm kind of wondering whether this technology would allow more fractionation of a CPU into its components, or whether the already existing components of a CPU have been optimized enough to work together over the decades that this would result in no gains.

If an app is targeted at CPUs and the instruction set extensions are known, then this basically turns into a fancy work-stealing implementation. While work-stealing is a popular approach for parallelizing and load-balancing programs, the logical abstractions they introduce would be unnecessarily burdensome for a pure-CPU application.

-

slightnitpick

> bit_user said: The best way to learn about this stuff is probably to just start reading some deep analysis of modern microarchitectures.

Thanks. Your comments are already a bit beyond my knowledge and I have other things I need to spend attention on, but I appreciate your explanations!

-

michalt

> bit_user said: The article skipped a fundamental step that's needed to appreciate what is being scheduled.

Thanks for your really great writeup. I feel like I don't need to read the paper to understand the core of what they did.

-

Geef

We all know that there will be a few rich dudes who are somehow able to fit more than 2 graphics cards onto a motherboard and using this will be getting mega FPS.

Upgrading your GPU? No problem just move old card down a slot and it can be the secondary processor for your new main GPU!

-

abufrejoval

> bit_user said: The article skipped a fundamental step that's needed to appreciate what is being scheduled.
>
> The way this works is that the authors propose that programs be re-factored into a set of high-level Virtual Operations (aka VOPs). These are sub-divided into one or more High-Level Ops (HLOPs), which are the basic scheduling atoms, in this system. Each HLOP would have an equivalent implementation compiled for each type of hardware engine (CPU, GPU, AI engine, etc.) that you want it to be able to utilize. The runtime scheduler is then deciding which engine should execute a given HLOP, based on the state of those queues.

This reminds me of large HPC scale-out work I've seen in an EU-project called TANGO, with researchers from the Barcelona Supercomputing Centre. There the goal was also to take better advantage of shifting supercomputing resources and opportunistically schedule heterogeneous workloads to match time and energy budgets, basically inspired by how Singularity schedules HPC jobs.

> bit_user said: Key limitations are:
>
> The main control still happens on the CPU.
>
> Some code must be restructured to use HLOPS and they must compile for the various accelerators.

They also instrumented the code (typically HPC libraries) and profiled the different variants to check for energy consumption vs. performance metrics to serve as inputs to the scheduler for the (uninstrumented) production runs.

Control transfer in this HPC code is mostly via message passing, and whether messages pass between identical or distinct architectures is what the outer scheduler/resource allocator decides based on the profiling information and the workload description. The inner scheduler then just does thread pool management without global run-time knowledge.

> bit_user said: Code which is mostly serial won't benefit from being able to run on the GPU, so these operations must be things that are already good candidates (and likely sometimes subject to) GPU implementations.

These techniques are absolutely niche and require significant extra effort just to make them work. So they require a significant scale to pay for that work. In this case, at the very small end with the Tegra SoC, the use case may be robotics, where you're hunting for that extra performance at a fixed budget. At the other end, in HPC, getting the typically disastrously bad utilization of supercomputers up even a few percent almost saves you from having to buy another, and a lot of those libraries are being reused constantly.

Managing heterogeneous computing resources flexibly at run-time, potentially adding scale-out and fault-tolerance, is about as difficult as it gets. So finding a generic and reusable approach to solving it can't be much easier.

But on the other hand, what used to be an entirely esoteric issue in a few niche applications is becoming something that today already affects smartphone workloads, which may want to squeeze an optimum out of various different types of processors and accelerators, but may not be allowed to just monopolize that phone, either. With a user base counting in billions, some things that have seemed very esoteric gain critical mass to pay a team of developers.

E.g. I've seen research on how to chunk up DSP workloads in such a manner that they can both be running in a shared environment yet maintain their real-time quality requirements across a base platform that has orders of magnitude different hardware capabilities. And that actually requires a hardware-software co-design.

I believe that type of development won't easily be replaced by AI, but AI might help to make that more affordable, too.