Engineer 'builds a GPU from scratch' in two weeks — process much harder than he expected

The 'CPU from scratch' guy has done it again.

An engineer has shared his journey in “building a GPU from scratch with no prior experience.” As with his earlier project of designing a CPU from scratch, Adam Majmudar took just two weeks to complete this cerebral feat. In a Twitter/X thread, Majmudar takes us through the process step by step and admits that designing a GPU was much harder than he expected. To be clear, the project currently concludes with a Verilog design that was run through the OpenLane EDA flow to produce and verify a chip layout. However, the GPU is going to be submitted for tapeout via Tiny Tapeout 7, so it is destined to become a physical chip in the coming months.

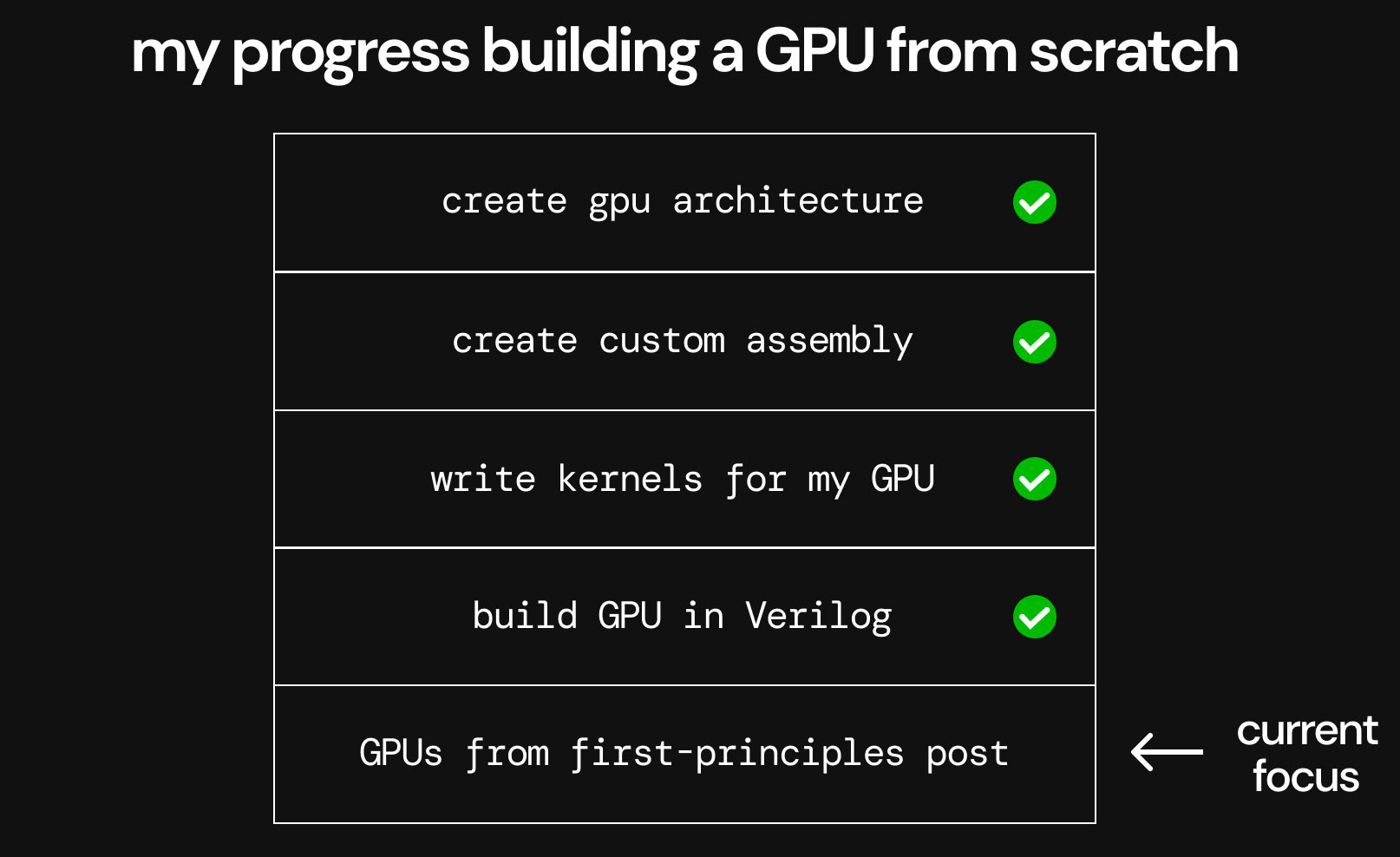

Above you can see the flow of tasks Majmudar worked through to design his GPU. However, as a ‘from scratch’ project, it required a lot of study and thought before even the first step was tentatively taken. Last time, we highlighted the engineer’s concern that GPUs would be a relatively difficult field of study due to the dominance of proprietary tech, and that prediction came true.

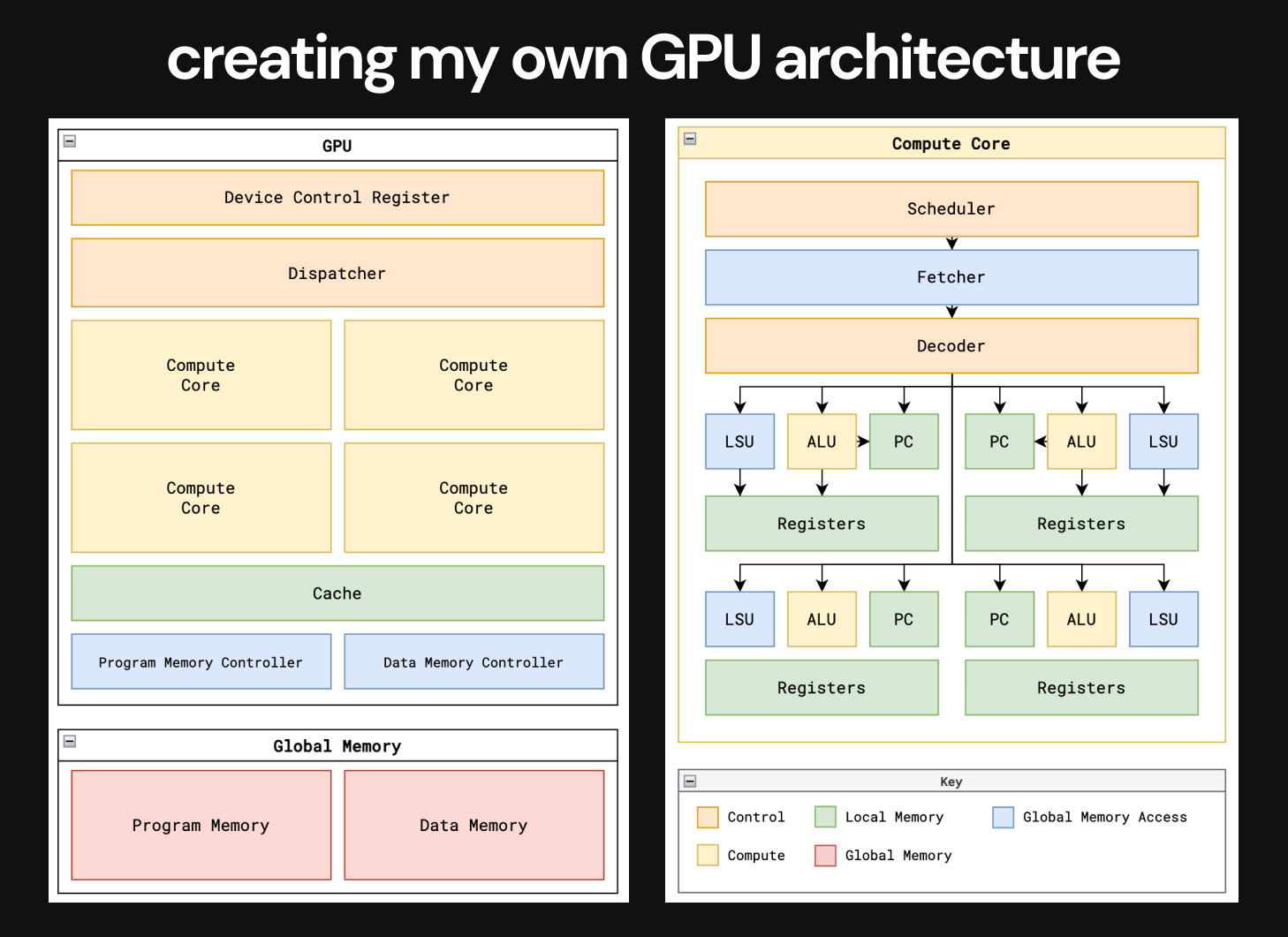

After several iterations of the architecture above, Majmudar decided to focus on general-purpose GPU (GPGPU) computing capabilities. He therefore adjusted his Instruction Set Architecture (ISA), which features just 11 instructions, to suit that goal. Next, the engineer wrote two matrix math kernels to run on his GPU. These matrix addition and multiplication kernels demonstrate the GPU's key functionality and show its potential usefulness for graphics and machine learning tasks.
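To illustrate what such a "kernel" does, here is a minimal Python simulation of the single-program, many-threads pattern that GPU matrix kernels typically follow: every thread runs the same code and uses its block and thread indices to pick the one output element it is responsible for. This is a hypothetical sketch, not Majmudar's code; his kernels are written for his own 11-instruction ISA. The names `launch`, `block_idx`, `block_dim`, and `thread_idx` are assumptions borrowed from common GPU programming conventions, and matrices are assumed to be stored as flat, row-major lists.

```python
from typing import Callable

# Hypothetical kernel signature: (block_idx, block_dim, thread_idx, memory) -> None
Kernel = Callable[[int, int, int, dict], None]


def launch(kernel: Kernel, num_threads: int, block_dim: int, memory: dict) -> None:
    """Run `kernel` once per thread, grouped into blocks of `block_dim` threads.

    Real GPU hardware runs the threads of a block in parallel; this sketch
    simply loops over them one after another.
    """
    num_blocks = (num_threads + block_dim - 1) // block_dim  # ceiling division
    for block_idx in range(num_blocks):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, memory)


def matadd_kernel(block_idx: int, block_dim: int, thread_idx: int, memory: dict) -> None:
    # Each thread computes exactly one element of C = A + B.
    i = block_idx * block_dim + thread_idx
    if i < len(memory["C"]):  # guard: the last block may have spare threads
        memory["C"][i] = memory["A"][i] + memory["B"][i]


def matmul_kernel(block_idx: int, block_dim: int, thread_idx: int, memory: dict) -> None:
    # Each thread computes one element C[row][col] of an N x N matrix product.
    n = memory["N"]
    i = block_idx * block_dim + thread_idx
    if i < n * n:
        row, col = divmod(i, n)
        acc = 0
        for k in range(n):
            acc += memory["A"][row * n + k] * memory["B"][k * n + col]
        memory["C"][i] = acc


if __name__ == "__main__":
    mem = {"N": 2, "A": [1, 2, 3, 4], "B": [5, 6, 7, 8], "C": [0] * 4}
    launch(matmul_kernel, num_threads=4, block_dim=2, memory=mem)
    print(mem["C"])  # [19, 22, 43, 50]
```

The point of the pattern is that no thread loops over the whole matrix; the work is split by index, which is what lets parallel hardware speed it up.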

Up to this point, things had gone relatively smoothly for the engineer, but building his GPU in Verilog presented “many issues.” Advice from the (in)famous George Hotz helped Majmudar past his first and second hurdles, which concerned memory and the warp-scheduler implementation, but it took a third rewrite of his code to fix compute core execution scheduling.

After some further unspecified redesigns came the proof of the pudding: a video, shared in the Tweet thread, showing the matrix addition kernel running and validating.

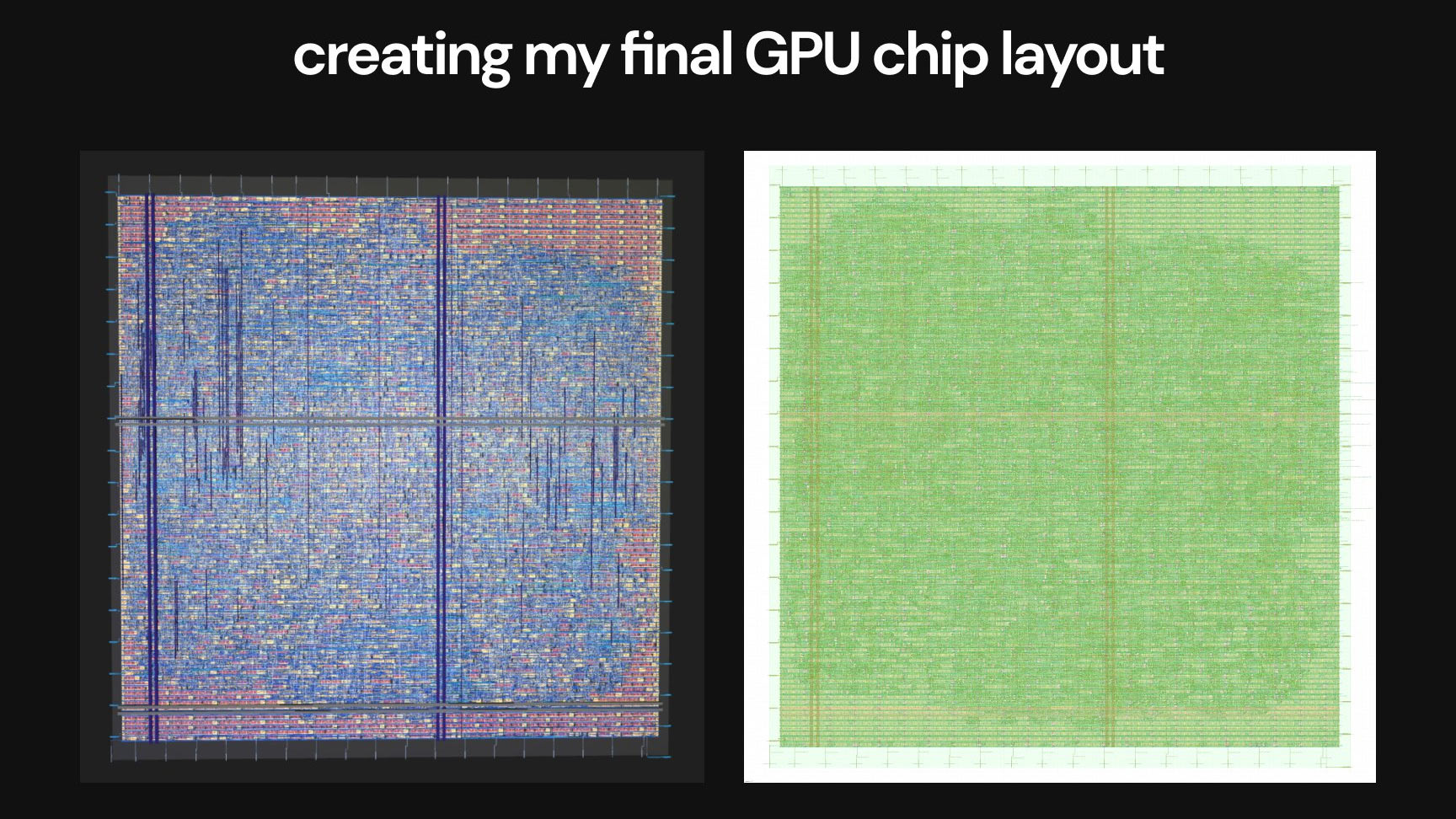

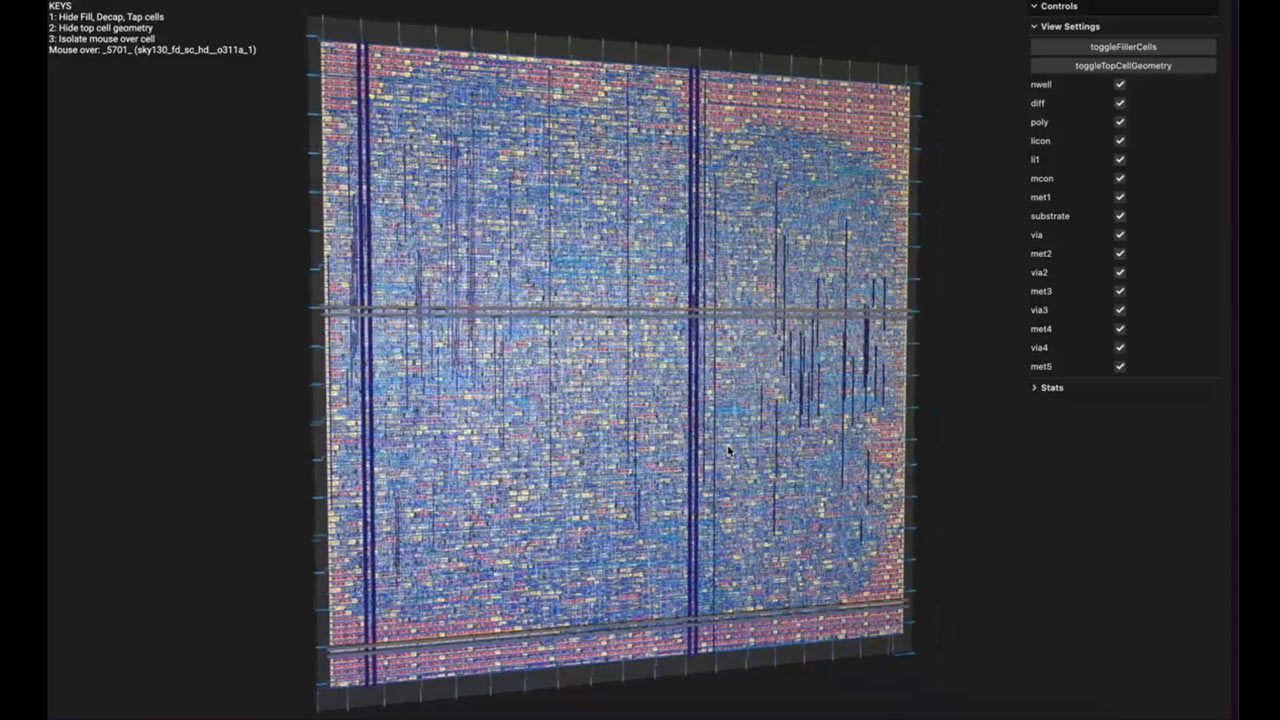

Lastly, the completed Verilog design was passed through OpenLane EDA, targeting the SkyWater 130nm process node (for Tiny Tapeout). Again, some issues needed to be ironed out; in particular, Majmudar explains that some Design Rule Checks (DRCs) failed and required rework.

After the two-week effort, the engineer enjoyed playing with a cool 3D visualization of his GPU design. That will have to suffice until TT7 returns silicon to participants. Of course, the chip isn't going to rank among the best graphics cards. If you want to read more about this homemade GPU, check out the entertaining social media thread and/or the dedicated Tiny-GPU GitHub page.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.


Order 66: So, how powerful is this thing? I mean, on a 130nm process node it can't be that fast, considering 130nm was used in GPUs in 2001.

cyrusfox: I will be interested to see how they navigate the display drivers, as well as what resolutions and output technologies this can support. Doing all this solo in 2 weeks is amazing; I hope it powers on with the first tapeout. Best of luck.

rfdevil (replying to Order 66 and cyrusfox):

It's not a GPU. Back in the day (like 30 years ago), something like this might have been slotted into a CPU as an accelerated math unit, or used as a co-processor to help a CPU with certain math functions. Interesting project, but far from what it is being reported as. The same applies to the CPU article, whose design was even further from a CPU than this design is from a GPU.

helfer.pesche (quoting Sam Bridges: “I gotta ask. Can it run Crysis?”):

No, but maybe someone will run Doom on it ;)

Cheers. 🤣

Sam Bridges (replying to helfer.pesche):

Well played mate, you win the Internet today. 🤣

Cheers.

Conor Stewart (replying to rfdevil):

Yeah, it doesn't seem like much of a GPU: it has 4 compute units with 4 processors each, and nothing seems specific to graphics. Like you say, it seems much more like a maths accelerator.

It's also going to be very limited with only 11 instructions.

I can't help but feel that this guy is rushing it. First a CPU in two weeks and then a GPU in two weeks, and neither is that good. He has likely missed a lot of things and made quite a few bad design choices. In my opinion, it would have been better to take more time and do more research to produce a better CPU from the start, rather than rushing through a pretty bad design and calling it done.

He would also likely learn more by slowing down, for example by learning the best design practices rather than pushing through with bad design decisions.

rfdevil (replying to Conor Stewart):

Agreed. It seems like he just wanted a crash course in digital design to better understand the overall flow, which is commendable, and it is impressive how much he was able to accomplish in the short time frame. However, as you mention, given the short design time and his seemingly considerable reliance on AI for help, a lot of core understanding was most likely missed. I would be curious to see what the target frequency is and how he intends to actually test the chip after tapeout. Bad design or not, if the chip is at least functional at a halfway decent frequency for the process node, it would be a nice accomplishment given the very short design time.