GPUs can now use PCIe-attached memory or SSDs to boost VRAM capacity: Panmnesia's CXL IP claims double-digit nanosecond latency

Provided that AMD, Intel, and Nvidia decide to support CXL for memory expansion.

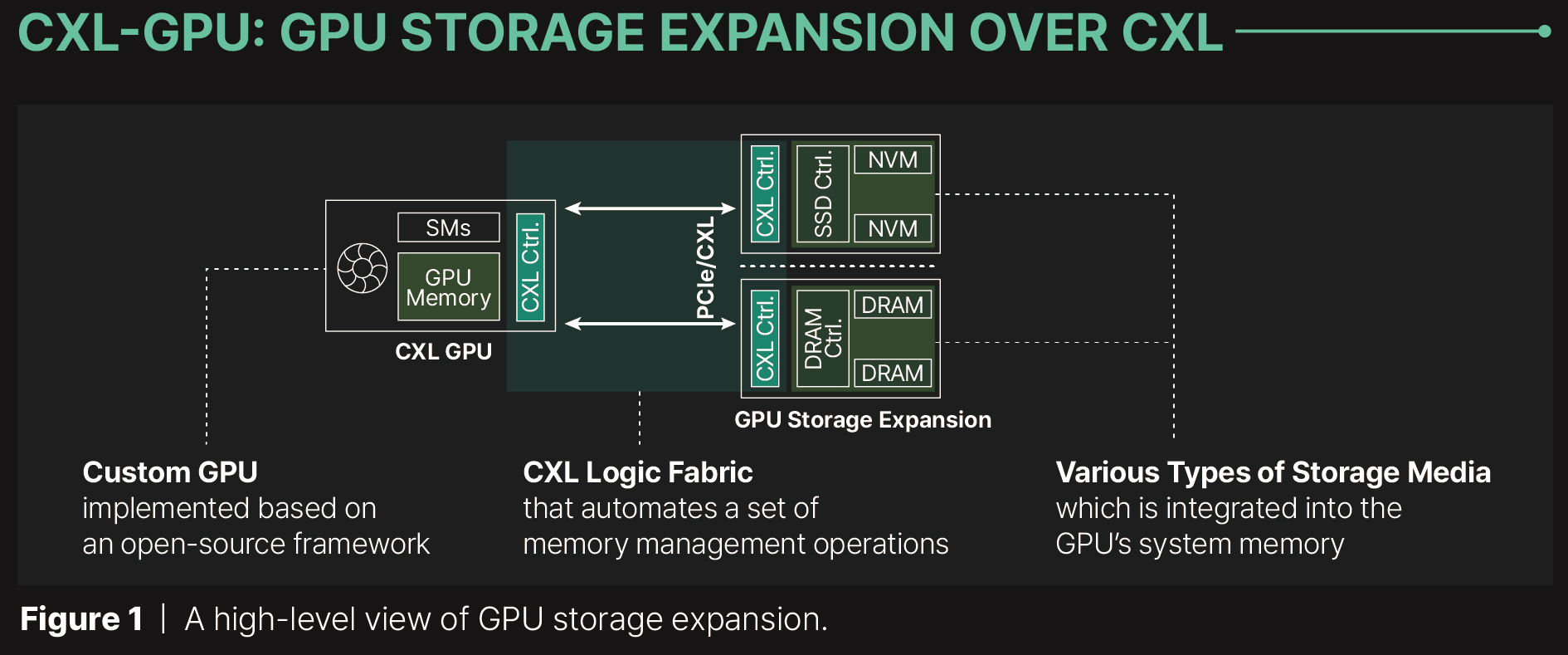

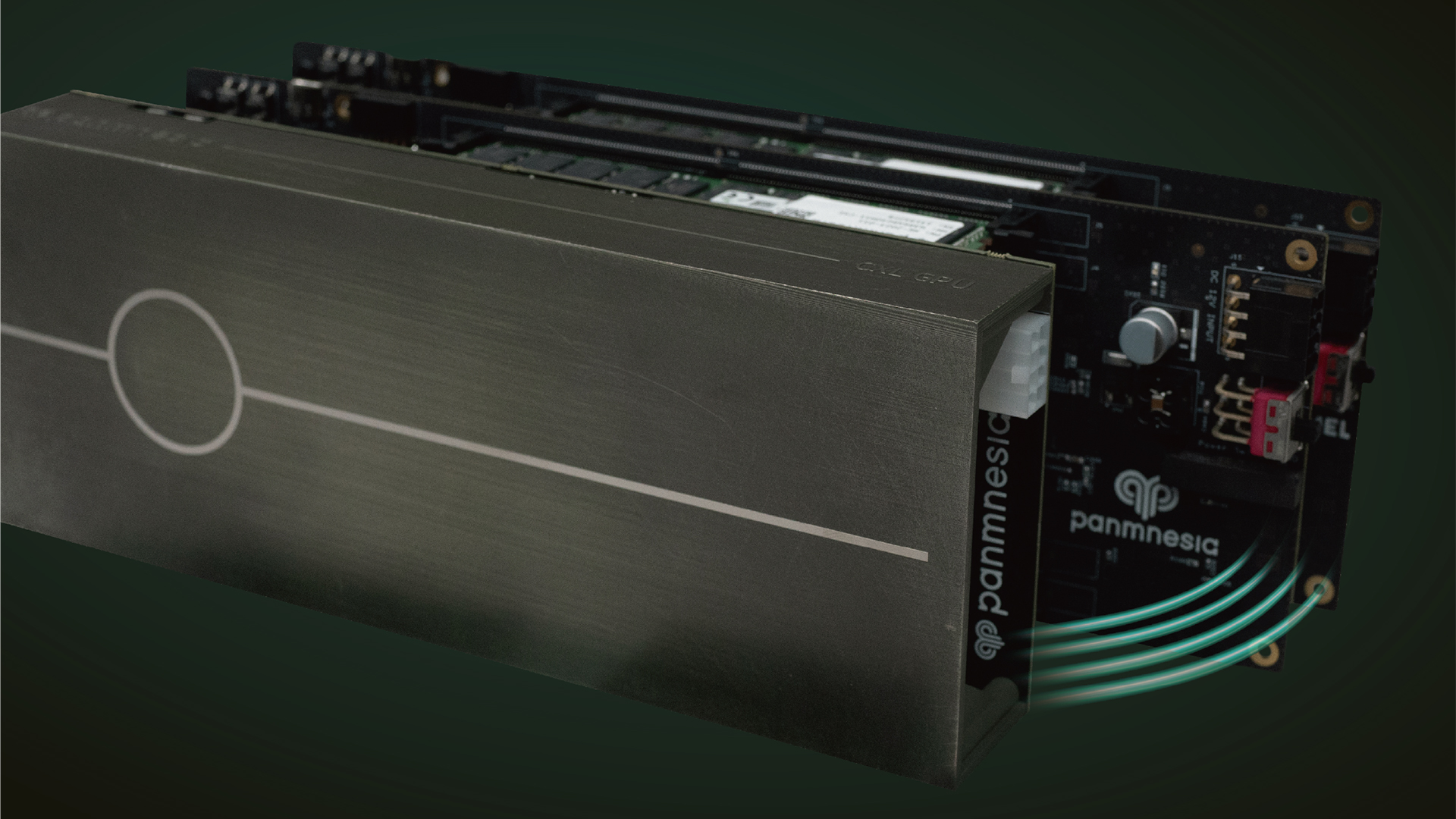

Modern GPUs for AI and HPC applications come with a finite amount of high-bandwidth memory (HBM) built into the device, which limits their performance in AI and other workloads. However, new tech will allow companies to expand GPU memory capacity by slotting in more memory on devices connected to the PCIe bus, instead of being limited to the memory built into the GPU. It even allows SSDs to be used for memory capacity expansion. Panmnesia, a company backed by South Korea's renowned KAIST research institute, has developed a low-latency CXL IP that could be used to expand GPU memory using CXL memory expanders.

The memory requirements of more advanced datasets for AI training are growing rapidly, which means that AI companies either have to buy new GPUs, use less sophisticated datasets, or use CPU memory at the cost of performance. Although CXL is a protocol that formally works on top of a PCIe link, thus enabling users to connect more memory to a system via the PCIe bus, the technology has to be recognized by an ASIC and its subsystem, so just adding a CXL controller is not enough to make the technology work, especially on a GPU.

Panmnesia faced challenges integrating CXL for GPU memory expansion due to the absence of a CXL logic fabric and subsystems that support DRAM and/or SSD endpoints in GPUs. In addition, GPU cache and memory subsystems do not recognize any expansions except unified virtual memory (UVM), which tends to be slow.

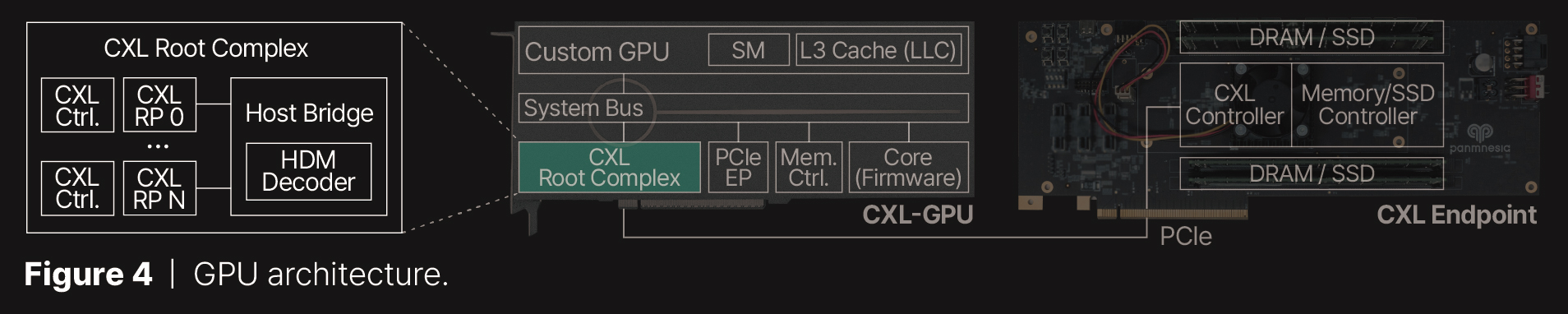

To address this, Panmnesia developed a CXL 3.1-compliant root complex (RC) equipped with multiple root ports (RPs) that support external memory over PCIe, and a host bridge with a host-managed device memory (HDM) decoder that connects to the GPU's system bus. The HDM decoder, responsible for managing the address ranges of system memory, essentially makes the GPU's memory subsystem 'think' that it is dealing with system memory, while in reality the subsystem uses PCIe-connected DRAM or NAND. That means either DDR5 or SSDs can be used to expand the GPU memory pool.
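To make the HDM decoder's role more concrete, here is a minimal Python sketch of how such a decoder might route a host physical address (HPA) to one of several root ports. It is a simplified model, not Panmnesia's design and not the exact algorithm in the CXL specification: the `HdmDecoder` class, its fields, and the modulo-based round-robin target selection are illustrative assumptions (real decoders select targets from specific address bits).

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class HdmDecoder:
    """Hypothetical, simplified HDM decoder: one HPA window interleaved across targets."""
    base: int           # first host physical address covered by this decoder
    size: int           # window size in bytes
    granularity: int    # interleave granularity in bytes
    targets: List[str]  # one entry per interleave way, e.g. root-port names

    def decode(self, hpa: int) -> Optional[Tuple[str, int]]:
        """Return (target, device-relative address), or None if hpa is outside the window."""
        if not (self.base <= hpa < self.base + self.size):
            return None  # not CXL-backed in this model; handled elsewhere (e.g. local memory)
        offset = hpa - self.base
        ways = len(self.targets)
        chunk = offset // self.granularity   # which interleave chunk the address falls in
        target = self.targets[chunk % ways]  # chunks rotate round-robin across targets
        # Each target stores only its own chunks, packed densely, so drop the "way" index.
        dpa = (chunk // ways) * self.granularity + offset % self.granularity
        return target, dpa


dec = HdmDecoder(base=0x1_0000_0000, size=2 * 1024**3, granularity=256, targets=["RP0", "RP1"])
print(dec.decode(0x1_0000_0000 + 256))  # ('RP1', 0)
print(dec.decode(0x1_0000_0000 + 522))  # ('RP0', 266)
```

The point of the model is that the GPU's memory subsystem only ever issues ordinary addresses; the decoder alone decides which CXL device, and which offset on it, actually serves the access.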

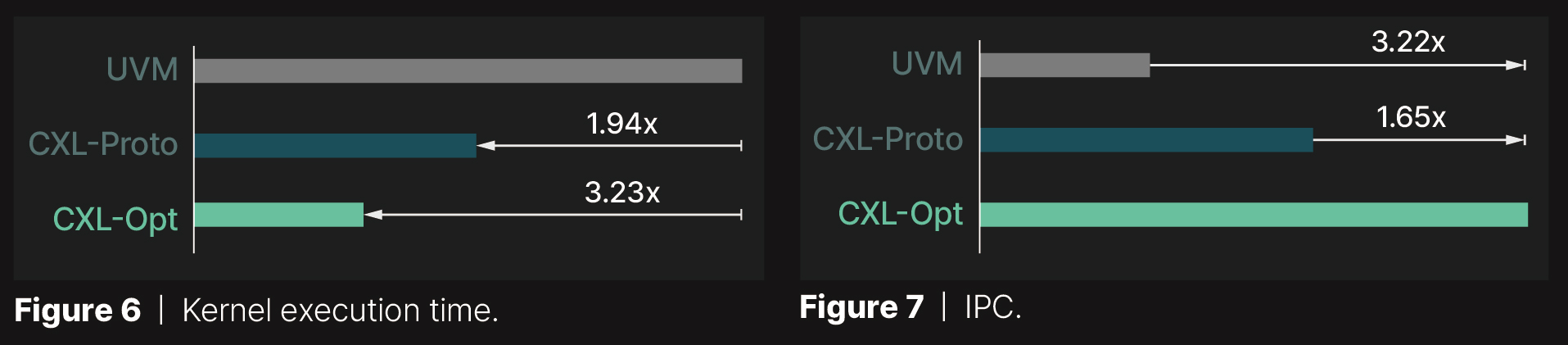

The solution (based on a custom GPU and marked as CXL-Opt) underwent extensive testing and showed a two-digit nanosecond round-trip latency, including the time needed for protocol conversion between standard memory operations and CXL flit transmissions, according to Panmnesia. By comparison, prototypes developed by Samsung and Meta (marked as CXL-Proto in the graphs below) showed a round-trip latency of 250ns. The IP has been successfully integrated into both memory expanders and GPU/CPU prototypes at the RTL level, demonstrating its compatibility with various computing hardware.
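One standard way to see why round-trip latency matters is Little's law: with a fixed number of requests in flight, sustained throughput equals the data in flight divided by latency. The sketch below assumes 64 outstanding 64-byte requests and uses 80 ns purely as a hypothetical stand-in for a "two-digit" latency, since the exact CXL-Opt figure isn't stated here; the numbers are illustrative, not measurements.

```python
def sustained_bandwidth_gbps(outstanding: int, request_bytes: int, latency_ns: float) -> float:
    """Little's law: throughput = data in flight / latency. 1 byte/ns == 1 GB/s."""
    return outstanding * request_bytes / latency_ns


# Assumed: 64 requests in flight, each a 64-byte cache line.
print(sustained_bandwidth_gbps(64, 64, 250))  # 16.384 (250 ns, the CXL-Proto figure)
print(sustained_bandwidth_gbps(64, 64, 80))   # 51.2 (80 ns, hypothetical two-digit latency)
```

Under these assumptions, cutting latency by about 3x raises achievable throughput by the same factor without any extra link bandwidth, as long as the link itself isn't the bottleneck.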

In Panmnesia's tests, UVM performed worst across all tested GPU kernels, due to the overhead of host runtime intervention during page faults and of transferring data at page granularity, which often moves more data than the GPU needs. In contrast, CXL lets the GPU access expanded memory directly via load/store instructions, eliminating these issues.
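The page-granularity overhead can be illustrated with a toy model that counts how many bytes must move for a sparse access pattern at page granularity versus cache-line granularity. The 4 KiB page size, the 64-byte line size, and the access pattern are assumptions for illustration; actual UVM migration granularity and driver behavior differ, and the model ignores the cost of page-fault handling entirely.

```python
from typing import Iterable, Tuple


def bytes_moved(accesses: Iterable[Tuple[int, int]], block_bytes: int) -> int:
    """Bytes transferred if every distinct block touched is fetched exactly once.

    accesses: (address, length) pairs; block_bytes: transfer granularity.
    """
    blocks = set()
    for addr, length in accesses:
        first = addr // block_bytes
        last = (addr + length - 1) // block_bytes
        blocks.update(range(first, last + 1))
    return len(blocks) * block_bytes


# Hypothetical sparse pattern: 100 reads of 4 bytes each, spaced 8 KiB apart.
pattern = [(i * 8192, 4) for i in range(100)]
page = bytes_moved(pattern, 4096)  # page-granular migration (4 KiB pages assumed)
line = bytes_moved(pattern, 64)    # cache-line-granular load/store (64 B assumed)
print(page, line, page // line)    # 409600 6400 64
```

For sparse accesses like these, page-level transfers move 64x more data than the GPU actually asked for in this model; dense, sequential access patterns would narrow the gap considerably.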

Consequently, CXL-Proto reduces execution time by a factor of 1.94 compared to UVM. Panmnesia's CXL-Opt reduces execution time by a further factor of 1.66, thanks to an optimized controller that achieves two-digit nanosecond latency and minimizes read/write latency. The same pattern appears in another figure, which shows the IPC values recorded during GPU kernel execution: Panmnesia's CXL-Opt achieves 3.22 times and 1.65 times higher IPC than UVM and CXL-Proto, respectively.
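The two sets of numbers are consistent because execution-time speedups compose multiplicatively: 1.94 × 1.66 ≈ 3.22, matching the IPC gap reported against UVM. A quick check follows; note that execution-time and IPC ratios coincide only if the instruction count and clock stay the same across configurations, which is an assumption here.

```python
# Reported execution-time speedups (figures as stated in the article).
uvm_to_proto = 1.94  # CXL-Proto vs. UVM
proto_to_opt = 1.66  # CXL-Opt vs. CXL-Proto

# Speedups chain: T_uvm / T_opt = (T_uvm / T_proto) * (T_proto / T_opt).
uvm_to_opt = uvm_to_proto * proto_to_opt
print(f"{uvm_to_opt:.2f}")  # 3.22
```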

In general, CXL support can do a lot for AI/HPC GPUs, but performance is a big question. Additionally, whether companies like AMD and Nvidia will add CXL support to their GPUs remains to be seen. If the approach of using PCIe-attached memory for GPUs does gather steam, only time will tell if the industry heavyweights will use IP blocks from companies like Panmnesia or simply develop their own tech.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

hotaru251

While this would help with Nvidia's stinginess on VRAM (especially at the lower end), factoring in the cost, it would likely be cheaper to just get the next SKU up that comes w/ more VRAM...

bit_user

The article said: Although CXL is a protocol that formally works on top of a PCIe link, thus enabling users to connect more memory to a system via the PCIe bus, the technology has to be recognized by an ASIC and its subsystem

This is confusing and wrong.

CXL and PCIe share the same PHY specification. Where they diverge is at the protocol layer. CXL is not simply a layer atop PCIe. The slot might be the same, but you have to configure the CPU to treat it as a CXL slot instead of a PCIe slot. That obviously requires the CPU to have CXL support, which doesn't exist in consumer CPUs. Not sure if the current Xeon W or Threadrippers support it, actually, but they could.

Notton

I don't see the article talking about bandwidth. Does it not matter for the expected AI workload?

(I assume no one would use this to game on, except youtubers)

nightbird321

hotaru251 said: While this would help with Nvidia's stinginess on VRAM (especially at the lower end), factoring in the cost, it would likely be cheaper to just get the next SKU up that comes w/ more VRAM...

This is definitely aimed at very expensive pro models with maxed-out VRAM already. The cost of another expansion card would definitely not be cost-effective versus the next SKU with more VRAM.

bit_user

Notton said: I don't see the article talking about bandwidth.

Because the PHY spec is the same as PCIe, the bandwidth calculations should be roughly the same. CXL 1.x and 2.x are both based on the PCIe 5.0 PHY, meaning ~4 GB/s per lane (per direction). So, a x4 memory expansion would have an upper limit of ~16 GB/s in each direction.
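The ~4 GB/s per-lane figure follows from the transfer rate and the 128b/130b line encoding used by PCIe 3.0 through 5.0. This rough sketch ignores packet/flit headers and other protocol overhead, so real-world throughput will be lower; the function name is hypothetical.

```python
def pcie_link_gbps(gt_per_s: float, lanes: int, encoding: float = 128 / 130) -> float:
    """Approximate payload bandwidth per direction in GB/s (no protocol overhead)."""
    # GT/s x encoding efficiency = Gbit/s of payload per lane; divide by 8 for GB/s.
    return gt_per_s * encoding / 8 * lanes


print(round(pcie_link_gbps(32, 4), 2))   # 15.75 (PCIe 5.0 PHY, x4)
print(round(pcie_link_gbps(16, 16), 2))  # 31.51 (PCIe 4.0, x16)
```

The same formula gives roughly 31.5 GB/s for PCIe 4.0 x16, which is in the same ballpark as the ~24-25 GB/s real-world figure for shared system memory mentioned further down once protocol overhead is taken into account.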

Notton said: Does it not matter for the expected AI workload?

Depends on which part. If you look at the dependence of high-end AI training GPUs on HBM, bandwidth is obviously an issue. That's not to say that you need uniformly fast access, globally. There are techniques for processing chunks of data which might be applicable for offloading some of it to a slower memory, such as the way Nvidia uses the Grace-attached LPDDR5 memory in their Grace-Hopper configuration. You could also just use the memory expansion for holding training data, which needs far lower bandwidth than access to the weights.
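As a toy illustration of splitting data between a fast tier (e.g. HBM) and a slower expansion tier, here is a greedy placement heuristic that fills the fast tier with the most access-dense buffers first. It is a hypothetical sketch, not Nvidia's Grace-Hopper policy or any real framework's allocator; the buffer names, sizes, and access counts are made up.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Buffer:
    name: str
    size_gb: int
    accesses_per_step: int  # hypothetical hotness metric


def place(buffers: List[Buffer], fast_capacity_gb: int) -> Tuple[List[str], List[str]]:
    """Greedily put the most access-dense buffers in the fast tier; the rest spill over."""
    fast, slow = [], []
    remaining = fast_capacity_gb
    # Access density = accesses per GB; data that is hot per byte benefits most from HBM.
    for buf in sorted(buffers, key=lambda b: b.accesses_per_step / b.size_gb, reverse=True):
        if buf.size_gb <= remaining:
            fast.append(buf.name)
            remaining -= buf.size_gb
        else:
            slow.append(buf.name)
    return fast, slow


buffers = [
    Buffer("weights", 60, 1000),
    Buffer("activations", 20, 500),
    Buffer("training_data", 200, 10),
]
fast, slow = place(buffers, fast_capacity_gb=80)
print(fast, slow)  # ['activations', 'weights'] ['training_data']
```

Greedy-by-density is a knapsack-style heuristic and is not optimal in general, but it captures the idea that bulky, rarely touched data such as training samples can live in the slower pool.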

Notton said: (I assume no one would use this to game on, except youtubers)

Consumer GPUs (and by this I mean anything with a display connector on it, even the workstation-branded stuff) don't support CXL, so it's not even an option. Even if it were, you'd still be better off just using system memory. Where this sort of memory expansion starts to make sense is at scale.

DiegoSynth

nightbird321 said: This is definitely aimed at very expensive pro models with maxed-out VRAM already. The cost of another expansion card would definitely not be cost-effective versus the next SKU with more VRAM.

We don't know if they will actually have more VRAM, and even so, knowing Nvidia, they will add something like 2GB, which is quite @Nal.

Nevertheless, if the GPU can only access the factory-designated amount due to bandwidth limitations, then we are cooked anyway.

One way or another, it's a nice approach to remedy the shortfall, but it is still very theoretical and subject to many dubious factors.

bit_user

usertests said: Make our day, bring back the "SSG".

CXL makes it somewhat obsolete.

The reason they had to integrate an SSD into a GPU is that PCIe created all sorts of headaches and hurdles for trying to have one device talk directly to another. With CXL, those problems are supposedly all sorted out.

bit_user

DiegoSynth said: ... knowing Nvidia, they will add something like 2GB, which is quite ...

LOL. You just @-referenced a user named Nal. Try putting tags around it next time.

razor512

I wish video card makers would just add a second pool of RAM that would use SODIMM modules. For example, imagine if a card like the RTX 4080 had 2 SODIMM slots on the back of the card for extra RAM. While it would be a slower pool of RAM, at around 80-90GB/s compared to the 736GB/s of the VRAM, it would still be useful.
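The ~80-90 GB/s estimate follows from simple peak-bandwidth arithmetic. This sketch assumes DDR5-5600 modules with a 64-bit data path each (ECC bits excluded); sustained bandwidth in practice would be lower, and the function name is hypothetical.

```python
def ddr_bandwidth_gbps(mt_per_s: int, bus_bits: int = 64, modules: int = 1) -> float:
    """Peak bandwidth in GB/s: MT/s x bytes per transfer x modules, converted from MB/s."""
    return mt_per_s * (bus_bits // 8) * modules / 1000


print(ddr_bandwidth_gbps(5600, modules=2))  # 89.6
```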

Video card makers already have experience with using and prioritizing 2 separate memory pools on the same card. For example, the GTX 970 had a 3.5GB pool at 225-256GB/s and a second 512MB pool at around 25-27GB/s, depending on clock speed. If a game used that 512MB pool, the performance hit was not much, as the card and drivers at least knew enough not to shove throughput-intensive data/workloads into that second pool.

If they could do the same but with 2 DDR5 SODIMM slots, then users could do things like have a second pool of up to about 96GB, and it would cause far fewer performance hits than using shared system memory, which tops out at a real-world throughput of around 24-25GB/s on a PCIe 4.0 x16 connection that also has to share bandwidth with other GPU tasks, and is thus not well suited to pulling double duty.