Compute Express Link (CXL) 3.0 Debuts, Wins CPU Interconnect Wars

CXL emerges as the clear winner of the CPU interconnect wars.

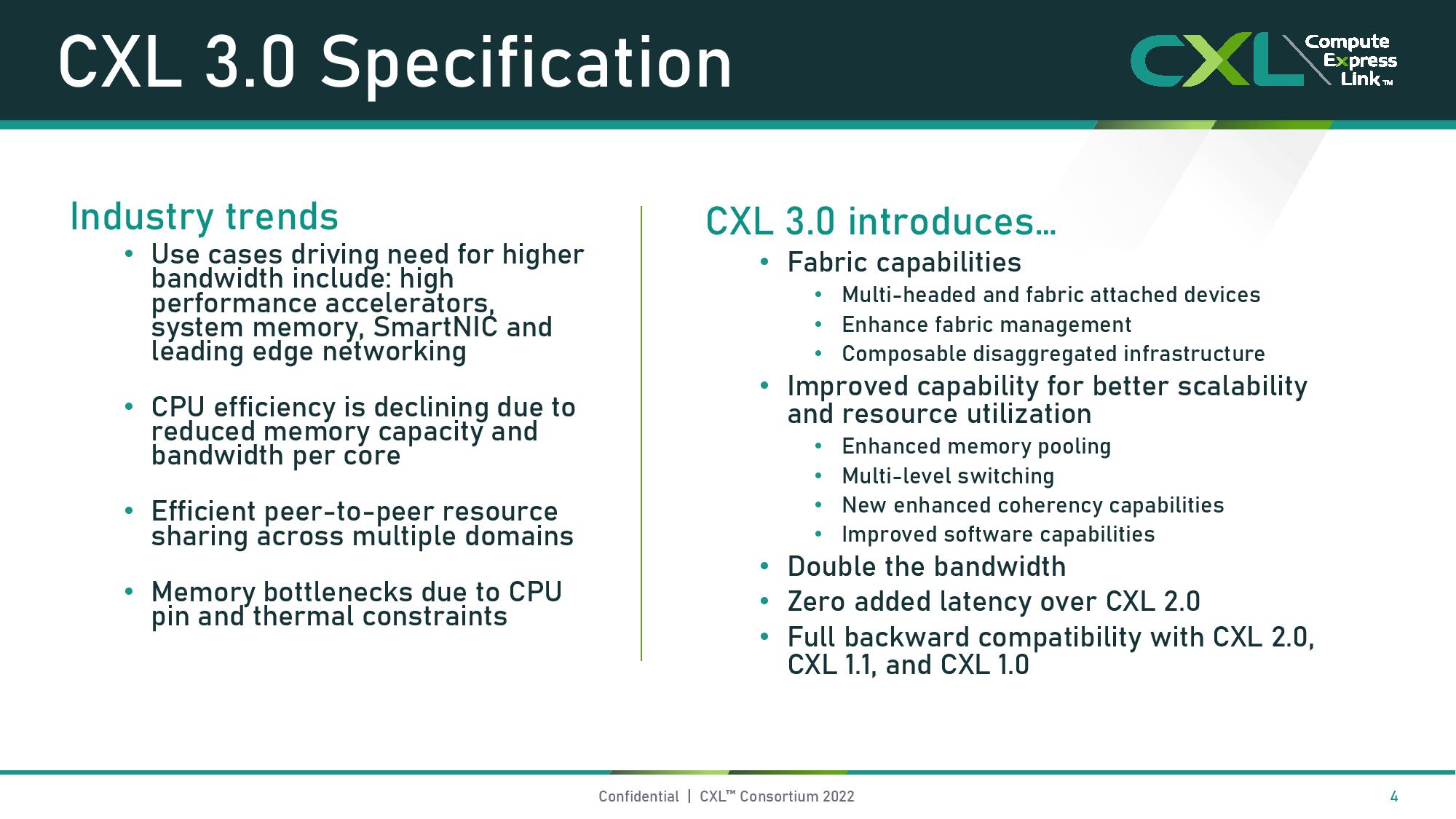

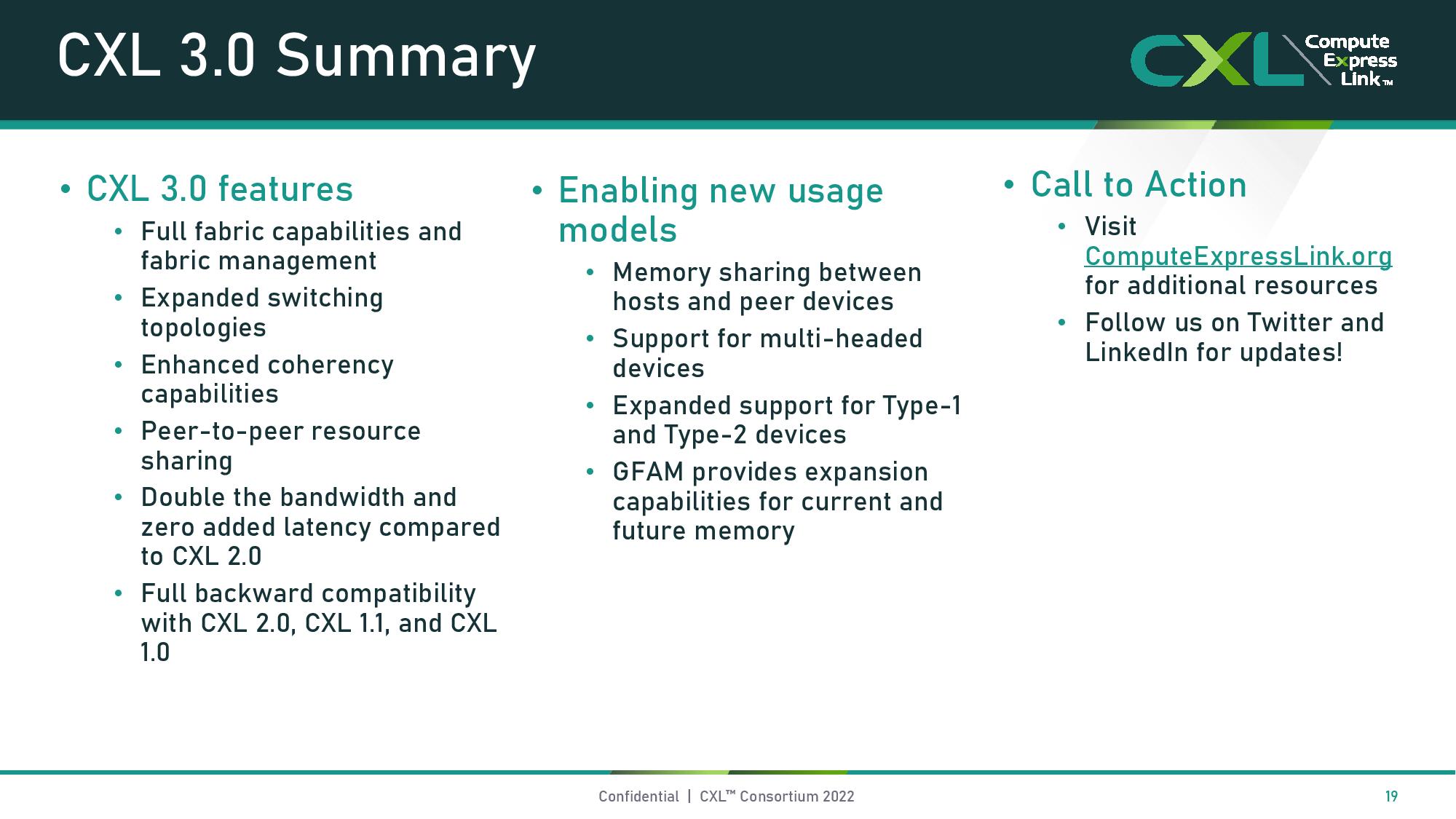

The Compute Express Link (CXL) Consortium today unveiled the CXL 3.0 specification, bringing new features like support for the PCIe 6.0 interface, memory pooling, and more complex switching and fabric capabilities to bear. Overall, the new spec will support up to twice the bandwidth of recent revisions without adding any latency, all while maintaining backward compatibility with previous versions of the specification. The new specification comes as OpenCAPI, the last meaningful open competition in the CPU interconnect wars, announced yesterday that it would contribute its spec to the CXL Consortium, leaving CXL as the clear path forward for the industry.

As a reminder, the CXL spec is an open industry standard that provides a cache-coherent interconnect between CPUs and accelerators (like GPUs), smart I/O devices (like DPUs), and various flavors of DDR4/DDR5 and persistent memories. The interconnect allows the CPU to work on the same memory regions as the connected devices, thus improving performance and power efficiency while reducing software complexity and data movement.

All major chipmakers have embraced the spec, with AMD's forthcoming Genoa CPUs and Intel's Sapphire Rapids supporting the 1.1 revision (caveats for the latter). Nvidia, Arm, and a slew of memory makers, hyperscalers, and OEMs have also joined.

The new CXL 3.0 specification comes to light as the industry has finally and fully unified behind the standard. Yesterday the OpenCAPI Consortium announced that it will transfer its competing cache-coherent OpenCAPI spec for accelerators and its serial-attached near memory Open Memory Interface (OMI) spec to the CXL Consortium. That brings an end to the last meaningful competition for the CXL standard after the Gen-Z Consortium was also absorbed by CXL earlier this year. Additionally, the CCIX standard appears to be defunct after several of its partners wavered and chose to deploy CXL instead.

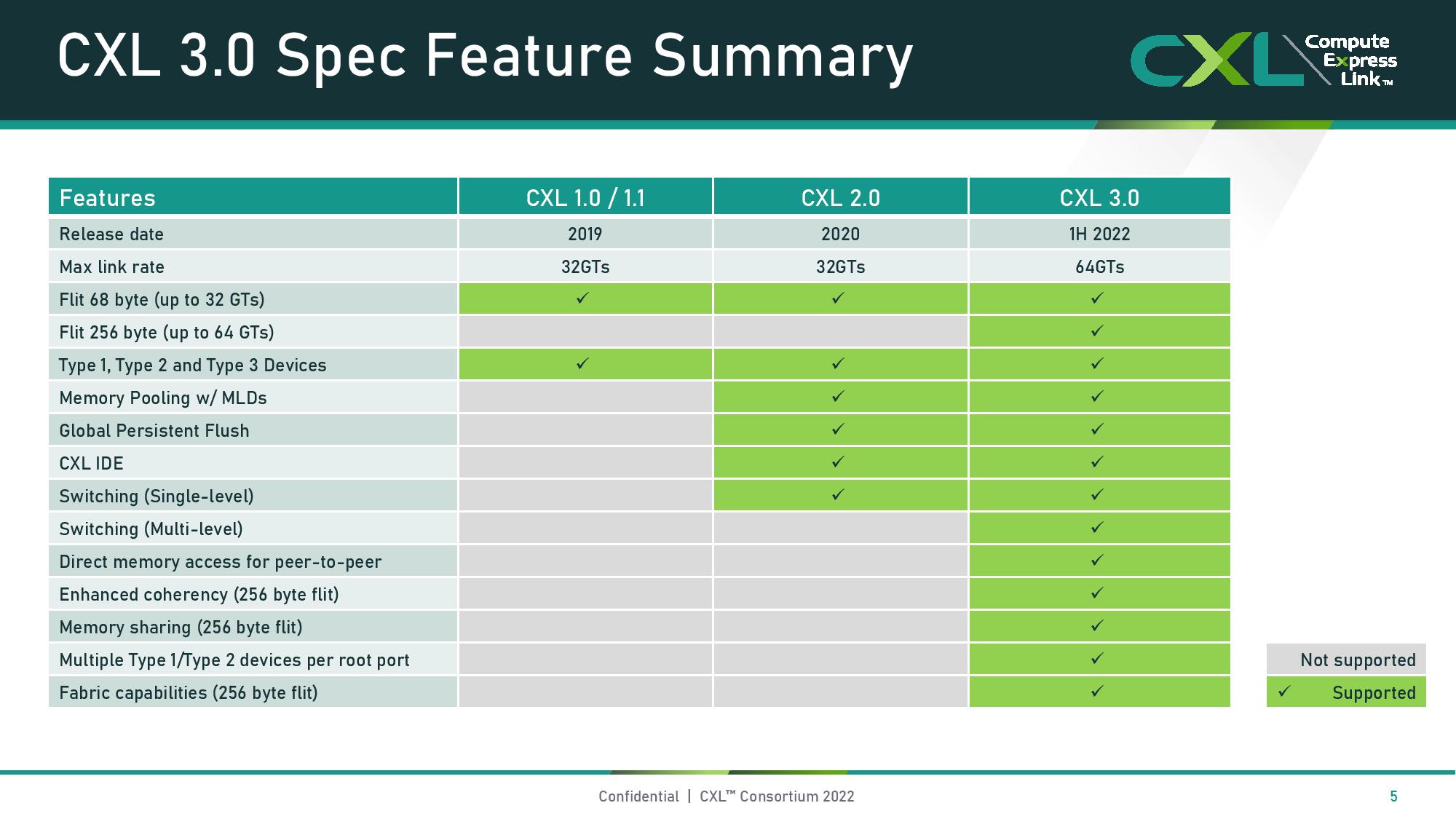

CXL 2.0 currently rides on the PCIe 5.0 bus, while CXL 3.0 moves to PCIe 6.0, doubling the transfer rate to 64 GT/s (up to 256 GB/s of throughput for a x16 connection) with a claimed zero added latency. CXL 3.0 uses a new latency-optimized 256-byte flit format that trims latency by 2-5 ns, thus keeping overall latency the same as before.
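The throughput figure above can be checked with a little arithmetic. The sketch below assumes one bit per transfer per lane and ignores encoding, FEC, and flit overhead, so it gives raw numbers only; the function name is ours, not part of any specification. Under those assumptions, the 256 GB/s figure for a x16 link corresponds to both directions combined.

```python
def raw_link_bandwidth_gbytes(gt_per_s: float, lanes: int) -> float:
    """Raw one-direction bandwidth in GB/s.

    Assumes one bit per transfer per lane and ignores all protocol overhead.
    """
    return gt_per_s * lanes / 8  # 8 bits per byte


pcie5_x16 = raw_link_bandwidth_gbytes(32, 16)  # PCIe 5.0 runs at 32 GT/s
pcie6_x16 = raw_link_bandwidth_gbytes(64, 16)  # PCIe 6.0 runs at 64 GT/s

print(f"PCIe 5.0 x16: {pcie5_x16:.0f} GB/s per direction")
print(f"PCIe 6.0 x16: {pcie6_x16:.0f} GB/s per direction, "
      f"{2 * pcie6_x16:.0f} GB/s both directions")
```

Real-world throughput will be lower than these raw figures once protocol overhead is taken into account.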

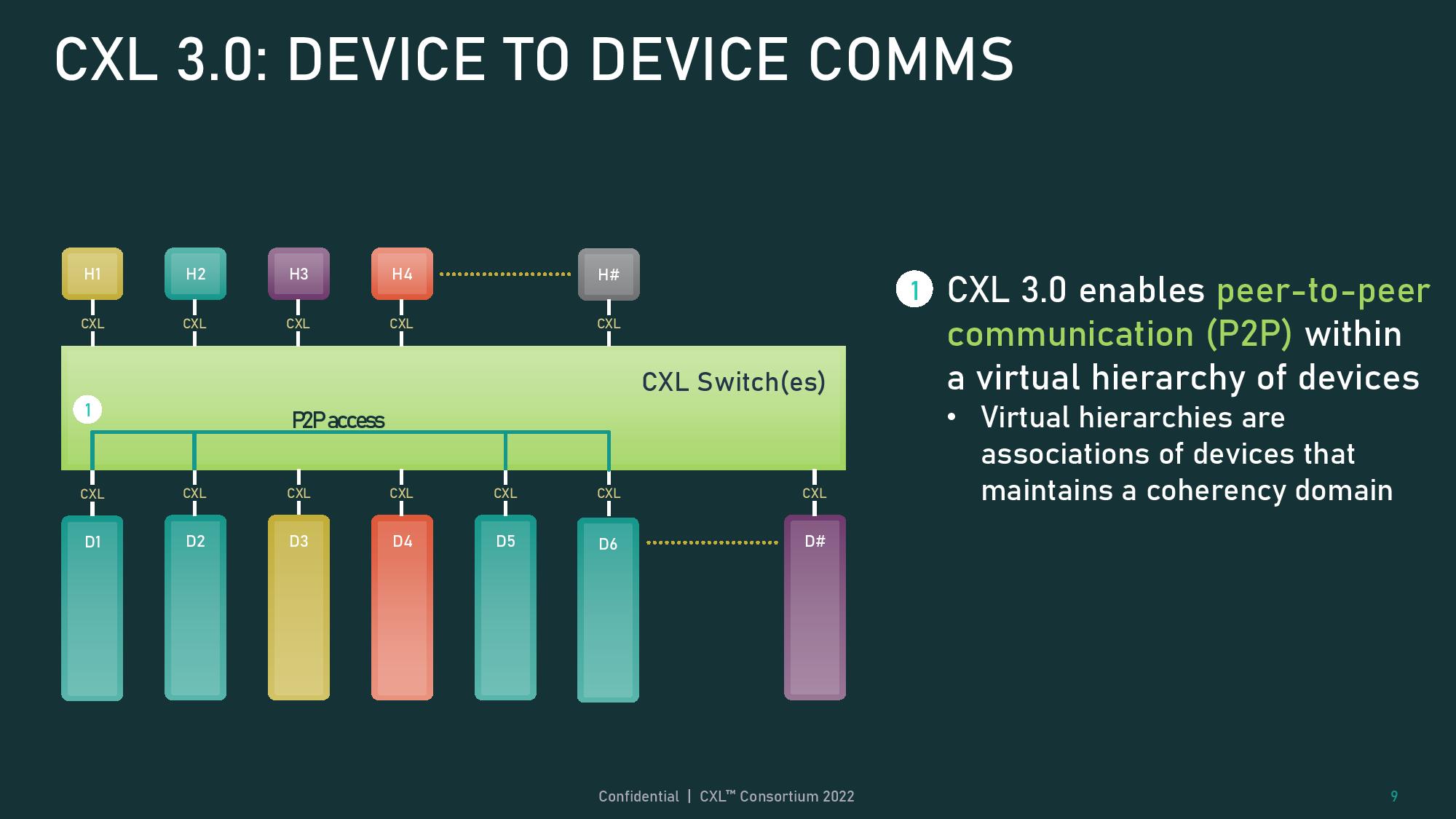

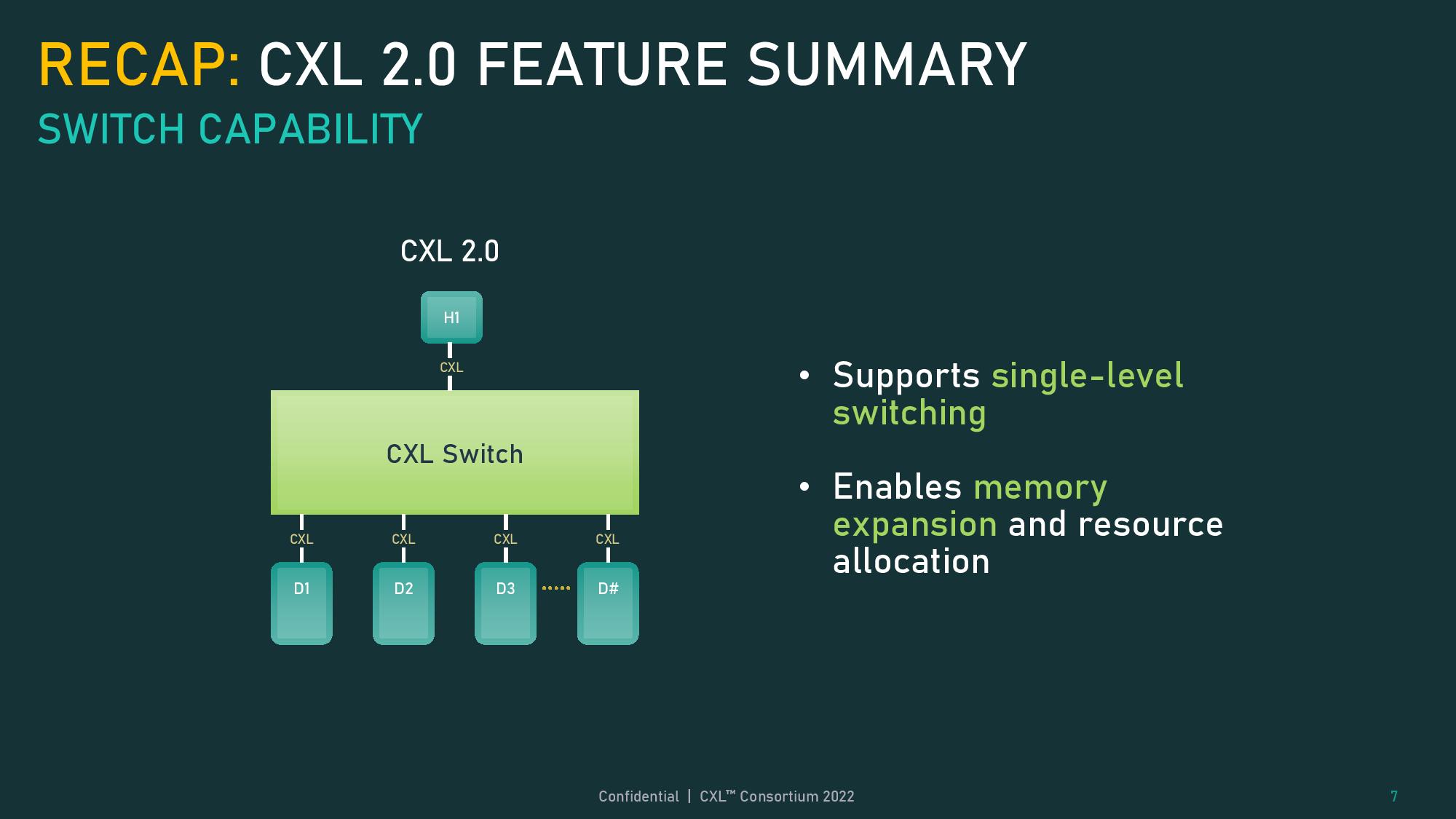

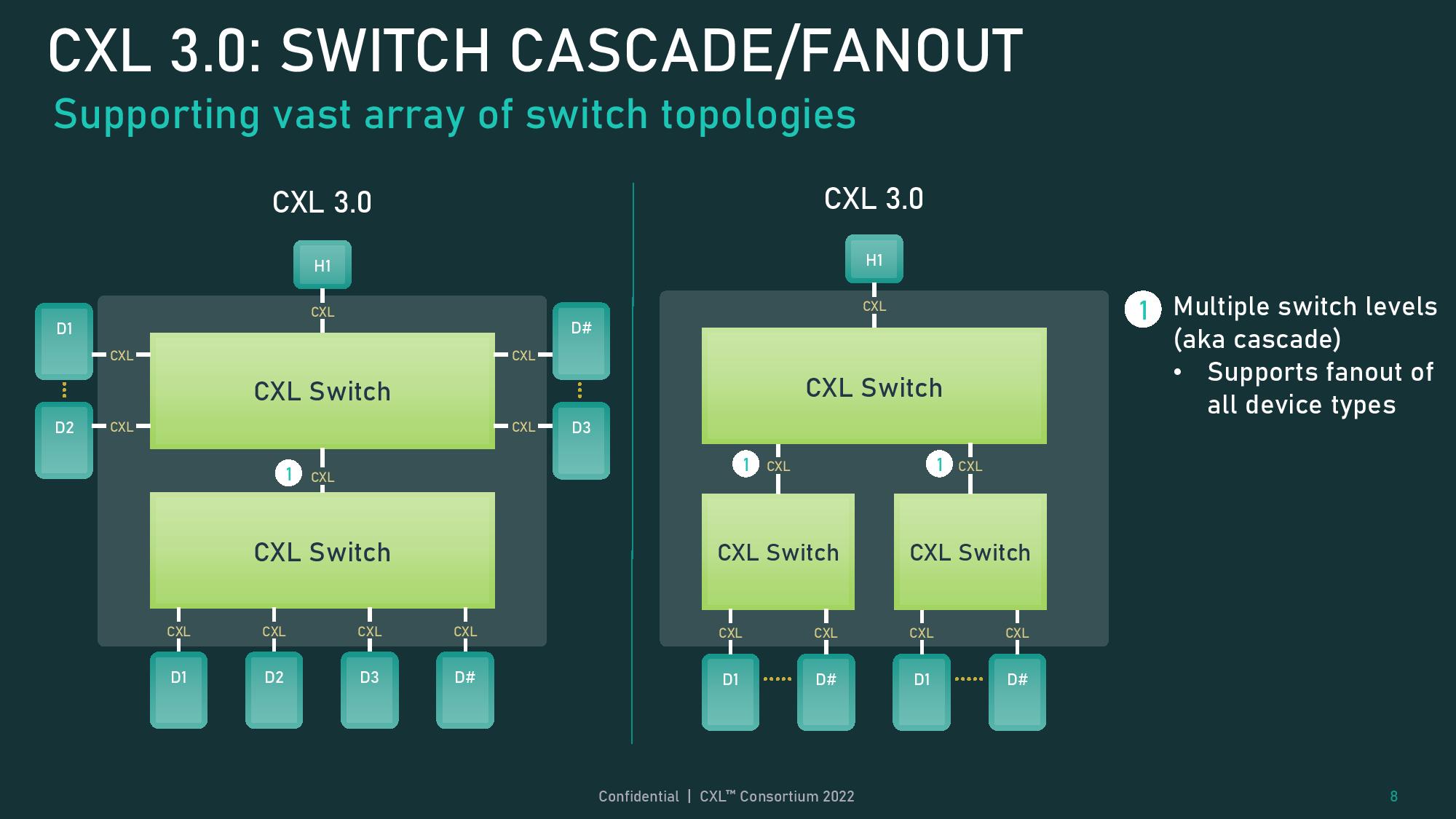

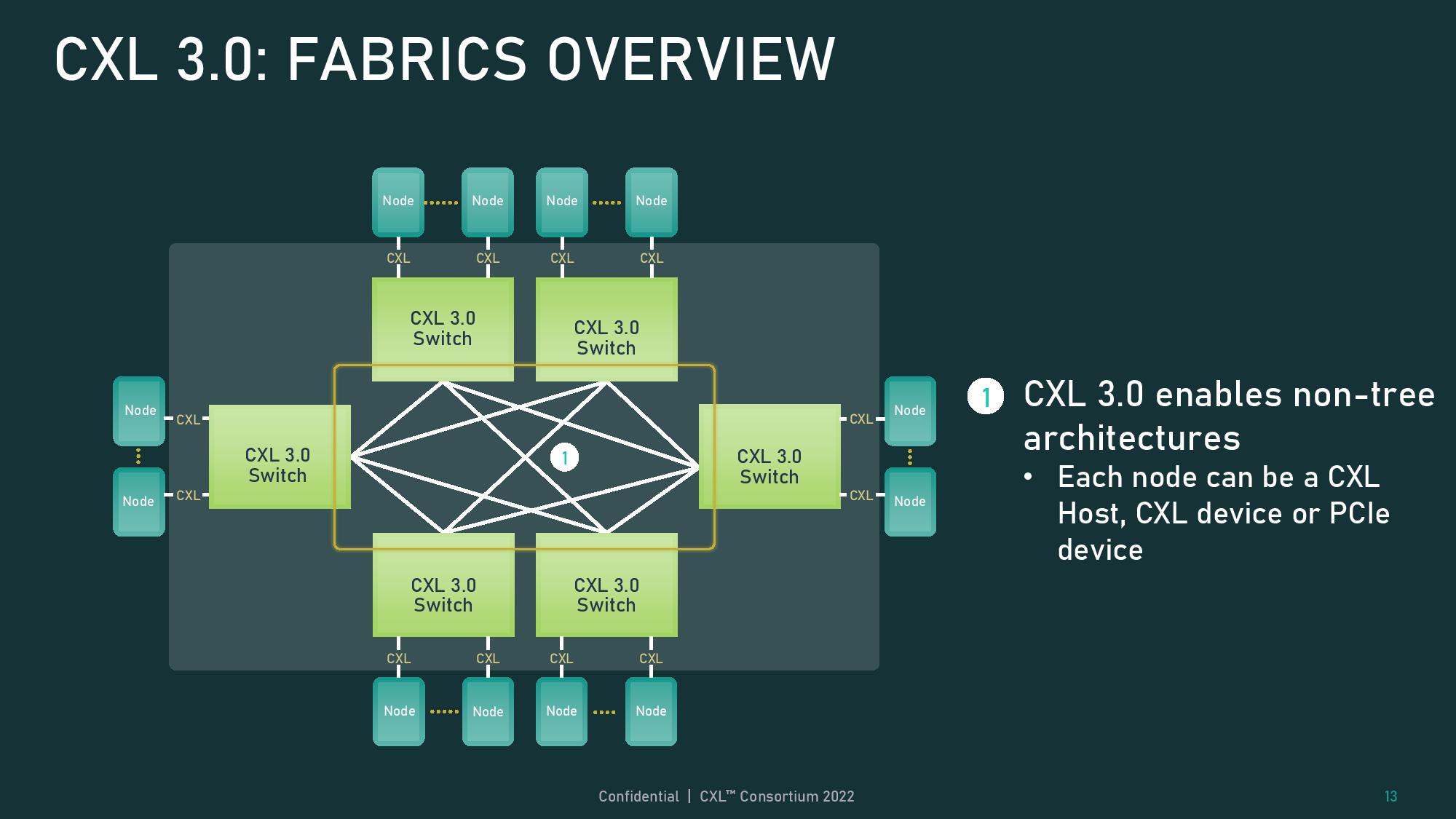

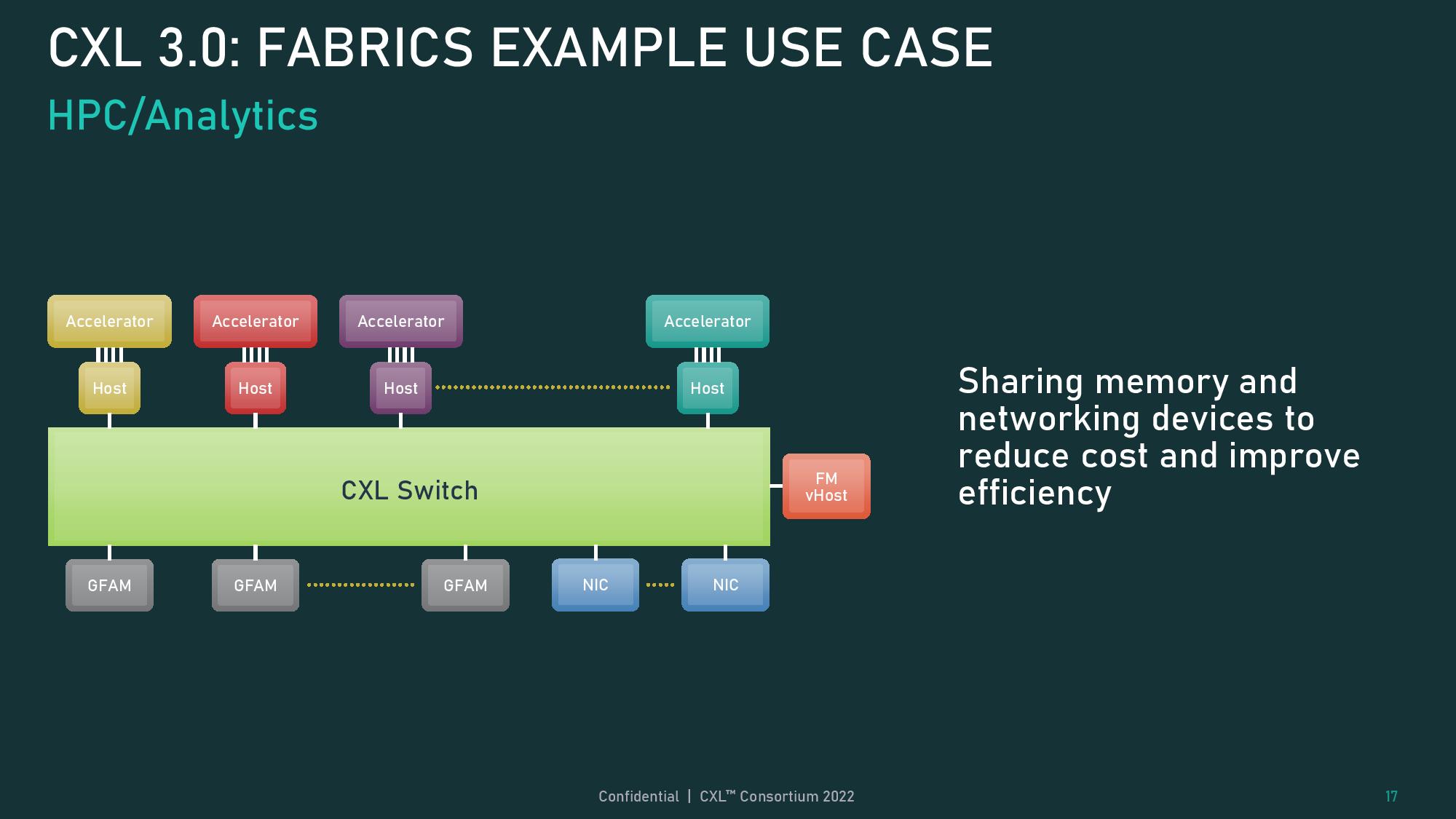

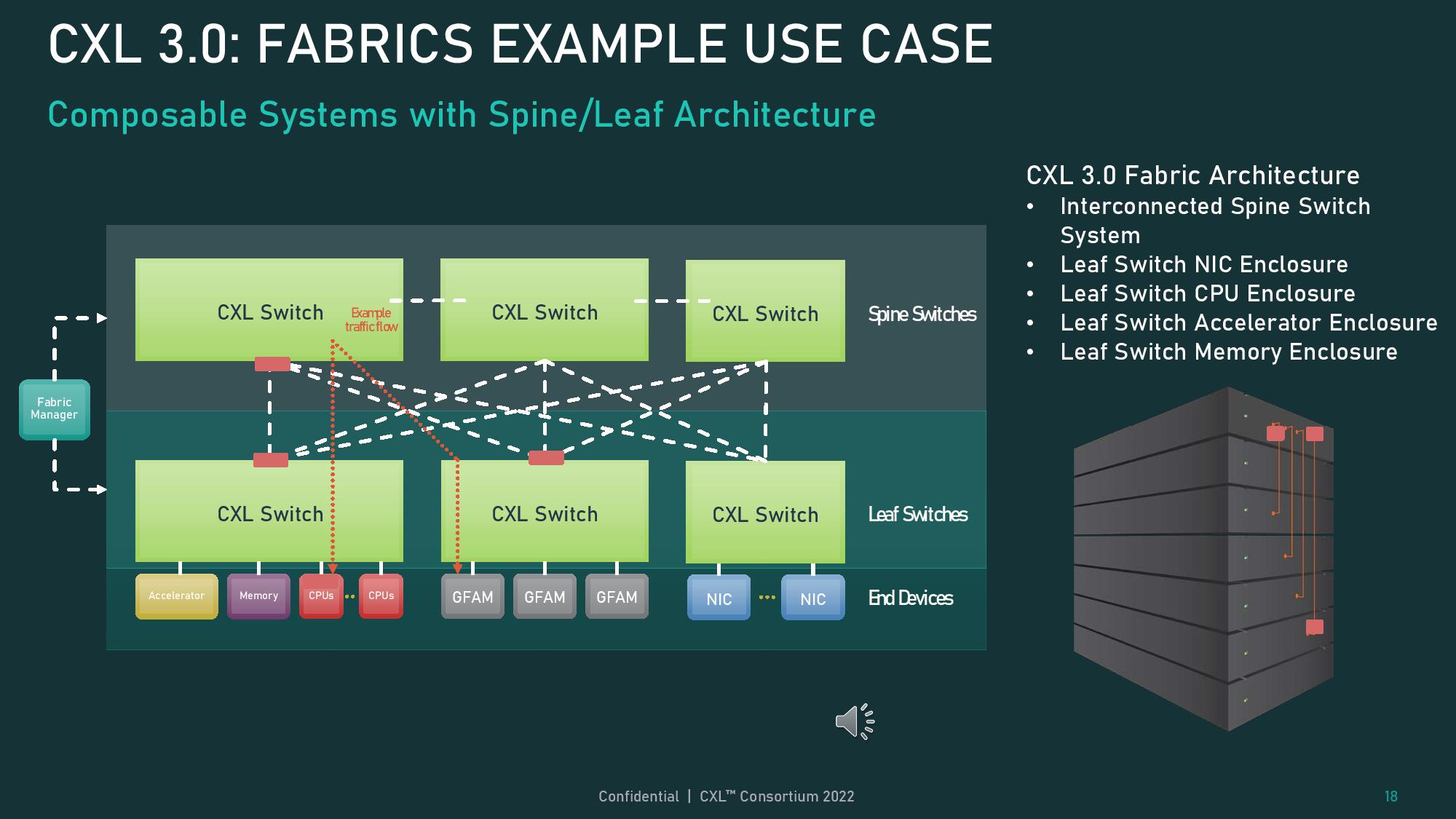

Other notable improvements include support for multi-level switching to enable networking-esque topologies between connected devices, memory sharing, and direct memory access (DMA) for peer-to-peer communication between connected accelerators, thus eliminating CPU overhead in some use cases.

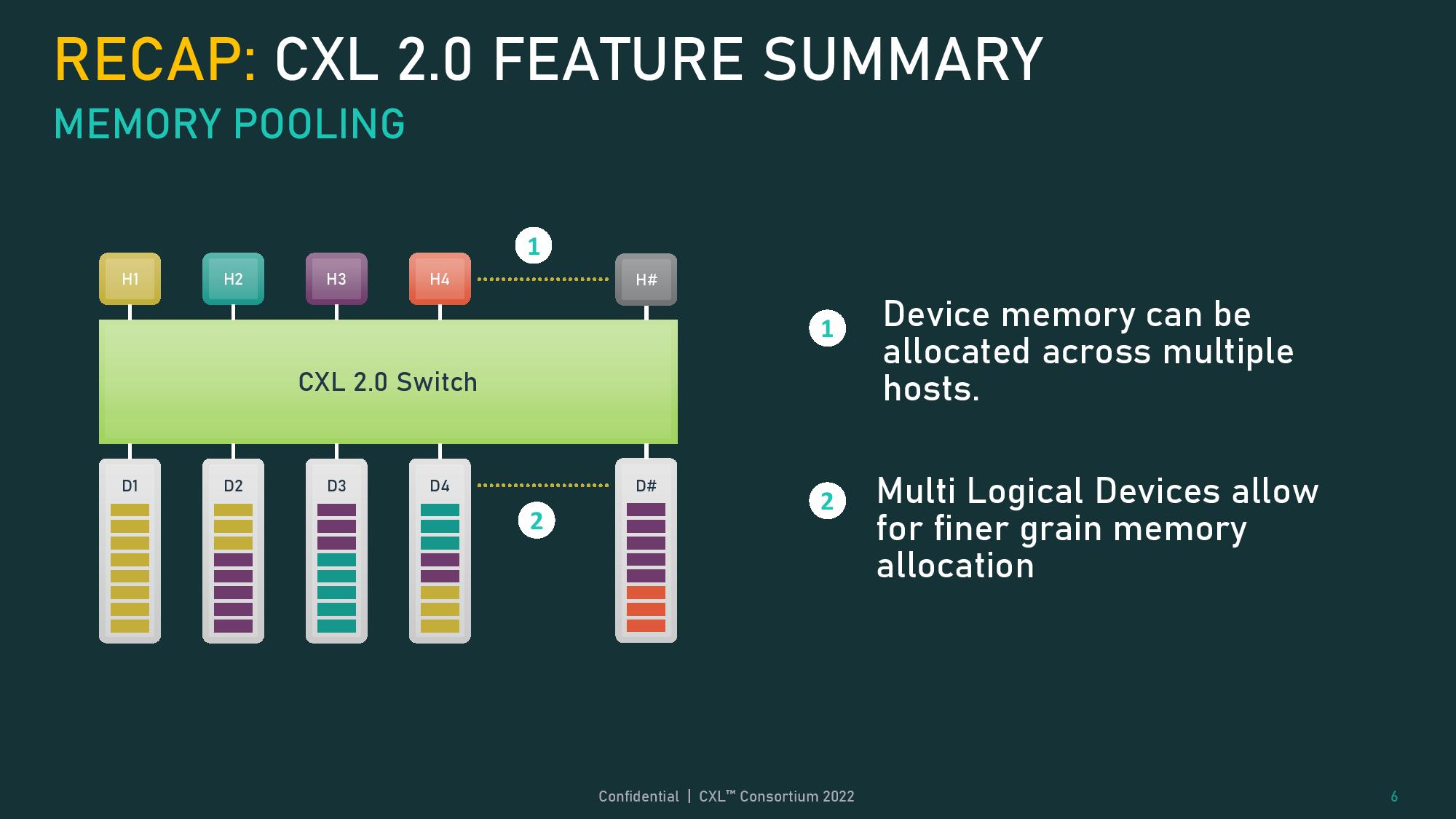

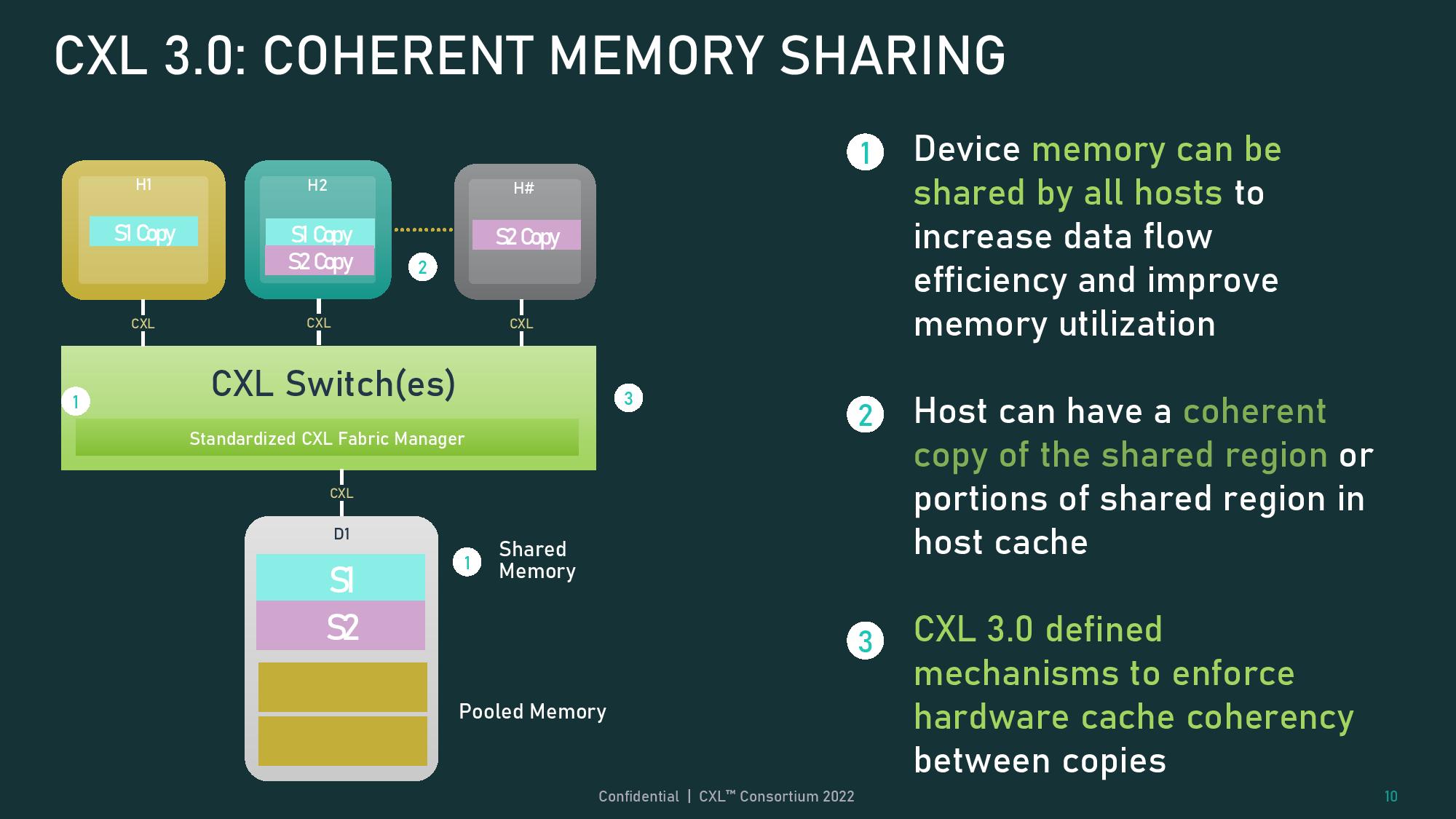

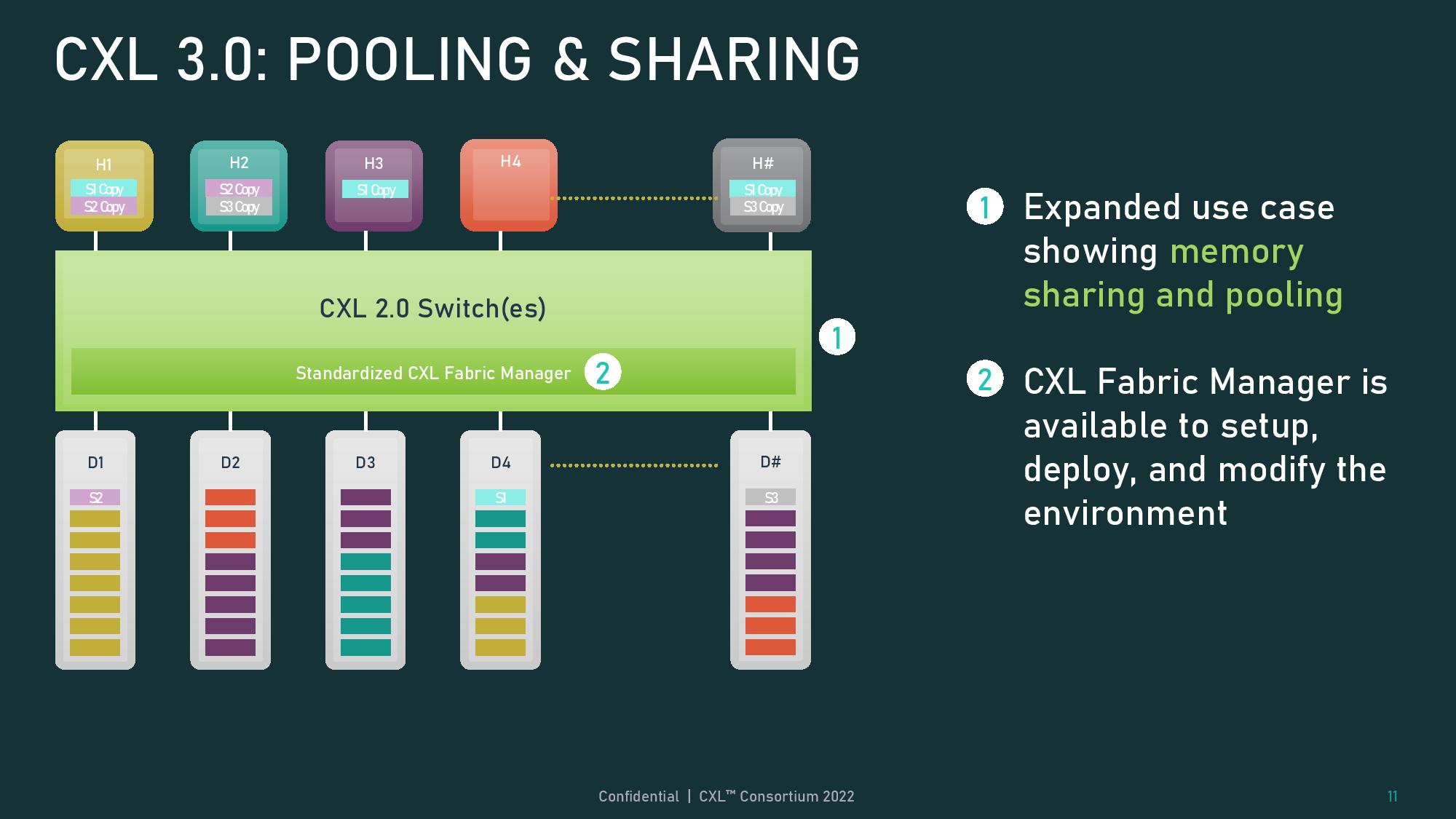

The CXL 2.0 spec supported memory pooling to dynamically allocate and de-allocate memory regions to different hosts, thus allowing a single memory device to be subdivided into multiple segments, but each region could only be assigned to one host. CXL 3.0 adds memory sharing, which allows data regions to be shared between multiple hosts via hardware coherency. This works by letting hosts cache the shared data, with hardware cache coherency ensuring that each host sees the most up-to-date information.
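The difference between pooling and sharing can be illustrated with a toy ownership model. This is only a sketch: the class and method names are hypothetical, and it tracks which hosts hold a region without modeling the hardware coherency protocol itself.

```python
class MemoryDevice:
    """Toy model of a memory device split into regions (names are hypothetical)."""

    def __init__(self, num_regions: int):
        # Map each region index to the set of hosts currently holding it.
        self.owners = {region: set() for region in range(num_regions)}

    def assign_pooled(self, region: int, host: str) -> None:
        """Pooling (CXL 2.0 style): a region belongs to at most one host."""
        current = self.owners[region]
        if current and current != {host}:
            raise ValueError(f"region {region} is already assigned to {sorted(current)}")
        self.owners[region] = {host}

    def assign_shared(self, region: int, host: str) -> None:
        """Sharing (CXL 3.0 style): several hosts may hold the same region."""
        self.owners[region].add(host)

    def release(self, region: int, host: str) -> None:
        """Return a host's claim on a region to the pool."""
        self.owners[region].discard(host)


device = MemoryDevice(num_regions=2)
device.assign_pooled(0, "host-a")   # region 0 is exclusive to host-a
device.assign_shared(1, "host-a")   # region 1 is visible to both hosts
device.assign_shared(1, "host-b")
print(device.owners)
```

In this model, a second host calling `assign_pooled` on region 0 raises an error until host-a releases it, while region 1 accepts any number of sharers.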

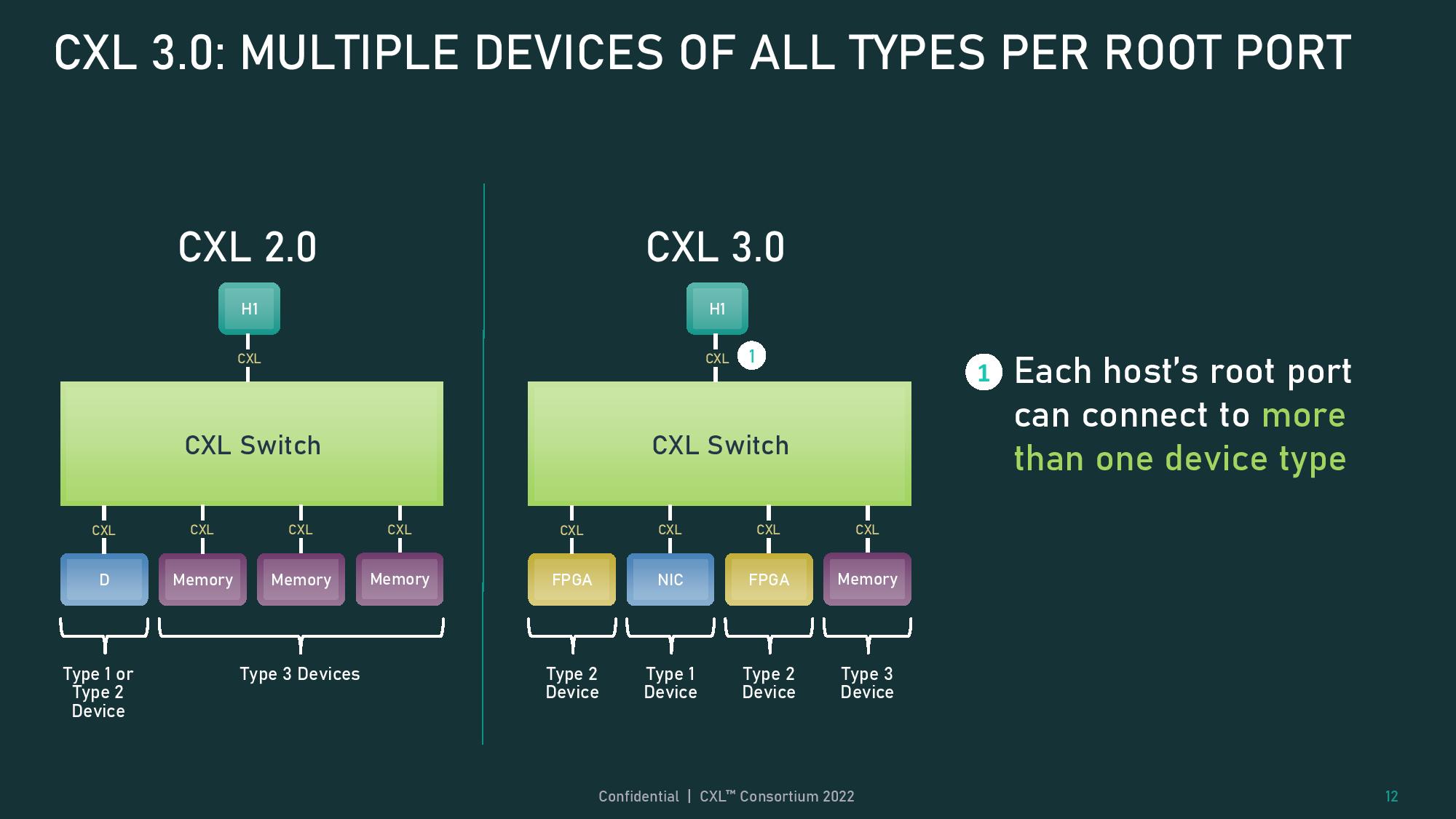

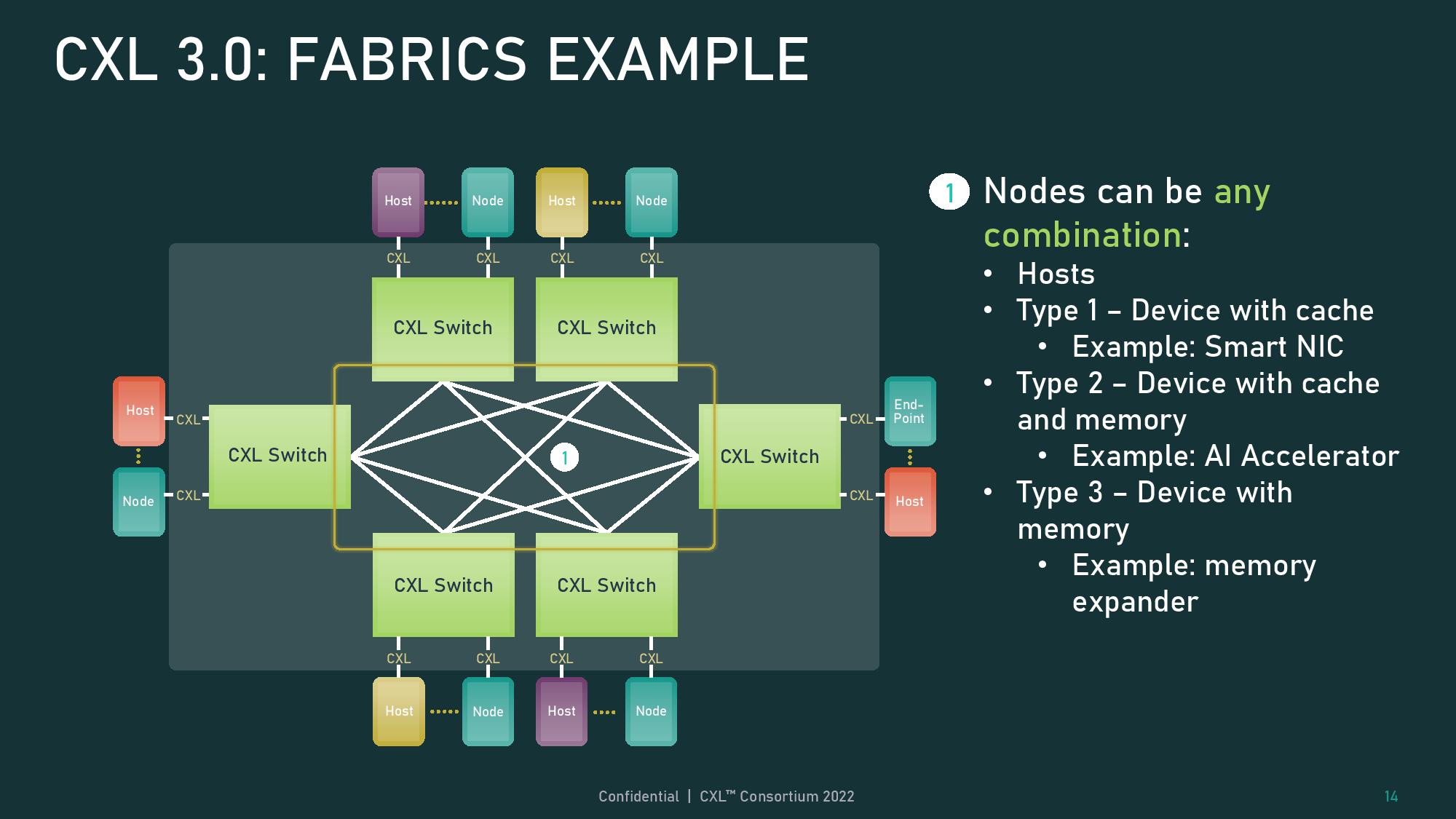

The CXL spec breaks devices into different classes: Type 1 devices are accelerators that lack local memory, Type 2 devices are accelerators with their own memory (like GPUs, FPGAs, and ASICs with DDR or HBM), and Type 3 devices consist of memory devices. In addition, CXL now supports mixing and matching these types of devices on a single host root port, tremendously expanding the number of options for complex topologies like those we cover below.
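As a rough illustration of the three device classes described above, the sketch below maps two yes/no properties onto a type. The enum and the `classify` helper are hypothetical names for this example, and the rule follows the article's simplified description rather than the full specification.

```python
from enum import Enum


class CxlDeviceType(Enum):
    TYPE_1 = 1  # accelerator without local memory
    TYPE_2 = 2  # accelerator with its own memory (GPU, FPGA, ASIC with DDR/HBM)
    TYPE_3 = 3  # memory device


def classify(is_accelerator: bool, has_local_memory: bool) -> CxlDeviceType:
    """Pick a device class from the two properties the article mentions."""
    if is_accelerator:
        return CxlDeviceType.TYPE_2 if has_local_memory else CxlDeviceType.TYPE_1
    if has_local_memory:
        return CxlDeviceType.TYPE_3
    raise ValueError("neither an accelerator nor a memory device")


# A single host root port could now carry a mix of these types.
root_port_devices = [classify(True, False), classify(True, True), classify(False, True)]
print([device.name for device in root_port_devices])
```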

The updated spec also supports direct peer-to-peer (P2P) messaging between attached devices to remove the host CPU from the communication path, thus reducing overhead and latency. This type of connection allows a new level of flexibility for accelerator-to-memory and accelerator-to-accelerator communication.


The CXL spec now allows cascading multiple switches inside a single topology, thus expanding the number of connected devices and the complexity of the fabric to include non-tree topologies, like spine/leaf, mesh, and ring-based architectures.

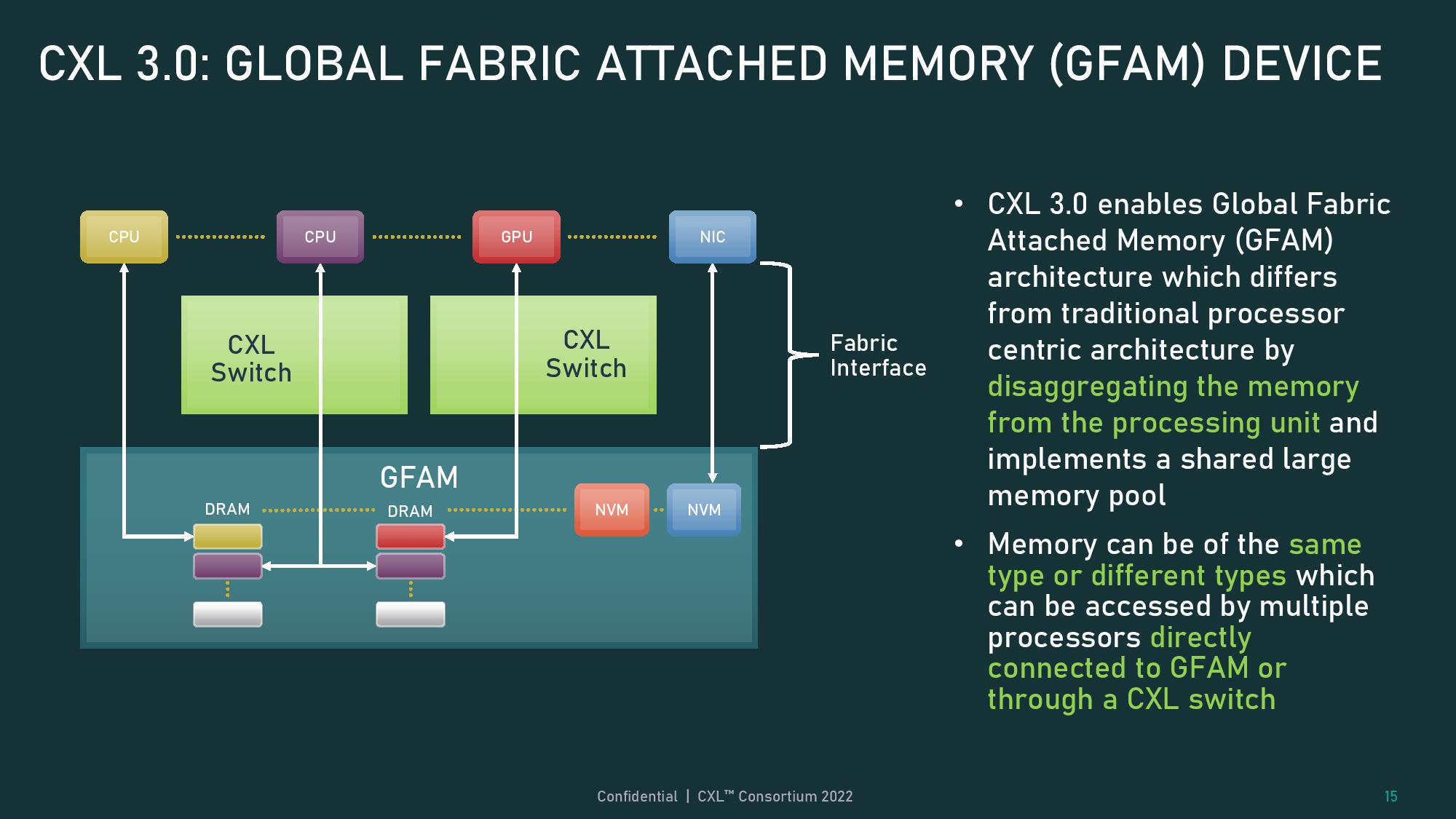

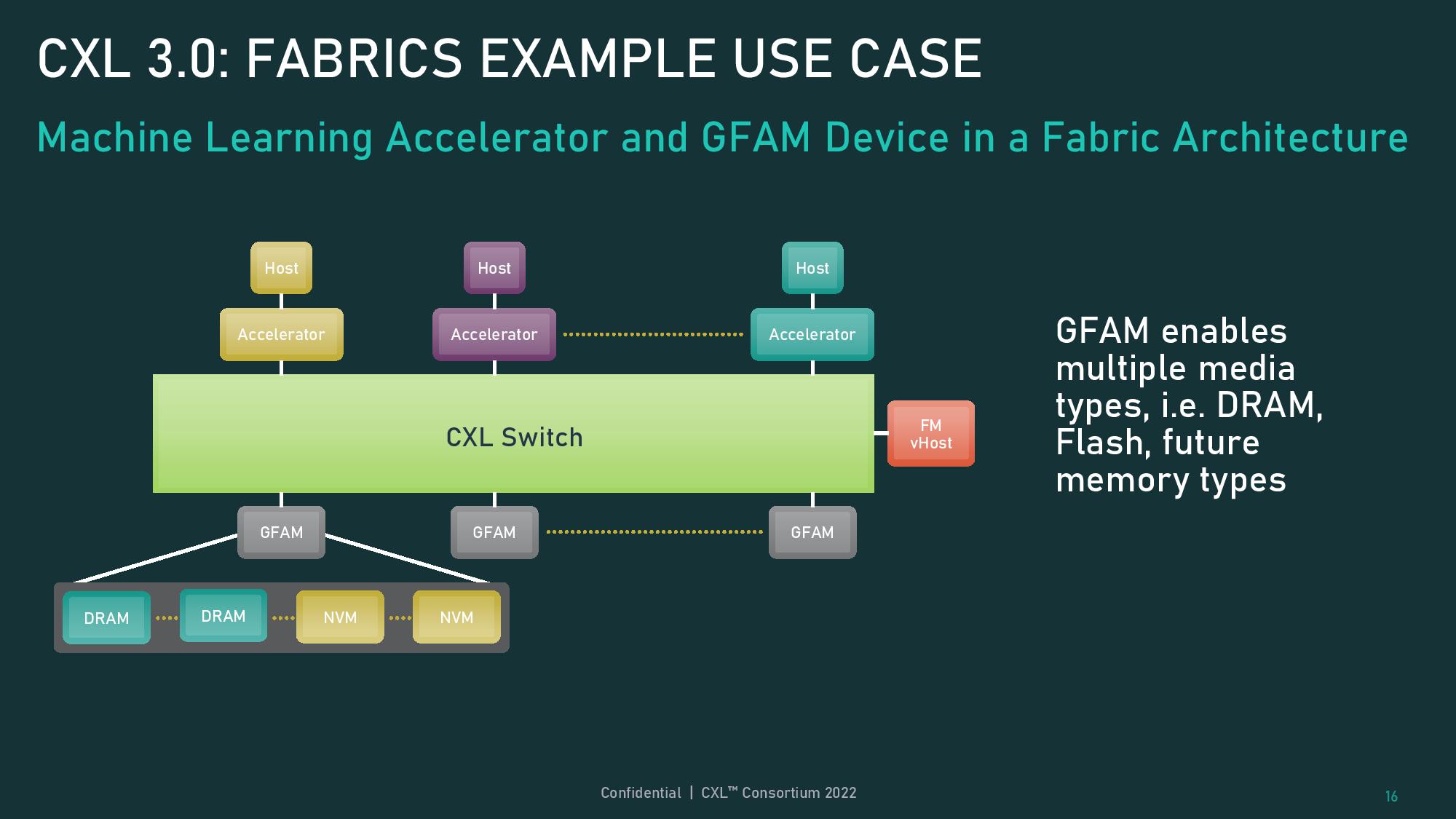

A new Port Based Routing (PBR) feature provides a scalable addressing mechanism that supports up to 4,096 nodes. Each node can be any of the existing three types of devices or the new Global Fabric Attached Memory (GFAM) device. The GFAM device is a memory device that can use the PBR mechanism to allow for memory sharing between hosts. This device supports using different types of memory, like persistent memory and DRAM, together in a single device.
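Since 4,096 is 2^12, a flat identifier for every node would fit in 12 bits. The sketch below works through that arithmetic and packs a source and destination ID into one integer. The flat numbering from 0 to 4,095 and the packing layout are assumptions made for illustration, not the format defined by the specification.

```python
MAX_NODES = 4096
ID_BITS = (MAX_NODES - 1).bit_length()  # bits needed to number 4,096 nodes


def make_route(src: int, dst: int) -> int:
    """Pack a source and destination node ID into one integer (hypothetical layout)."""
    for node in (src, dst):
        if not 0 <= node < MAX_NODES:
            raise ValueError(f"node ID {node} is outside 0..{MAX_NODES - 1}")
    return (src << ID_BITS) | dst


def split_route(tag: int) -> tuple[int, int]:
    """Recover the (source, destination) pair from a packed route tag."""
    return tag >> ID_BITS, tag & (MAX_NODES - 1)


tag = make_route(5, 4095)
print(ID_BITS, split_route(tag))
```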

The new CXL spec drastically expands the use cases for the interconnect to encompass large disaggregated systems at rack scale (and perhaps beyond). Naturally, these types of features raise the question of whether the spec would be feasible for more storage-centric purposes, like connecting to an all-flash storage appliance, and we're told it has begun to garner some interest for those types of uses as well.

The consortium also tells us that it sees intense interest from its members in using DDR4 memory pools to sidestep the cost of DDR5 in next-gen servers. In this way, hyperscalers can use DDR4 memory that they already have (and would otherwise discard) to create large flexible pools of memory paired with DDR5 server chips that can't accommodate the cheaper DDR4 memory. That type of flexibility highlights just one of the many advantages of the CXL 3.0 specification, which will be released to the public today.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


cmccane CXL gets us closer to supercomputer interconnects like ccNUMA from SGI. Intel has been promising this type of tech for many years but it never materialized. It would have a significant impact on hyperscalers going forward. Today, in terms of RAM and processor scaling, every server is an island. Infiniband gave us RDMA but it was convoluted and not fast enough. You will always be dealing with stranded capacity when every server is an island. What I call Single Image Multi Server could become reality with CXL. That is a single Linux or Hypervisor image that runs across all servers in a rack. Individual app or VM performance on such a platform would still require placement tech to keep apps on Cores near their RAM. For microservices we could see communication happening over CXL instead of ethernet. Dynamically allocated centralized memory pools could also become reality. That would change up a typical 52 RU rack layout to include some RU’s dedicated to all-RAM servers so we could see such a thing as a RAM server and of course someone (not me) would coin the term Software Defined Memory.

abufrejoval While your positive outlook gladdens my heart, my mind keeps reminding me, that Linux is an absolutistic disk operating system, which doesn't understand anything except CPUs, DRAM and block storage. Networks have been grafted on to a certain degree, but things like dynamic allocation and de-allocation of resources, heterogeneous computing devices or accelerators, dynamic reconfiguration of PCIe or CXL busses or meshes or just plain cooperation with other kernel instances with proper delegation of authority etc. just never were part of Unix, not even Plan-9.

So while the hardware is going there in leaps and bounds, I have very little hope Linux (neither as OS nor as hypervisor), Xen or any other hypervisor is anywhere ready to deal with all that.

Perhaps some cloud vendors will be able to extend their proprietary hypervisors to take advantage of these things, but that doesn't bring that technology any closer to me.