Intel's Sapphire Rapids Roadmap Slips: Enters Production in 2022

Moving the goalposts

Intel announced via a blog post this morning that Sapphire Rapids will enter production in the first quarter of 2022, with the volume ramp beginning in the second quarter of 2022. The revised production timeline represents a three-month slip from the last Sapphire Rapids roadmap, which projected that production would begin at the end of 2021, with the volume ramp occurring in the first half of 2022.

That still means these chips will compete primarily with AMD's EPYC Milan processors, but also with the 5nm Zen 4 EPYC Genoa chips that will arrive later in 2022. Intel also divulged new details about its Advanced Matrix Extensions (AMX) and Data Streaming Accelerator (DSA) technologies that debut with Sapphire Rapids.

Intel's release cadence for its data center products can be a bit tricky — the company typically begins shipping to its largest customers (hyperscalers like Facebook, Amazon, etc.) shortly after the chips enter production. General availability typically comes six months later, marking the traditional formal launch that finds chips and OEM systems available to the general public.

| Roadmap | Production / Leading Customers | Volume Ramp / General Availability |
|---|---|---|
| Original Roadmap | 2021 (Fourth Quarter) | First Half 2022 |
| Revised Roadmap | First Quarter 2022 | Second Quarter 2022 |

As such, Intel's new timeline represents a delay to the initial production and availability of final silicon for its largest customers (they do have samples), but a compressed deployment time (i.e., the time between the first shipments and general availability). By shrinking the 'deployment time' from six months to three, Intel's formal launch is still on schedule for the first half of 2022.

Lisa Spelman, Corporate Vice President and GM of Intel's Xeon and Memory Group, cited Sapphire Rapids' breadth of new technology as the impetus for the timeline readjustment, saying, "Given the breadth of enhancements in Sapphire Rapids, we are incorporating additional validation time prior to the production release, which will streamline the deployment process for our customers and partners."

Sapphire Rapids represents Intel's first chips with its newest 10nm Enhanced SuperFin process, whereas its Ice Lake predecessors came fabbed on the (now older) 10nm+ node. Given Intel's notoriously difficult transition to its 10nm process, public perception will likely first center on potential trouble with the process technology. That's a possibility, but a slim one. Intel's Sapphire Rapids chips have been sampled (and leaked) widely, so working pre-release/validation silicon is in the wild with leading customers. Additionally, yield issues typically take much longer than three months to resolve, so issues with the process tech don't seem likely.

Spelman's blog post cites "new enhancements" that require further validation. It also discusses Intel's Advanced Matrix Extensions (AMX) and Data Streaming Accelerator (DSA) technologies (more on those below) but doesn't cite them as the direct cause of the pushed production timeline.

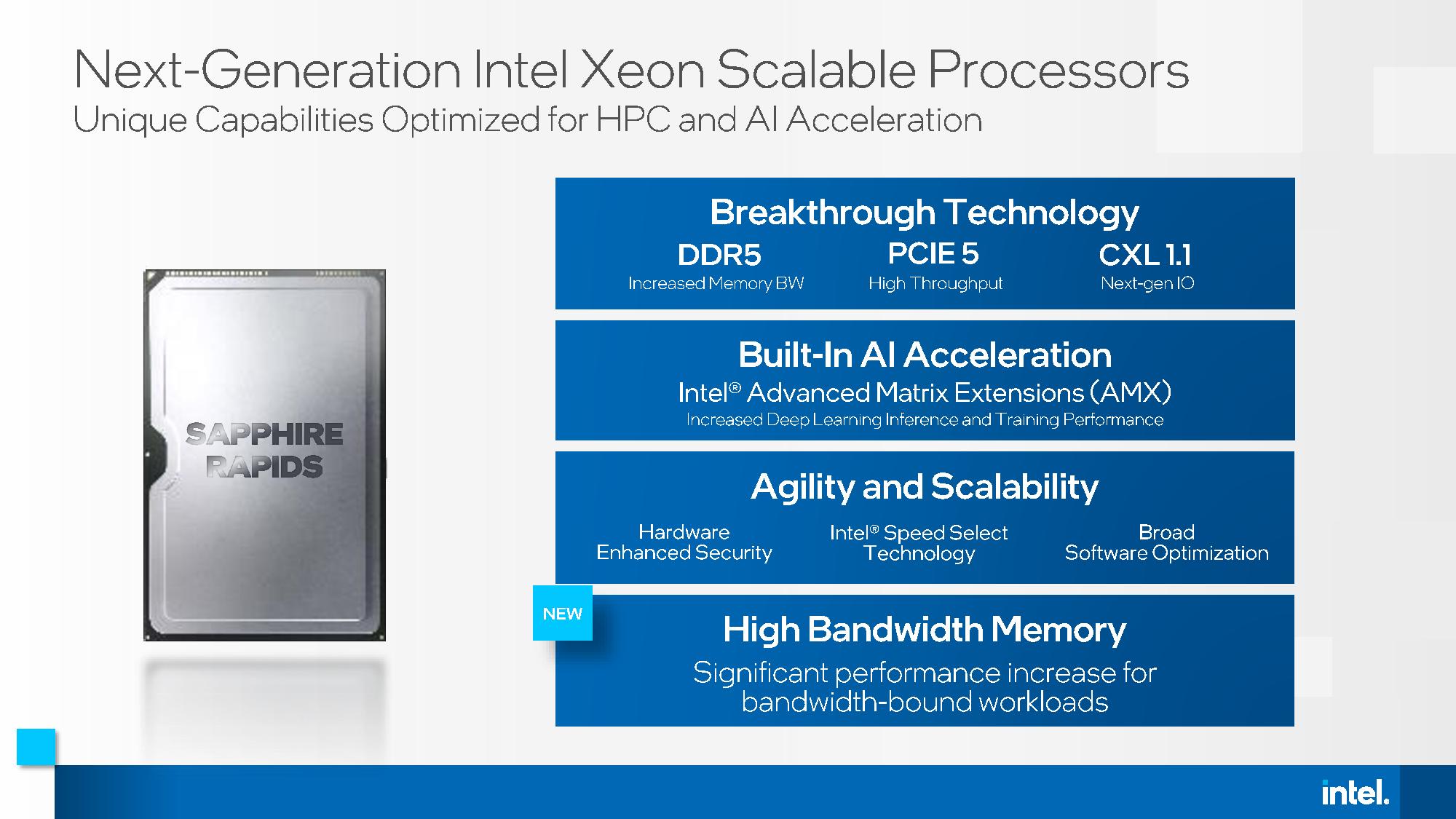

Sapphire Rapids comes with plenty of new technology, with DDR5 and PCIe 5.0 being the stand-out connectivity enhancements over the prior-gen parts. Sapphire Rapids will represent Intel's first server chips to support DDR5, thus requiring extensive validation at the platform level from Intel and its hardware/software partners. Additionally, the ecosystem of PCIe 5.0 endpoints is in the early stages, meaning there could be difficulties associated with fully validating the new, faster interface with actual end-user devices.

Intel also has Sapphire Rapids models with HBM memory coming to market a few months after the main launch. These chips will be available to the general market, but given that they're already on a different schedule and likely aren't part of the first production run, it wouldn't make sense for them to cause a delay.

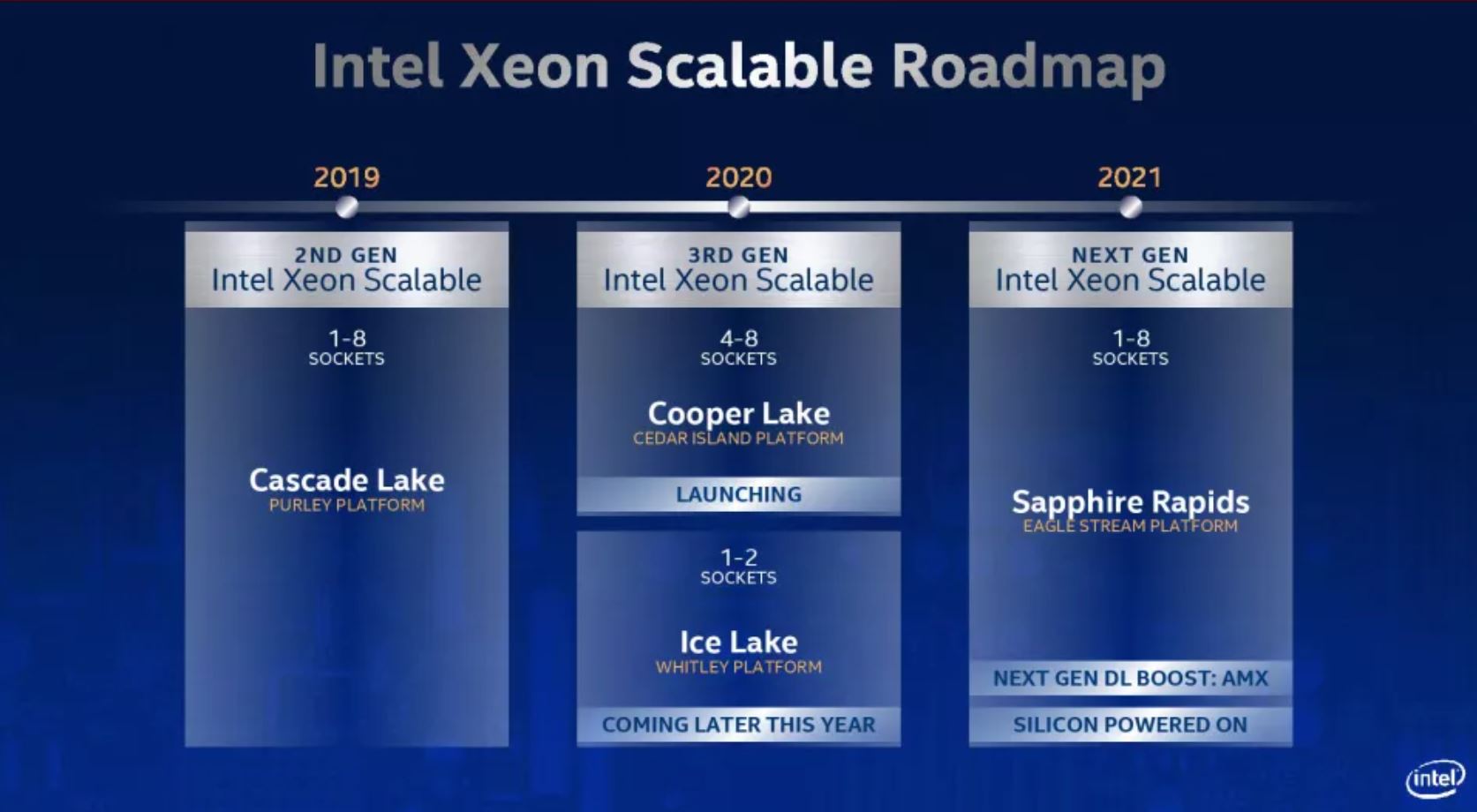

Intel's launch cadence for Sapphire Rapids has always been a bit odd. Currently, 10nm Ice Lake processors slot in for one- and two-socket servers, while 14nm Cooper Lake slots in for four- and eight-socket servers. In contrast, Sapphire Rapids is expected to reunify Intel's data center product stack with its Eagle Stream platform addressing all servers (from one to eight sockets).

Intel's Ice Lake famously arrived late, pushing its launch date perilously close to Sapphire Rapids. That isn't ideal because it didn't give OEMs as much time to recoup their investments into the platform. It also didn't give customers an extended period of time to deploy Ice Lake servers before newer, faster models would be available. That could cause some customers to skip the Ice Lake/Cooper Lake generation.

Intel sees it differently: DDR5 is in its early days, and it's expensive. The extra expense for new modules is worth it from a total cost of ownership (TCO) perspective, but some data center operators are more sensitive to capital expenditures (CapEx) and will likely balk at the higher up-front costs associated with DDR5. Additionally, the ecosystem of PCIe 5.0 devices that will slot into Sapphire Rapids is extremely limited and will take time to build out, which also might be a reason for customers to delay a mass migration to Sapphire Rapids.

Intel's follow-on Granite Rapids isn't due until 2023 (presumably late 2023). As such, Intel sees Ice Lake and Sapphire Rapids co-existing in the market, with the Ice Lake lineup being used for more general applications while data centers layer on Sapphire Rapids servers for higher-performance applications.


Intel Advanced Matrix Extensions (AMX) and Data Streaming Accelerator (DSA)

Intel's blog post covers its Advanced Matrix Extensions (AMX) that debut with Sapphire Rapids. These new ISA extensions add instructions that leverage new two-dimensional registers, called tiles, to boost the performance of matrix multiply operations, a staple of deep learning workloads. Spelman says AMX provides twice the performance of current-gen Ice Lake chips in training and inference workloads. Those gains come on early silicon and without advanced software tuning, so we can expect larger improvements in the future.
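To make the tile idea concrete, here is a minimal pure-Python sketch of a tile-blocked matrix multiply. It only models the concept of loading small two-dimensional blocks and accumulating their products; it does not use AMX instructions or reproduce their exact semantics. The tile dimensions and all function names (`tile_dot_accumulate`, `slice_tile`, `tiled_matmul`, `naive_matmul`) are assumptions chosen for illustration.

```python
# Illustrative tile shape (an assumption for this sketch, not a hardware spec).
TILE_M, TILE_K, TILE_N = 16, 64, 16


def tile_dot_accumulate(c_tile, a_tile, b_tile):
    """Accumulate a_tile @ b_tile into c_tile in place (one tile-multiply step)."""
    for i, a_row in enumerate(a_tile):
        for j in range(len(c_tile[i])):
            acc = c_tile[i][j]
            for k, a_val in enumerate(a_row):
                acc += a_val * b_tile[k][j]
            c_tile[i][j] = acc


def slice_tile(matrix, row0, rows, col0, cols):
    """Copy a sub-block of the matrix; slices shrink automatically at the edges."""
    return [row[col0:col0 + cols] for row in matrix[row0:row0 + rows]]


def tiled_matmul(a, b):
    """Multiply a (m x k) by b (k x n) one tile at a time."""
    m, k, n = len(a), len(a[0]), len(b[0])
    c = [[0] * n for _ in range(m)]
    for i0 in range(0, m, TILE_M):
        for j0 in range(0, n, TILE_N):
            # The output tile stays "resident" while we sweep over the k dimension.
            c_tile = [[0] * min(TILE_N, n - j0) for _ in range(min(TILE_M, m - i0))]
            for k0 in range(0, k, TILE_K):
                a_tile = slice_tile(a, i0, TILE_M, k0, TILE_K)
                b_tile = slice_tile(b, k0, TILE_K, j0, TILE_N)
                tile_dot_accumulate(c_tile, a_tile, b_tile)
            # Write the finished output tile back into the result matrix.
            for di, row in enumerate(c_tile):
                c[i0 + di][j0:j0 + len(row)] = row
    return c


def naive_matmul(a, b):
    """Reference triple-loop multiply used to check the tiled version."""
    return [[sum(a_row[k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for a_row in a]


print(tiled_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

The point of the blocking is that each output tile is accumulated in place across the inner dimension, so a whole block of multiply-accumulate work happens per pair of tile loads rather than per scalar element.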

Sapphire Rapids also marks the debut of the Intel Data Streaming Accelerator (DSA) tech, which is a built-in feature that optimizes streaming data movement and transformation operations by reducing the amount of computational overhead associated with performing the operations on the CPU cores. Think of this as similar to the technique we see with DPUs and Intel's own IPU, but built into the processor. We're told that DSA isn't as powerful as the standalone solutions, naturally, but that the tech significantly reduces the computational overhead associated with data movement and can be used either by itself or in tandem with an IPU/DPU.

Wrapping Up

Intel's revised timeline doesn't represent a massive change to its competitive stance against its x86 rival: AMD has said we would see a mix of Milan and Genoa in 2022. That means Sapphire Rapids will still primarily face AMD's Zen 3 EPYC Milan during most of 2022 and then contend with the 5nm Zen 4 EPYC Genoa parts as they arrive near the end of the year.

Intel has hit a snag somewhere in its production timeline. Still, it doesn't seem likely that its new AMX or DSA tech are the culprits — Sapphire Rapids also comes with challenging new features on the hardware side, like PCIe 5.0 and DDR5, that seem to be a more likely cause for a validation delay.

There are also ongoing shortages of critical materials, crippling the supply of chips worldwide. Intel has weathered the opening stages of the chip shortage better than many of its counterparts, which is an advantage of being an IDM, but it now faces tightness in substrate and packaging capacity/materials, just like many of its competitors. Intel has a history of prioritizing higher-margin Xeon chips over its consumer products, though, so the company could merely divert its resources from lower-end chips, making it hard to determine if, or how much of, a role this potentially plays.

The company recently restructured its data center business and parted ways with the head of its DCG operations, Navin Shenoy. Intel veteran Sandra Rivera now leads the new Datacenter and AI group that's responsible for Xeon CPUs, FPGA, and AI products. So it's natural to think that one of her first goals is to realign the company's Xeon roadmap to a more tenable position.

We'll learn more about the chips soon; Spelman's blog post says the company will divulge more technical details about the chips during Hot Chips 2021 in August and the Intel Innovation event in October, or possibly even sooner.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

thisisaname

NeoMorpheus said: Whatever.

The future belongs to AMD, ARM and RISC-V.

Intel needs to die already.

No Intel just needs to work harder, if they fail what is to stop AMD becoming a new version of Intel? -

salgado18

thisisaname said: No Intel just needs to work harder, if they fail what is to stop AMD becoming a new version of Intel?

Absolutely. I'm still glad they have some heavy-weight competition. -

InvalidError

salgado18 said: Absolutely. I'm still glad they have some heavy-weight competition.

A duopoly where both participants are manufacturing-constrained and can achieve 30+% net profit margins isn't competition. In a truly competitive market, you have to worry about competition wanting a piece of your cake when your net profit is significantly above 10%. -

hotaru251

NeoMorpheus said: Whatever.

The future belongs to AMD, ARM and RISC-V.

Intel needs to die already.

You do realize in past (prior to the core i series) it was flipped. AMD was basically intel and intel was amd.

you need competition. w/o it you get stagnation (ala intel refusing more than quad core saying its not possible then amd showed em up and forced them to release a 6 and 8 core) and price abuse (as if ur the only chocie for top end performance u can charge w/e and they either pay or they dont get it at all) -

NeoMorpheus

thisisaname said: No Intel just needs to work harder, if they fail what is to stop AMD becoming a new version of Intel?

I already know what Intel is capable of doing and I want no part of it.

Love how everyone has already forgiven them for all the illegal cr@p that they pulled on AMD.

How they held back the industry in what I call 4 core hell just because they were so succesfull in almost killing AMD and the other competitors (RIP Alpha, killed with a lie).

Wanted more than 4 cores? Bend over peasant!

No, I dont want Intel in any way, shape or form.

I hope they do die and AMD, ARM, Apple, Risc-V and the others take over.

Intel already showed us what they do when on top.

And if AMD tries to pull the same cr@p, then the new ones can kill them and make them join Intel in the cemetery. -

Kamen Rider Blade

NeoMorpheus said: How they held back the industry in what I call 4 core hell just because they were so succesfull in almost killing AMD and the other competitors (RIP Alpha, killed with a lie).

Wanted more than 4 cores? Bend over peasant!

Just a (PSA/reminder) for those who have forgotten about Quad-Core Hell.

-

escksu

NeoMorpheus said: I already know what Intel is capable of doing and I want no part of it.

Love how everyone has already forgiven them for all the illegal cr@p that they pulled on AMD.

How they held back the industry in what I call 4 core hell just because they were so succesfull in almost killing AMD and the other competitors (RIP Alpha, killed with a lie).

Wanted more than 4 cores? Bend over peasant!

No, I dont want Intel in any way, shape or form.

I hope they do die and AMD, ARM, Apple, Risc-V and the others take over.

Intel already showed us what they do when on top.

And if AMD tries to pull the same cr@p, then the new ones can kill them and make them join Intel in the cemetery.

LOL, we are still living largely in quad core territory today. In fact, its already an upgrade from dual cores. Vast majority of the notebooks are still quad core. -

escksu

NeoMorpheus said: Whatever.

The future belongs to AMD, ARM and RISC-V.

Intel needs to die already.

Future belongs to AMD??? Lol.....hope you know that both AMD and Intel are CISC architecture. -

InvalidError

escksu said: Future belongs to AMD??? Lol.....hope you know that both AMD and Intel are CISC architecture.

The difference between RISC and CISC is pedantic semantics at this point since modern RISC is pretty much as complex as CISC used to be, most new instructions added to "CISC" are fundamentally RISC and practically all modern CPUs regardless of ISA are fundamentally RISC internally.