RTX 5090D tested with nine-year-old Xeon CPU that cost $7: it does surprisingly well in some games, if you enable MFG

Framegen and MFG help more in CPU-constrained workloads.

A Chinese tech reviewer benchmarked Nvidia's all-new "for China" RTX 5090D with a variety of CPUs, from AMD's Ryzen 7 9800X3D, one of the best CPUs for gaming, to Intel's Core Ultra 9 285K, and all the way down to a nine-year-old 14-core Xeon E5-2680 v4 based on the Broadwell architecture. Despite its age, the $7 chip was at least moderately competitive with modern CPUs when using DLSS frame generation, especially DLSS 4 multi frame generation (MFG).

The reviewer tested Counter-Strike 2, Marvel Rivals, and Cyberpunk 2077 on an assortment of CPUs paired with the RTX 5090D. On the Intel side, the list consists of the Core Ultra 9 285K, Core Ultra 7 265K, Core Ultra 5 245K, Core i7-14700KF, Core i5-14600KF, Core i5-12400F, Core i3-12100F, and Xeon E5-2680 v4. On the AMD side, the Ryzen 9 9900X, Ryzen 7 9800X3D, Ryzen 5 9600X, Ryzen 9 7900X, Ryzen 7 7800X3D, Ryzen 5 7500F, and Ryzen 5 5600 were tested. That's a wide range of processors extending back nearly a decade.

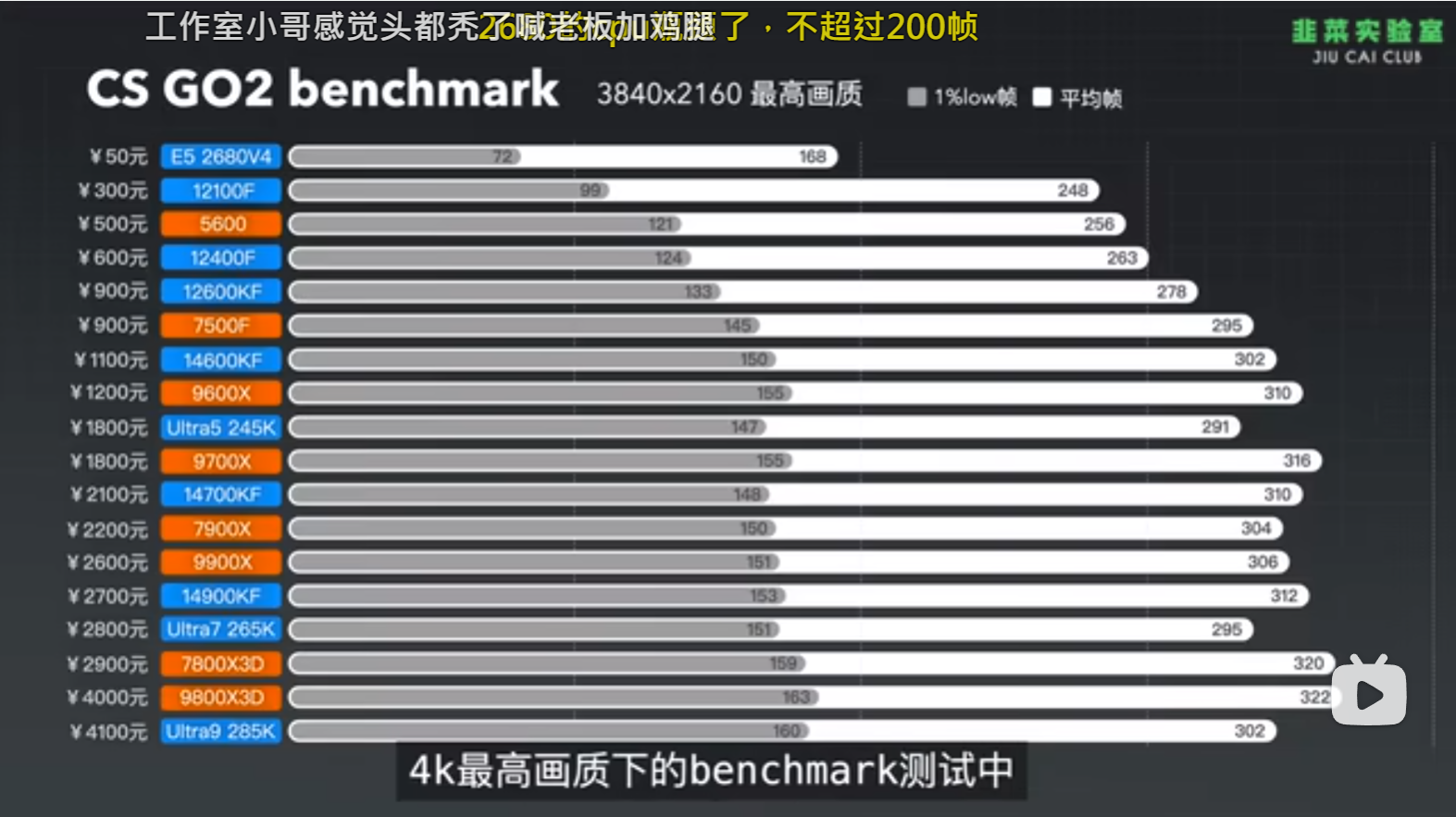

Counter-Strike 2 produced the Xeon E5-2680 v4's weakest results. At 4K maximum settings, its average frame rate was about 32% lower than that of the next-closest chip, the Core i3-12100F, never mind higher-performance chips like the Ryzen 7 9800X3D, which were almost twice as fast. The Broadwell-based chip delivered an average of 168 FPS, versus 248 FPS for the Core i3-12100F, while the fastest CPUs topped out at 322 FPS, all without any frame generation. 1% low framerates were even worse: the Xeon E5-2680 v4 managed just 72 FPS, while the fastest CPU landed at 163 FPS, 2.26x faster.
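
The figures in this paragraph use different baselines, which is easy to miss: a "% slower" number divides by the faster chip's result, while an "x faster" number divides by the slower chip's. A minimal Python sketch using only the FPS values quoted above; the helper names are ours, purely for illustration:

```python
def percent_slower(slow_fps: float, fast_fps: float) -> float:
    """Deficit of slow_fps as a percentage of the faster result (baseline = fast_fps)."""
    return (1 - slow_fps / fast_fps) * 100


def times_faster(fast_fps: float, slow_fps: float) -> float:
    """Speedup of fast_fps over slow_fps (baseline = slow_fps)."""
    return fast_fps / slow_fps


# Counter-Strike 2, 4K maximum settings, no frame generation (figures quoted in the article)
xeon_avg, i3_avg, best_avg = 168, 248, 322
xeon_low, best_low = 72, 163

print(f"Xeon vs Core i3-12100F, average: {percent_slower(xeon_avg, i3_avg):.1f}% slower")
print(f"Core i3-12100F vs Xeon, average: {(times_faster(i3_avg, xeon_avg) - 1) * 100:.1f}% faster")
print(f"Fastest CPU vs Xeon, average: {times_faster(best_avg, xeon_avg):.2f}x")
print(f"Fastest CPU vs Xeon, 1% low: {times_faster(best_low, xeon_low):.2f}x")
```

The same two averages give a 32.3% deficit for the Xeon but a 47.6% advantage for the i3-12100F, so "slower" and "faster" percentages in comparisons like these can't simply be swapped.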

The Xeon chip's awful frame rate in CS2 shows the problem with pairing such a powerful GPU with an "archaic" processor. But these CS2 results also serve as a baseline for the other tests.

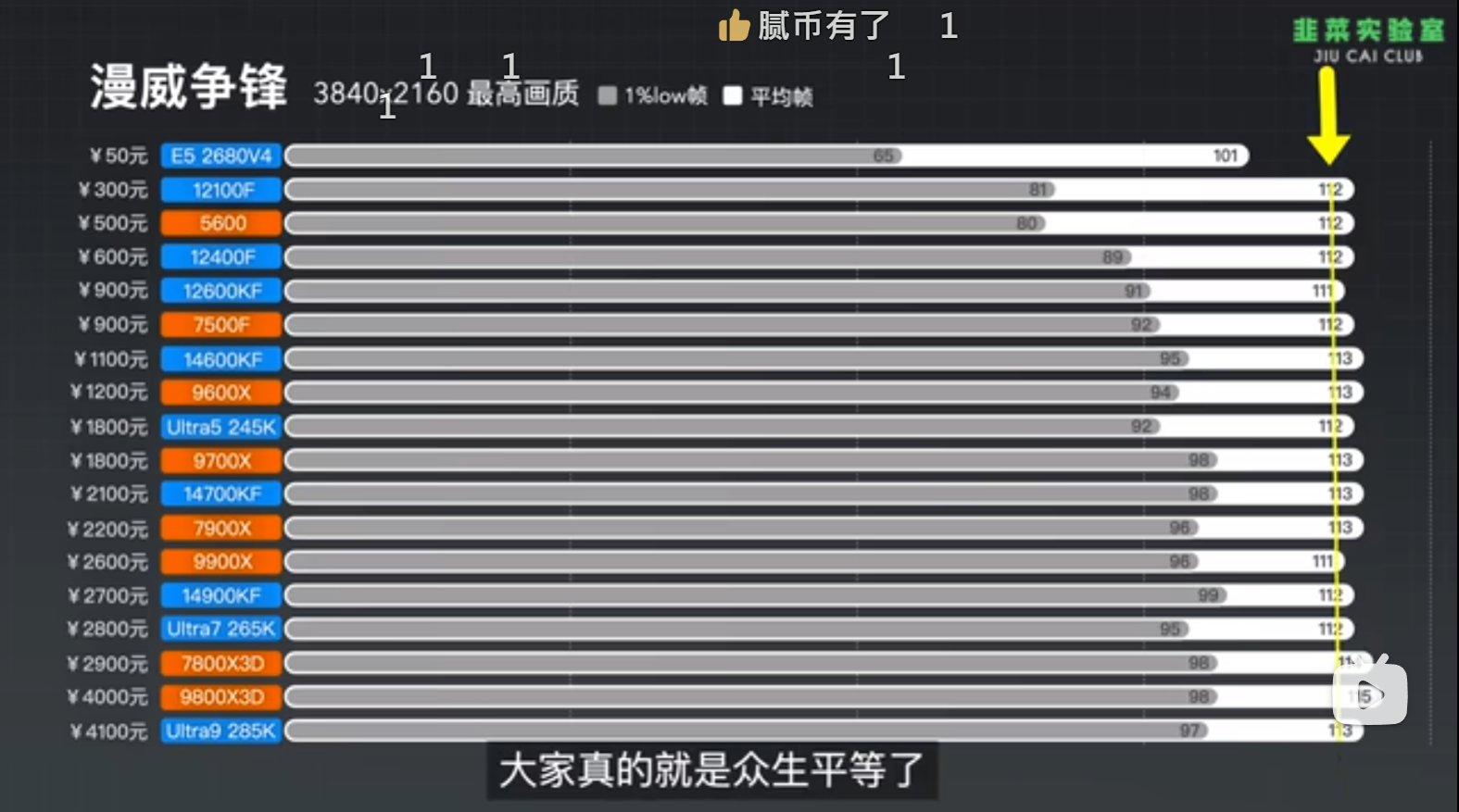

Marvel Rivals at 4K highest quality settings almost completely reverses the CS2 results. All the CPUs, from the Core i3-12100F to the Core Ultra 9 285K, delivered virtually identical frame rates of 111–115 FPS, revealing a bottleneck that isn't CPU-related. The Xeon E5-2680 v4 wasn't far behind, with an average of 101 FPS. That makes the fastest chip, the Ryzen 7 9800X3D, only 14% quicker than the nine-year-old Xeon. But again, minimum FPS matters, and the Xeon managed only 65 FPS compared to 80–99 FPS on the other processors, putting it up to 34% behind on minimum framerates.

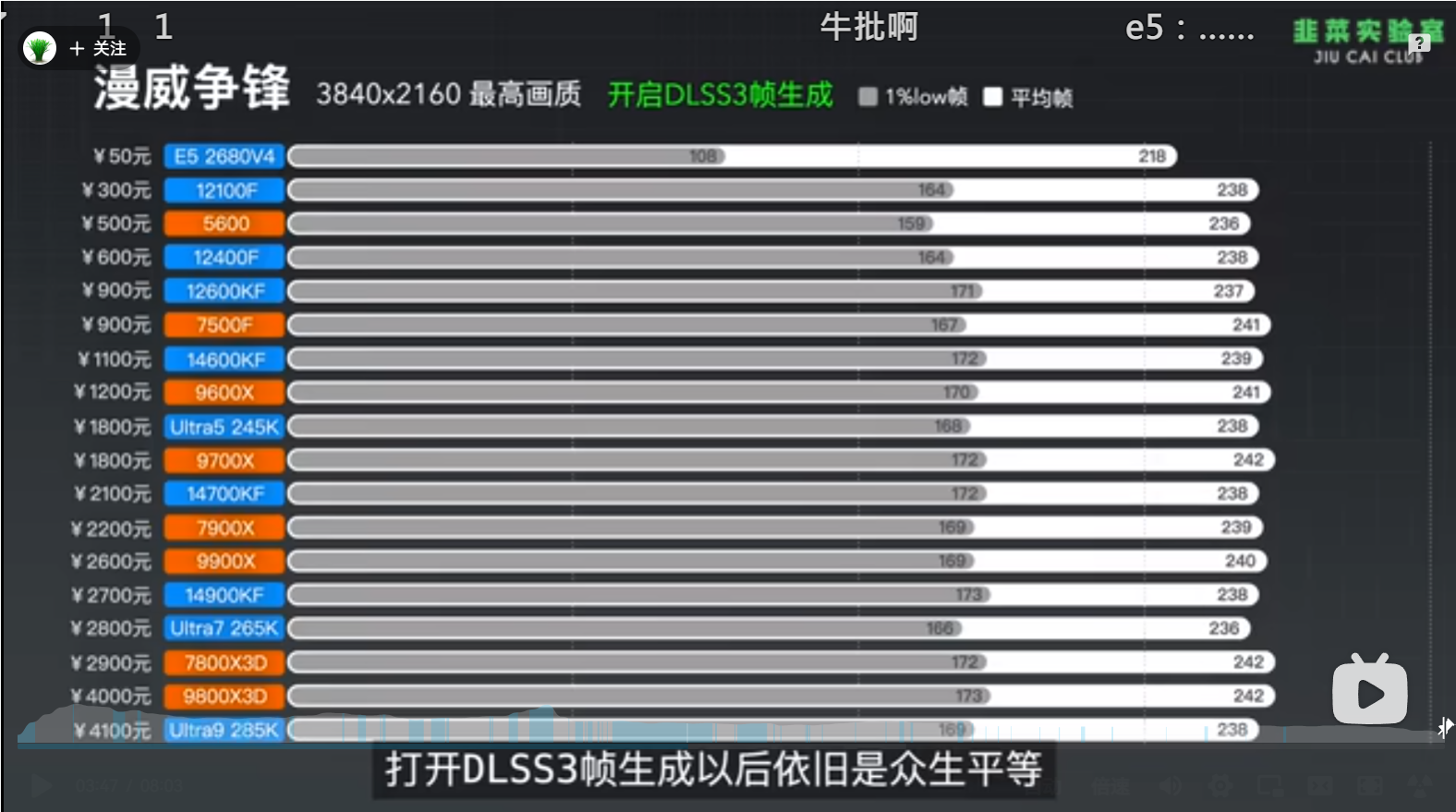

Interestingly, DLSS 3 frame generation more than doubled the performance of all the CPUs, with most of the chips at 238–242 FPS. (We have to assume DLSS upscaling was also enabled, as framegen alone should improve performance by no more than 80 to 90 percent at best.) With DLSS 3 framegen, the Xeon E5-2680 v4 closed the gap slightly, averaging 218 FPS. Minimums were still up to 38% lower, however.
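
The upscaling inference can be checked with a little arithmetic. Assuming DLSS 3 framegen displays one generated frame per rendered frame, halving the displayed rate gives the implied rendered rate; if that exceeds the native result, framegen alone can't explain the gain. This is a rough sketch, not the reviewer's method; the second row deliberately pairs the highest native figure (115 FPS) with the lowest framegen figure (238 FPS) as a worst case:

```python
FG_MULTIPLIER = 2  # assumed: DLSS 3 framegen shows one generated frame per rendered frame


def implied_rendered_fps(displayed_fps: float, multiplier: int = FG_MULTIPLIER) -> float:
    """Rendered (pre-framegen) frame rate implied by a displayed frame rate."""
    return displayed_fps / multiplier


# Marvel Rivals averages from the article: (name, native FPS, FPS with DLSS 3 framegen).
# The second row is a worst case: highest native result vs lowest framegen result.
cases = [
    ("Xeon E5-2680 v4", 101, 218),
    ("Other CPUs (worst case)", 115, 238),
]

for name, native, with_fg in cases:
    rendered = implied_rendered_fps(with_fg)
    # Framegen adds work rather than removing it, so on its own it should not raise
    # the rendered rate; a higher implied rate points to another change, such as upscaling.
    if rendered > native:
        verdict = "rendered rate went up, so framegen alone can't explain it"
    else:
        verdict = "consistent with framegen alone"
    print(f"{name}: native {native} FPS, implied rendered {rendered:.1f} FPS ({verdict})")
```

Both rows come out above their native results (109 vs 101 FPS and 119 vs 115 FPS), which is consistent with upscaling having been enabled alongside framegen.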

DLSS 4 with 4X MFG in Marvel Rivals pushed everything into the 400+ FPS range on average, and this time the Xeon was only 6% behind the fastest chips. Of course, the 1% lows were still much worse, at 164 FPS compared to 298–308 FPS on the fastest CPUs. That's still a large 45–47 percent deficit, and, as we pointed out in our DLSS 4 and MFG testing, dividing by four to get the base framerate and input rate shows the Xeon was running at only 41 FPS before framegen.

Cyberpunk 2077, at 4K maximum RT Overdrive (possibly Psycho) settings, ran at just 34 FPS on the best CPUs, including the Ryzen 7 9800X3D. Slower chips like the Ryzen 5 5600 and Core i3-12100F landed at 32 and 31 FPS, respectively. The Xeon E5-2680 v4 was again the slowest, but still averaged 29 FPS, meaning the Ryzen 7 9800X3D outperformed it by 17%. Minimum FPS was 19 on the Xeon versus up to 24 on the other CPUs, giving them up to a 26% lead.

DLSS 3 framegen and upscaling give a massive boost to framerates, with the Xeon landing at 95 FPS compared to 125–132 FPS on the other chips, up to 28% slower. DLSS 4 with multi frame generation (MFG) boosts framerates even more.

The Ryzen 7 9800X3D managed 255 FPS, and all the other AMD chips except the Ryzen 5 5600 landed at around 250 FPS. DLSS 4 with 4X MFG allowed the ancient Xeon E5-2680 v4 to nearly match the other Intel chips on average FPS: it scored 213 FPS, compared to 219–226 FPS on the other Intel CPUs. That's only a 6% difference at most... unless you look at minimum FPS. There, the Xeon managed only 78 FPS (basically tying the 12100F), while most of the other CPUs were in the 120–150 FPS range.

In short, no, the nine-year-old Xeon isn't going to keep up with the latest chips, with or without framegen, MFG, and upscaling. But it does better than you might expect, at least in the three games tested on the RTX 5090D. Frame generation can be especially useful in games that are heavily CPU-limited, since the GPU then has plenty of headroom to run the AI routines for frame generation. That makes it particularly helpful with the Xeon E5-2680 v4, which is a severely CPU-constrained environment.

That doesn't mean MFG and framegen are the solution to CPU bottlenecks, though. If you take some of the minimum FPS results and divide by four (as with the Xeon's 78 FPS minimum in Cyberpunk 2077), the base framerate was under 20 FPS. Quadrupled to 78 FPS, that might technically be playable, but it's far from a smooth experience.
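
The divide-by-four step can be written out explicitly. This sketch assumes 4X mode shows one rendered frame plus three generated ones and that input is sampled once per rendered frame, a simplification that ignores other sources of latency; the function names are illustrative only:

```python
MFG_MULTIPLIER = 4  # assumed 4X mode: one rendered frame plus three generated frames


def base_fps(displayed_fps: float, multiplier: int = MFG_MULTIPLIER) -> float:
    """Rendered (and input-sampling) frame rate behind a frame-generated display rate."""
    return displayed_fps / multiplier


def frame_time_ms(fps: float) -> float:
    """Interval between frames, in milliseconds."""
    return 1000.0 / fps


# Xeon E5-2680 v4 minimum (1% low) results with 4X MFG, as quoted in the article
for game, displayed in [("Marvel Rivals", 164), ("Cyberpunk 2077", 78)]:
    rendered = base_fps(displayed)
    print(f"{game}: {displayed} FPS displayed -> {rendered:.1f} FPS rendered, "
          f"{frame_time_ms(rendered):.1f} ms between rendered frames")
```

That works out to 41 FPS (about 24.4 ms) in Marvel Rivals and 19.5 FPS (about 51.3 ms) in Cyberpunk 2077, compared with roughly 16.7 ms for a genuinely rendered 60 FPS.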


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.


-Fran-: Well, this is their play.

When they need to emulate x86 on their ARM CPUs, they will just say, "Hey, look: even emulated, it runs pretty darn well on our SoCs!"

Neither good nor bad, but I'm pretty sure that's how it's going to play out.

Regards.

Alvar "Miles" Udell: 4K isn't a CPU-limited resolution, so this shouldn't come as any surprise.

JTWrenn: Interesting way to show some of the areas where CPU bottlenecks get stuck, but... without input lag testing, I feel like it doesn't tell the whole story. I wonder if the FPS was high but input lag was way off the charts?

It also makes me wonder how CPU-bottlenecked everything is right now.

Lamarr the Strelok: The i3-12100 specs aren't listed. Cores/threads? 168 FPS is "awful" for the Xeon in CS2, when that game barely uses any of its 14 cores? It's great for people to try getting as many FPS as possible, but this is way beyond what's needed for a fun time. Maybe pro gaming would make a difference, but anyone who gets one of these can still game on it in some games.

Lamarr the Strelok:

OneMoreUser said: "Pushing Fake Frames isn't doing well, it is faking."

FSR 3.1 has been a lifesaver for me. I thought it was nonsense for already high-end cards, but it works great for me. Upscaling and FG are great for my low-end rig. It sometimes adds graphics artifacts in STALKER, but that's normal for a STALKER game.

I used to like and laugh along with the "fake frames" comments, but no more. I call it frame gen now. I still hate ray tracing in general, though.

abufrejoval:

P.Amini said: "TL;DR What's the point?"

First reaction: what's the point of running Raspberry Pis in a cluster?

Or Doom inside a PDF?

As a parent and occasional CS lecturer, I'd say a lot of it is about encouraging critical thinking and doing your own testing instead of just adding to a giant pile of opinions based on other opinions influenced by who knows who.

It's also about acknowledging that the bottlenecks and barriers in gaming performance are not constant but have shifted significantly. And a lot of the current discussion is about finding new ways to escape diminishing returns on linear improvements.

For the longest time, Intel pushed top clocks as the main driver of gaming performance. And it wasn't totally wrong, especially since single-core CPUs were the norm for decades and single-threaded logic remains much simpler to implement.

I bought big Xeons years ago because they became incredibly cheap when hyperscalers started dropping them. My first, a Haswell 18-core E5-2696 v3, was still €700, but at the time I needed a server for my home lab and there was nothing else in that price range, especially with 44 PCIe lanes and 128GB of RAM capacity.

Those were the very same cores you could find in their desktop cousins, but typically with lower turbo clocks, because they had to share their power budget with up to 21 others, and it made very little sense to run server loads on a few high-clocked cores: you want to stay near the knee of the CMOS voltage/frequency curve for the best compute per watt.

Of course, when you use them in a workstation with a mix of use cases, the balance shifts toward higher clocks, which is why I was happy to use the Chinese variants of these E5 Xeons, which gave you a bit more turbo headroom.

I upgraded it to a Broadwell E5-2696 v4 more recently, because that cost €160 for the CPU, a bit of leftover thermal paste, and one hour for the swap. I guess it's my equivalent of putting a chrome exhaust on a V8 truck or giving an older horse a new saddle: not strictly an economic decision, but not bad husbandry, either.

It also runs Windows 11 (IoT), and VMs run better, because Broadwell actually added some pretty nifty ISA extensions designed for cloud use.

Synthetic benchmarks put both chips at very similar performance and energy use to a Ryzen 7 5800X3D that used to sit next to it (later replaced by a Ryzen 9 5950X), both with 128GB of ECC RAM.

Progress during the eight years between that first Xeon and the Ryzen came mostly from per-core performance, which improved to the point where 8 Zen cores could do the work of 18 or 22 Xeon cores, while the top-turbo scalar performance of a Xeon core was around 50% of what a Zen 3 core can do. All-core turbo on the Xeons is much lower than on Zen, and that's where the extra cores come in: essentially, you get 18 or 22 E-cores in modern parlance, at 110 W of actual maximum power draw (150 W TDP). That's where modern E-cores will show their progress, probably at a quarter of the power consumption at iso-performance.
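
The core-count comparison can be made concrete. This sketch assumes "doing the work of" means equal total all-core throughput, and ignores SMT, memory bandwidth, and workload differences; the names are illustrative only:

```python
ZEN3_CORES = 8
SCALAR_RATIO = 1 / 0.50  # a Xeon core at top turbo ~ 50% of a Zen 3 core (figure from the comment)


def zen_per_core_ratio(xeon_cores: int, zen_cores: int = ZEN3_CORES) -> float:
    """Per-core throughput ratio if zen_cores match xeon_cores in total throughput."""
    # Equal totals: zen_cores * zen_per_core == xeon_cores * xeon_per_core
    return xeon_cores / zen_cores


for xeon_cores in (18, 22):  # E5-2696 v3 and E5-2696 v4
    ratio = zen_per_core_ratio(xeon_cores)
    print(f"{xeon_cores}-core Xeon: a Zen 3 core does ~{ratio:.2f}x the work of a Xeon core "
          f"under all-core load (vs ~{SCALAR_RATIO:.1f}x at top single-core turbo)")
```

Under these assumptions the all-core gap (2.25x to 2.75x per core) comes out wider than the single-core gap (about 2x), which fits the point that the Xeons' all-core turbo falls off much more than Zen's.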

With the Xeon at around €5000 retail when new vs. €500 for Zen, these numbers are another nice metric for progress.

But since IT experimentation is both my job and my hobby, I recently used it to test just how much of a difference there was between those two systems when running modern games on a modern GPU with equal multi-core power but vastly different maximum per-core performance. In other words: how well had games adapted to the more numerous but weaker cores that consoles have offered for some years now (due to manufacturing cost pressure)?

And while I didn't test exhaustively, my findings were very similar: modern game titles spread the load so much better that these older CPUs with extra cores are quite capable of running games at acceptable performance. And no, it doesn't have to be a 22-core part, even if that one happens to have 55MB of L3 cache, which makes it almost a "3D" variant. It also has four memory channels...

That also explains why, two to four years ago, "gamer X99" mainboards were astonishingly popular on AliExpress: X99 and C612 are essentially the same hardware, and, like those Xeons, these parts were quite likely given a second life serving gamers who couldn't afford leading-edge hardware and were rather well served by what they got.

So the point: you may do better by not just jumping to the leading edge.

But because exploring takes means, naive buyers may not be able to exploit that and may end up paying the price elsewhere.

Did I mention (enough) that Windows 11 IoT runs quite well on these chips? Still nine years of support and very low risk of being Co-Piloted.