SK Hynix says new high bandwidth memory for GPUs on track for 2026 - HBM4 with 2048-bit interface and 1.5TB/s per stack is on the way

Big changes coming to HBM memory.

HBM3E memory, with a whopping 9.6 GT/s data transfer rate (9.6 gigatransfers, or 9.6 billion transfers per second on each data pin; multiplied by the interface width, this rate determines peak bandwidth) over a 1024-bit interface, has just hit mass production. But the demands of the artificial intelligence (AI) and high-performance computing (HPC) industries are growing rapidly, so HBM4 memory with a 2048-bit interface is only about two years away. A vice president of SK Hynix recently said that his company is on track to mass produce HBM4 by 2026, reports Business Korea.

"With the advent of the AI computing era, generative AI is rapidly advancing," Chun-hwan Kim, vice president of SK hynix, said at SEMICON Korea 2024. "The generative AI market is expected to grow at an annual rate of 35%."

The rapid growth of the generative AI market calls for higher-performance processors, which in turn need higher memory bandwidth. As a result, HBM4 will be needed to radically increase DRAM throughput. SK Hynix aims to start making next-generation HBM by 2026, which suggests production could begin as early as late 2025. This roughly aligns with Micron's plan to make HBM4 available in early 2026.

With a 9.6 GT/s data transfer rate, a single HBM3E memory stack can offer a theoretical peak bandwidth of 1.2 TB/s, translating to a whopping 7.2 TB/s for a memory subsystem consisting of six stacks. However, that bandwidth is theoretical, and shipping products fall short of it. For example, Nvidia's H200 'only' offers up to 4.8 TB/s, perhaps due to reliability and power concerns.
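The per-stack and six-stack figures follow from a simple product: interface width in bits times per-pin transfer rate, divided by 8 to get bytes. The sketch below assumes decimal units (1 TB/s = 1000 GB/s) and ignores protocol overhead, refresh, and other real-world losses; the function name `hbm_peak_bandwidth_gbs` is hypothetical and only for illustration.

```python
def hbm_peak_bandwidth_gbs(interface_bits: int, rate_gts: float) -> float:
    """Theoretical peak bandwidth of one HBM stack in GB/s (decimal units).

    Each of the interface_bits data pins carries rate_gts billion transfers
    (one bit each) per second; dividing by 8 converts bits to bytes.
    """
    return interface_bits * rate_gts / 8


# HBM3E figures quoted in the article: 1024-bit interface at 9.6 GT/s.
per_stack = hbm_peak_bandwidth_gbs(1024, 9.6)  # 1228.8 GB/s, roughly 1.2 TB/s
six_stacks = 6 * per_stack                     # 7372.8 GB/s, roughly 7.4 TB/s

print(f"per stack:  {per_stack / 1000:.2f} TB/s")
print(f"six stacks: {six_stacks / 1000:.2f} TB/s")
```

Multiplying the unrounded per-stack value gives about 7.37 TB/s, slightly above the 7.2 TB/s obtained by multiplying the rounded 1.2 TB/s figure; either way, it is a theoretical ceiling rather than what a product actually delivers.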

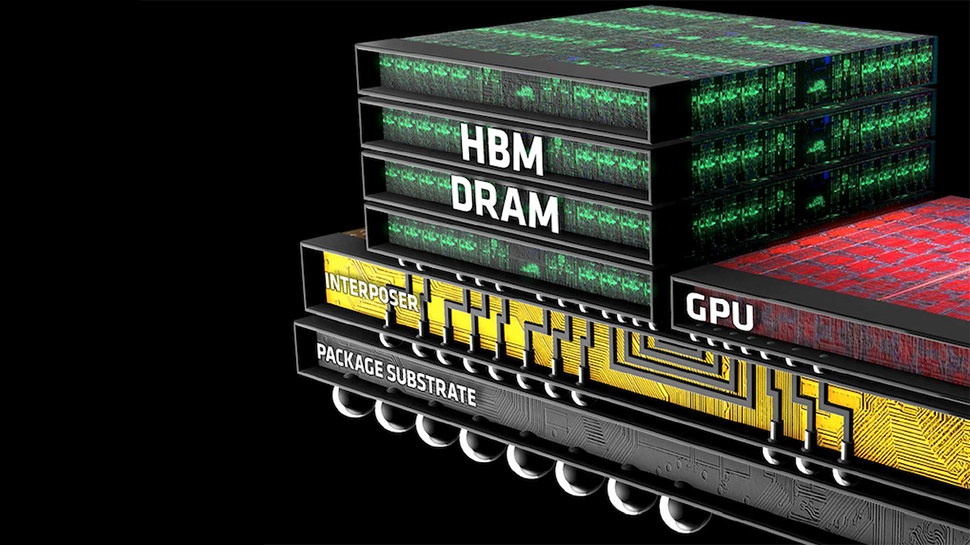

According to Micron, HBM4 will use a 2048-bit interface to increase theoretical peak memory bandwidth per stack to over 1.5 TB/s. To get there, HBM4 will need a data transfer rate of only around 6 GT/s, which will help keep the power consumption of next-generation DRAM in check. On the other hand, a 2048-bit memory interface will require very sophisticated routing on an interposer, or placing HBM4 stacks directly on top of a chip. In both cases, HBM4 will be more expensive than HBM3 and HBM3E.
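Why does a wider interface let HBM4 run at a lower data rate? Because peak bandwidth is width times per-pin rate, doubling the width halves the rate needed for a given target. The sketch below, assuming decimal units and no protocol overhead, works backwards from Micron's 1.5 TB/s target; `required_rate_gts` is a hypothetical helper name, not part of any library.

```python
def required_rate_gts(target_tbs: float, interface_bits: int) -> float:
    """Per-pin data rate (GT/s) needed to reach target_tbs of peak bandwidth.

    Assumes decimal units (1 TB/s = 1000 GB/s) and one bit per pin per transfer.
    """
    target_gbits_per_s = target_tbs * 1000 * 8  # TB/s -> GB/s -> Gbit/s
    return target_gbits_per_s / interface_bits


# Rate each interface width would need to hit 1.5 TB/s per stack.
for width in (1024, 2048):
    print(f"{width}-bit interface: {required_rate_gts(1.5, width):.2f} GT/s for 1.5 TB/s")

# The reverse direction: a 2048-bit stack at roughly 6 GT/s.
hbm4_peak_tbs = 2048 * 6.0 / 8 / 1000
print(f"2048-bit @ 6 GT/s: {hbm4_peak_tbs:.3f} TB/s")
```

A 1024-bit stack would need roughly 11.7 GT/s to reach 1.5 TB/s, while a 2048-bit stack needs under 5.9 GT/s, which is consistent with the "around 6 GT/s" figure. The article attributes the power benefit to this lower per-pin rate; the sketch only covers the bandwidth arithmetic.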

SK Hynix's sentiment regarding HBM4 seems to be shared by Samsung, which says it is on track to produce HBM4 in 2026. Interestingly, Samsung is also developing customized HBM memory solutions for select clients.

"HBM4 is in development with a 2025 sampling and 2026 mass production timeline," said Jaejune Kim, Executive Vice President, Memory, at Samsung, at the latest earnings call with analysts and investors (via SeekingAlpha). "Demand for also customized HBM is growing, driven by generative AI and so we're also developing not only a standard product, but also a customized HBM optimized performance-wise for each customer by adding logic chips. Detailed specifications are being discussed with key customers."


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

bit_user: "Demand for also customized HBM is growing, driven by generative AI and so we're also developing not only a standard product, but also a customized HBM optimized performance-wise for each customer by adding logic chips. Detailed specifications are being discussed with key customers."

For me, this is perhaps the most intriguing part. I wonder if they'll be able to overcome the performance limitations of standard HBM4, or if the hybrid stack designs are really just about simplifying routing, saving energy, and/or reducing cost.

gg83: "According to Micron, HBM4 will use a 2048-bit interface to increase theoretical peak memory bandwidth per stack to over 1.5 TB/s. To get there, HBM4 will need to feature a data transfer rate of around 6 GT/s, which will allow to keep the power consumption of next-generation DRAM in check"

How does HBM affect DRAM?

bit_user:

gg83 said: "How does HBM affect DRAM?"

That's a good question. My understanding is that it's not fundamentally different from regular DRAM, but simply has a lot more parallelism and compensates by running at a lower interface speed.

However, I haven't kept up to date on all the various developments, as HBM has continued to evolve.