Gigabyte twists its RAM slots to fit 24TB of DDR5 sticks into a standard server — AMD EPYC sports an impossible 48 DIMMs in new configs

Leading-edge servers need leaning-edge DIMMs

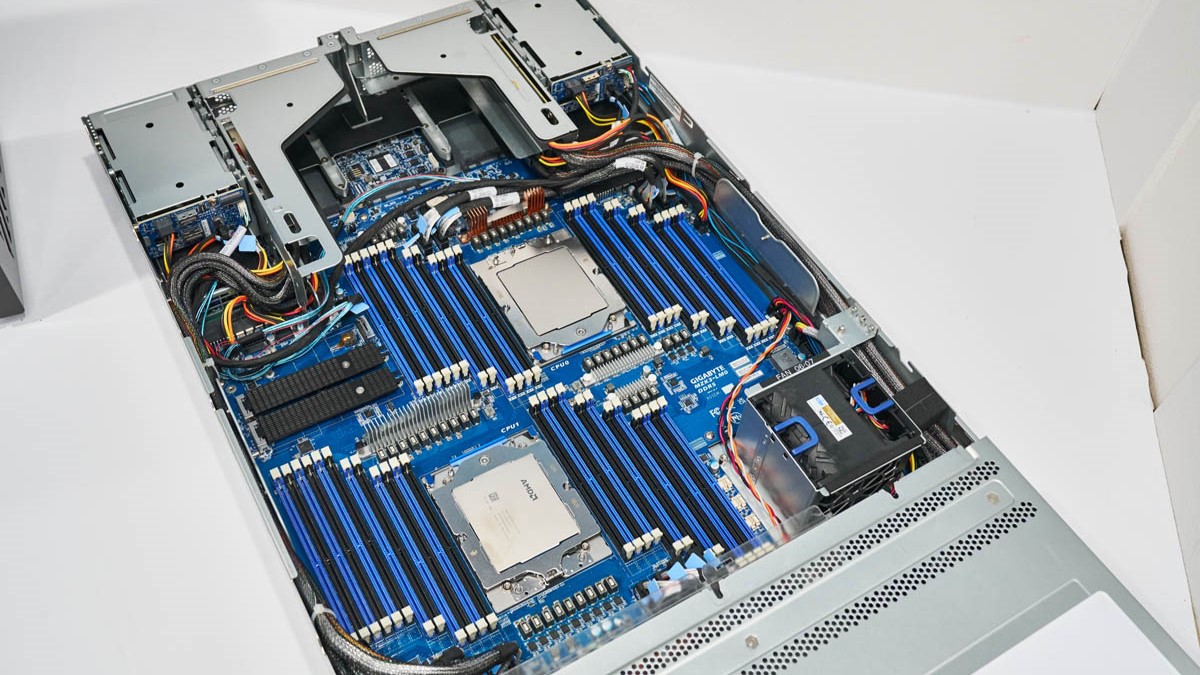

Computex 2024 was a massive expo, and as such, it had some hidden delights that we didn't manage to spot for ourselves. One example is the Gigabyte R283-ZK0, a server whose motherboard does the seemingly impossible and fits 48 DDR5 memory slots inside a standard 2U form factor.

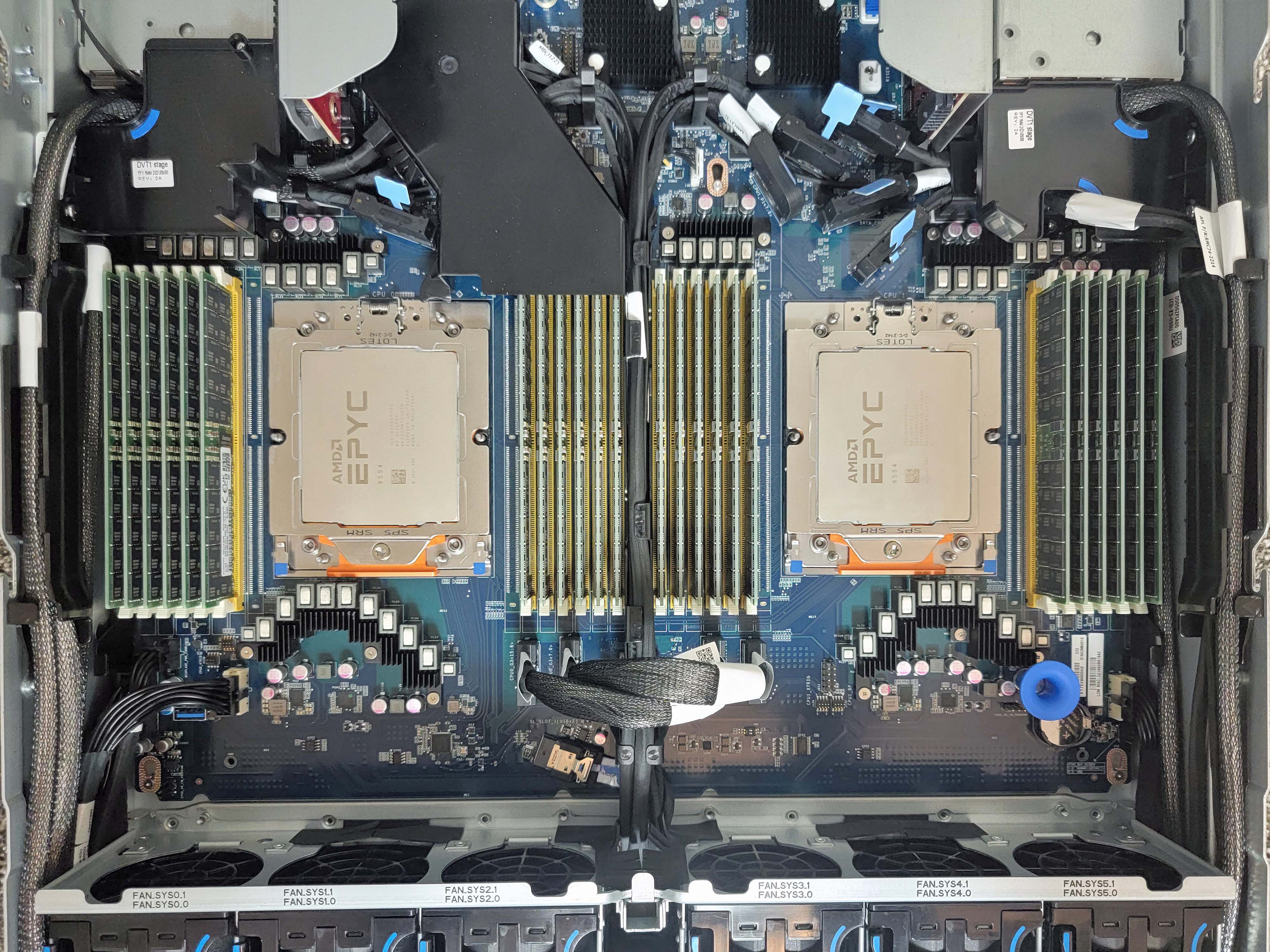

First spotted by ServeTheHome, the Gigabyte board does indeed fit 48 memory slots on a single motherboard dominated by a pair of AMD's SP5 sockets for EPYC processors. To accommodate such an insane amount of RAM, the DIMMs cannot all sit in a row next to the CPUs; instead, Gigabyte arranges the slots in staggered groups of 6-2-4-4-2-2-4. This staircase design allows NVMe SSDs and extra cooling fans to hide in the resulting nooks.
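As a quick sanity check on that layout, the group sizes can simply be added up. This is a minimal sketch, and it rests on an assumption the article does not state outright: that the 6-2-4-4-2-2-4 pattern describes the slots for one socket and is repeated for the second. The variable names are illustrative only.

```python
# Group sizes as quoted in the article.
staircase = [6, 2, 4, 4, 2, 2, 4]

# Assumption (not confirmed by the article): the pattern covers one
# socket and is repeated for the other socket.
sockets = 2

slots_per_socket = sum(staircase)
total_slots = slots_per_socket * sockets

print(slots_per_socket)  # 24
print(total_slots)       # 48
```

Under that assumption, the staircase accounts for all 48 slots the board is reported to carry.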

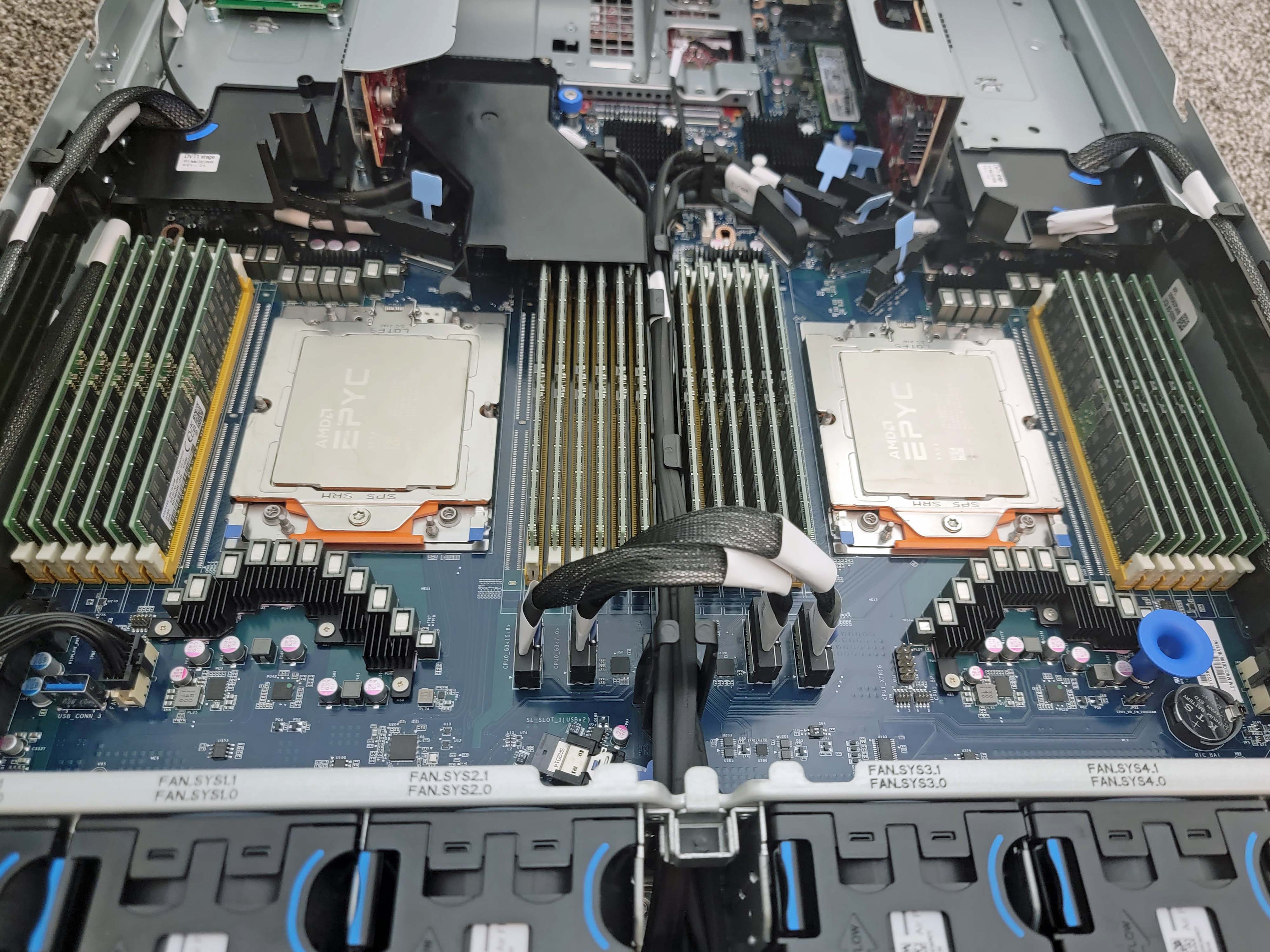

Our testbench for the EPYC 9004 series was a dual-socket machine with 12 memory channels per CPU, but with only one DIMM per channel (1DPC). This board did fit the dual sockets and all of their RAM in a straight line, but serious compromises still had to be made to fit its 24 DDR5 DIMMs (half the slot count of the Gigabyte R283-ZK0). As shown in our picture of the test system below, the cramped arrangement doesn't leave enough space to run the 12 memory channels in a two-DIMMs-per-channel (2DPC) configuration.
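The slot counts above follow directly from sockets, channels, and DIMMs per channel. The sketch below works through that arithmetic; the function name `dimm_slots` is hypothetical. The per-DIMM figure at the end is only what the headline numbers imply if 24TB is spread evenly over 48 slots and 1TB is taken as 1024GB. It is not a module size confirmed by the article.

```python
def dimm_slots(sockets: int, channels_per_socket: int, dimms_per_channel: int) -> int:
    """Total DIMM slots for a board with the given memory topology."""
    return sockets * channels_per_socket * dimms_per_channel


# Dual-socket test system: 12 channels per CPU, one DIMM per channel (1DPC).
test_system_slots = dimm_slots(sockets=2, channels_per_socket=12, dimms_per_channel=1)

# Gigabyte R283-ZK0: same sockets and channels, two DIMMs per channel (2DPC).
r283_slots = dimm_slots(sockets=2, channels_per_socket=12, dimms_per_channel=2)

# Assumption: the headline 24TB is divided evenly across all slots,
# with 1TB counted as 1024GB.
implied_gb_per_dimm = (24 * 1024) // r283_slots

print(test_system_slots)    # 24
print(r283_slots)           # 48
print(implied_gb_per_dimm)  # 512
```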

AMD had to use skinnier DDR5 slots to cram 12 memory channels into our test system. As a result, AMD warned us not to put any lateral pressure on the DIMMs when installing them or the heatsink, as the company had seen DIMMs shear off under slight pressure because of the skinnier slots. That highlights the big challenges that Gigabyte's interesting new solution has solved.

The innovative Gigabyte R283-ZK0 will ship ready for two AMD EPYC 9004 series processors, which come with up to 128 cores/256 threads and run at up to 300W TDP. Eight NVMe bays sit in the front, and four hot-swappable 2.5" SSD bays take up the rear. Inside are two M.2 slots and four FHHL (full-height half-length) PCIe Gen5 x16 slots. Two gigabit LAN ports and two OCP 3.0 slots cover network connectivity, and two 2700W 80 Plus Titanium power supplies keep the lights on.

The massive SP5 CPU sockets eat up board real estate like Blackrock, which creates a challenge for motherboard and system vendors looking to supply customers who need large amounts of RAM in a small footprint. If all you need is a dual-socket 2DPC server, Gigabyte has a solution in the G493-ZB0. However, that 4U server is built for GPUs and takes up a hefty four rack units.

Gigabyte has shaved off half of the thickness of the G493-ZB0 and doubled the memory capacity of EPYC systems, creating the first 2U dual-socket 2DPC server. Though the end result may look silly, it is an impressive feat that will surely excite the few users who need such extreme memory capacity in very limited space.


Sunny Grimm is a contributing writer for Tom's Hardware. He has been building and breaking computers since 2017, serving as the resident youngster at Tom's. From APUs to RGB, Sunny has a handle on all the latest tech news.


jeremyj_83 I am anxious to see this in the wild as it could offer a 4:1 consolidation or more for a lot of companies.

thestryker Saw this when STH posted the news and it's still boggling that they were able to properly engineer even trace lengths and squeeze in all 48 slots.

I'm waiting to see if AMD supports MRDIMMs on Zen 5 as that should mostly negate the need for this type of design. The initial numbers being put out by Micron surprisingly show it with lower latency, but that may also be tied to capacity/throughput so I don't want to make any assumptions there.

Anandtech posted about the Micron release yesterday: https://www.anandtech.com/show/21470/micron-mrdimm-lineup-expands-datacenter-dram-portfolio

micheal_15 1152 GIGABYTES of Ram. Almost enough to meet the minimum requirements to run Windows 12....

edzieba With how common it is to add DIMM slots in sets of 4 (and occasionally 2) to a board, it still surprises me that singlet sockets remain the standard. Monolithic DIMM 'slot blocks' would allow for mechanically more robust sockets and reduced parts-count. Even if colour-coding is desired, multi-shot injection moulding is hardly a rarity.

bit_user

edzieba said: With how common it is to add DIMM slots in sets of 4 (and occasionally 2) to a board, it still surprises me that singlet sockets remain the standard.

In 24/7 servers, DIMMs probably fail at a sufficiently high rate that I think they'd want to be able to replace them in singles or doubles. Replacing failed components is probably the main reason why server components are still socketed. Even Ampere Altra is socketed!

edzieba said: Monolithic DIMM 'slot blocks' would allow for mechanically more robust sockets and reduced parts-count.

What I think we're going to see is backplanes primarily for CXL.mem devices, not unlike storage backplanes. Imagine 48x M.2 boards plugged into it, perpendicularly.

edzieba

bit_user said: In 24/7 servers, DIMMs probably fail at a sufficiently high rate that I think they'd want to be able to replace them in singles or doubles.

I don't mean the DIMMs themselves, I mean the DIMM sockets that are soldered to the motherboards.