What Does One Petabyte Of Storage (And $500K) Look Like?

We're big fans of big technology, and with more than 1 PB of capacity, Aberdeen's Petarack is surely something to marvel at. We take a look inside and figure out what it takes to reliably deploy 1 000 terabytes of space in an enterprise environment.

Meet The Petarack

We all know what a megabyte is, and gigabytes are familiar as well. Terabytes were more recently folded into our vocabulary. But there’s a good chance that many enthusiasts still haven't wrapped their minds around the idea of a petabyte.

In short, we’re talking about one thousand terabytes, or enough space for 20 000 Blu-ray movie rips. Although we’re a long way from seeing petabytes of data used in a desktop context, a company called Aberdeen recently offered to ship us a more business-oriented solution already capable of serving up a petabyte of data for a cool half a million bucks. I thought about taking the company up on its offer, but ultimately decided that there was no way for me to tax its potential given the equipment currently in our Bakersfield lab. So, I thought I’d push Aberdeen for more information on its creation and break down its internals.

What, exactly, does it take to deliver a petabyte of storage, and what do you get for that nice, even price tag of $495 000?

Connecting Servers To Storage

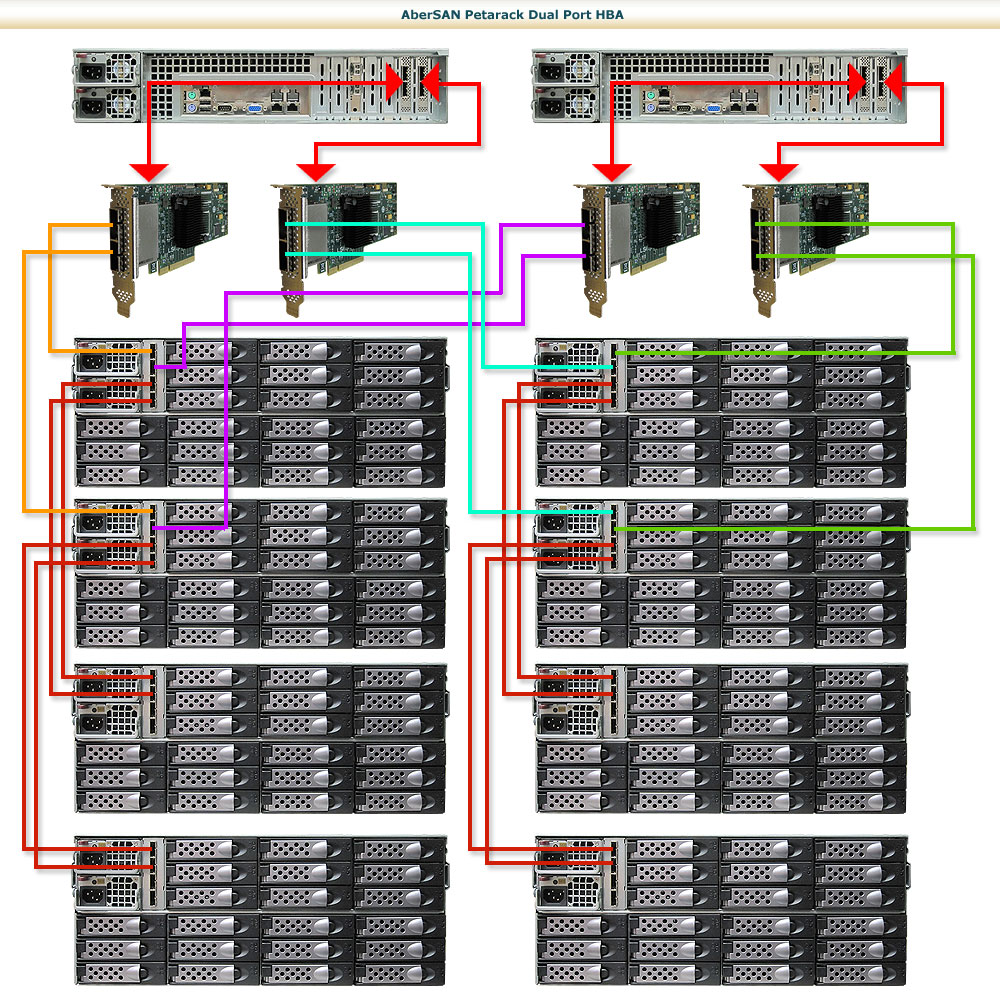

The Petarack consists of eight JBODs. Each JBOD carries 45 nearline SAS hard drives with 3 TB of capacity each, for a total of 360 drives and 1080 TB of storage. Anticipating the bickering over capacity calculations, Aberdeen clarifies: “The Petarack carries 1080 TB, going by the decimal capacity written on the hard drives themselves.”

Aberdeen claims that there's enough empty rack space to mount a ninth JBOD, yielding an additional 135 TB. It also says that adding two more HBAs (one in each of the system's two servers) would push the configuration to 1215 TB in the same rack. By default, the company didn't go that route, leaving free PCIe slots for 10 Gb Ethernet cards or Fibre Channel HBAs.
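The arithmetic behind those figures is simple, and the gap between decimal and binary units explains most of the "bickering." Below is a minimal Python sketch that recomputes raw capacity for eight or nine JBODs and converts it to binary units (TiB and PiB are our labels; the article itself only uses decimal TB). It counts raw drive capacity only, before any RAID or file system overhead.

```python
# Raw capacity of the Petarack: JBODs x 45 drives x 3 TB per drive.
# Drive labels use decimal units (1 TB = 10**12 bytes); many operating systems
# report binary units (1 TiB = 2**40 bytes), which makes the same disks look smaller.

DRIVES_PER_JBOD = 45
DRIVE_TB = 3          # decimal terabytes, as printed on the drive label

TB = 10**12           # bytes per decimal terabyte
TIB = 2**40           # bytes per binary tebibyte
PIB = 2**50           # bytes per binary pebibyte


def raw_capacity_bytes(jbods: int = 8) -> int:
    """Total raw bytes across all drives, before RAID or file system overhead."""
    return jbods * DRIVES_PER_JBOD * DRIVE_TB * TB


if __name__ == "__main__":
    for jbods in (8, 9):  # default rack, then with the optional ninth JBOD
        total = raw_capacity_bytes(jbods)
        print(f"{jbods} JBODs, {jbods * DRIVES_PER_JBOD} drives: "
              f"{total // TB} TB = {total / TIB:.1f} TiB = {total / PIB:.2f} PiB")
```

For the default eight JBODs this works out to 1080 TB, or about 982.3 TiB (roughly 0.96 PiB); passing `jbods=9` reproduces the 1215 TB figure.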

Inside The Petarack

Most hardware-based RAID controllers have on-board processors designed specifically to accelerate storage tasks, and most modern 6 Gb/s RAID-on-chip (RoC) processors are dual-core implementations running somewhere under 1.2 GHz. Although these controllers generally claim to support as many as 256 devices via expanders, once you attach a couple dozen drives, the RoC becomes your performance bottleneck. Additionally, they can only address a limited amount of cache memory (somewhere between 512 MB and 4 GB). Naturally, a big storage appliance has to work around that.

Two Dual-Xeon Servers

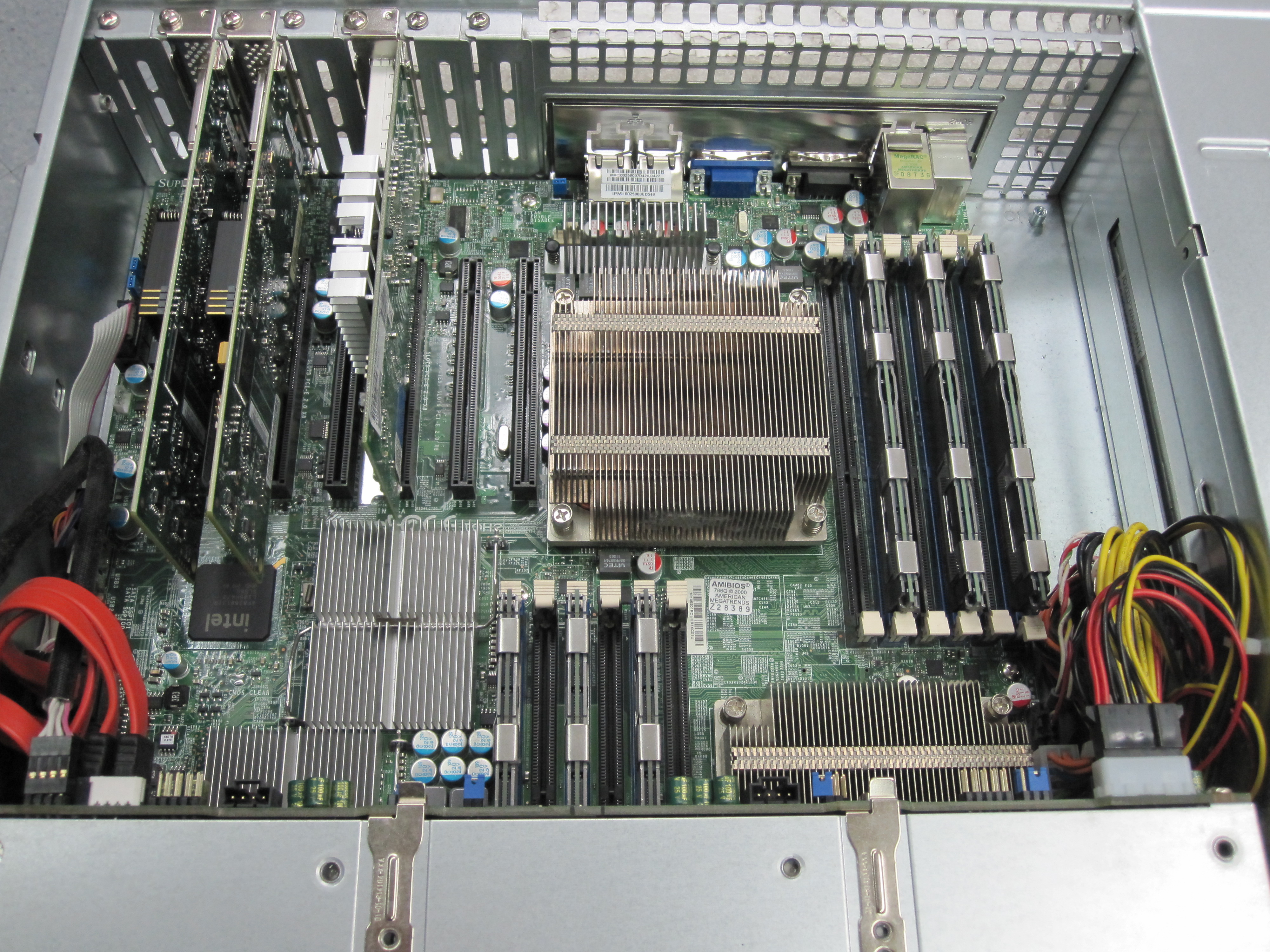

Instead of hardware RAID cards, the Petarack employs HBAs that rely on host processing power. This is supplied by two six-core Xeon X5670s running at 2.93 GHz in each of the rack’s two storage servers. By default, each server also includes 48 GB of DDR3 memory, though that’s expandable to 192 GB. The dual-server concept is a nod to redundancy. In the event that one fails, the other automatically steps in.
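With HBAs, the parity math that a RoC would normally handle runs on those Xeons instead. As a rough illustration (not ZFS's actual RAID-Z code, which uses variable-width stripes and additional parity calculations for RAID-Z2 and RAID-Z3), here is a minimal Python sketch of single-parity XOR: computing a stripe's parity block and rebuilding one lost data block from the survivors.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR equal-length blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_parity(data_blocks: list[bytes]) -> bytes:
    """Parity block for one stripe: the XOR of all its data blocks."""
    return xor_blocks(data_blocks)


def rebuild_missing(surviving: list[bytes], parity: bytes) -> bytes:
    """Recover a single lost data block by XORing the survivors with the parity."""
    return xor_blocks(surviving + [parity])


if __name__ == "__main__":
    stripe = [b"disk0 data", b"disk1 data", b"disk2 data"]  # equal-length blocks
    parity = make_parity(stripe)
    # Pretend disk1 failed and rebuild its block from the other disks plus parity.
    recovered = rebuild_missing([stripe[0], stripe[2]], parity)
    print(recovered)  # b'disk1 data'
```

Because XOR is its own inverse, the same operation both generates the parity and reconstructs a missing block.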


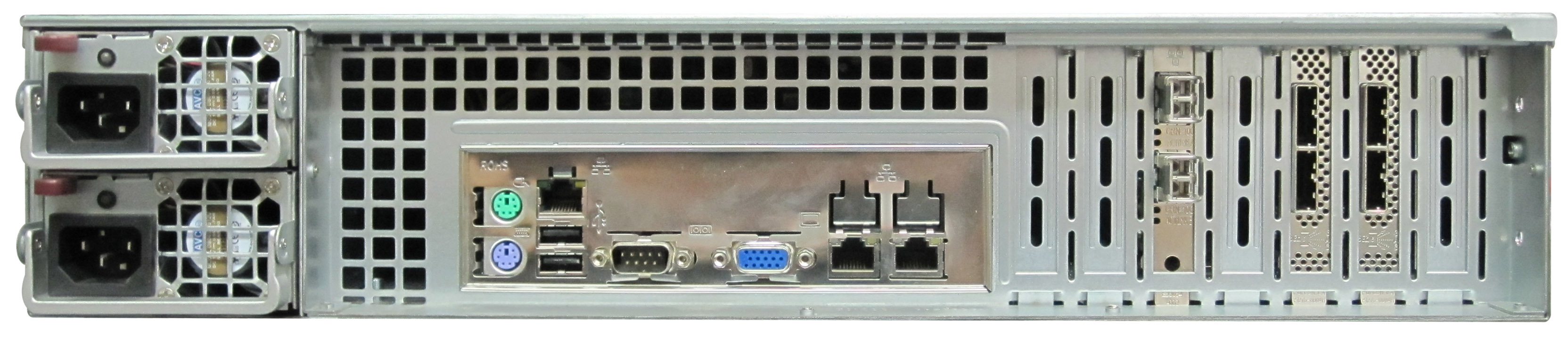

As you can see in the photo, extra PCIe expansion is available for more storage or networking connectivity.

Populating The Petarack

Each JBOD includes two expanders, and each expander is cabled to a different storage server for redundancy. The Petarack's SAS drives are dual-ported, so every drive has a connection to both servers, again for redundancy. The idea is that if one server or one HBA drops offline, there is always another data path available.
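To make the redundancy argument concrete, the following Python sketch models the cabling described above as a set of paths and checks whether every drive stays reachable after a given set of failures. The component names are hypothetical, and the model simplifies each server to a single HBA; it is a reasoning aid, not a description of Aberdeen's actual multipath software.

```python
from itertools import product

SERVERS = ("server_a", "server_b")
JBODS = 8
DRIVES_PER_JBOD = 45


def paths_to_drive(jbod: int, drive: int) -> list[tuple[str, ...]]:
    """Every server -> HBA -> expander -> drive-port chain that reaches one drive.

    Assumes one expander per JBOD is cabled to each server, and each dual-ported
    SAS drive has one port on each expander (hypothetical component names).
    """
    paths = []
    for side, server in enumerate(SERVERS):
        paths.append((
            server,
            f"{server}/hba",
            f"jbod{jbod}/expander{side}",
            f"jbod{jbod}/drive{drive}/port{side}",
        ))
    return paths


def survives(failed: set[str]) -> bool:
    """True if every drive still has at least one path avoiding all failed parts."""
    for jbod, drive in product(range(JBODS), range(DRIVES_PER_JBOD)):
        if not any(failed.isdisjoint(path) for path in paths_to_drive(jbod, drive)):
            return False
    return True


if __name__ == "__main__":
    print(survives({"server_a"}))                          # True
    print(survives({"jbod3/expander1"}))                   # True
    print(survives({"server_a/hba", "jbod3/expander1"}))   # False: JBOD 3 cut off
```

Any single failure leaves every drive reachable; it takes two failures on opposite sides, such as one server's HBA plus the other side's expander in the same JBOD, to cut a JBOD off.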

Vibration is a big factor in a chassis with so many disks. If you were to build your own enclosure with 360 drives and not use any sort of rotational vibration correction mechanism, performance would degrade by about 50% (according to Aberdeen), and you would see multiple drive failures in a short period of time. More specifically, the vibration would cause read errors, and the hard drives would repeatedly try to reread and correct them. Not only does this lower the performance, but it also wears the disks out, reducing lifespan.

For that reason, 3 TB nearline SAS drives make the most sense in an environment like this. They offer the reliability characteristics of an enterprise product with the capacity advantages of magnetic SAS-based storage. Aberdeen says the Petarack also supports 15 000 RPM SAS drives or SSDs for performance-sensitive applications. Multiple technologies can even coexist within a single Petarack, though obviously that’ll affect its ability to host an actual petabyte of storage space.

Creating Big RAID Arrays

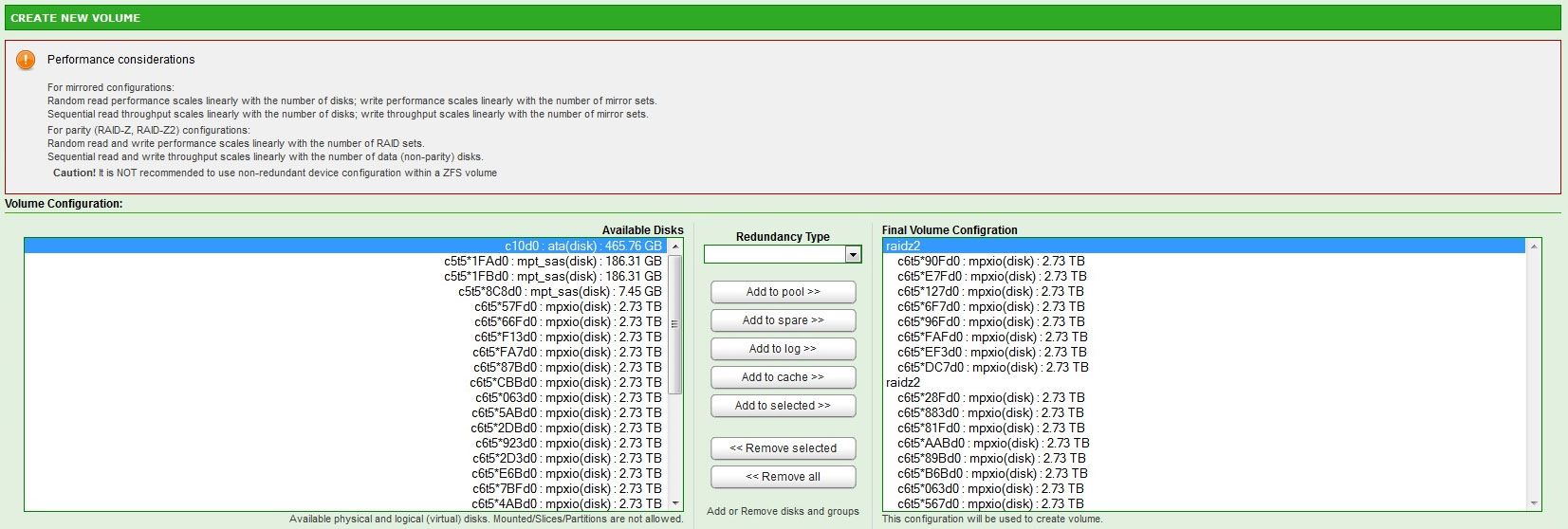

Generally speaking, it's easy to build a RAID array with a typical controller card. However, even high-end boards often have limitations, such as a maximum of 32 drives per array. You can work around that by combining several 32-drive arrays, though this involves compromises in reliability.
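The reliability compromise comes from the fact that a volume built by combining separate arrays is lost if any one of them fails. A minimal Python sketch, assuming a 32-drive-per-array limit and a purely hypothetical, independent per-array failure probability `p_array_loss`, splits 360 drives into even groups and shows how the combined volume's survival odds shrink as the number of arrays grows.

```python
import math


def split_into_arrays(total_drives: int, max_per_array: int = 32) -> list[int]:
    """Fewest arrays that respect the per-array limit, with drives spread evenly."""
    arrays = math.ceil(total_drives / max_per_array)
    base, extra = divmod(total_drives, arrays)
    return [base + 1] * extra + [base] * (arrays - extra)


def combined_survival(p_array_loss: float, arrays: int) -> float:
    """Probability the combined volume survives: every member array must survive.

    Assumes arrays fail independently, which is optimistic for drives that
    share an enclosure, power, and vibration environment.
    """
    return (1.0 - p_array_loss) ** arrays


if __name__ == "__main__":
    layout = split_into_arrays(360)
    print(f"{len(layout)} arrays of {layout[0]} drives each")
    # p_array_loss = 0.01 is an illustrative number, not a measured rate.
    for n in (1, 4, len(layout)):
        print(f"{n:>2} arrays: {combined_survival(0.01, n):.3f}")
```

With 12 arrays and that made-up 1% figure, the combined volume survives only about 88.6% of the time, versus 99% for a single array.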

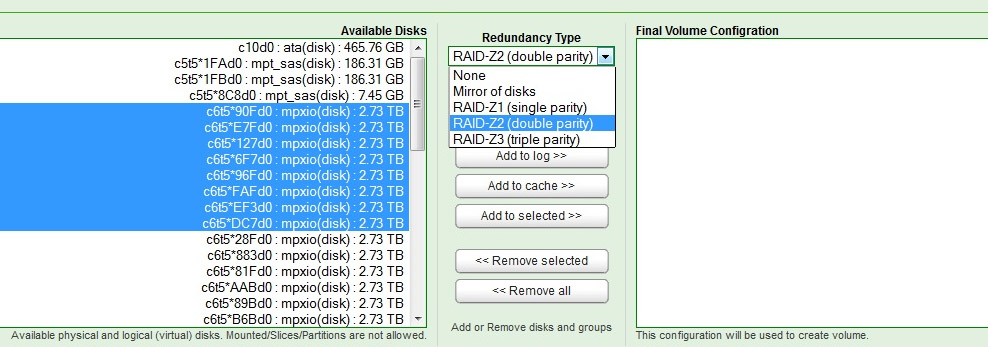

Aberdeen instead leans on an OpenSolaris-based kernel with a GNU/Debian user interface for creating RAID arrays, circumventing the limitations of hardware-based RAID solutions. You get several RAID level options: Stripe, Mirror, RAID-Z1 (single parity, similar to RAID 5), RAID-Z2 (double parity, similar to RAID 6), and RAID-Z3 (triple parity). Aberdeen claims that you can even have a pool of 180 drives in a Petarack mirrored to the other 180 drives. The company also says its ideal configuration is RAID-Z2 in each of the eight JBODs, with all eight combined into a single pool.
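How much of the 1080 TB survives each layout depends on how much space goes to redundancy. This Python sketch estimates usable space under two stated assumptions: each RAID-Z level uses one vdev per JBOD (the article doesn't say how Aberdeen actually groups drives, and 45-drive vdevs are wider than is usually recommended), and ZFS metadata, padding, spares, and reserved space are ignored. Treat the results as upper bounds.

```python
DRIVE_TB = 3
JBODS = 8
DRIVES_PER_JBOD = 45

# Parity drives per vdev for each RAID-Z level.
PARITY = {"raidz1": 1, "raidz2": 2, "raidz3": 3}


def usable_tb(layout: str) -> int:
    """Approximate usable decimal TB for the whole rack.

    Assumes one vdev per JBOD for RAID-Z layouts and ignores ZFS metadata,
    padding, hot spares, and reserved space, so real numbers will be lower.
    """
    total_drives = JBODS * DRIVES_PER_JBOD
    if layout == "stripe":
        return total_drives * DRIVE_TB
    if layout == "mirror":
        # Half the drives hold copies of the other half (180 mirrored to 180).
        return total_drives // 2 * DRIVE_TB
    if layout in PARITY:
        data_drives = DRIVES_PER_JBOD - PARITY[layout]
        return JBODS * data_drives * DRIVE_TB
    raise ValueError(f"unknown layout: {layout}")


if __name__ == "__main__":
    for layout in ("stripe", "mirror", "raidz1", "raidz2", "raidz3"):
        print(f"{layout:>7}: {usable_tb(layout)} TB")
```

Under those assumptions, the mirrored layout yields 540 TB and RAID-Z2 per JBOD about 1032 TB, so Aberdeen's preferred setup keeps roughly 96% of the raw capacity while tolerating two drive failures per JBOD.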

Picking The Right File System

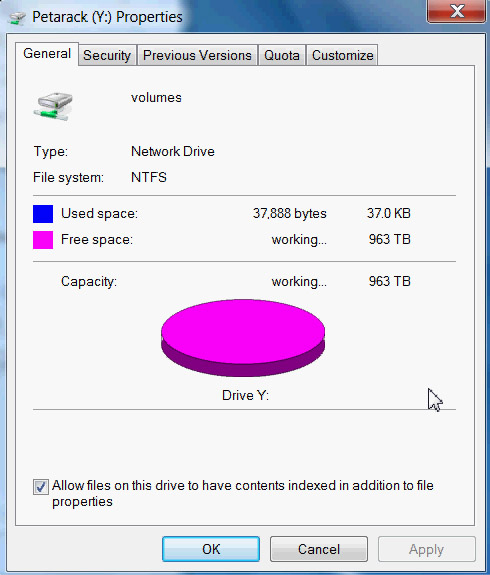

Typically, NAS devices run some form of Linux- or Unix-based operating system with a proprietary embedded GUI. There are even Windows-based NAS devices with a similar interface. In those appliances, partitions of 16 TB and larger are not recommended, and more than 256 TB is not supported at all. Only a few experimental file systems (like BTRFS) try to push those limits.
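Limits like these often stem from 32-bit block (or cluster) numbering, where the largest volume is 2^32 times the block size. Which file systems the appliances in question use is an assumption on our part, but with 4 KiB blocks that rule gives 16 TiB, and with 64 KiB clusters it gives 256 TiB, in line with the two figures above in binary units. A minimal Python sketch:

```python
KIB = 2**10
TIB = 2**40


def max_volume_bytes(address_bits: int, block_size: int) -> int:
    """Largest volume addressable when block numbers are `address_bits` wide."""
    return 2**address_bits * block_size


if __name__ == "__main__":
    for block_size in (4 * KIB, 64 * KIB):
        limit = max_volume_bytes(32, block_size)
        print(f"{block_size // KIB:>2} KiB blocks: {limit // TIB} TiB")
```

Real implementations may cap slightly lower (for example, by reserving a block number), so treat these values as ceilings.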

The 128-bit ZFS file system has been around for years, and it has a proven track record of enterprise reliability. To guard against accidental and silent data corruption, ZFS provides end-to-end checksumming and transactional copy-on-write I/O operations. Petabytes are peanuts for a 128-bit file system: not only can it address that much storage, but it can also present it in a single namespace.
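The two ideas in that paragraph can be illustrated with a toy model: new data always goes to a fresh location instead of overwriting the old block, and each block's checksum is stored in the pointer that references it rather than next to the data, so a silently corrupted block is caught on read. This Python sketch is a simplification, not ZFS's on-disk format; it uses SHA-256 from `hashlib` (ZFS supports several checksum algorithms, SHA-256 among them), and it does not model ZFS transaction groups.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class BlockPointer:
    """Where a block lives plus the checksum of its contents, held by the parent."""
    address: int
    checksum: bytes


class CowBlockStore:
    """Toy copy-on-write store: writes never overwrite live blocks in place."""

    def __init__(self) -> None:
        self._disk: dict[int, bytes] = {}
        self._next_free = 0

    def write(self, data: bytes) -> BlockPointer:
        # Always allocate a fresh address, so the previous version stays intact
        # until whoever holds the new pointer decides to use it.
        address = self._next_free
        self._next_free += 1
        self._disk[address] = data
        return BlockPointer(address, hashlib.sha256(data).digest())

    def read(self, ptr: BlockPointer) -> bytes:
        data = self._disk[ptr.address]
        # Verify against the checksum kept in the pointer, not beside the data,
        # so silent corruption of the block itself is detected.
        if hashlib.sha256(data).digest() != ptr.checksum:
            raise IOError(f"checksum mismatch at block {ptr.address}")
        return data

    def corrupt(self, address: int) -> None:
        """Simulate silent corruption by flipping one bit (block must be non-empty)."""
        damaged = bytearray(self._disk[address])
        damaged[0] ^= 0x01
        self._disk[address] = bytes(damaged)


if __name__ == "__main__":
    store = CowBlockStore()
    v1 = store.write(b"version 1")
    v2 = store.write(b"version 2")  # lands at a new address; v1 is untouched
    store.corrupt(v2.address)
    print(store.read(v1))           # b'version 1'
    try:
        store.read(v2)
    except IOError as err:
        print(err)                  # checksum mismatch at block 1
```

In a real pool with redundancy, a failed check like this is what lets ZFS fetch a good copy from a mirror or parity instead of returning bad data.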

Network Connectivity

You can present all or some of the storage on a Petarack as block-level storage. It supports iSCSI by default through two 10 Gb Ethernet controllers, as well as two 1 Gb ports. If you have a Fibre Channel-based network, Aberdeen says it can swap iSCSI for FC by installing FC HBAs and activating the Petarack's FC target capabilities through licenses.

If you prefer NAS or file-level access to your storage, you can always connect the Petarack to a 10 Gb switch instead.
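Those links also put the petabyte in perspective. This back-of-the-envelope Python sketch assumes the two 10 Gb ports can be aggregated perfectly and that the disks can keep up (both optimistic), and estimates how long it would take to move 1 PB; `efficiency` is a hypothetical factor for protocol overhead.

```python
SECONDS_PER_DAY = 86_400


def transfer_days(total_bytes: float, link_gbps: float, links: int = 1,
                  efficiency: float = 1.0) -> float:
    """Days needed to move `total_bytes` over `links` links of `link_gbps` each."""
    bytes_per_second = links * link_gbps * 1e9 / 8 * efficiency
    return total_bytes / bytes_per_second / SECONDS_PER_DAY


if __name__ == "__main__":
    petabyte = 1e15  # decimal petabyte
    print(f"2 x 10 Gb: {transfer_days(petabyte, 10, links=2):.1f} days")
    print(f"2 x 1 Gb:  {transfer_days(petabyte, 1, links=2):.1f} days")
```

Even at line rate, filling or draining the rack over both 10 Gb ports takes roughly four and a half days; over the two 1 Gb ports it would take about 46 days.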

Creating Shares

Cross-platform access to a single share has always been problematic. You can create a CIFS share on Linux that Windows and Mac clients can access. Linux administrators usually prefer NFS shares, though, and they rely on Access Control Lists to control who can access what. Sometimes they also need to reach those files from Windows. With ZFS, you can create a share of one type and present it as another.

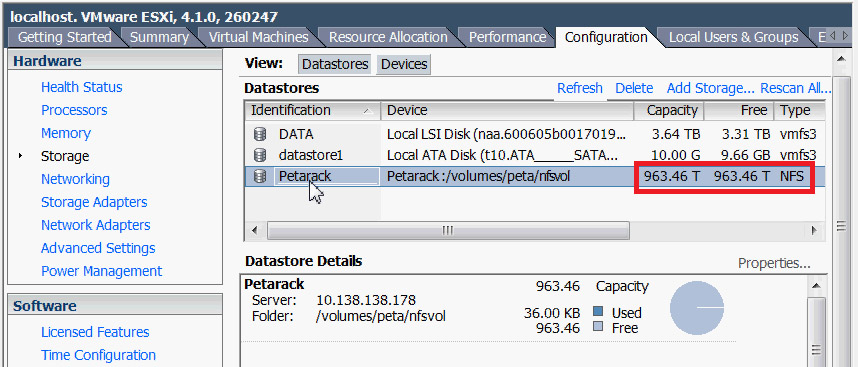

Storage In VMware

Many VMware administrators also prefer NFS shares. Block-level storage was limited to 2 TB partitions in vSphere 4.1, and vSphere 5.0 raises that limit to 64 TB. NFS shares are not subject to either limit.

Scotty, We Need More Power

I'm going to guess that most folks will simply marvel at a rack like this for the novelty of seeing 1 PB of capacity in one place. But what if you're the sort of IT guy who can really use an appliance as large as the Petarack? What sort of infrastructure does it require? Aberdeen calculates the Petarack's power draw at 7000 watts under full load. Within the rack, you'll find two 40 A power strips, each divided into two 20 A circuits. The load is distributed so that four 20 A twist-lock receptacles are required to drive a fully loaded Petarack.
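The article doesn't state the supply voltage, so this Python sketch tries two common North American values (120 V and 208 V, our assumption) and splits the 7000 W evenly across the four 20 A circuits, assuming a power factor of 1. It compares the result with the common practice of loading a breaker to no more than 80% of its rating for continuous loads.

```python
TOTAL_WATTS = 7000          # Aberdeen's full-load figure
CIRCUITS = 4                # four 20 A twist-lock circuits
CIRCUIT_RATING_A = 20
CONTINUOUS_FRACTION = 0.8   # common 80% rule of thumb for continuous loads


def amps_per_circuit(total_watts: float, circuits: int, volts: float) -> float:
    """Current per circuit if the load is split evenly (power factor of 1 assumed)."""
    return total_watts / circuits / volts


if __name__ == "__main__":
    limit = CIRCUIT_RATING_A * CONTINUOUS_FRACTION
    for volts in (120, 208):  # assumed supply voltages, not from the article
        amps = amps_per_circuit(TOTAL_WATTS, CIRCUITS, volts)
        status = "within" if amps <= limit else "over"
        print(f"{volts} V: {amps:.1f} A per circuit ({status} the {limit:.0f} A guideline)")
```

Either way, an even 1750 W split keeps each circuit under 16 A (about 14.6 A at 120 V and 8.4 A at 208 V), though the margin at 120 V is thin.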


Benihana: It's difficult to imagine 1 PB in an area the size of a deck of playing cards, but I'm going to remember today when it does.

clownbaby: I remember marveling at a similar-sized cabinet at Bell Laboratories in Columbus, Ohio, that held a whole gigabyte. That was about twenty years ago, so I would suspect that in another 20 we might be carrying PBs in our pockets.

cangelini (quoting haplo602): "from my point of view, this is a pretty low to mid end array :-)"

What do you work on, by chance? =)

razor512: Seems like a decent setup, but the electric bill will be scary running a system like that.

But then again, the servers I work on barely use 10 TB of storage.

Casper42 (replying to Razor512):

Let's see...

I work for HP and we sell a bunch of these for various uses:

http://www.hp.com/go/mds600

It holds 70 x 2 TB (and soon 3 TB) drives in a 5U footprint.

You can easily fit 6 of these in a single rack (840 TB), and possibly a bit more, but at that point you have to actually look at things like floor weight.

I am working on a project right now that involves video surveillance, and the customer bought 4 fully loaded X9720s, which have 912 TB usable (after RAID 6 and online spares). The full 3.6 PB takes 8 racks (4 of them have 10U free, but the factory always builds them a certain way).

The scary part is that once all their cameras are online, if they record at the higher bitrate the cameras offer, this 3.6 PB will only hold about 60 days' worth of video before it starts eating the old data.

They have the ability and long term plan to double or triple the storage.

Another use: instead of 2 TB drives, you can put in 70 x 600 GB 15K RPM drives.

That's the basis for the high-end VDI Reference Architecture published on our web page.

Each 35-drive drawer is managed by a single blade server, which turns the local disks into an iSCSI node. You then cluster storage volumes across multiple iSCSI nodes (known as Network RAID, because you are striping or mirroring across completely different nodes for maximum redundancy).

And all of these are only considered mid-level storage.

The truly high end ignores density and goes for raw horsepower, like the new 3Par V800.

So yes, I agree with haplo602. Not very high end when compared to corporate customers.