Nvidia Jetson AGX Xavier Developer Event: AI-Powered Robots Abound

The Robots are Coming

You may not realize it, but the age of AI-powered robots is already upon us. From farms to construction sites and factories, and even college campuses, automated machines are quickly fanning out to new use cases, albeit quietly. But unlike the broken promises we’ve heard for the last several decades about the coming robot revolution, we truly are on the cusp of automated helpers everywhere.

While robots may seem relatively simplistic from the exterior, they are anything but. Compute power had long held the industry back on several axes, but the rise of artificial intelligence has proven to be the breakthrough the industry needed.

It doesn't just require sheer compute horsepower, either: there's a variety of data that has to be crunched to power a truly autonomous machine. These applications require systems that can ingest high-resolution video, even up to 4K, crunch data from complex arrays of sensors, encode those streams and then run convolutional neural networks to analyze the incoming data. LIDAR systems and inference engines for path tracing are also an absolute must. That means the real challenge is grappling with the crushing influx and variety of data that needs to be processed.
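To put the 4K video requirement in perspective, here is a rough back-of-the-envelope sketch of the raw data rate of a single uncompressed 4K stream. The 3 bytes per pixel (8-bit RGB) and 30 frames per second are our own illustrative assumptions, not figures from Nvidia or its partners, and real camera pipelines often use other pixel formats.

```python
def raw_video_rate_mb_s(width, height, bytes_per_pixel, fps):
    """Uncompressed video data rate in megabytes per second (1 MB = 10**6 bytes)."""
    return width * height * bytes_per_pixel * fps / 1_000_000


# Assumed parameters (not from the article): 3840x2160, 8-bit RGB, 30 FPS.
uhd_rate = raw_video_rate_mb_s(3840, 2160, 3, 30)
print(f"One raw 4K stream: {uhd_rate:.1f} MB/s")
```

Under those assumptions, a single camera produces roughly 746 MB of raw pixels every second before any LIDAR or other sensor data is added, which is why on-board encoding matters so much.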

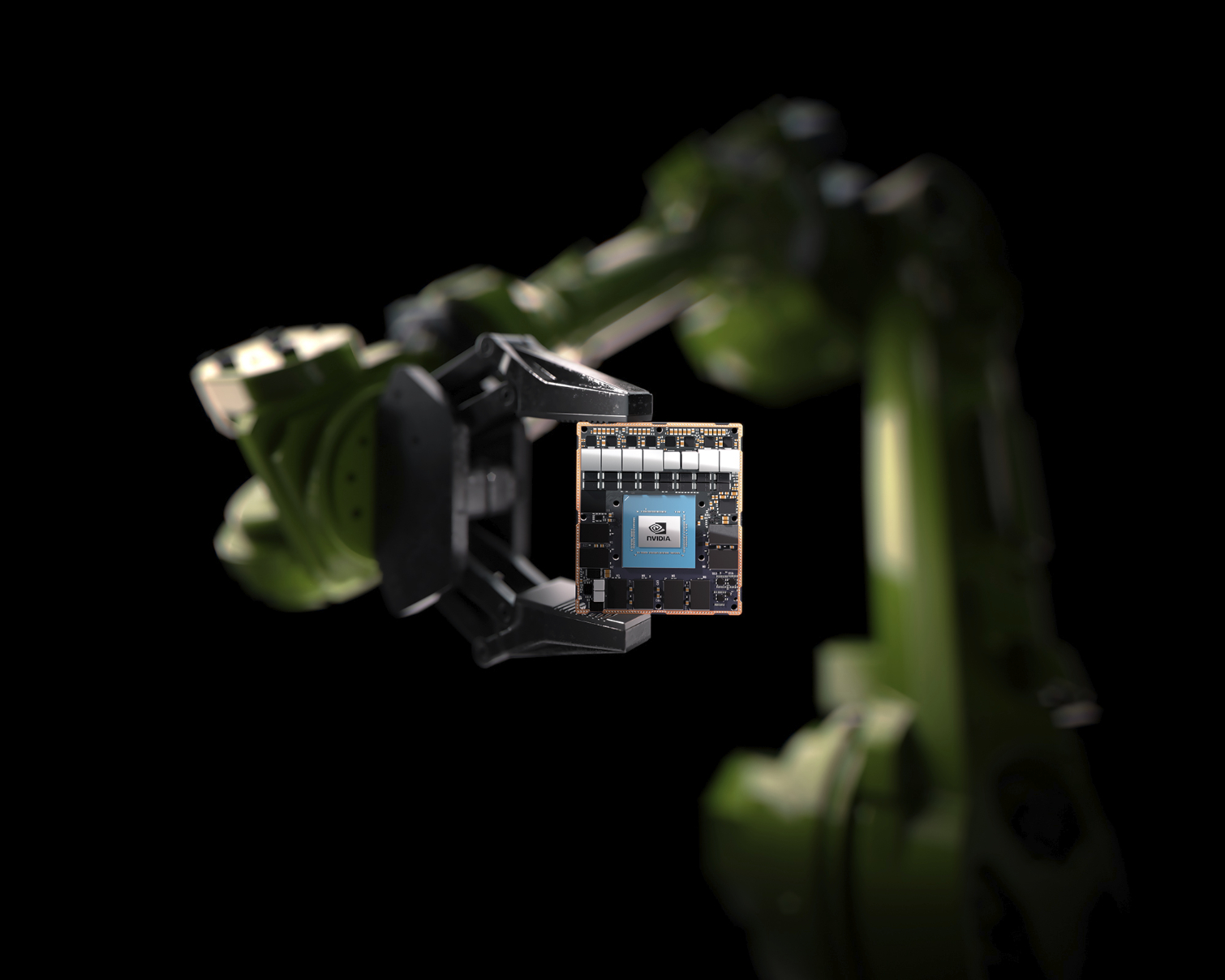

That's where Nvidia hopes its Jetson platform can step in. The third-gen Jetson AGX Xavier module processes up to 30 trillion operations per second through the combined power of its 512-core Volta GPU, two NVDLA engines, vision processors, and eight-core ARM processor. Overall, the SoC can handle a tremendous amount of incoming data from a plethora of sensors, crunch it into a usable form that can be analyzed by advanced AI neural networks, and thus enable a wide range of truly autonomous vehicles.

Nvidia's Jetson TX2 enjoys broad industry uptake, and the company hopes it can find the same success with its new AGX Xavier. Let's look at the machines the current-gen Jetson TX2 powers, and then dive into the AGX Xavier details.

What an Endeavor

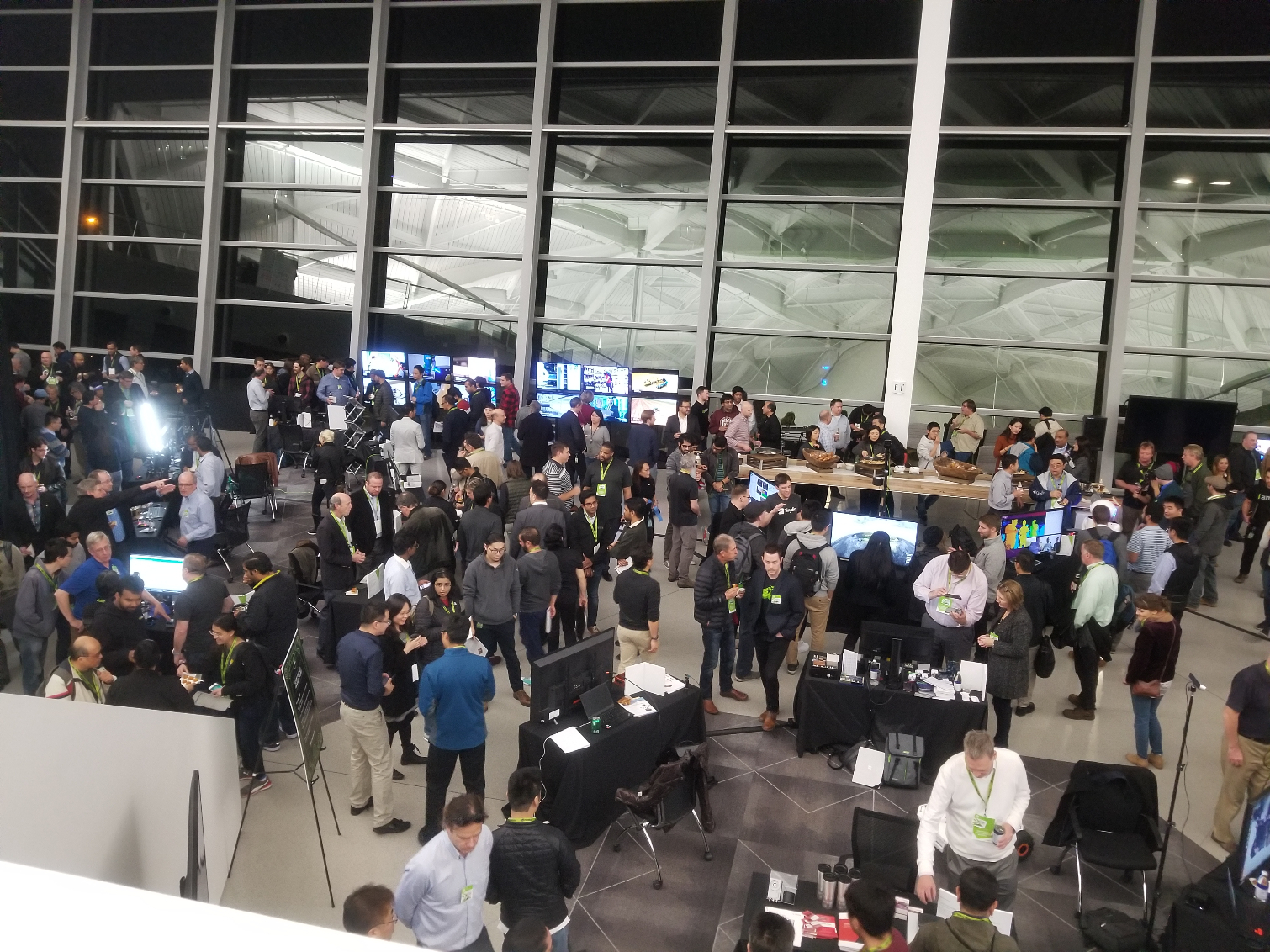

We visited Nvidia's $370 million Endeavor headquarters in Santa Clara, California, to attend the company's Jetson Developer Event. The sprawling facility features an open floor plan that houses 2,500 employees. The spacious building has 500,000 square feet of floor space spread across two floors. From the shape of the ceiling panels to the skylights, triangle shapes dominate the design.

Nvidia's Jetson platform now has over 200,000 developers working on its products worldwide, spread among 1,800+ customers. These customers range from small startups to huge outfits like Yamaha, Komatsu, and JD.com. Several of Nvidia's partners hosted displays with their latest wares.

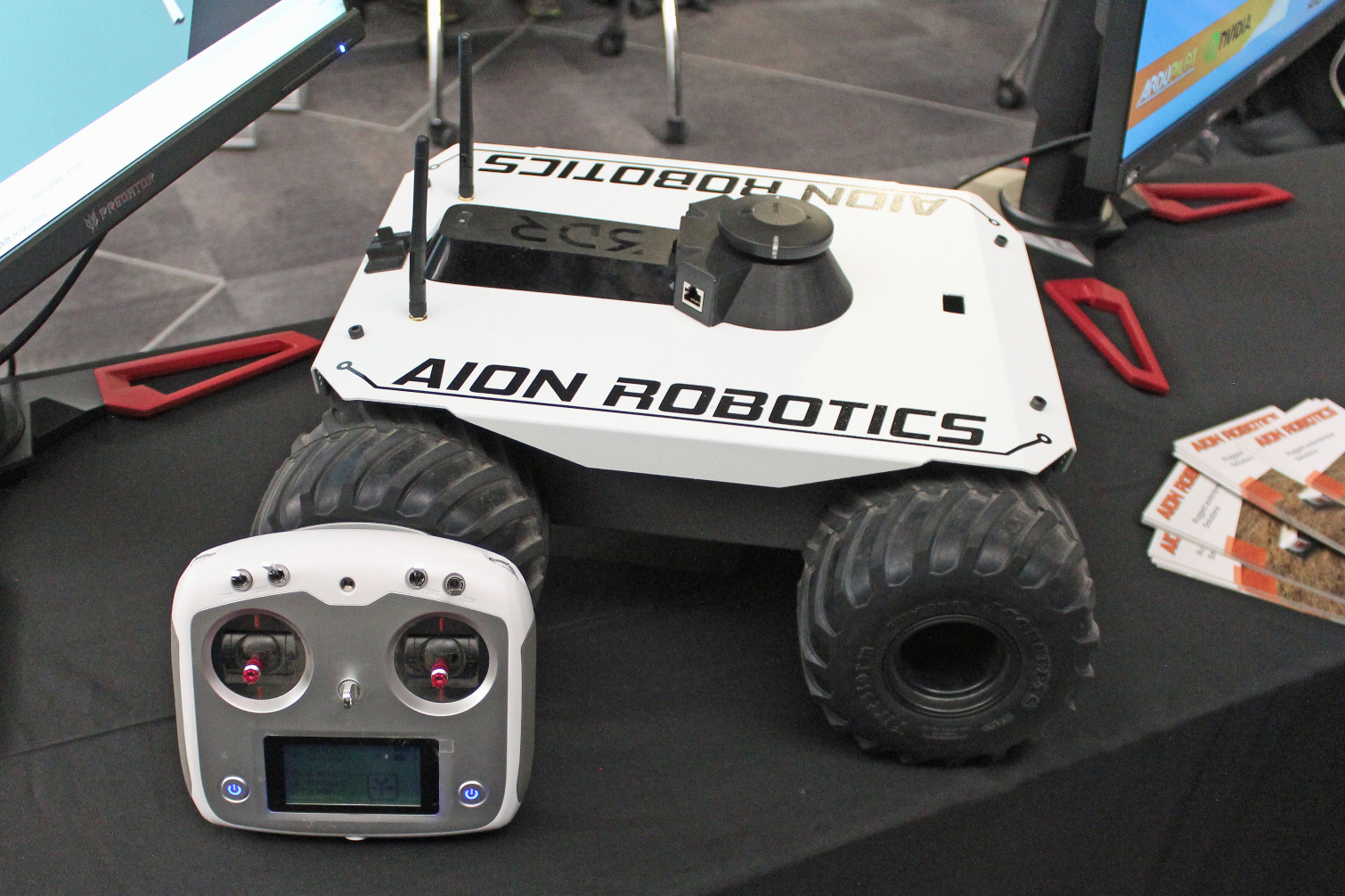

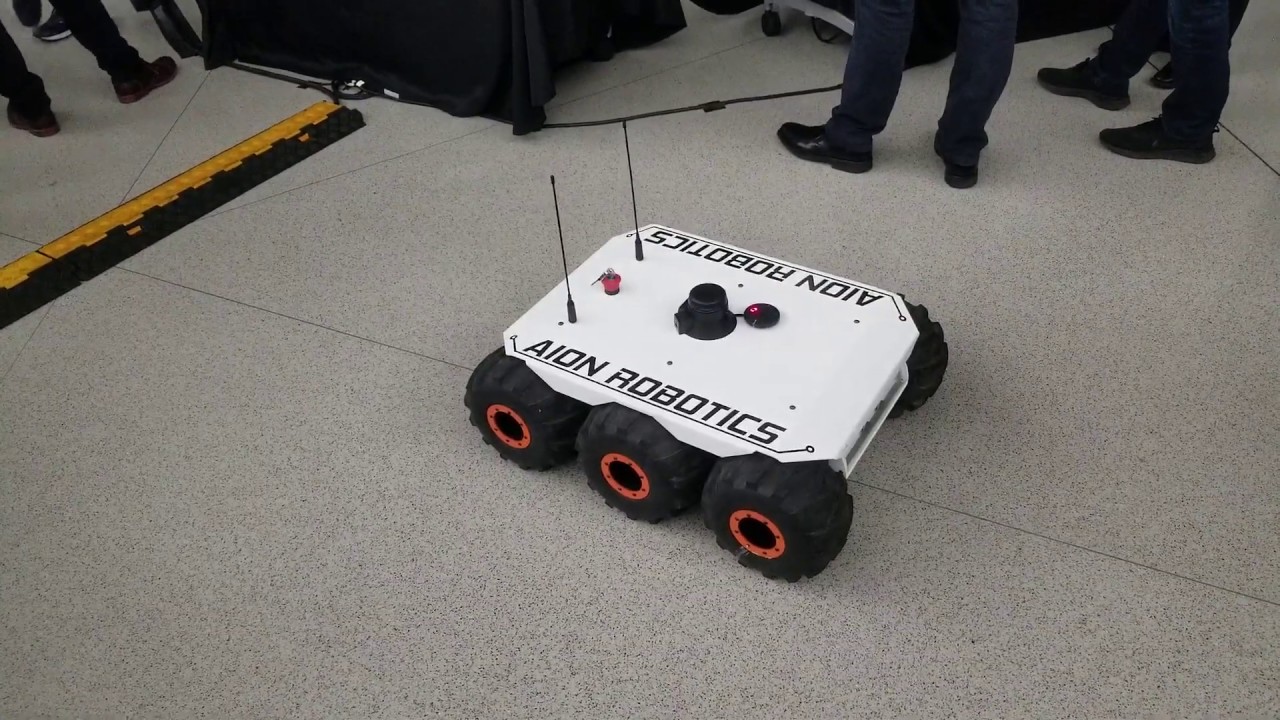

AION Robotics M6 Autonomous Drone

AION Robotics focuses on building rugged autonomous solutions that it can use as a platform for various applications. AION designed its M6 robot, which uses a Jetson TX2, for tasks like autonomous patrols, surveying and mapping, gas and soil sampling, and industrial inspections. The company also says the platform can be used for military applications.

The drone is agile, but it only moves at roughly 6 miles per hour. As with most drone platforms, the real magic lies in the data processing and sensor arrays. The company can then apply these key ingredients to other bigger and faster vehicles or machines.

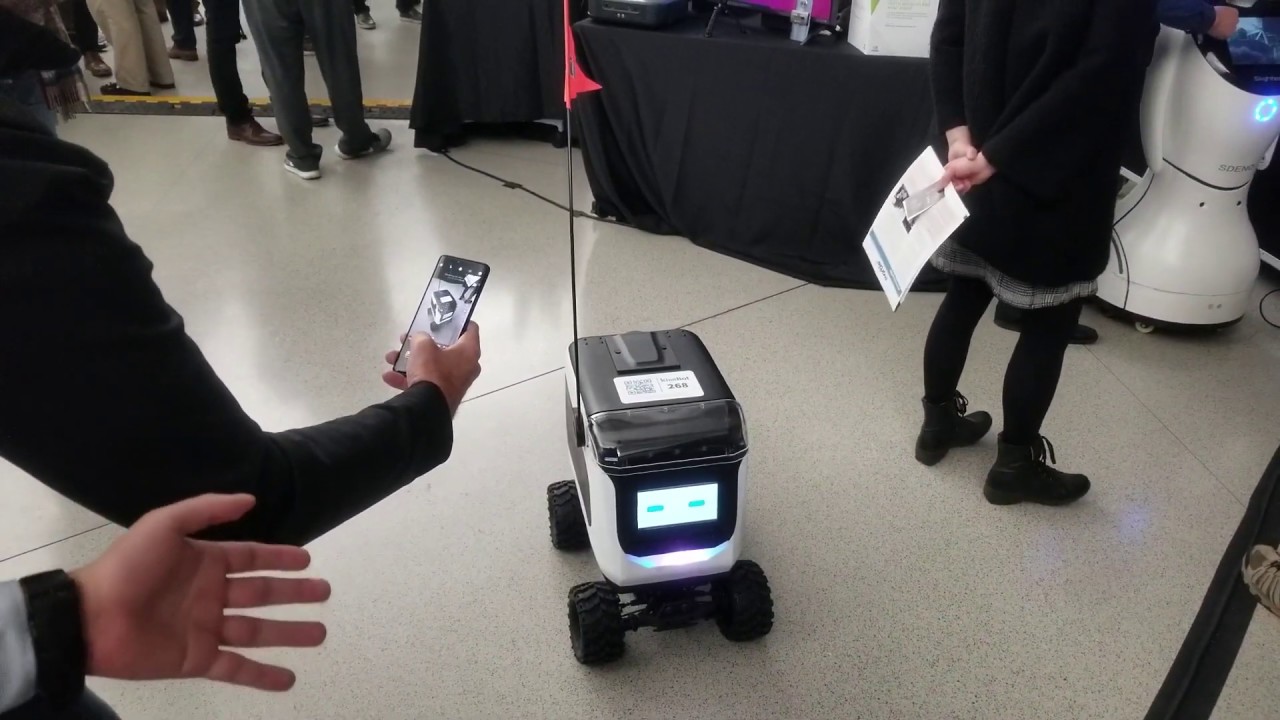

Kiwibot Food Delivery Drone

The Kiwibot is a speedy little delivery drone that comes with an insulated compartment for food delivery. This bot runs on a Jetson TX2 that processes data from six cameras as the robot delivers food around UC Berkeley's campus, but Kiwibot has numerous other bots under development. These drones operate on normal city streets and can detect objects autonomously and navigate around them. They can also read traffic signals so they can cross streets unassisted.

Students at UC Berkeley download an app and order food, which is then sent via the automated drone to their location. The service began in 2017. The company claims its average service time of 27 minutes is 65% faster than couriers. The service is also less expensive -- a normal courier charges roughly $9 per delivery, while Kiwibot delivers for $2.80.
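The company's figures leave some room for interpretation, so here is a quick sketch of what they imply. The two readings of "65% faster" below are our own assumptions; Kiwibot did not specify which one it means, and the implied courier times are derived values, not numbers the company provided.

```python
kiwibot_minutes = 27   # average service time claimed by Kiwibot
kiwibot_fee = 2.80     # dollars per delivery
courier_fee = 9.00     # dollars per delivery, approximate

# Reading A (assumption): "65% faster" means the delivery takes 65% less time.
courier_minutes_less_time = kiwibot_minutes / (1 - 0.65)

# Reading B (assumption): "65% faster" means 1.65x the delivery rate.
courier_minutes_higher_rate = kiwibot_minutes * 1.65

# Fraction of the courier fee saved per delivery.
fee_saving = 1 - kiwibot_fee / courier_fee

print(f"Implied courier time, reading A: {courier_minutes_less_time:.0f} min")
print(f"Implied courier time, reading B: {courier_minutes_higher_rate:.0f} min")
print(f"Fee saving per delivery: {fee_saving:.0%}")
```

Either way, the quoted prices work out to a saving of roughly 69% per delivery, while the implied courier time ranges from about 45 to about 77 minutes depending on the reading.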

Marble Last-Mile Delivery

Marble designed its intelligent delivery robot to handle heavier items to augment last-mile delivery services. The current model can shuttle around an 80-pound payload, and like most drones, is electric-powered. This model sports seven Intel RealSense D415 camera-based depth sensors (the rectangular gray boxes on the front and sides) and six additional RGB cameras. The round gray and black protrusion on the upper front of the robot is the LIDAR system (a radar that uses light instead of radio waves). Two large black antenna boxes flank the LIDAR unit.

This robot is a good example of the benefits of Nvidia's AGX Xavier platform, but only because it doesn't use it. The internal system features an x86 host processor (the company won't reveal the model) in tandem with an Nvidia GeForce GTX 1060 GPU. That means the battery-powered vehicle also has to power what is essentially a desktop PC in addition to its other sensors and motors, which obviously has an impact on battery life. A Marble representative explained that some functions, such as encoding video and sensor integration, perform better on a CPU than on a GPU, which necessitates more than just a low-power CPU to host the graphics card.

As part of Nvidia's AGX Xavier launch, the company presented benchmark results that showed its SoC providing more performance than a Core i7 system paired with a GeForce GTX 1070, but at one-tenth the power consumption and one-tenth the physical space of the full system. The Xavier SoC also handles all sensor fusion and encoding/decoding tasks, making it a natural fit for robots that need multiple types of compute integrated into a single system.

Marble isn't sharing whether it is moving forward with an AGX Xavier system, but we imagine it is under consideration.

MYNTAI Otaru Robots

MYNTAI developed a suite of software and hardware that includes MYNT Eye visual and binocular modules that it integrates into its robots. The company uses a mixture of Nvidia's technologies, including Jetson TX1, TX2, and the AGX Xavier modules, for its products.

The Otaru robot doesn't look like it can do many tasks–and it can't. Instead, the robot is designed for exhibitions, shopping malls, leading guided tours, and various service tasks, like a gas station attendant. The robot also uses facial recognition technology to identify individuals, which could be useful for corporate security tasks, and can also hold conversations in multiple languages. Part of that capability stems from its speech recognition algorithms that the company claims have a 98% accuracy rate.

Like many robotics companies, the company is focused on developing a suite of technologies that it can apply to new applications over time. That includes its MYNT Eye and depth modules that it sells to other robot vendors.

Skycatch Precision Mapping and Inspection

Large corporations spend billions of dollars a year inspecting and maintaining facilities and land, but much of this cost boils down to human factors, such as travel, pay, and the time it takes to complete tasks. Not to mention the price of ensuring employee safety.

Aerial drones are penetrating these inspection roles at a rapid pace. For instance, the Skycatch Explore1 drone is fully autonomous, so a human doesn't provide specific guidance as the drone goes about its tasks. The drone features two cameras for data capture and can map and inspect objects within a 1cm tolerance. You can also swap out one of the cameras for a thermal module. The drone can inspect or map up to 45 acres of land on a single battery charge, or hover for up to 14 minutes (with a camera payload).

As expected, the unit comes with a remote control that allows for manual control, but Skycatch designed the drone to complete its tasks autonomously. The system comes with an Edge1 GNSS base station that uses an Nvidia Jetson TX2 module for data processing and boosts GPS accuracy to within a 5cm tolerance. It also analyzes the data captured by the drone.

The full solution also comes with a software suite designed to simplify drone control. Users can quickly map out autonomous missions, and the software also provides fine-grained data analysis. Skycatch caters to large corporations, particularly in the construction and mining industries, that save tremendous amounts of money using autonomous systems. As a result, these companies don't balk at the $35,000 yearly lease price for the drone, base station, remote control, and data analysis software.

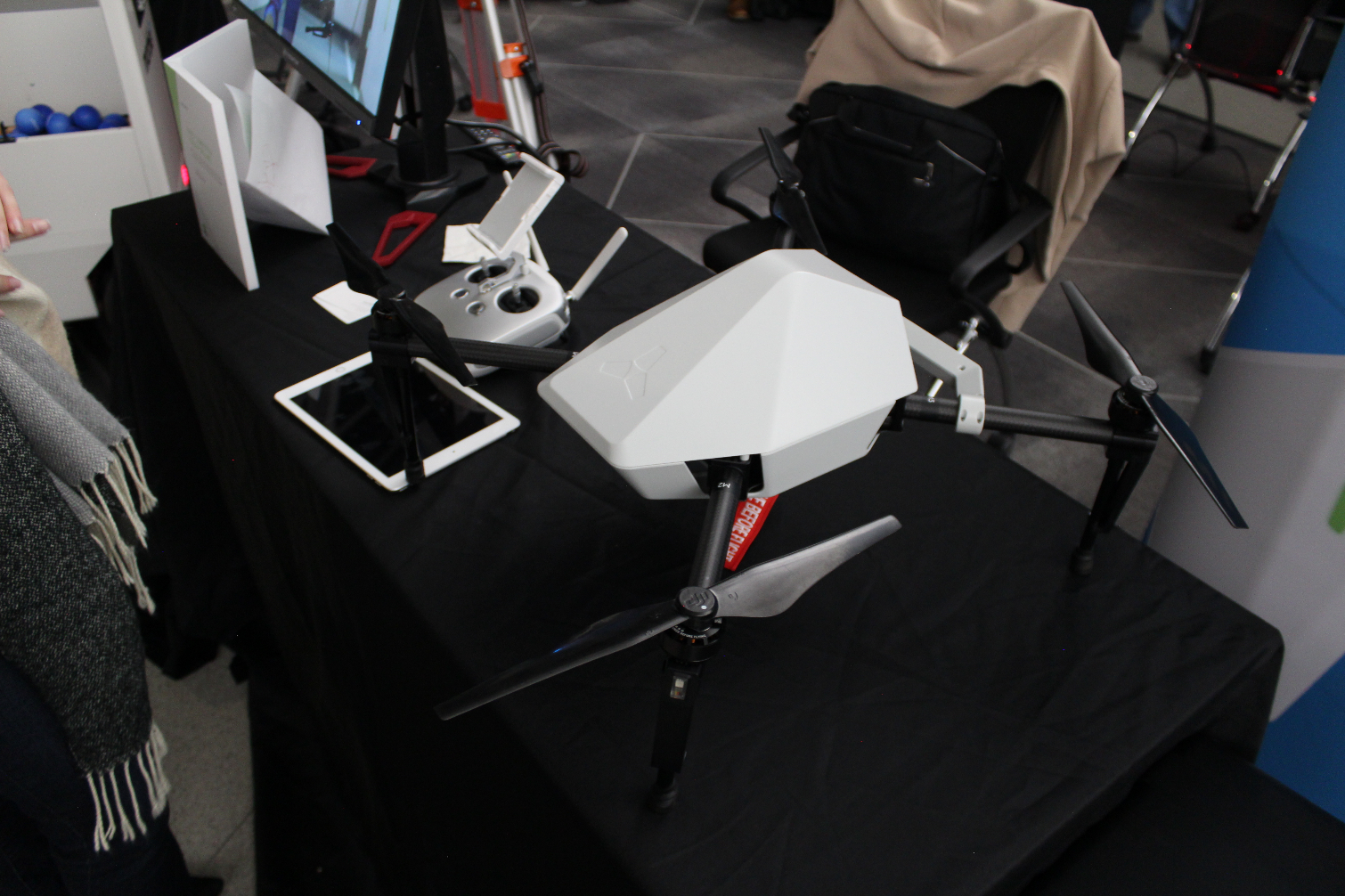

DJI Manifold 2 Autonomous Drone Kit

DJI is a well-known supplier of drones around the world, but like many drone companies, it is also diligently working to enable the autonomous drone ecosystem. Hence, the Manifold kit, which is an embedded computer that you can program using the company's Onboard SDK. That allows anyone, provided they have (or learn) coding skills, to turn a Matrice 100 or Matrice 600 drone into a truly autonomous machine. Manifold 2 features a built-in Ubuntu Linux operating system that supports CUDA, OpenCV, and ROS to speed the process.

We can see the small, lightweight (sub-200g) part strapped to the top of this drone in tandem with an array of sensors. This small part holds the Jetson TX2, and future models will house AGX Xavier modules. The Jetson TX2 model pulls a maximum of 15W of power, but you can adjust it dynamically based on load, disabling portions of the quad-core Tegra CPU when they aren't needed. This is a crucial consideration given the limited battery life available on a drone.

Smart AG for Automated Farming

Farmers face the same situation every year: limited temporary labor during peak harvest and planting seasons. Smart AG looks to fix that issue by automating key farming machinery, like grain carts. These trucks roll alongside a combine as it threshes the wheat that is fed into the cart.

Smart AG's device controls the steering column electronically through the tractor's onboard computer, meaning it doesn't mount on the steering column, while an array of cameras and radars takes the place of the human driver. The system also includes a range of other equipment, such as remote controls for manual operation, emergency stop buttons mounted on the exterior of the truck, and networking equipment.

Smart AG trained the neural network inside the grain cart to follow the combine until it fills the grain cart with wheat, and then the cart peels off to deliver the wheat to another waiting vehicle. The company also plans to expand its automation systems to other farming operations in the future.

The product propels farmers into the space age with a cloud-based software system that allows farmers to define staging and uploading locations in the fields. It also provides more granular control of day-to-day operations.

Smart AG's system was one of the few at the event that had already upgraded to the AGX Xavier module.

Skydio R1

The show floor had several commercial-focused robots on display, but the Skydio R1 drone is designed for consumers. This drone flies itself autonomously to film its assigned subject in 4K while they go about their tasks, such as riding a bike, jogging, or even driving a vehicle. The drone is easy to control through Apple and Android phones, or even with the Apple Watch. The drone uses various cinematic capture techniques, such as lead, orbit, dronie, or boomerang, to capture video during its assignment, and you can even create your own custom effects. You can also pilot the drone yourself while the AI algorithms keep the drone (but not you) safe from colliding with nearby obstacles.

The Skydio R1 sounds amazingly simple to use, but that belies the complexity underneath. The system uses an array of 13 cameras paired with the Jetson TX1 to predict movements up to four seconds in advance.

The best part? You can actually buy this today. The Skydio R1 is available now for $1,999, and the company also encourages developers to use its Skydio Autonomy Platform, a portfolio of software tools, to develop their own differentiated products on the platform. Hopefully, those don't involve weapons, but it’s probably only a matter of time.

Live Planet 4K VR and 360 Video Streaming

Live Planet was on hand to demo its 4K VR and 360 video camera system. This system, which includes access to a cloud-based software platform, allows developers to quickly create and distribute 4K video to a plethora of VR platforms, like Samsung Gear VR, HTC Vive, Facebook Live, YouTube Live Streaming, Oculus Connect, and Google Cardboard.

The camera system sports 512GB of onboard data storage, a must given the array of 16 Full HD cameras that can livestream 4K-per-eye video at 30 FPS. It can also stream recorded video at 60 FPS and take 8K still images.

The Nvidia Tegra X1 supplies the computational horsepower, but the full system doesn't come cheap. The system is now available for preorder for $9,995, with deliveries taking place in January 2019. We expect the high price to keep the device relegated to professional applications for now, but as with all new technology, prices should drop over time.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.