Nvidia Jetson AGX Xavier Developer Event: AI-Powered Robots Abound

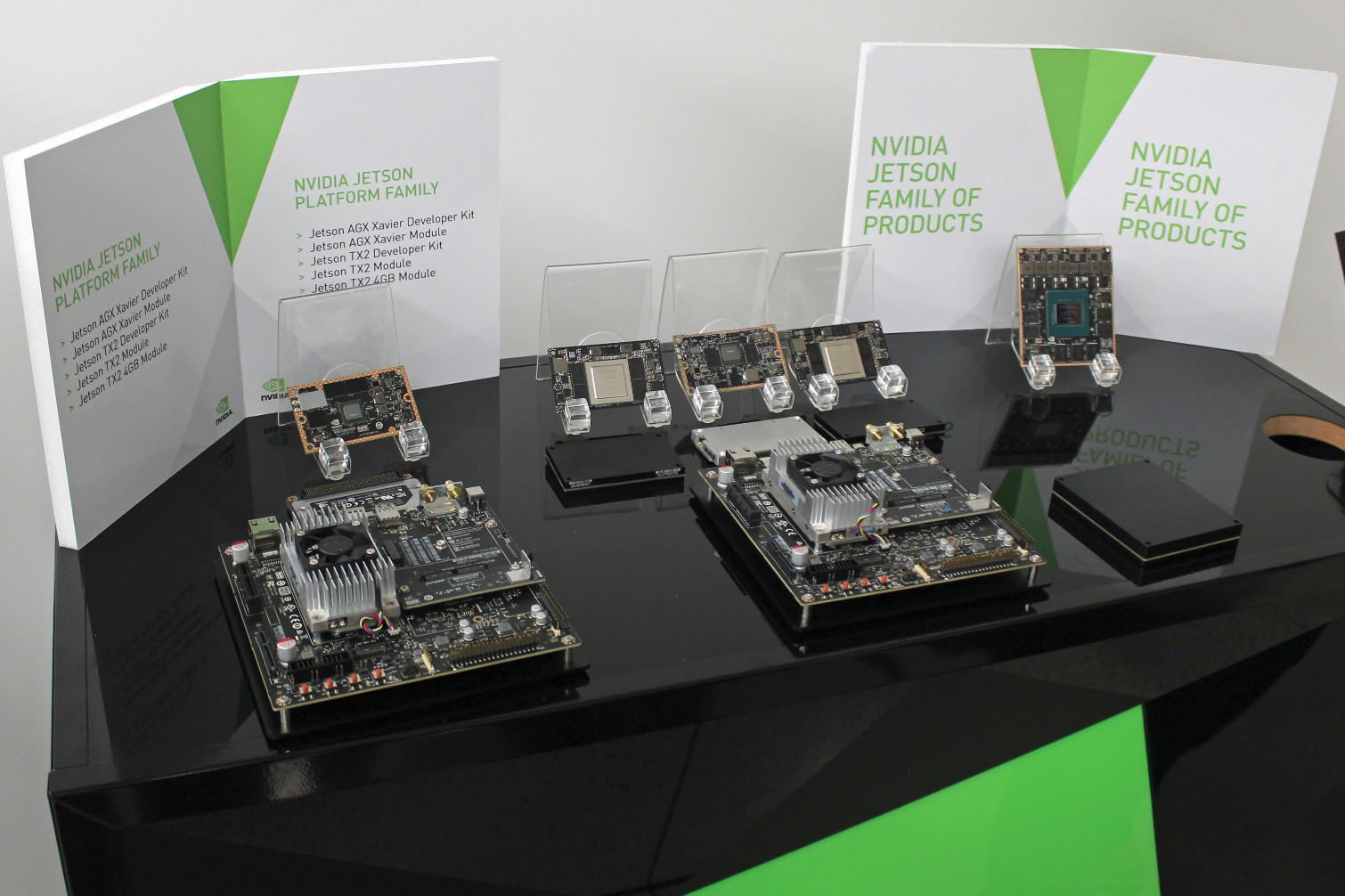

The Jetson Family

Now that we've seen some of the devices that Nvidia's Jetson enables, let's take a closer look at the processors behind the curtain. Nvidia's first Jetson, the TX1, debuted in 2016, followed by the Jetson TX2 in 2017. Nvidia is keeping up that yearly cadence with the AGX Xavier, which first became available as a developer kit in September 2018 and is now fully available for purchase.

Here we can see the developer kits in the foreground, with the original Jetson TX1 developer kit on the left. That big design continued forward with the TX2 series, but now we can see the fruits of Nvidia's labors in the form of the developer kit on the right. This slim box provides much more computational power than its predecessors but comes with a remarkably reduced footprint and power envelope. You’ll still need to affix a heatsink to the AGX, but it represents a significant space saving compared to the previous-gen devices.

Speaking of developers, Nvidia has rapidly expanded its market share in the autonomous space. The company says that it now has 200,000 developers working in the Jetson ecosystem, a 5x increase compared to the spring of 2017. The company has also increased its customer base by 6x and has gained 2.5x more formal ecosystem partners within the same time period.

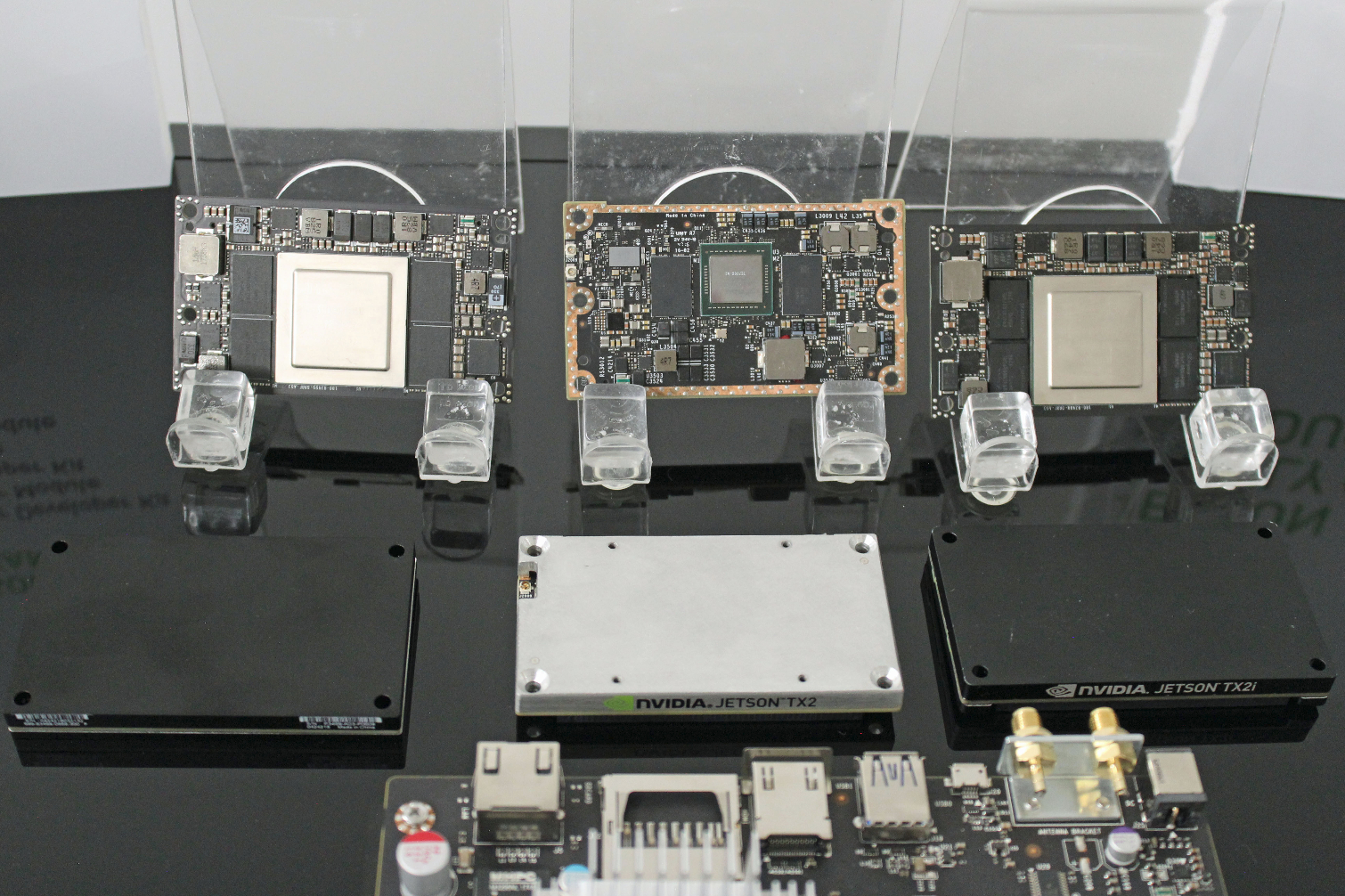

The Jetson TX2, TX2i, and TX2 4GB

Here we can see the previous-gen Jetson TX2 devices. As part of the launch, Nvidia also announced the new TX2 4GB model. The TX2 4GB comes with half the memory capacity of the standard model, which reduces the price to $299 each in 1,000-unit quantities, a decline from $499. Nvidia also has the TX2i for industrial applications. This model comes with enhanced resilience to temperature extremes and a 10-year warranty, as opposed to the standard five-year warranty period.

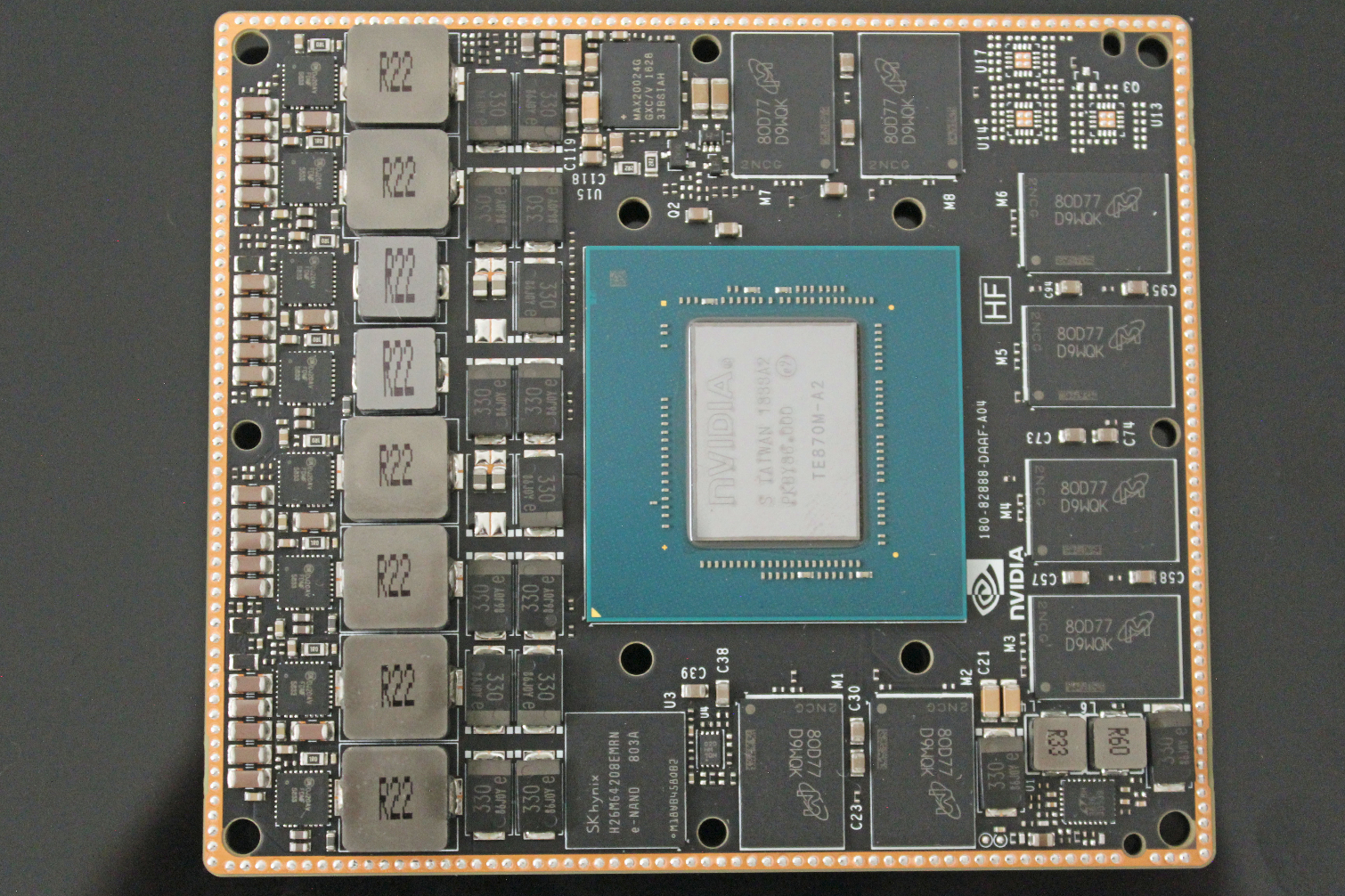

Up Close With Jetson AGX Xavier

This Jetson AGX Xavier unit can be yours for $1,100 in quantities of 1,000 units or more. Xavier runs in 10W, 15W, and 30W power envelopes, but it's all the same silicon: the developer simply picks one of the predefined power profiles or creates a custom profile based on the use case. Nvidia claims AGX Xavier offers 20 times the performance of its predecessor along with ten times the energy efficiency.
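
As a rough illustration of what picking a predefined profile amounts to, here is a minimal Python sketch that chooses the largest of the three envelopes named above that fits a given power budget. The `pick_profile` helper and its `budget_w` parameter are hypothetical names made up for this example; they are not part of Nvidia's tooling, and actually applying a profile on the device is done with Nvidia's own utilities, which this sketch does not touch.

```python
# Predefined AGX Xavier power envelopes mentioned in the article, in watts.
PREDEFINED_PROFILES_W = (10, 15, 30)


def pick_profile(budget_w, profiles=PREDEFINED_PROFILES_W):
    """Return the largest predefined envelope that fits within budget_w.

    Hypothetical helper for illustration only; not an Nvidia API.
    """
    fitting = [p for p in profiles if p <= budget_w]
    if not fitting:
        raise ValueError(
            f"No predefined profile fits a {budget_w} W budget; "
            "a custom profile would be needed."
        )
    return max(fitting)


if __name__ == "__main__":
    # A robot with 20 W to spare for compute lands on the 15W profile.
    print(pick_profile(20))
```

A budget below 10W falls outside the predefined envelopes, which is where a custom profile would come in.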

The SoC comes with a 512-core Volta GPU armed with 64 Tensor cores. That's complemented by two NVDLA (Nvidia Deep Learning Accelerator) cores and an eight-core Carmel ARMv8.2 64-bit processor that wields 8MB of L2 cache and 4MB of L3.

The SoC also houses Nvidia's Vision Accelerator engine and can ingest camera inputs at 128 Gbps. Xavier also offers 16 PCIe 4.0 lanes. The 16GB of onboard LPDDR4x runs at 2,133 MHz, enabling up to 137 GB/s of throughput.
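
The 137 GB/s figure can be sanity-checked with a little arithmetic. The sketch below assumes a 256-bit memory interface, which the article does not state, and treats the 2133 figure as the memory clock in MHz with two transfers per clock (double data rate). The function name and parameters are illustrative only.

```python
def peak_bandwidth_gb_s(bus_width_bits, clock_mhz, transfers_per_clock=2):
    """Theoretical peak memory bandwidth in GB/s (1 GB = 1e9 bytes)."""
    transfers_per_second = clock_mhz * 1e6 * transfers_per_clock
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second * bytes_per_transfer / 1e9


# Assumption: 256-bit LPDDR4x interface clocked at 2133 MHz.
xavier_bw = peak_bandwidth_gb_s(bus_width_bits=256, clock_mhz=2133)

if __name__ == "__main__":
    print(f"{xavier_bw:.1f} GB/s")  # prints "136.5 GB/s"
```

Under those assumptions the result rounds up to the 137 GB/s quoted above. This is a theoretical peak; sustained throughput in real workloads will be lower.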


All the various compute components operate on the same coherent memory pool. This eliminates copying data in and out of the various units, each of which has its own standard SRAM and local caches. This approach maximizes the memory resource and is one of the keys that enables the diverse computing units to work in tandem and deliver an impressive performance-to-power ratio.

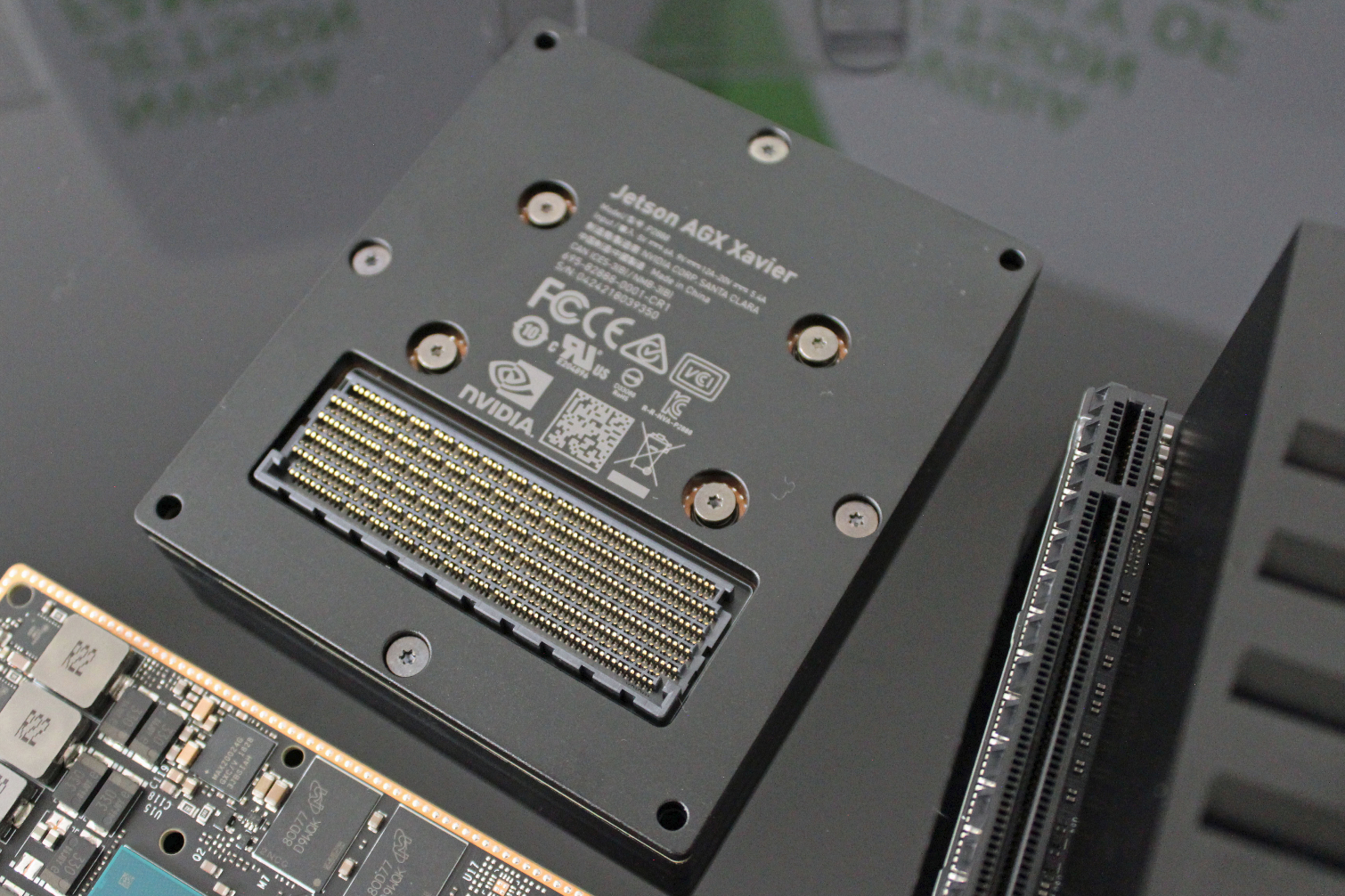

Jetson AGX Xavier - Mezzanine Connector

Perhaps one of the biggest keys to Jetson's success is backward compatibility. Here we can see the unit's housing and the mezzanine connector. This connector is pin-compatible with both the TX1 and TX2 models, allowing the AGX Xavier to serve as a simple drop-in replacement that provides a massive performance boost and power reduction to autonomous machines built on the previous-gen platforms.

Of course, hardware compatibility is only half of the picture: the software must be backward compatible, too. That's the case here, thanks to Nvidia's dedication to keeping all its Jetson products, even the first-gen TX1, updated to the latest versions of its JetPack SDK. The SDK includes CUDA, Ubuntu, cuDNN, and TensorRT 5.0, providing a true plug-and-play capability for existing devices.

Nvidia's AI Software and Services Partners

In the end, it's all about ecosystem partners. Nvidia has built up a robust network of big-name partners for end devices, like Toshiba, Yamaha, and Komatsu, but it also collaborates with a web of sensor-producing partners to ensure hardware compatibility with the latest technology. That same work carries over to collaborating with hardware and design services for customers, along with a robust network of AI software and services partners.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.