The History of DOE Nuclear Supercomputers: From MANIAC to AMD's EPYC Milan

The United States Department of Energy (DOE) has been at the forefront of supercomputing for seventy years and has largely paved the way for the entire supercomputing industry. To celebrate that legacy, the agency had several displays at its booth at the Supercomputing 2018 tradeshow.

Although the agency was only officially established in 1977, it sprang from the US government's Manhattan Project, which was originally managed by the Army Corps of Engineers. That project introduced the world to the first atomic bomb during the waning days of World War II. The agency is still responsible for designing, building, and testing nuclear weapons, but it also steers the country's energy research and development programs.

The DOE's work began back in 1945 (before the age of transistors) with the first machine used to study the feasibility of a nuclear weapon, the famed vacuum tube-equipped ENIAC (Electronic Numerical Integrator and Computer). That work progressed to custom and vector processors, like the famous Cray-1, in the years spanning 1960 to 1989.

The years from 1992 to 2005 found the agency's supercomputers evolving to single-core processors and symmetric multiprocessing (SMP), while 2005 also rang in the first supercomputers with multi-core / SMP processors. But now we're in the age of accelerators, most notably GPUs, that have taken the supercomputing world by storm.

The agency currently hosts the Summit and Sierra supercomputers that rank #1 and #2 in the world, respectively. And now, after 70 years, the agency is on the cusp of deploying the world's first exascale-class supercomputers. These exascale machines will perform upwards of one quintillion (10^18) calculations per second, a 1,000x improvement over the petascale supercomputers that were the previous benchmark for mind-bending compute power.

All told, the far-flung agency manages 17 National Labs that include such well-known names as the Los Alamos National Laboratory, Oak Ridge National Laboratory, and Sandia National Laboratories, among many others. These facilities tout the most advanced supercomputers on the planet, but they all start with simple components.

The MANIAC

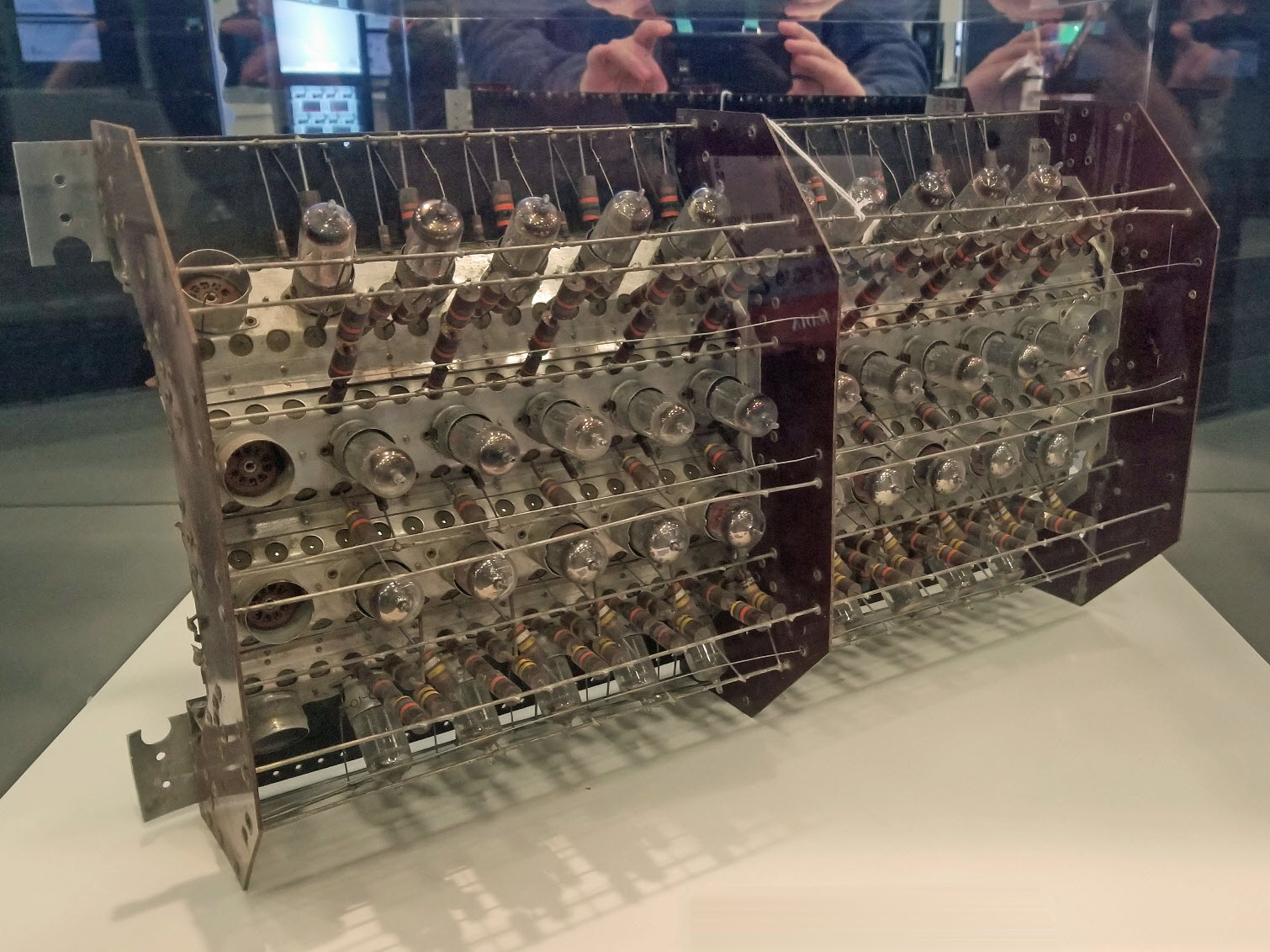

The ENIAC (Electronic Numerical Integrator and Computer) supercomputer gets all the attention in the history books, but so that we don't forget its brother with a much cooler name, the DOE had its MANIAC on display.

This module served as just one part of the MANIAC (Mathematical Analyzer, Numerical Integrator, and Computer) supercomputer that was built between 1949 and 1952 at the Los Alamos National Laboratory.

As a pre-transistor computer, this machine wielded 2,850 vacuum tubes and 1,040 diodes in the arithmetic unit alone. All told, it featured 5,190 vacuum tubes and 3,050 semiconductor diodes. This 1,000-pound computer generated precise calculations of the thermonuclear process and was one of the first computers based on the von Neumann architecture.

The CDC 6600

In 1966, the Control Data Corp. (CDC) 6600 was the fastest supercomputer on the planet with up to three megaFLOPS of performance. That's three million floating point operations per second. This freon-cooled supercomputer consisted of four cabinets with a single central CPU constructed of multiple PCBs. It also had ten peripheral processors that handled input and output operations. Seymour Cray, the father of the famous Cray supercomputers, designed the machine.

The Cray-1

The Cray-1 is perhaps one of the best-known supercomputers of all time, partially because of its C-shaped design and leather seating that earned it the distinguished moniker of "the world's most expensive love seat." This Cray-1 system, the first off the assembly line, made an appearance at the Supercomputing 2018 tradeshow. It was originally installed at the Los Alamos National Laboratory in 1976.

Unbeknownst to the casual observer, the speedy supercomputer was designed in a semi-circle to reduce the length of the wires connecting the individual components, which helped reduce latency and improve performance. The posh seating around the tower concealed the power delivery subsystem that powered the 115 kW machine. Overall, the machine weighed in at a beastly 10,500 pounds and consumed 41 square feet of floor space. The single freon-cooled processor sped along at 80 MHz and addressed 8.39 megabytes of memory spread across 16 banks. Storage weighed in at a mere 303 MB. This supercomputer delivered 160 megaFLOPS of performance, all for a mere $7.9 million.

Strap SANDAC On A Missile

What do you do if you need a supercomputer for missile navigation and guidance? You design and build one.

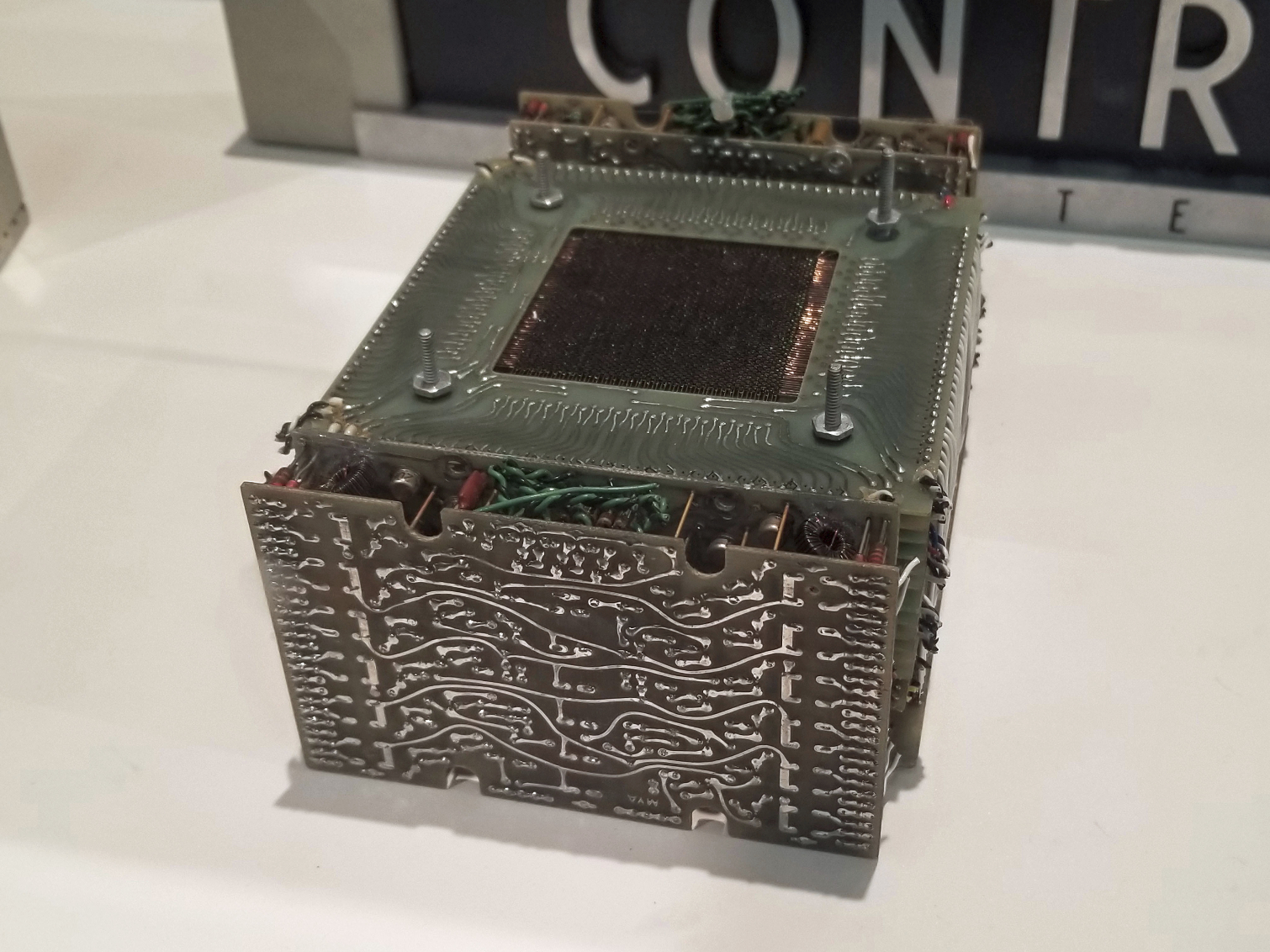

The Sandia National Labs Airborne Computer (SANDAC) supplied all the power of the Cray-1 supercomputer described above. But highlighting the speed of innovation during that time period, it packed all of that horsepower into a device the size of a shoebox that weighed less than 20 pounds. That's a big decrease in size from the 41-square-foot, 10,500 lb. Cray-1, but SANDAC arrived in 1985, nine years after the Cray machine.

SANDAC consisted of up to 15 custom-built MIMD processors from Motorola and offered up to 225 Million Instructions Per Second (MIPS) of performance. This was one of the first parallel computers, and the first parallel computer to fly on a missile. Like any supercomputer, it had its own memory and included modules for input and output operations. Durability was a key tenet of the design: it was meant to ride in intercontinental ballistic missiles, after all. As such, the unit was designed to survive intense vibration, acceleration, and temperatures up to 190 degrees Fahrenheit.

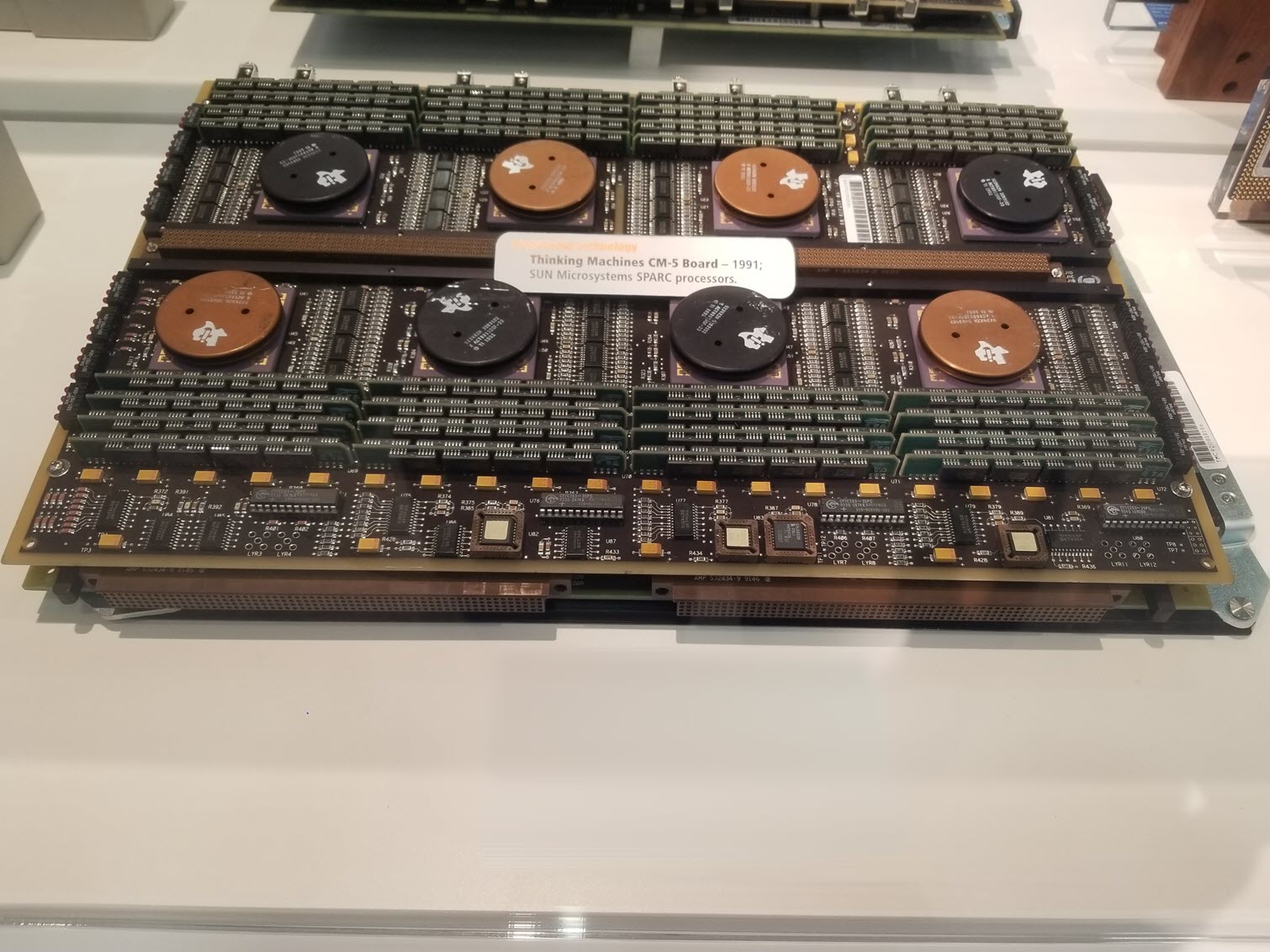

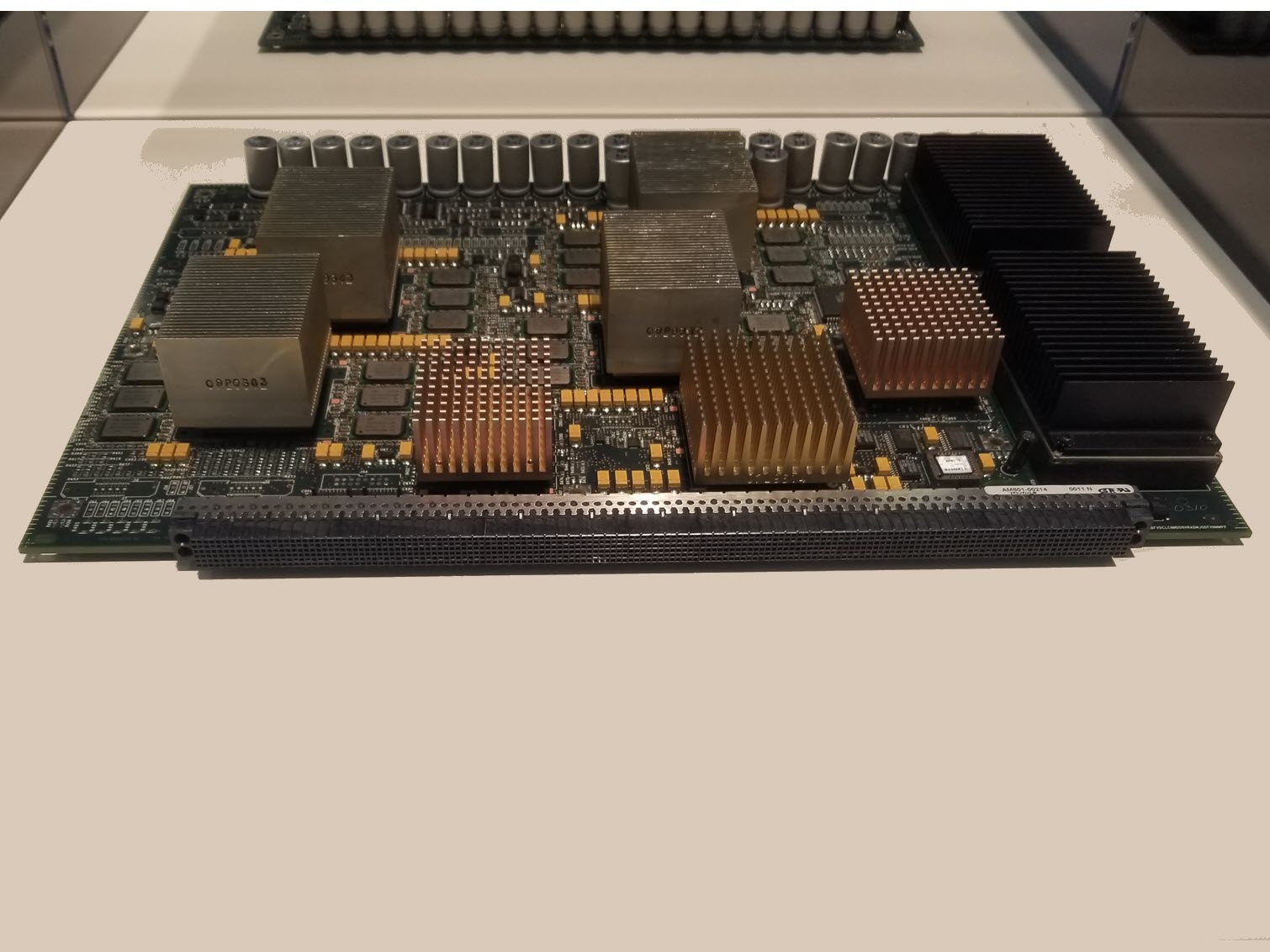

The Thinking Machines

Thinking Machines Corporation's (TMC) Connection Machines (CM) debuted in 1991. This board comes from the CM-5 machine, which wielded 1,024 SPARC RISC processors from Sun Microsystems workstations. This was one of the early supercomputers that paved the way for the massively parallel architectures found in modern supercomputers. These supercomputers were based on the doctoral research of Danny Hillis at MIT during his search for alternatives to the tried-and-true von Neumann architecture.

Pictured above is a single circuit board from the supercomputer. A CM-5 supercomputer sat atop the TOP500 list in 1993, meaning it was the fastest supercomputer in the world, with 131 gigaFLOPS of performance. CM-5 systems continued to dominate the top ten rankings for several years after that.

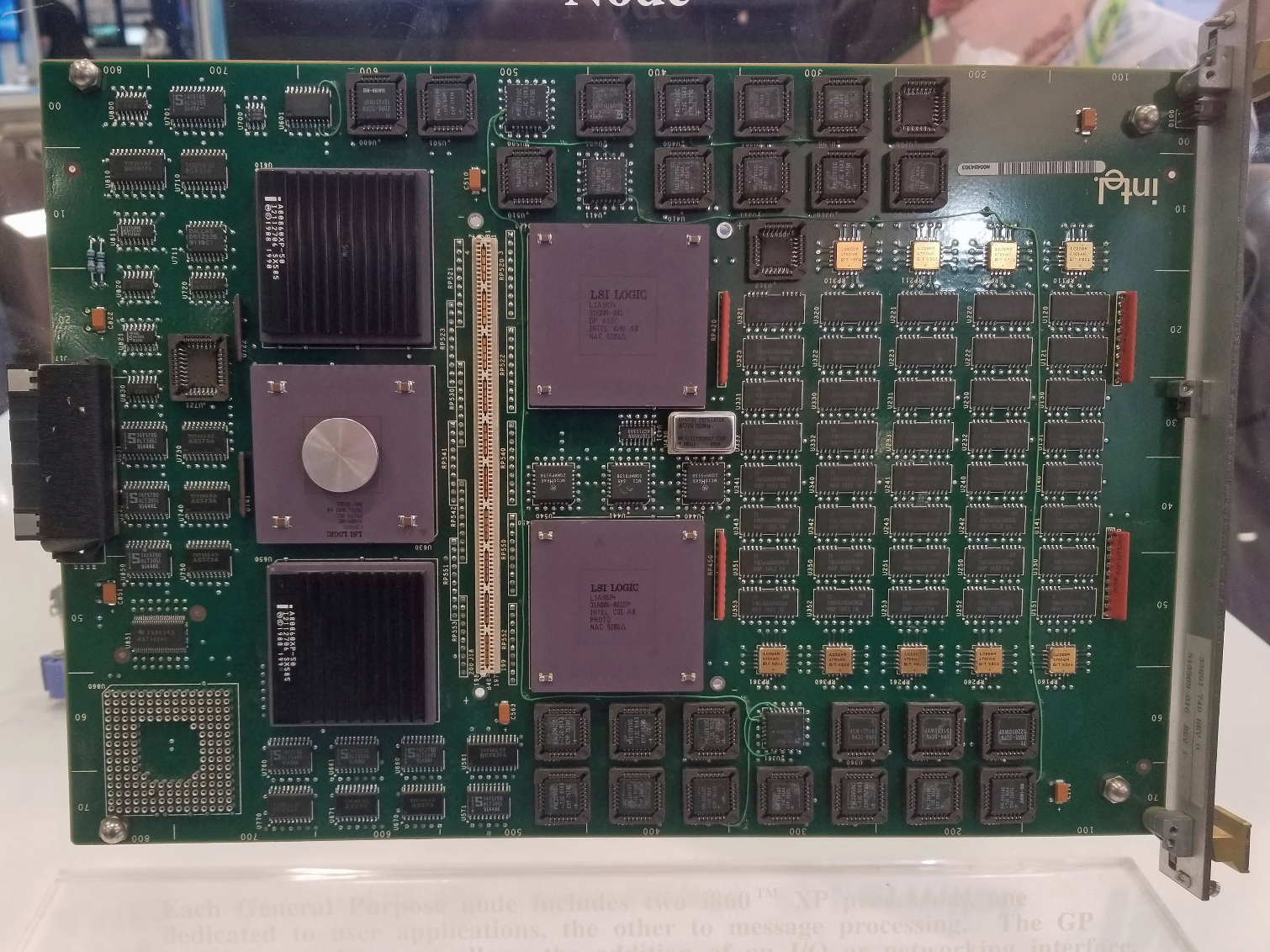

The Intel Paragon Node

Intel delivered its Paragon General-Purpose (GP) Node to the Oak Ridge National Laboratory in 1992. Each node carried two i860 XP processors (RISC), one dedicated solely to applications and the other to message processing. All told, the system featured 2,048 i860 processors spread across 1,024 of these nodes. Each node carried a whopping 16 MB of memory and a B-NIC interface that connected to mesh routers on the backplane. The entire system pushed out peak performance of 143.4 gigaFLOPS, landing it at the top of the TOP500 list in June 1994.

The Paragon XP/S node pictured here leveraged the now-ubiquitous x86 instruction set and was used for code development.

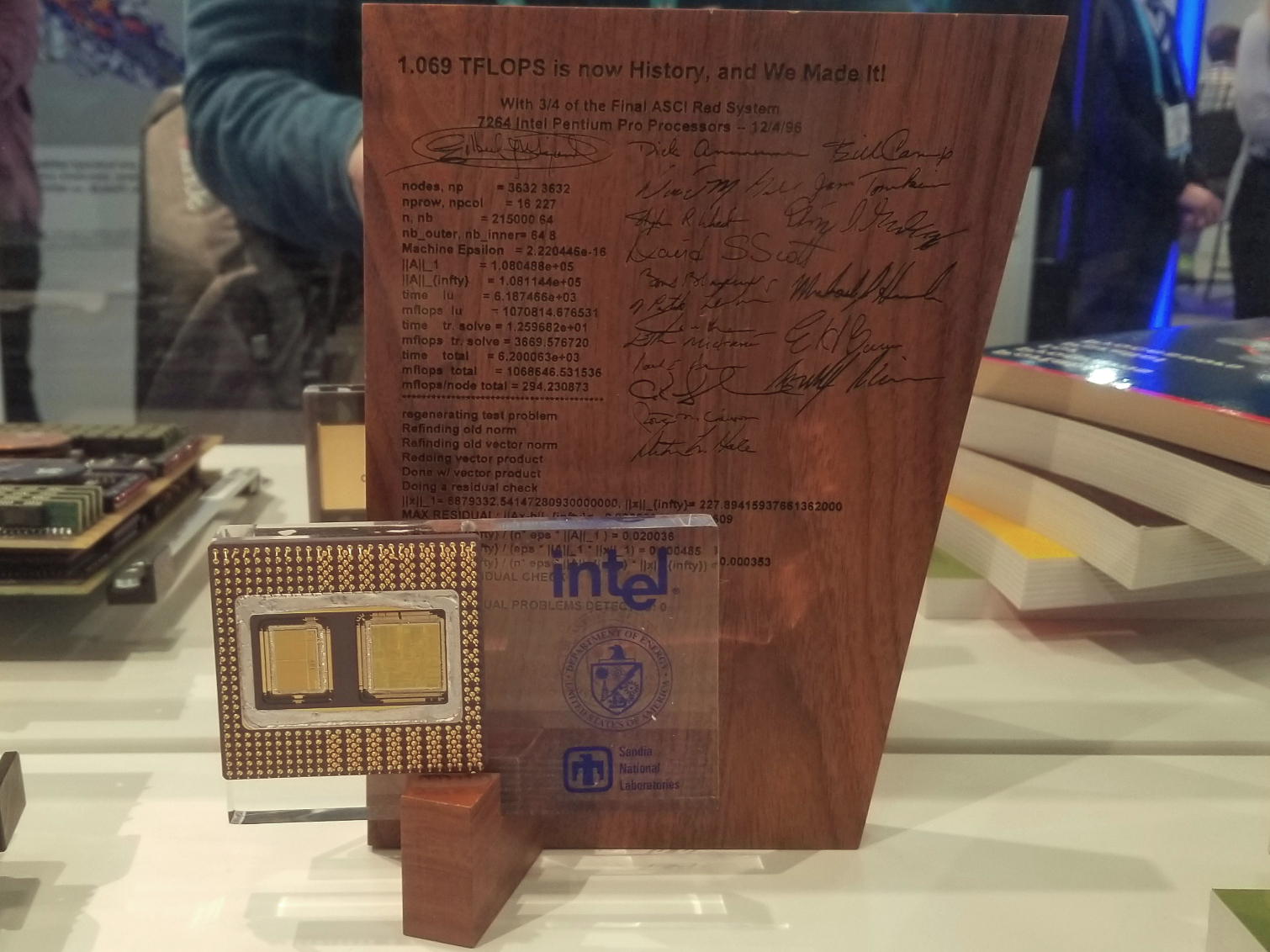

Intel Breaks the teraFLOPS Barrier with ASCI Red

The teraFLOPS (one trillion floating point operations per second) barrier loomed large for scientists as supercomputers evolved, but Intel and Sandia National Labs finally smashed that barrier in 1996.

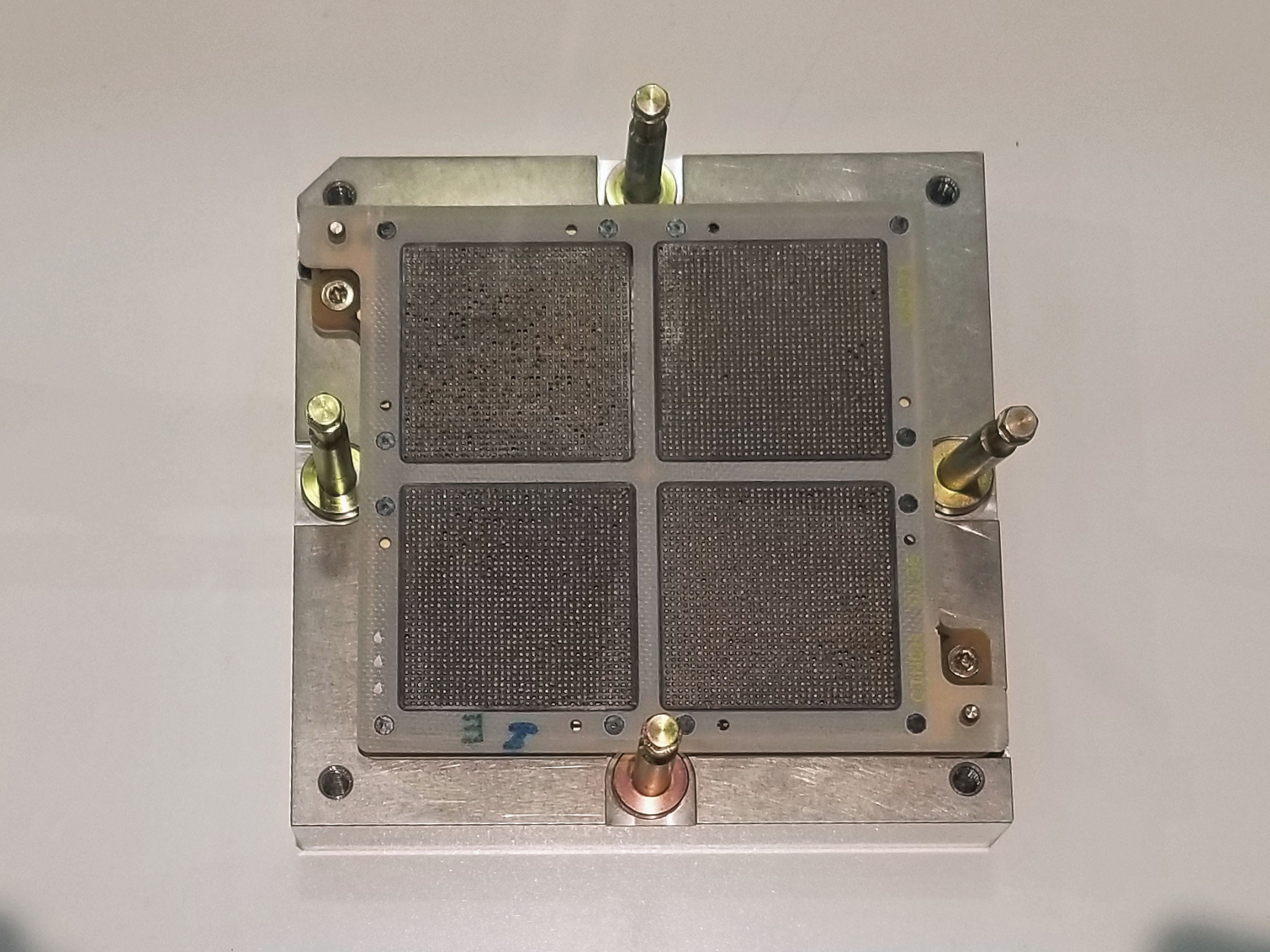

The ASCI Red system consisted of 104 cabinets that consumed 1,600 square feet of floor space. Seventy-six of the cabinets were dedicated to housing 9,298 Pentium Pro processors clocked at a whopping 200 MHz (later upgraded to Pentium II Xeons running at 333 MHz).

The system had 1.2 TB of RAM dispersed throughout, along with eight cabinets dedicated to switching and 20 cabinets dedicated to disk storage. The storage subsystem delivered up to 1 GB/s of throughput courtesy of the Parallel File System (PFS) that was a key component to smashing the teraFLOPS barrier. The entire system consumed up to 850 kW of power.

The system was only 75 percent complete when it first broke the teraFLOPS barrier (as measured by LINPACK), but it later reached up to 3.1 teraFLOPS in 1999 after memory and processor upgrades. The system led the TOP500 from 1997 to 2000. This was the first system built under the Accelerated Strategic Computing Initiative (ASCI), a program designed to maintain the US nuclear arsenal after the halt of nuclear testing in 1992.

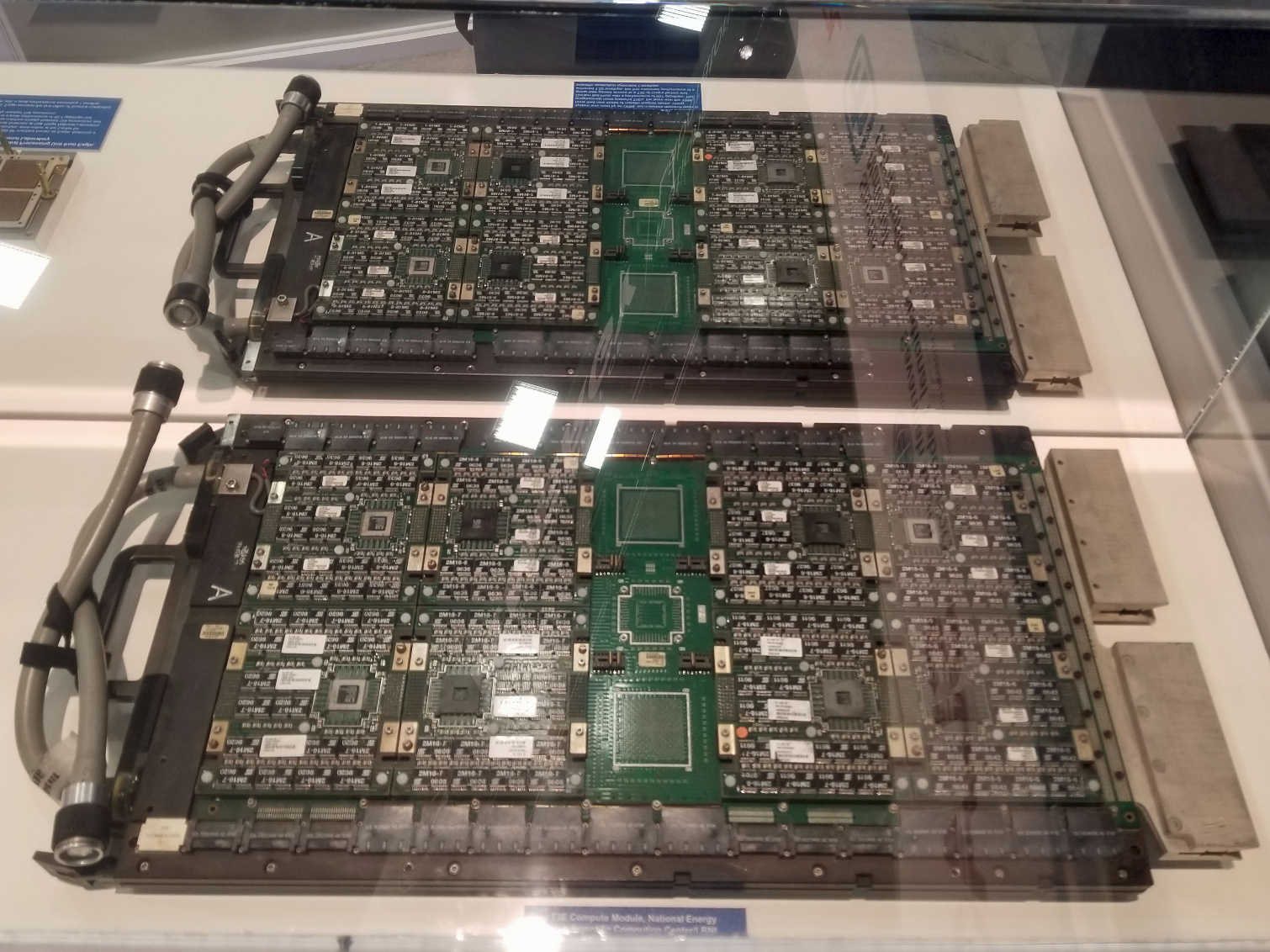

The Cray T3E-900 Compute Module

Here we see two modules from the "MCurie" Cray T3E-900 system that was installed at the Lawrence Berkeley National Laboratory in 1997. The MCurie system wielded 512 DEC Alpha 21164A microprocessors running at 450 MHz, though Cray's design scaled up to 2,176 processors. Each compute module housed up to 2 GB of DRAM and a six-way interconnect router.

For purposes of record keeping, floating point performance is typically measured with LINPACK. However, this largely synthetic test doesn't require data movement or factor in other system-level considerations, thus supplying only a rough estimate of peak compute power. A team from the Oak Ridge National Laboratory won the 1998 Gordon Bell prize for reaching 657 gigaFLOPS of performance while processing actual applications. Later, the same team used a T3E at Cray's factory to reach 1.02 teraFLOPS of performance, marking the first time in history that a computer exceeded one teraFLOPS of sustained performance during a scientific application.

IBM Powers Its Way In

An IBM "Eagle" RISC System (RS)/6000 SP supercomputer with the Power3 architecture was installed at the Oak Ridge National Laboratory in 1999. This system had 124 processors that pushed out 99.3 gigaFLOPS, though it was later upgraded to a total of 704 processors. Here we can see a close-up shot of a Power3 processor that harnesses the power of 15 million transistors on a 270 mm² die. IBM itself fabbed the processor on its CMOS-6S2 process (roughly equivalent to 250 nm). The processor blasted along at 200 MHz, though its Power3-II successor brought that up to 450 MHz.

ASCI White

The ASCI White supercomputer was built from 512 of the IBM RS/6000 systems (described in the previous section) tied together. The system featured 16 IBM Power3 processors per node, for a total of 8,192 processors running at 375 MHz, along with 6 TB of memory and 160 TB of disk-based storage. The entire system comprised three different types of cabinets: the 512-node White, 28-node Ice, and 68-node Frost.

The 100-ton system was dedicated in 2001 and cost $110 million. The ASCI White supercomputer alone needed 3 MW of power, but another 3 MW was dedicated just to cooling the beast. The machine topped out at 12.3 teraFLOPS and was the fastest supercomputer on the TOP500 list from November 2000 to June 2002.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.