ARM Cortex-A72 Architecture Deep Dive

ARM's Cortex-A72 CPU adds power and performance optimizations to the previous A57 design. Here's an in-depth look at the changes to each stage in the pipeline, from better branch prediction to next-gen execution units.

Architecture Overview

Instruction Fetch

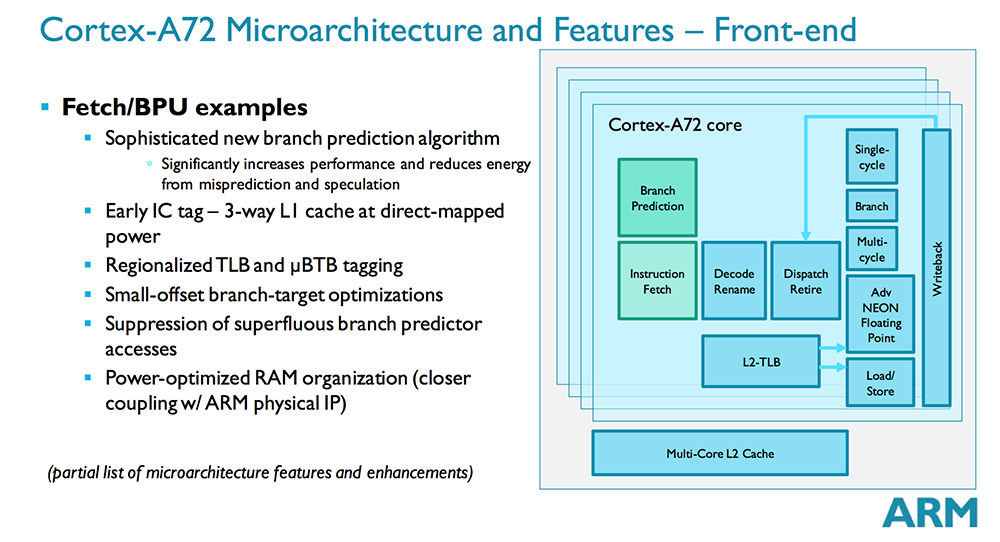

The A72 sees improvements to performance too, starting with a much improved branch prediction algorithm. ARM’s performance modeling group is continuously updating the simulated workloads fed to the processor, and these affect the design of the branch predictor as well as the rest of the CPU. For example, based on these workloads, ARM found that instruction offsets between branches are often close together in memory. This allowed it to make certain optimizations to its dynamic predictor, like enabling the Branch Target Buffer (BTB) to hold anywhere from 2000 large branches to 4000 small branches.

Because real-world code tends to include many branch instructions, branch prediction and speculative execution can greatly improve performance, something that synthetic benchmarks usually do not test. Better branch prediction usually costs more power, however, at least in the front-end. This is at least partially offset by fewer mispredictions, which saves power on the back-end by avoiding pipeline flushes and wasted clock cycles. ARM also saves power by shutting off the branch predictor where it is unnecessary. In many blocks of code, for instance, the distance between branches is greater than the 16-byte instruction window the predictor uses, so it makes sense to shut the predictor down because it will not hit a branch in that section of code.
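The power-saving idea can be illustrated with a toy model. The sketch below is not ARM's implementation: it simply assumes the predictor looks at aligned 16-byte windows and lists the windows in a code region that contain no branch, which are the ones where a lookup could in principle be skipped. The function name, the alignment and the branch addresses are all assumptions made for illustration.

```python
WINDOW_BYTES = 16  # instruction window size quoted in the article


def windows_without_branches(start, end, branch_addrs):
    """Return the start addresses of aligned 16-byte windows in [start, end)
    that contain no branch instruction (hypothetical model)."""
    # Index of every window that holds at least one branch.
    branch_windows = {addr // WINDOW_BYTES for addr in branch_addrs}
    first = start // WINDOW_BYTES
    last = (end - 1) // WINDOW_BYTES
    return [w * WINDOW_BYTES
            for w in range(first, last + 1)
            if w not in branch_windows]


# Hypothetical 96-byte code region with branches at 0x0C and 0x50.
idle = windows_without_branches(0x00, 0x60, [0x0C, 0x50])
print([hex(a) for a in idle])  # ['0x10', '0x20', '0x30', '0x40']
```

In this made-up region, four of the six windows hold no branch, so a predictor that could identify them ahead of time would have nothing to look up for two thirds of the fetches.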

Decode / Rename

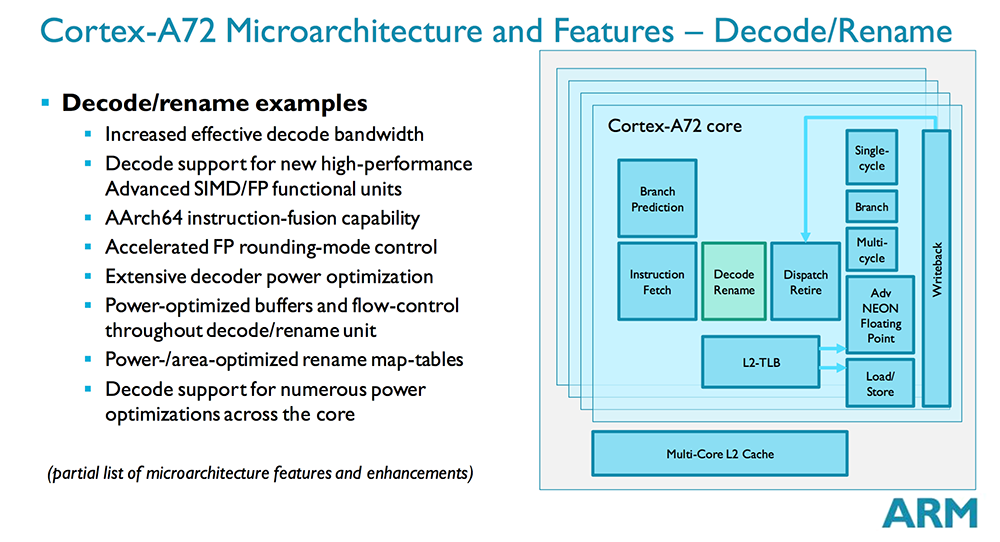

The rest of the front-end is still in-order with a 3-way decoder just like the A57. However, unlike the A57’s decoder, which decodes instructions into micro-ops, the A72 decodes instructions into macro-ops that can contain multiple micro-ops. It also supports AArch64 instruction-fusion within the decoder. These macro-ops get “late-cracked” into multiple micro-ops at the dispatch level, which is now capable of issuing up to five micro-ops, up from three on the A57. ARM quotes a 1.08 micro-ops per instruction ratio on average when executing common code. Improving the A72’s front-end bandwidth helps keep the new lower-latency execution units fed and also reduces the number of cases where the front-end bottlenecks performance.
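A back-of-the-envelope calculation shows why the wider dispatch stage matters. The sketch below assumes, purely for illustration, that the decoder sustains its peak of three instructions every cycle and that the quoted 1.08 micro-ops per instruction applies uniformly; real code will fall short of both.

```python
DECODE_WIDTH = 3             # instructions decoded per cycle (from the article)
UOPS_PER_INSTRUCTION = 1.08  # average ratio quoted by ARM
OLD_DISPATCH_WIDTH = 3       # micro-ops per cycle on the A57
NEW_DISPATCH_WIDTH = 5       # micro-ops per cycle on the A72


def average_uop_demand(decode_width, uops_per_instruction):
    """Average micro-ops per cycle produced by a fully busy decoder."""
    return decode_width * uops_per_instruction


demand = average_uop_demand(DECODE_WIDTH, UOPS_PER_INSTRUCTION)
print(f"average demand: {demand:.2f} micro-ops/cycle")  # 3.24
print("exceeds a 3-wide dispatch:", demand > OLD_DISPATCH_WIDTH)
print("fits in a 5-wide dispatch:", demand <= NEW_DISPATCH_WIDTH)
```

Under these assumptions the average demand is slightly above three micro-ops per cycle, so a five-wide dispatch leaves headroom for bursts of instructions that crack into several micro-ops.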

Dispatch / Retire

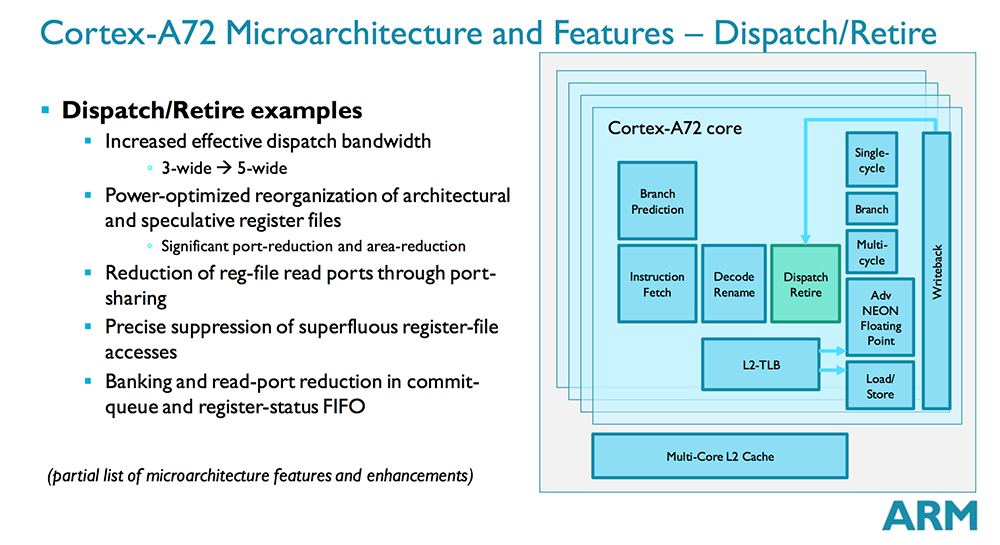

As explained above, the A72 is now able to dispatch five micro-ops into the issue queues that feed the execution units. The issue queues still hold a total of 66 micro-ops like they did on the A57—eight entries for each pipeline except the branch execution unit queue that holds ten entries. While queue depth is the same, the A72 does have an improved issue-queue load-balancing algorithm that eliminates some additional cases of mismatched utilization of the FP/Advanced SIMD units.
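The 66-entry total follows from the per-queue figures if one assumes eight issue pipelines (matching the eight micro-ops the back-end can issue per cycle), each with its own queue, and exactly one branch queue. A quick check under those assumptions:

```python
ISSUE_PIPELINES = 8        # assumed: one queue per pipeline, eight pipelines
BRANCH_QUEUE_ENTRIES = 10  # branch execution unit queue
OTHER_QUEUE_ENTRIES = 8    # every other queue

# Seven ordinary queues plus the single, deeper branch queue.
total = (ISSUE_PIPELINES - 1) * OTHER_QUEUE_ENTRIES + BRANCH_QUEUE_ENTRIES
print(total)  # 66
```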

The A72’s dispatch unit also sees significant area and power reductions by reorganizing the architectural and speculative register files. ARM also reduced the number of register read ports through port sharing. This is important because the read ports are very sensitive to timing and require larger gates to enable higher core frequencies, which has a second-order effect on area and power by pushing other components further away. In total, the dispatch unit sees a 10% reduction in area with no adverse effect on performance.

Execution Units

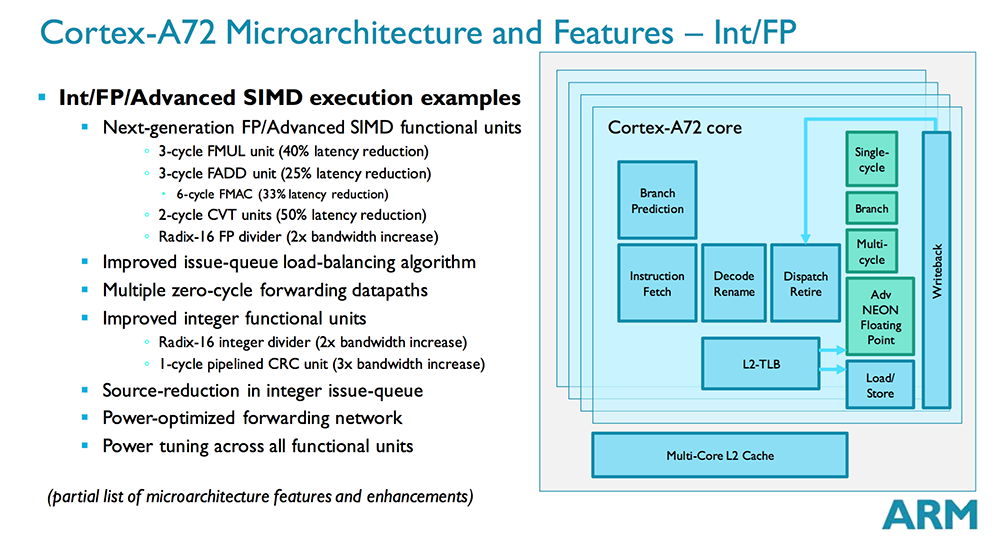

Moving to the out-of-order back-end, the A72 can still issue eight micro-ops per cycle like the A57, but execution latency is significantly reduced because of the next-generation FP/Advanced SIMD units. Floating-point instructions see up to a 40% latency reduction over the A57 (5-cycle to 3-cycle FMUL), and the new Radix-16 FP divider doubles bandwidth. The changes to the integer units are less extensive, but they also make the change to a Radix-16 integer divider (doubling bandwidth over A57) and a 1-cycle CRC unit.
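The 40% figure is just the FMUL latency change expressed as a fraction. The short sketch below reproduces it and, as a simplified illustration, shows what it means for a chain of multiplies where each one depends on the previous result. The chain model ignores everything except execution latency, which is an assumption of the sketch rather than a description of the real pipeline.

```python
def latency_reduction(old_cycles, new_cycles):
    """Fractional latency reduction when going from old_cycles to new_cycles."""
    return (old_cycles - new_cycles) / old_cycles


def dependent_chain_cycles(n_ops, latency):
    """Cycles for n_ops back-to-back dependent operations (simplified model)."""
    return n_ops * latency


print(f"{latency_reduction(5, 3):.0%}")  # 40%
print(dependent_chain_cycles(100, 5))    # 500 cycles with a 5-cycle FMUL
print(dependent_chain_cycles(100, 3))    # 300 cycles with a 3-cycle FMUL
```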

Reducing pipeline length improves performance directly by reducing latency and indirectly by easing pressure on the out-of-order window. So even though the instruction reorder buffer remains at 128 entries like the A57, it now has more opportunity to extract instruction-level parallelism (ILP).

Expanded zero-cycle forwarding (all integer and some floating-point instructions) further reduces execution latency. This technique allows dependent operations, where the output of one instruction is the input to the next, to directly follow each other in the pipeline without a wait of one or more cycles between them. This is an important optimization for cryptography.
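The effect of forwarding delay on a chain of dependent operations can be sketched with a simple model. The one-cycle bubble used for comparison is a hypothetical value chosen for illustration, not a published figure for any ARM core, and the function name is likewise made up.

```python
def chain_cycles(n_ops, exec_latency, forward_delay):
    """Cycles until the last of n_ops dependent operations completes.

    forward_delay is the bubble between a producer finishing and its
    consumer starting; zero-cycle forwarding means forward_delay == 0.
    """
    if n_ops == 0:
        return 0
    # Every op pays its execution latency; every hand-off pays the bubble.
    return n_ops * exec_latency + (n_ops - 1) * forward_delay


# Eight dependent single-cycle operations.
print(chain_cycles(8, exec_latency=1, forward_delay=1))  # 15
print(chain_cycles(8, exec_latency=1, forward_delay=0))  # 8
```

In this model the bubble nearly doubles the length of a chain of single-cycle operations, which suggests why code dominated by long dependency chains stands to gain the most from removing it.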

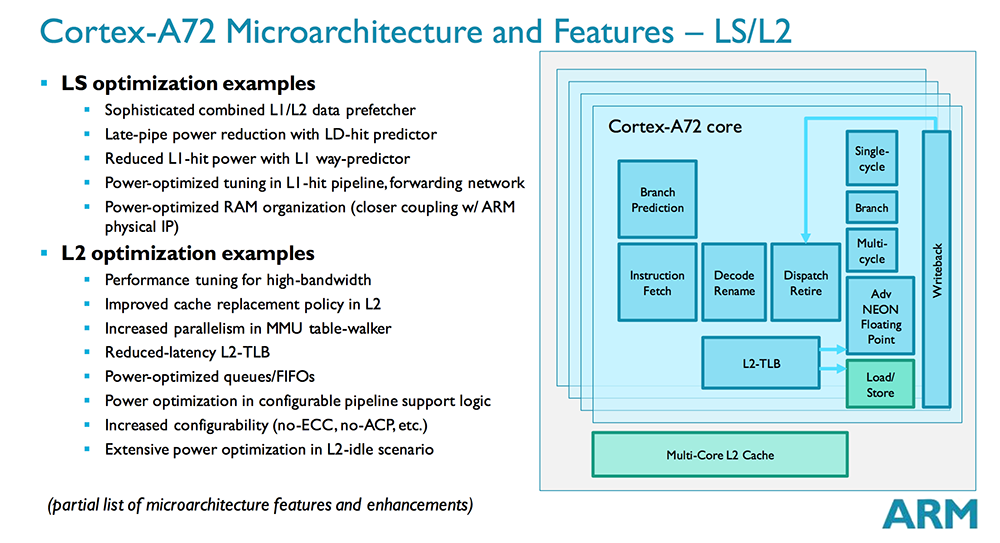

Load / Store

The load/store unit also sees improvements to both performance and power. One of the big changes from A57 is a move away from separate L1 and L2 prefetchers to a more sophisticated combined prefetcher that fetches from both the L1 and L2 caches. This improves bandwidth while also reducing power. There are additional power optimizations to the L1-hit pipeline and forwarding network too.

The L2 cache sees significant optimizations for higher bandwidth workloads. Memory streaming tasks see the biggest benefit, but ARM says general floating-point and integer workloads also see an increase in performance. A new cache replacement policy increases hit rates in the L2, which again improves performance and reduces power overall.

Another optimization increases parallelism in the table-walker hardware for the memory management unit (MMU), responsible for translating virtual memory addresses to physical addresses among other things. Together with a lower-latency L2 TLB, performance improves for programs that spread data across several data pages such as Web browsers.

Aspiring techie

I wonder what would happen if these guys dipped their toe into the desktop CPU market.

utroz

"I wonder what would happen if these guys dipped their toe into the desktop CPU market."

Well, no current Windows support, so you would need to run Linux or another ARMv8-compatible OS. For people that just watch Netflix, check Facebook and other web pages, and type up a few papers for school or work, an ARM CPU would have plenty of CPU performance.

InvalidError

"I wonder what would happen if these guys dipped their toe into the desktop CPU market."

Not much. Changing instruction set does not magically free the architecture from process limitations nor remove bottlenecks from software architecture. If ARM designed a CPU core specifically for desktop, it would hit most of the same performance scaling bottlenecks x86 has. You would likely end up with a 50W ARM chip being roughly even with a 50W Intel chip, the main difference between the two - aside from the ISA - being that the ARM chip is $100 while the Intel chip is $400.

somebodyspecial

And I think that's his point... The PRICE and how much of the public at large such a machine could get. Myself I can't wait until they put out a full desktop chip with heatsink/fan big psu, HD or SSD, 16-32GB mem etc (hopefully with an optional slot for discrete gpu when desired). As games amp up on ARM you'd most likely only be missing WINDOWS/x86 if you use pro apps stuff. Unreal4/Unity5 etc will provide nice graphics for games on the ARM side (most engines port easily today and get even better with Vulkan coming), so only pro apps would be left off for years and some of the big ones (adobe etc) might put out full apps soon anyway. Take off $200-300 for cpu and $100 for windows and I'm guessing an ARM desktop could do quite a bit of damage to WINTEL.

We see people already opting for chromebooks, tablets etc as PC's. Knock a chunk off desktop prices and you'll gain some users and push devs past mobile on arm. I'm hoping NV builds such a box at some point (just a much bigger Shield TV box really), but with multiple OS's (steamos, linux, and android) or at least a way to do it yourself. That would be a pretty versatile box ;) It isn't so much about if they BEAT intel, as it is about dropping the price of PC's everywhere. If ARM's side wants to grow much more they have to go to desktops. Surely everyone wants a chunk of Intel's ~13B a year.

viewtyjoe

"If ARM's side wants to grow much more they have to go to desktops. Surely everyone wants a chunk of Intel's ~13B a year."

ARM makes their money by licensing out their designs to other companies which manufacture the actual chips. The only company I'm aware of with the resources and ARM license to theoretically make something like this happen is AMD, and their use of ARM is more directed towards the server sector.

TechyInAZ

"I wonder what would happen if these guys dipped their toe into the desktop CPU market."

I doubt that would happen, having ARM AND X86 on the desktop platform is just going to cause frustration.

pug_s

"If ARM's side wants to grow much more they have to go to desktops. Surely everyone wants a chunk of Intel's ~13B a year."

"ARM makes their money by licensing out their designs to other companies which manufacture the actual chips. The only company I'm aware of with the resources and ARM license to theoretically make something like this happen is AMD, and their use of ARM is more directed towards the server sector."

It is possible to make a hardware ARM chip that rivals Intel. The only problem is software, as there is no software that would take advantage of it. Maybe in the distant future Android OS and its apps would morph into a desktop OS that would rival Microsoft, but not yet. Microsoft's closest adaptation to ARM SoCs is the Windows 10 developer edition running on the Raspberry Pi 2. All of the software and games have to be written to be ARM compatible. Even so, Intel's main focus is now on low-powered and mobile chips that compete with ARM itself. Who knows, maybe by then AMD would get its act together and compete with Intel in the low-powered space when they have access to 14-16nm technologies.

MichaelWest

With regard to the discussion of when someone is going to provide ARM type cpu's for desktops for ARM gaming etc we already pretty much have the beginning of this with all the many Media streaming TV boxes on the market. They mainly run Android which is not ideal for desktop applications yet and this could take many years to see this improve. Hardware wise they are more than fast enough for all the current ARM android games and there is room for them to get even faster. They don't face the same power usage limitations mobile devices face. They may be targeted for use on TV's with remotes but they can work just as well with HDMI monitors, keyboards, mice and game controllers. If all you wanted was a simple cheap desktop for email/internet and ARM gaming then they already fit the bill.

cbxbiker61

"It is possible to make a hardware ARM chip that rivals Intel. The only problem is software, as there is no software that would take advantage of it. Maybe in the distant future Android OS and its apps would morph into a desktop OS that would rival Microsoft, but not yet. Microsoft's closest adaptation to ARM SoCs is the Windows 10 developer edition running on the Raspberry Pi 2. All of the software and games have to be written to be ARM compatible. Even so, Intel's main focus is now on low-powered and mobile chips that compete with ARM itself. Who knows, maybe by then AMD would get its act together and compete with Intel in the low-powered space when they have access to 14-16nm technologies."

That is only true when you define "software" as being "Windows binaries".

More and more people every day are waking up to the fact that "software" is really source code, which can be compiled on any architecture for which there is a compiler. You just have to use an open platform with an open compiler, i.e. Linux/BSD.

bit_user

"Changing instruction set does not magically free the architecture from process limitations nor remove bottlenecks from software architecture. If ARM designed a CPU core specifically for desktop, it would hit most of the same performance scaling bottlenecks x86 has. You would likely end up with a 50W ARM chip being roughly even with a 50W Intel chip"

If that were true, Intel could've made an Atom that's at least as efficient as competing ARM cores. But ISA does actually count for something. x86 is significantly harder to decode, and occupies more space in ICaches.

It would be interesting if someone designed an ARM v8 core for optimal single-thread performance. I'm pretty sure it could provide superior performance at the same power, and use less power at the same performance as Skylake (assuming similar design resources & process node as Intel). But this is a tall order, and there's not yet a big enough market. Maybe in 5 years, once ARM has grabbed a significant chunk of server market share, there'll be enough interest in building workstation-oriented ARM cores.