Reader's Voice: Building Your Own File Server

Buses, Controllers, And Disks

Most older motherboards sport 32-bit, 33 MHz PCI slots, all of which share bandwidth. If you look at the block diagram of a chipset from that era, you'll see that the Ethernet controller, IDE controller, and SATA controller all connect to the same PCI bus. Combined, disk and Ethernet traffic is limited to a theoretical 133 MB/s (4 bytes per clock × 33.33 MHz). This will work, but you will end up with a slower file server.
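To make the shared-bus arithmetic concrete, here is a minimal Python sketch that adds up what every device on a single 32-bit, 33 MHz PCI bus wants at the same moment and compares it with the 133 MB/s theoretical peak. The 60 MB/s per-disk figure is a hypothetical number chosen for illustration, gigabit Ethernet is taken at its raw 125 MB/s line rate, and the function names are made up for this example.

```python
# Sketch: does a shared 32-bit/33 MHz PCI bus have room for every device on it?
# Per-device throughput figures are hypothetical examples, not measurements.

PCI_32BIT_33MHZ_MBPS = 4 * 33.33  # 4 bytes per clock x 33.33 MHz ~= 133 MB/s


def shared_bus_demand(devices: dict[str, float]) -> float:
    """Total MB/s that all devices on the shared bus want at the same time."""
    return sum(devices.values())


def fits_on_bus(devices: dict[str, float], capacity_mbps: float = PCI_32BIT_33MHZ_MBPS) -> bool:
    """True if the combined demand stays within the bus's theoretical peak."""
    return shared_bus_demand(devices) <= capacity_mbps


if __name__ == "__main__":
    # Hypothetical: four disks streaming 60 MB/s each plus gigabit Ethernet (~125 MB/s).
    devices = {f"disk{i}": 60.0 for i in range(4)}
    devices["gigabit_ethernet"] = 125.0
    demand = shared_bus_demand(devices)
    print(f"demand {demand:.0f} MB/s vs bus {PCI_32BIT_33MHZ_MBPS:.0f} MB/s")
    print("fits" if fits_on_bus(devices) else "bus is the bottleneck")
```

Keep in mind that data written to the array over the network crosses this bus at least twice (once from the network card into memory, once from memory to the disk controller), so real throughput on such a board is lower still.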

There are many older server-class motherboards with PCI-X slots. These are often a good choice because the PCI-X slots sit on a bus that is separate from the 32-bit PCI bus, so you can put your disk controllers in the PCI-X slots without anything else interfering with their I/O.

My first file server uses an Asus CUR-DLS motherboard with 64-bit, 33 MHz PCI-X slots (266 MB/s). My second file server uses an Asus NCCH-DL motherboard with 64-bit, 66 MHz PCI-X slots, supporting 533 MB/s, which is faster than my six SATA drives. The controller card works at up to 133 MHz, which would give 1,066 MB/s if I had a newer motherboard.
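All of the figures above come from one formula: a parallel bus moves its width in bytes on every clock, so peak bandwidth is (width in bits / 8) × clock rate. The sketch below applies it to the slot types mentioned here, using the nominal 33.33, 66.67, and 133.33 MHz clocks. The helper is hypothetical, written only for illustration, and the results are theoretical peaks; protocol overhead keeps real transfers below them.

```python
# Peak bandwidth of a parallel PCI / PCI-X bus = bytes per clock x clock rate.
# Nominal clocks are 33.33, 66.67 and 133.33 MHz (100/3, 200/3, 400/3).

SLOTS = [
    ("32-bit PCI, 33 MHz", 32, 100 / 3),
    ("64-bit, 33 MHz (Asus CUR-DLS)", 64, 100 / 3),
    ("64-bit PCI-X, 66 MHz (Asus NCCH-DL)", 64, 200 / 3),
    ("64-bit PCI-X, 133 MHz (SAT2-MV8 maximum)", 64, 400 / 3),
]


def parallel_bus_peak_mbps(width_bits: int, clock_mhz: float) -> float:
    """Theoretical peak in MB/s: bytes moved per clock x millions of clocks per second."""
    return (width_bits / 8) * clock_mhz


if __name__ == "__main__":
    for name, width, clock in SLOTS:
        print(f"{name:45s} {parallel_bus_peak_mbps(width, clock):7.1f} MB/s")
```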

If you have a PCI Express-based platform, a first-generation lane carries 250 MB/s in each direction, so a card using more than one lane (500 MB/s or more) should offer plenty of bandwidth for a home file server.

There is another bus speed to worry about: the connection between the northbridge and southbridge on your motherboard. Even though the Asus NCCH-DL has 64-bit, 66 MHz PCI-X slots, the link from the northbridge to southbridge is only 266 MB/s. In theory, this bus limits I/O. Fortunately, this isn't a big problem in practice, and newer chipsets usually have higher connection speeds.
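One way to reason about these limits is to treat a transfer as flowing through a chain of links and take the slowest link as the ceiling. The sketch below does that for the NCCH-DL numbers in this article, assuming six disks at a hypothetical 60 MB/s each and gigabit Ethernet at 125 MB/s. It is a simplification: it ignores the fact that several of these links may be carrying the same traffic at once.

```python
def path_bottleneck(links: dict[str, float]) -> tuple[str, float]:
    """Return (name, MB/s) of the slowest link in a chain; the chain cannot go faster."""
    if not links:
        raise ValueError("need at least one link")
    slowest = min(links, key=lambda name: links[name])
    return slowest, links[slowest]


# Hypothetical figures for the NCCH-DL file server described in this article.
NCCH_DL_PATH = {
    "six SATA disks (60 MB/s each, assumed)": 6 * 60.0,
    "64-bit/66 MHz PCI-X slot": 533.0,
    "northbridge-southbridge link": 266.0,
    "gigabit Ethernet": 125.0,
}

if __name__ == "__main__":
    print(path_bottleneck(NCCH_DL_PATH))
    # Local work such as a RAID rebuild never touches the network.
    local = {k: v for k, v in NCCH_DL_PATH.items() if k != "gigabit Ethernet"}
    print(path_bottleneck(local))
```

With the network in the path, gigabit Ethernet is the ceiling, which fits the observation that the hub link isn't a big problem for file serving. For work that stays inside the box, such as a RAID rebuild, the 266 MB/s link becomes the limit.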

Disk Controller Card

Modern motherboards have up to six SATA 3 Gb/s connectors. Older ones have fewer ports, and they might use the slower SATA 1.5 Gb/s standard. Unless your board has a port for every disk you plan to install, you will need to add a controller card to your system.

There are many kinds of controller cards available, with different bus interfaces. For newer systems, PCI Express is perhaps the most popular, and it offers plenty of bandwidth, while PCI-X still provides enough bandwidth for older systems. For less-expensive systems, 32-bit PCI can be used, although its shared 133 MB/s will limit performance.

There are plain disk controller cards (host bus adapters, or HBAs) and RAID controllers. Using Linux terminology, the RAID cards break down into two groups: FakeRAID and real RAID. If the card performs the XOR (parity) calculations by itself, it is considered real RAID. Otherwise, it relies on the main CPU and software drivers to do the hard work.
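The parity work that separates real RAID from FakeRAID is plain XOR. This Python sketch shows the idea for a single RAID 5-style stripe: the parity block is the XOR of the data blocks, and any one missing block can be rebuilt by XOR-ing the survivors with the parity. It is deliberately simplified (one stripe, no parity rotation, made-up block contents); a real RAID card, or the Linux md driver on the host CPU, does this for every stripe written.

```python
def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR equal-length blocks together byte by byte."""
    if not blocks:
        raise ValueError("need at least one block")
    size = len(blocks[0])
    if any(len(block) != size for block in blocks):
        raise ValueError("all blocks in a stripe must be the same size")
    result = bytearray(size)
    for block in blocks:
        for i, byte in enumerate(block):
            result[i] ^= byte
    return bytes(result)


if __name__ == "__main__":
    # One stripe across three data disks (contents are made up).
    stripe = [b"disk0 data", b"disk1 data", b"disk2 data"]
    parity = xor_blocks(stripe)  # stored on a fourth disk
    # Pretend disk 1 failed: XOR the surviving data with the parity to rebuild it.
    rebuilt = xor_blocks([stripe[0], stripe[2], parity])
    print(rebuilt == stripe[1])  # True
```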

My current file server uses the Supermicro SAT2-MV8 eight-port SATA 3 Gb/s controller card. It is a PCI-X controller and will work with a bus speed up to 133 MHz. It is a very nice card with good software support. I chose it because my existing motherboard didn't have any SATA 3 Gb/s ports, but did have PCI-X slots.

I also bought a Rosewill four-port SATA 1.5 Gb/s HBA. It is a 32-bit PCI card, though it will work at 33 and 66 MHz. It supports JBOD mode, which presents each disk to the operating system individually; that is what software RAID needs in order to do its job. My Asus NCCH-DL has a Promise PDC20319 controller, which is another HBA, but it does not support JBOD and is therefore useless here.
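To see why JBOD (per-disk pass-through) matters, consider how Linux software RAID is created: `mdadm` is handed each member disk as its own block device. The sketch below only builds the command line and does not run it, since `mdadm --create` needs root and overwrites the member disks. The device names `/dev/md0` and `/dev/sdb` through `/dev/sde` are placeholders, and the minimum-disk table is a sanity rule chosen for this sketch.

```python
import shlex

# Minimum member counts enforced by this sketch (an assumption for illustration).
MIN_DISKS = {1: 2, 5: 3, 6: 4, 10: 4}


def mdadm_create_command(md_device: str, level: int, disks: list[str]) -> list[str]:
    """Build (but do not execute) an mdadm command for a new software RAID array."""
    if level not in MIN_DISKS:
        raise ValueError(f"unsupported RAID level in this sketch: {level}")
    if len(disks) < MIN_DISKS[level]:
        raise ValueError(f"RAID {level} needs at least {MIN_DISKS[level]} disks here")
    if len(set(disks)) != len(disks):
        raise ValueError("each disk may appear only once")
    return [
        "mdadm", "--create", md_device,
        f"--level={level}",
        f"--raid-devices={len(disks)}",
        *disks,  # each disk must be visible to Linux on its own
    ]


if __name__ == "__main__":
    cmd = mdadm_create_command("/dev/md0", 5, ["/dev/sdb", "/dev/sdc", "/dev/sdd", "/dev/sde"])
    # Prints: mdadm --create /dev/md0 --level=5 --raid-devices=4 /dev/sdb /dev/sdc /dev/sdd /dev/sde
    print(shlex.join(cmd))
```

A controller that hides its disks behind its own array format gives Linux no individual devices to list here, which is why a card without JBOD is no use for software RAID.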

It is a good idea to check that Linux supports the controller card (assuming that Linux is the operating system you'll be running). To do this, find out which storage controller chip the card actually uses and research Linux support for that chip. Of course, if the card maker offers a Linux driver, there's a good chance that you're in luck.
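On a machine already running Linux, one way to see which storage chip a card uses, and whether a kernel driver has claimed it, is to read sysfs. The sketch below walks `/sys/bus/pci/devices`, keeps devices whose PCI base class is 0x01 (mass storage controller), and reports the vendor and device IDs plus the bound driver, if any. `lspci -nnk` shows the same information; the vendor:device pair is what you would search for when researching Linux support. The function names are made up for this example.

```python
import os
from pathlib import Path

MASS_STORAGE_BASE_CLASS = 0x01  # PCI base class 0x01: mass storage controller


def _read(path: Path, default: str = "?") -> str:
    """Read a one-line sysfs attribute, or return a default if it is missing."""
    try:
        return path.read_text().strip()
    except OSError:
        return default


def list_storage_controllers(sysfs_pci: str = "/sys/bus/pci/devices") -> list[dict[str, str]]:
    """Return PCI mass-storage controllers with their IDs and bound kernel driver."""
    root = Path(sysfs_pci)
    if not root.is_dir():
        return []  # not Linux, or sysfs not mounted
    found = []
    for dev in sorted(root.iterdir()):
        try:
            # e.g. 0x010601: class 01 (storage), subclass 06 (SATA), prog-if 01 (AHCI)
            class_code = int(_read(dev / "class", "0x0"), 16)
        except ValueError:
            continue
        if class_code >> 16 != MASS_STORAGE_BASE_CLASS:
            continue
        driver_link = dev / "driver"  # symlink into /sys/bus/pci/drivers/<name> when bound
        driver = os.path.basename(os.readlink(driver_link)) if driver_link.is_symlink() else "(none bound)"
        found.append({
            "address": dev.name,
            "vendor": _read(dev / "vendor"),
            "device": _read(dev / "device"),
            "driver": driver,
        })
    return found


if __name__ == "__main__":
    for ctrl in list_storage_controllers():
        print(f"{ctrl['address']}  {ctrl['vendor']}:{ctrl['device']}  driver={ctrl['driver']}")
```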

Disks

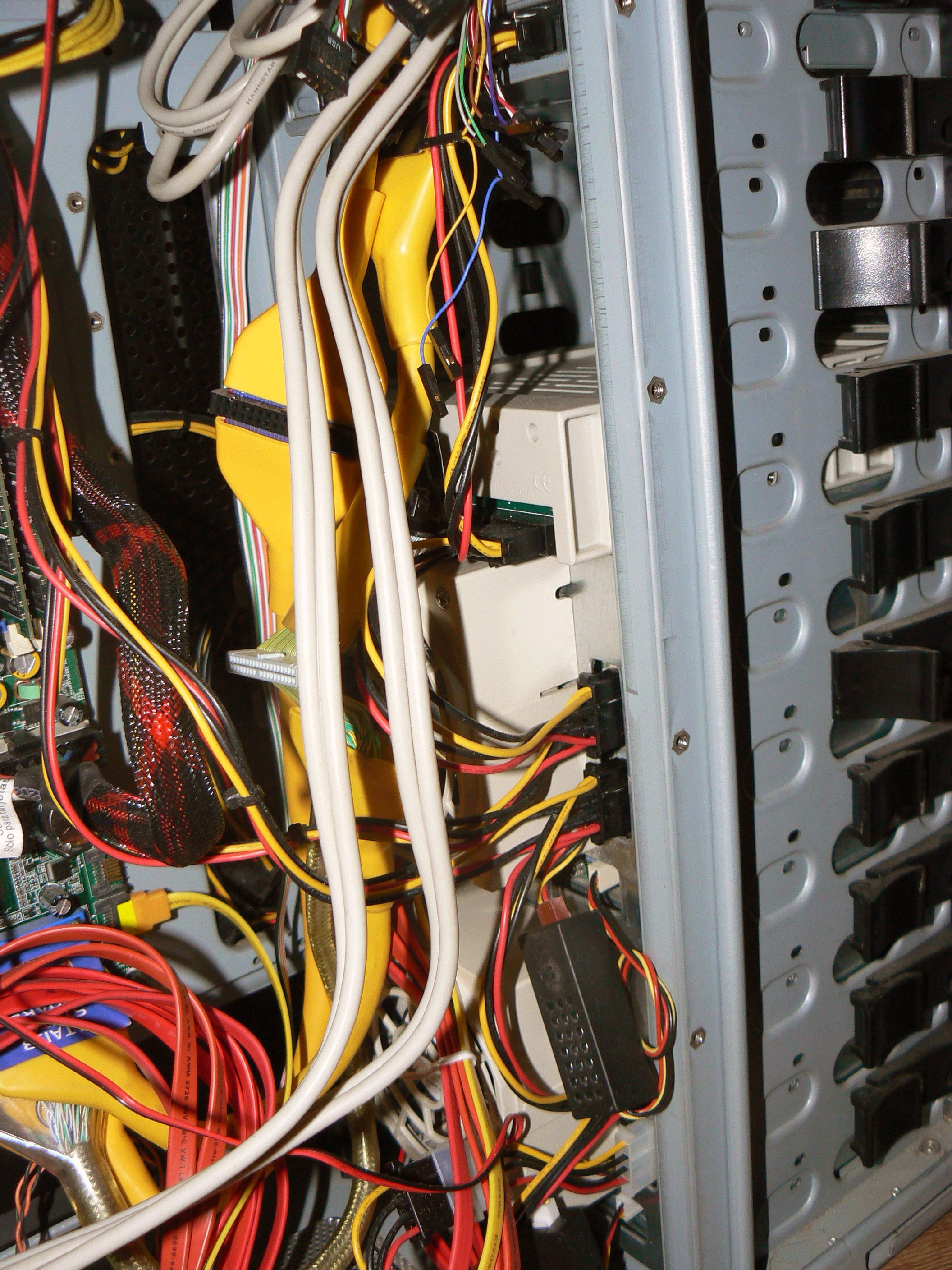

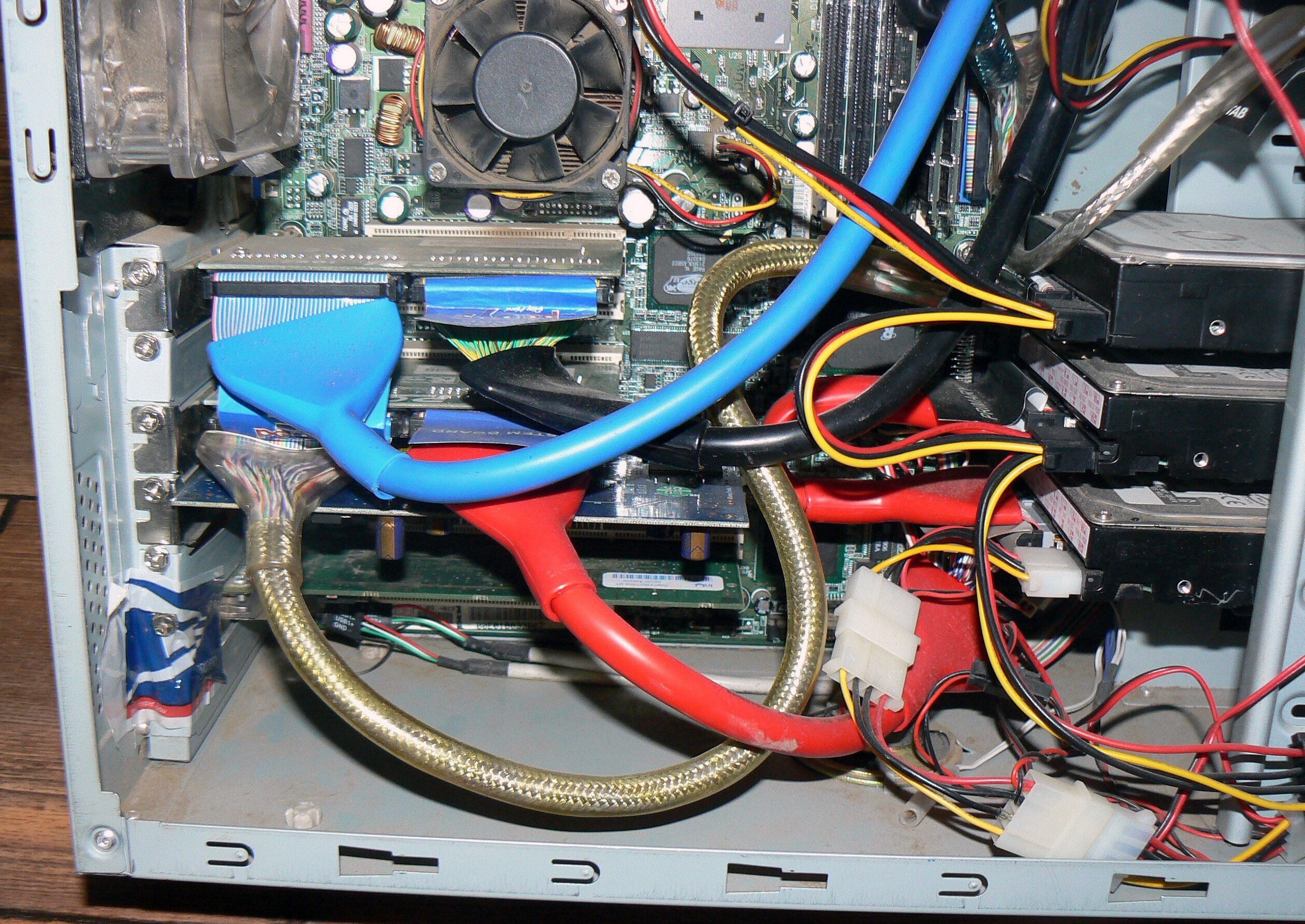

I recommend SATA disks. They offer the largest capacities and are inexpensive. They also use a point-to-point connection, so each disk gets its own link rather than sharing bandwidth. I built my first file server with parallel ATA (PATA) disks and put two disks on each channel. If one disk failed, the controller would likely act as if both disks on that channel had failed, and I would be stuck. A good PATA RAID card supports only one drive per channel to eliminate this problem. Of course, you will end up with a snake’s nest of cables. This is one of the reasons why the industry moved to SATA.

wuzy: Yet again, why is this article written so unprofessionally (by an author I've never heard of)? Any facts or numbers given are just so vague! It's vague because the author has no real technical knowledge behind this article and is basing it mainly on experience instead. That is not good journalism for a tech site.

By experience, I didn't mean self-learning. I meant developing your own ideas without doing extensive research into every technical aspect for the specific purpose.

wuzy: And even if this is just a "Reader's Voice" piece, I'd expect BoM to set a minimum standard for the articles they publish on their website.

Most IT professionals I have come to recognise in the Storage forum (including myself) can write a far higher-caliber article than this.

motionridr8: FreeNAS? Runs FreeBSD. Supports RAID. Includes tons of other features that, yes, you can get working in a Linux build, but these all work with just the click of a box in the sleek web interface. Features include an iTunes DAAP server, SMB shares, AFP shares, FTP, SSH, a UPnP server, Rsync, and a power daemon, just to name some. Installs on a 64 MB USB stick. Mine has been running 24/7 for over a year without a single problem. Designed to work with legacy or new hardware. I can't recommend anything else. www.freenas.org

bravesirrobin: I've been thinking on and off about building my own NAS for around a year now. While this article is a decent overview of how Jeff builds his NASes, I also find it dancing with vagueness as I'm trying to narrow my parts search. Are you really suggesting we use PCI-X server motherboards? Why? (Besides the fact that their bandwidth is separate from normal PCI lanes.) PCI Express has that same upside, and is much more common on mainstream motherboards.

You explain the basic difference between FakeRAID and "real RAID" adequately, but why should I purchase a controller card at all? Motherboards have about six SATA ports, which is enough for your rig on page five. Since your builds are dual-CPU server machines to handle parity and RAID building, am I to assume you're not using a "real RAID" card that does the XOR calculations sans CPU? (HBA = Host Bus Adapter?)

Also, why must your RAID cards support JBOD? You seem to prefer a RAID 5/6 setup. You lost me COMPLETELY there, unless you want to JBOD your OS disk and have the rest in a RAID? In that case, can't you just plug your OS disk into a motherboard SATA port and the rest of the drives into the controller?

And about the CPU: do I really need two of them? You advise "a slow, cheap Phenom II", yet the entire story praises a board hosting two CPUs. Do I need one or two of these Phenoms -- isn't a nice quad core better than two separate dual core chips in terms of price and heat? What if I used a real RAID card to offload the calculations? Then I could use just one dual core chip, right? Or even a nice Conroe-L or Athlon single core?

Finally, no mention of the FreeNAS operating system? I've heard about installing that on a CF reader so I wouldn't need an extra hard drive to store the OS. Is that better/worse than using "any recent Linux" distro? I'm no Linux genius, so I was hoping an OS tailored to hosting a NAS would help me out, instead of having to learn how to bend a full-blown Linux OS to serve my NAS needs. This article didn't really answer any of my first-build NAS questions. :(

Thanks for the tip about ECC memory, though. I'll do some price comparisons with those modules.

ionoxx: I find that there is really no need for dual-core processors in a file server. As long as you have a RAID card capable of making its own XOR calculations for the parity, all you need is the most energy-efficient processor available. My file server at home is running a single-core Intel Celeron 420, and I have five WD7500AAKS drives plugged into a HighPoint RocketRAID 2320. I copy over my gigabit network at speeds of up to 65 MB/s. Idle, my power consumption is 105 W, and I can't imagine load being much higher. Though I have to say, my Celeron barely makes the cut. The CPU usage goes up to 70% during network transfers, and my switch doesn't support jumbo frames.

raptor550: Ummm... I appreciate the article, but it might be more useful if it were written by someone with more practical and technical knowledge, no offense. I agree with wuzy and brave.

Seriously, what is this talk about PCI-X and ECC? PCI-X is rare and outdated, and ECC is useless and expensive. And dual CPU is not an option; remember, electricity gets expensive when you're talking 24/7. Get a cheap low-power CPU with a full-featured board and six HDDs and you're good to go for much cheaper.

Also, your servers are embarrassing.

icepick314: Has anyone tried NAS software such as FreeNAS?

And I'm worried about RAID 5/6 becoming obsolete because hard drives are getting so large that recovering the array is almost impossible when one of the drives dies, especially with 1 TB drives...

I've heard RAID 10 is a must now that 1-2 TB hard drives are becoming more common...

Also, can you write up the pros and cons of the slower 1.5-2 TB eco-friendly hard drives that are becoming popular due to their low power consumption and heat generation?

Thanks for the great beginner's guide to building your own file server...

icepick314: Also, what are the pros and cons of using the motherboard's own RAID controller versus a dedicated RAID controller card with single- or multi-core processors, or even multiple CPUs?

Most decent motherboards have RAID support built in, but I think most are just RAID 5, 6 or JBOD...

Lans: I like the fact that the topic is being brought up and discussed, but I seriously think the article needs to be expanded to cover a lot more details and alternative setups.

For a long time I had a four-disk hardware RAID 5 (PCI-X) on dual Athlon MP 1.2 GHz CPUs with 2 GB of ECC RAM (Tyan board, I forget the exact model). With hardware RAID 5, I don't think you need such powerful CPUs. If I recall correctly, the RAID controller cost about as much as four fairly cheap drives (smaller drives, since I was doing RAID and didn't need THAT much space; it was at most 50% full for the life of the server, and I also wanted to limit cost a bit).

Then I decided all I really needed was a Pentium III with just one large disk (less reliable, but good enough for what I needed).

For the past year or so, I haven't had a file server up, but I'm planning to rebuild a very low-powered one. I was eyeing something like the SheevaPlug, or maybe even a wireless router with USB storage support (Asus has a few models like that).

Just to show how wide this topic is... :-)