Camera Phone Technology 101

From sensors to optics to features, we'll run down the basics of mobile digital camera technology in this primer.

Optics

The first cell phones were equipped with sub-megapixel cameras and single lens optics focused to infinity. They produced pixelated, flat photos which were barely suitable for sharing on the Web. While the optics for mobile cameras still use a fixed focal length and a fixed aperture, they have grown in complexity and performance to match the improvements in sensor technology.

The two most common lens materials are glass and plastic. Glass has many advantages, including a high Abbe number (lower chromatic aberration) and a relatively high refractive index (a measure of how much it bends light rays). Because of its excellent optical qualities, glass lenses are used in high-end cameras, microscopes, eyeglasses (depending on prescription), and just about anywhere else lenses are needed.

The limited thickness of smartphones and tablets presents unique challenges to lens system design, however, requiring complex, aspherical shapes and gradient refractive indices. This rules out traditionally made glass lenses that are ground and polished. Instead, lenses for mobile cameras are generally made from injection molded optical grade plastics. A high-temperature compression molding process does exist for making aspherical glass lenses with better optical performance, but molded plastic lenses still hold the advantage in cost, weight, and manufacturing volume. Where plastic comes up short is hardness, which is why the plastic lens elements are capped by a glass or sapphire lens to resist scratches.

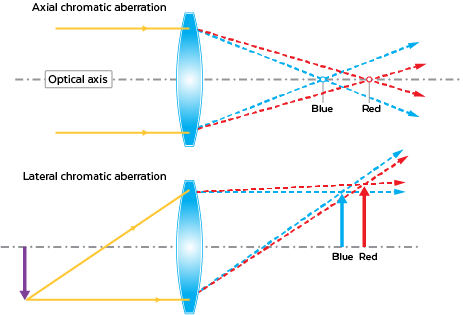

In order to meet thickness requirements and reduce optical aberrations, mobile cameras use lens assemblies with as many as six lens elements. Deficiencies in lens design can produce distortion around the perimeter of the captured image or vignetting which causes images to darken in the corners. Another aberration to watch out for is chromatic fringing. Because the refractive index of a lens varies by wavelength, different colors of light will separate (think prism) and either focus at different distances (axial aberration) or at different spots in the sensor plane (transverse aberration). This results in colored fringes (usually purple since the greatest separation is between red and blue light) around the edges of objects.
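To make the axial case concrete, here is a minimal sketch that estimates how the focal length of a single thin lens changes with color. It assumes a two-term Cauchy dispersion model and the thin-lens form of the lensmaker's equation; the coefficients and surface radii are illustrative values chosen for this example, not data for any real mobile lens.

```python
def cauchy_index(wavelength_um, a=1.5046, b=0.00420):
    """Refractive index from a two-term Cauchy model: n = A + B / wavelength^2.

    A and B are illustrative, roughly crown-glass-like values (assumption).
    """
    return a + b / wavelength_um ** 2


def thin_lens_focal_length_mm(n, r1_mm=4.0, r2_mm=-4.0):
    """Thin-lens lensmaker's equation for a lens in air.

    The surface radii are hypothetical; a symmetric biconvex lens is assumed.
    """
    return 1.0 / ((n - 1.0) * (1.0 / r1_mm - 1.0 / r2_mm))


for name, wavelength_um in (("blue", 0.45), ("green", 0.55), ("red", 0.65)):
    n = cauchy_index(wavelength_um)
    f_mm = thin_lens_focal_length_mm(n)
    print(f"{name}: n = {n:.4f}, focal length = {f_mm:.3f} mm")
```

Because the index is higher at shorter wavelengths, blue light comes to focus slightly closer to the lens than red light, which is the separation that multi-element designs try to cancel.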

Color shading is yet another optical issue, although it’s not actually caused by the lenses. Because CMOS sensors are very sensitive to infrared wavelengths, the lens assembly includes an IR filter. These filters are quite effective, but they’re not perfect. For interference-type filters, the cutoff wavelength depends on the angle of the incoming light rays. Mobile cameras, whose lenses sit close to the image sensor, are especially susceptible to this aberration because of the larger chief ray angle (CRA) values they encounter; the CRA is the angle that a light ray passing through the center of the lens aperture makes with the centerline of the lens assembly. Color shading results in anomalous color variation across the image. The issue may be more noticeable under certain light sources, particularly those that do not have a continuous spectrum, such as LED or fluorescent lights.
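The angle dependence can be approximated with the standard thin-film relation for interference filters, in which the cutoff shifts toward shorter wavelengths as the angle of incidence grows. The sketch below is only an approximation under stated assumptions: the 650nm cutoff and the effective index of 1.7 are hypothetical values, and a real filter stack would need its own measured data.

```python
import math


def shifted_cutoff_nm(cutoff_at_normal_nm, angle_deg, n_eff=1.7):
    """Approximate cutoff wavelength of an interference filter at a given angle.

    Uses lambda(theta) = lambda_0 * sqrt(1 - (sin(theta) / n_eff)^2), with the
    filter in air. n_eff is an assumed effective index for the coating stack.
    """
    s = math.sin(math.radians(angle_deg)) / n_eff
    return cutoff_at_normal_nm * math.sqrt(1.0 - s * s)


# Hypothetical 650 nm IR-cut filter seen at increasing chief ray angles.
for angle_deg in (0, 10, 20, 30):
    print(f"{angle_deg:2d} deg -> cutoff ~ {shifted_cutoff_nm(650.0, angle_deg):.1f} nm")
```

Under these assumptions, pixels near the corners of the sensor see a filter that cuts off noticeably earlier in the red than pixels at the center, which is why the color response varies across the frame.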

Generally speaking, the larger the lens aperture and/or the smaller the focal length (both of which lead to larger CRA), the more lenses are needed to compensate for optical aberrations. The simplest case is a pinhole camera, where the aperture is so small that no lenses are needed. In the most extreme case, a camera with a wide aperture and a large zoom range can have over 15 lens elements. As mobile devices continue to get thinner and mobile cameras try to compensate for smaller CMOS pixels by gathering more light with larger apertures, optical aberrations will become increasingly difficult to avoid.

Based on these trends, the role of the image signal processor (ISP) will continue to grow. The ISP already controls the automatic camera functions, including automatic focus, automatic white balance, and automatic selection of exposure and ISO, along with other essential operations such as demosaicing (reconstructing a full color image by interpolating an RGB value for each pixel). It also handles post-processing algorithms for noise reduction and HDR. As if all this were not enough to keep it busy, the ISP also runs algorithms for correcting imperfections in the lens assembly.
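As a rough illustration of demosaicing, the sketch below fills in the two missing color values at each pixel by averaging the nearest samples of that color. It assumes an RGGB Bayer layout and a simple 3x3 averaging scheme; both are assumptions made for this example, and production ISPs use considerably more sophisticated, edge-aware methods.

```python
def bayer_color(row, col):
    """Color sampled at (row, col), assuming an RGGB Bayer mosaic."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic(raw):
    """Return rows of (R, G, B) tuples from a 2D list of raw sensor values.

    Each missing color is the mean of same-color samples in the 3x3
    neighborhood (clipped at the borders). Expects at least a 2x2 image.
    """
    height, width = len(raw), len(raw[0])
    out = []
    for r in range(height):
        out_row = []
        for c in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            for rr in range(max(0, r - 1), min(height, r + 2)):
                for cc in range(max(0, c - 1), min(width, c + 2)):
                    color = bayer_color(rr, cc)
                    sums[color] += raw[rr][cc]
                    counts[color] += 1
            pixel = {k: sums[k] / counts[k] for k in sums}
            # Keep the value the sensor actually measured at this site.
            pixel[bayer_color(r, c)] = float(raw[r][c])
            out_row.append((pixel["R"], pixel["G"], pixel["B"]))
        out.append(out_row)
    return out
```

For example, `demosaic([[200, 90], [80, 30]])` returns a 2x2 grid of RGB triples, each combining the one measured value at that site with averaged estimates for the other two colors.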

The Exposure Triangle: Aperture, ISO & Shutter Speed

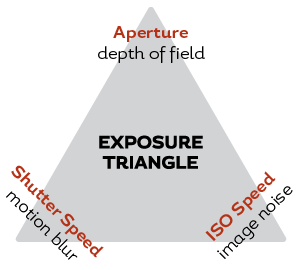

Exposure measures how much light makes it to the camera sensor and helps determine how bright the photograph appears. If a photograph is underexposed, the sensor does not receive enough light and produces an image that’s too dark with a loss of shadow detail. If a photograph is overexposed, the sensor receives too much light and produces an image that’s too bright with a loss of highlight detail. Exposure is affected by three camera settings which form the corners of the exposure triangle: aperture, ISO, and shutter speed.

A good analogy for exposure is collecting rain in a bucket. Unless you’re in a studio, you generally do not have control over illumination, just like you do not have control over the rainfall. What you can control is the diameter of the bucket (aperture), the amount of rain to collect (ISO), and how long the bucket sits in the rain (shutter speed).

Aperture

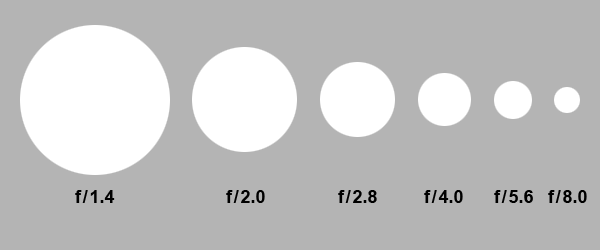

Aperture is specified as an f-number or f-stop value like f/1.9, and it’s equal to the focal length divided by the diameter of the entrance pupil. This means that for a given focal length, an entrance pupil with a larger diameter will let in more light and have a smaller f-stop number, while a smaller diameter that lets in less light will have a larger f-stop number.

Comparing cameras based on f-stop values alone can be tricky. Generally speaking, lower f-stop values are desirable, because they allow more light to reach the camera sensor. However, you cannot compare f-stop values directly and say that an f/1.9 lens lets in 16% more light than an f/2.2 lens. For one thing, the cameras may have different focal lengths. The f-stop value is also nonlinear: Cutting the f-stop value in half quadruples the entrance pupil area. Therefore, we need to calculate the area of the entrance pupils in order to accurately compare the light gathering capability for two different lenses. For example, assuming that the two cameras compared above have the same 3.78mm focal length, then the f/1.9 lens will let in 34% more light than the f/2.2 lens, significantly more than the 16% figure from comparing the f-stop values directly. It’s also conceivable that an f/2.0 lens would let in more light than an f/1.9 lens given a significant difference in focal length. This is why marketing claims about lower f-stop values should be treated with some skepticism.
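The f/1.9 versus f/2.2 comparison can be reproduced with a few lines of arithmetic. This sketch follows the area-based comparison described above: it computes the entrance pupil diameter as focal length divided by f-number, then compares pupil areas. The function names are made up for this example, and the 4.5mm focal length in the second comparison is a hypothetical value.

```python
import math


def entrance_pupil_diameter_mm(focal_length_mm, f_number):
    """Entrance pupil diameter from the definition N = f / D."""
    return focal_length_mm / f_number


def entrance_pupil_area_mm2(focal_length_mm, f_number):
    """Area of a circular entrance pupil."""
    diameter = entrance_pupil_diameter_mm(focal_length_mm, f_number)
    return math.pi * (diameter / 2.0) ** 2


def light_ratio(focal_a_mm, f_number_a, focal_b_mm, f_number_b):
    """Ratio of entrance pupil areas, lens A relative to lens B."""
    area_a = entrance_pupil_area_mm2(focal_a_mm, f_number_a)
    area_b = entrance_pupil_area_mm2(focal_b_mm, f_number_b)
    return area_a / area_b


# Same 3.78 mm focal length, f/1.9 versus f/2.2.
same_focal = light_ratio(3.78, 1.9, 3.78, 2.2)
print(f"f/1.9 vs f/2.2: {(same_focal - 1) * 100:.0f}% more pupil area")

# Hypothetical f/2.0 lens with a longer 4.5 mm focal length versus the f/1.9 lens.
longer_focal = light_ratio(4.5, 2.0, 3.78, 1.9)
print(f"4.5 mm f/2.0 vs 3.78 mm f/1.9: ratio {longer_focal:.2f}")
```

With equal focal lengths the ratio reduces to the square of the f-number ratio, which is where the nonlinearity comes from.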

So, if lower f-stop values are generally better, why not make an f/1.0 or f/0.2 lens? Because, as with other optical parameters, there are tradeoffs. Keeping the focal length constant (which is restricted by the device thickness anyway) and increasing the diameter of the entrance pupil increases the chief ray angle. In our discussion above about camera optics, we saw that large chief ray angles can lead to undesirable optical aberrations. Adding lens elements to the assembly can partially compensate for some of these effects, but the thickness constraint limits how many can be added.

Aperture also affects a photograph’s depth of field (DOF), which is the range of distance from the camera where objects appear to be in focus. Technically, a camera can only focus at a single distance, but because image sharpness decreases gradually to either side of the focusing distance, we perceive objects to remain acceptably sharp over a wider span. Higher f-stop values increase DOF, leading to images where a larger portion of the foreground and background remain in focus. Conversely, lower f-stop values decrease DOF, leading to the bokeh effect where the subject of the photograph appears sharp in stark contrast to the heavily blurred background. Handheld cameras allow a photographer to adjust the aperture to achieve the desired effect. Mobile cameras, however, use fixed-aperture lenses, so the resulting DOF needs to be appropriate for many different scenarios. Lowering the f-stop too far would result in mostly blurry images.
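The relationship between f-stop and DOF can be sketched with the standard hyperfocal-distance formulas. The example below is an approximation under stated assumptions: the 0.003mm circle of confusion is a hypothetical value for a small mobile sensor, and the 3.78mm focal length is borrowed from the aperture comparison earlier.

```python
import math


def hyperfocal_mm(focal_mm, f_number, coc_mm):
    """Hyperfocal distance: H = f^2 / (N * c) + f."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def depth_of_field_mm(focal_mm, f_number, subject_mm, coc_mm=0.003):
    """Return (near_limit, far_limit) of acceptable sharpness in millimeters.

    coc_mm is the circle of confusion; 0.003 mm is an assumed value.
    The far limit is infinite when focused at or beyond the hyperfocal distance.
    """
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2 * focal_mm)
    if subject_mm >= h:
        far = math.inf
    else:
        far = subject_mm * (h - focal_mm) / (h - subject_mm)
    return near, far


# Subject half a meter away, comparing two fixed apertures.
for f_number in (1.9, 2.2):
    near, far = depth_of_field_mm(3.78, f_number, 500.0)
    print(f"f/{f_number}: sharp from {near:.0f} mm to {far:.0f} mm")
```

Under these assumptions the higher f-stop yields a wider in-focus range at the same subject distance, matching the trend described above.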

ISO

A camera’s ISO value determines how sensitive it is to light. Lowering the ISO, or sensitivity, means the camera requires more light to get the proper exposure. The amount of light required is inversely proportional to ISO, so each time ISO is doubled (from 100 to 200, for example), the camera needs only half as much light for the same exposure.

Raising the ISO value increases the amplification gain of the sensor, reducing the number of electrons the pixels need to register a certain luminance value. The penalty, however, is a lower signal-to-noise ratio which increases the amount of visible noise artifacts in the image. Some of this noise can be removed by post-processing algorithms, but increasing the exposure using aperture and/or shutter speed produces better results.
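To see why less light means more visible noise, consider a simplified model where photon shot noise is the only noise source, so the signal-to-noise ratio equals the square root of the number of electrons collected. This is a sketch, not a sensor model: read noise and other sources are ignored, and the 4000-electron figure at ISO 100 is a hypothetical starting point.

```python
import math


def electrons_collected(base_electrons, base_iso, iso):
    """Electrons needed for the same output brightness at a different ISO.

    Doubling ISO halves the light, and therefore the electrons, required.
    """
    return base_electrons * base_iso / iso


def shot_noise_snr(electrons):
    """SNR when photon shot noise dominates: signal / sqrt(signal)."""
    return math.sqrt(electrons)


# Hypothetical pixel that collects 4000 electrons for a mid-gray tone at ISO 100.
for iso in (100, 200, 400, 800, 1600):
    electrons = electrons_collected(4000.0, 100, iso)
    print(f"ISO {iso:4d}: {electrons:6.0f} electrons, SNR ~ {shot_noise_snr(electrons):.1f}")
```

In this model every two stops of ISO (a fourfold increase) cuts the SNR in half, which is why gathering more light through aperture or shutter speed gives cleaner results than raising the gain.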

Shutter Speed

A shutter controls the amount of light entering the camera and there are two types: mechanical and electronic. A mechanical shutter physically blocks the light from reaching the sensor. When a photo is taken, the shutter moves out of the way for the duration of the exposure time. Because of cost and space constraints, mobile devices use electronic shutters. An electronic shutter has no moving parts and does not actually block any light from reaching the sensor. Instead, it resets the pixels to zero and allows them to collect light for a duration of time equal to the shutter speed.

Electronic rolling shutters expose and read the pixels line-by-line, which means that pixels on one side of the sensor are exposed at an earlier time than the pixels on the other side. Depending on the selected shutter speed and the bandwidth of the image sensor, there can be a significant delay during this process, resulting in geometric distortions for moving objects.

A global shutter solves this problem by exposing and reading all of the pixels at the same time, capturing a full frame in one chunk. Global shutters require more circuitry, however, increasing the cost of the sensor. Regardless of the type of shutter used, the longer the exposure, the more light will be allowed in.
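The geometric distortion from a rolling shutter can be estimated with a simple timing model: each row starts its exposure slightly later than the row before it, so an object moving sideways lands at a different horizontal position in each row. The numbers below (1000 rows, a 30ms readout, an object moving 2 pixels per millisecond) are hypothetical, and a global shutter is modeled as a readout time of zero.

```python
def row_start_times_ms(num_rows, readout_time_ms):
    """Exposure start time of each row, assuming evenly spaced line readout."""
    line_time_ms = readout_time_ms / num_rows
    return [row * line_time_ms for row in range(num_rows)]


def horizontal_skew_px(num_rows, readout_time_ms, speed_px_per_ms):
    """Horizontal shift of a moving object between the first and last rows."""
    times = row_start_times_ms(num_rows, readout_time_ms)
    return speed_px_per_ms * (times[-1] - times[0])


rolling = horizontal_skew_px(num_rows=1000, readout_time_ms=30.0, speed_px_per_ms=2.0)
global_shutter = horizontal_skew_px(num_rows=1000, readout_time_ms=0.0, speed_px_per_ms=2.0)
print(f"rolling shutter skew: {rolling:.1f} px")
print(f"global shutter skew:  {global_shutter:.1f} px")
```

A vertical edge on the moving object would appear slanted by roughly that many pixels from top to bottom with the rolling shutter, while the global shutter case shows no skew.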

Completing The Triangle

The combination of aperture, ISO, and shutter speed settings gives the photographer control of exposure and allows for some interesting effects. For instance, using a high shutter speed, along with an ISO increase to maintain brightness, can “freeze” a subject in time, a useful effect when photographing a sporting event or energetic kids. Using very slow shutter speeds (usually one second or more) can create motion blur effects and light streaks. When combined with a tripod or OIS, slower shutter speeds capture low-light photos at a lower ISO value, reducing image noise.
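The tradeoffs among the three settings can be sketched with a simple proportionality: image brightness scales with shutter time and ISO, and inversely with the square of the f-number. The model and the example settings below are assumptions for illustration (a fixed f/2.2 aperture, a 1/30s handheld shot at ISO 800), not measurements from any particular camera.

```python
def relative_brightness(f_number, shutter_s, iso):
    """Relative image brightness, proportional to t * ISO / N^2."""
    return shutter_s * iso / f_number ** 2


def iso_for_match(f_number, shutter_s, target_brightness):
    """ISO needed to reach a target brightness at a given aperture and shutter time."""
    return target_brightness * f_number ** 2 / shutter_s


# Handheld low-light shot on a hypothetical fixed f/2.2 mobile camera.
handheld = relative_brightness(2.2, 1 / 30, 800)

# With a tripod or OIS, a 1/4 s exposure reaches the same brightness at a lower ISO.
steady_iso = iso_for_match(2.2, 1 / 4, handheld)
print(f"1/30 s at ISO 800 matches 1/4 s at ISO {steady_iso:.0f}")

# Freezing motion: halving the shutter time requires doubling the ISO.
action_iso = iso_for_match(2.2, 1 / 60, handheld)
print(f"1/30 s at ISO 800 matches 1/60 s at ISO {action_iso:.0f}")
```

Since aperture is fixed on mobile cameras, shutter time and ISO are the only two corners of the triangle left to trade against each other.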

Unfortunately, aperture is fixed for mobile cameras, reducing the camera’s flexibility. This is another reason to choose a camera with a lower f-stop that allows in more light. You can decrease the amount of light hitting the sensor with shutter speed and reduce the sensor’s sensitivity to light with ISO, but neither can make up for the loss in pupil diameter that results from a higher f-stop.

wtfxxxgp, replying to "Thanks for the informative article. You might have just cured my insomnia.":

Geez. That was a cruel comment! hahaha

Seriously though, that article was very informative for those of us who like to know a bit about everything :)

David_118 Great article. Very Informative. It would be useful, knowing these concepts, if there was a studio that took mobile cameras and used them on some standard set of scenes, either photographing paper with test patterns, or constructed scenes in low light, or whatever, that could measure things like signal-to-noise, HDR performance, optical aberrations, shutter speed, etc. Anyone know of such an outfit?

MobileEditor, replying to David_118's comment above:

There are several labs, such as DxOMark, Image Quality Labs, and Sofica, that perform these tests. We're considering adding some of these tests to our reviews, but they require expensive equipment and software to do it right. Hopefully, we'll be able to expand our camera testing in the future, budget and time permitting.

- Matt Humrick, Mobile Editor, Tom's Hardware

zodiacfml Good job. Finally, someone who really knows about what's being written. The tone and some information though might be too much for someone who has not even heard of shutter speeds or aperture. I also feel there's information overload as each topic/headline deserves its own article including investigation on megapixel/resolution spec.