AMD Fusion: How It Started, Where It’s Going, And What It Means

You've already read about APUs, and maybe you're even using them now. But the road to creating APUs was paved with a number of struggles and unsung breakthroughs. This is the story of how hybrid chips came to be at AMD and where they’re going.

HSA's Big Picture

With all of the focus on APUs and GPGPU, it’s easy to forget that there’s more to life than parallel code. The CPU remains a critical part of heterogeneous computing. Much of the code in modern applications remains serial and scalar in nature and will only run well on strong CPU cores. But even for the CPU, there are different types of workloads. Some loads do best on a few fast cores, while others excel on a larger number of lower-power cores. In both cases, and as mentioned earlier, applications need to be tailored to fit a power envelope for a particular device, whether it’s an all-in-one, notebook, tablet, or phone. As APUs gradually take over most (but not all) of the CPU arena, we’re seeing APUs diversify and segment in order to address these different power requirements. The difference now is that APUs seem likely to soon offer nearly twice the diversity of recent CPU families since they must address both scalar- as well as vector-based needs across device markets.

I attended AMD’s 2012 Fusion Developer Summit (AFDS) in Seattle, and, to my ears, it sounded like the last thing on anyone’s mind was the desktop market. There was a lot of buzz about AMD leveraging HSA to find better roads into the mobile markets. The biggest news was far and away ARM, the leading name in ultramobile processors, joining the HSA Foundation (remember, HSA is agnostic to architecture). This carries significant ramifications in many directions.

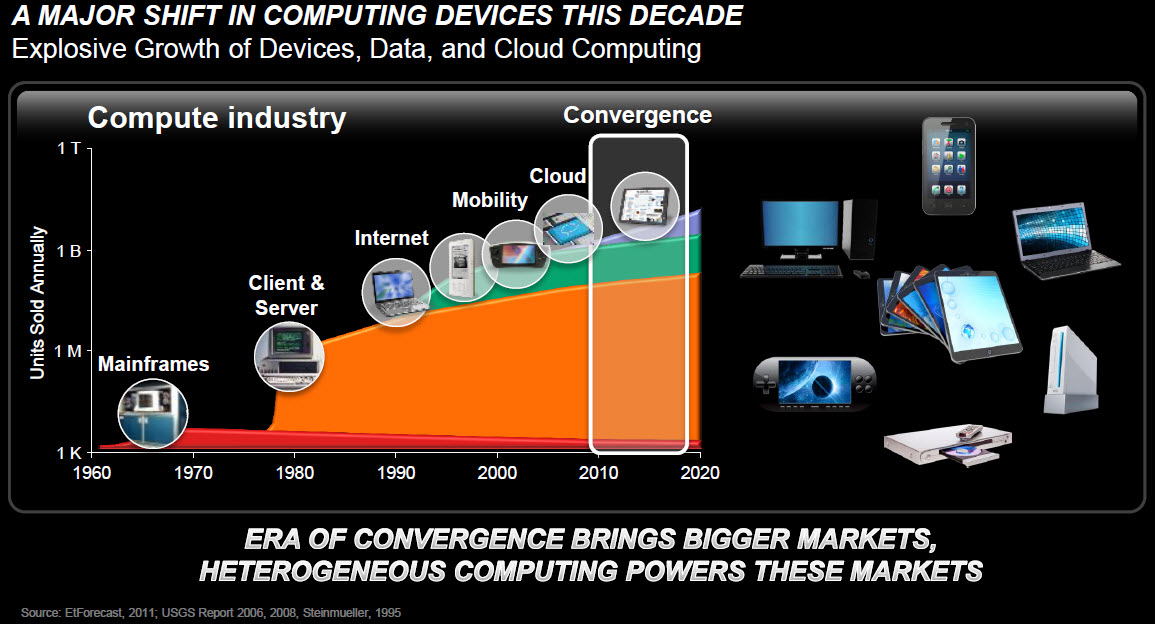

Everybody knows that mobile is hot and desktop is flat, at least in industry sales terms. To me, much of the messaging at AFDS seemed to reflect this, perhaps because desktop is the segment that seems to care least about the power and efficiency benefits HSA promises. So when I was able to sit down with Phil Rogers, I asked him if one of the outcomes of HSA would be a gradually increasing shift by AMD toward battery-powered devices and a leaning away from desktops.

"This is a common misconception," he said. "Power matters a lot on the whole range of platforms. On the battery situation, everybody gets it. Even with desktops, and more and more, what we’re calling desktops are becoming all-in-ones, people want to know not just how fast it runs but how quiet it is and how attractive it is as a product. We’re seeing that even gamers don’t want a box next to their leg pumping hot air on their shins while they’re playing. What they really want is a 30” screen on the wall with a PC built into it that runs fantastic. And in that environment, you do care about power. Even if you don’t care about the electricity bill, you don’t want fans whining and screaming or heating up and taking away clock speed."

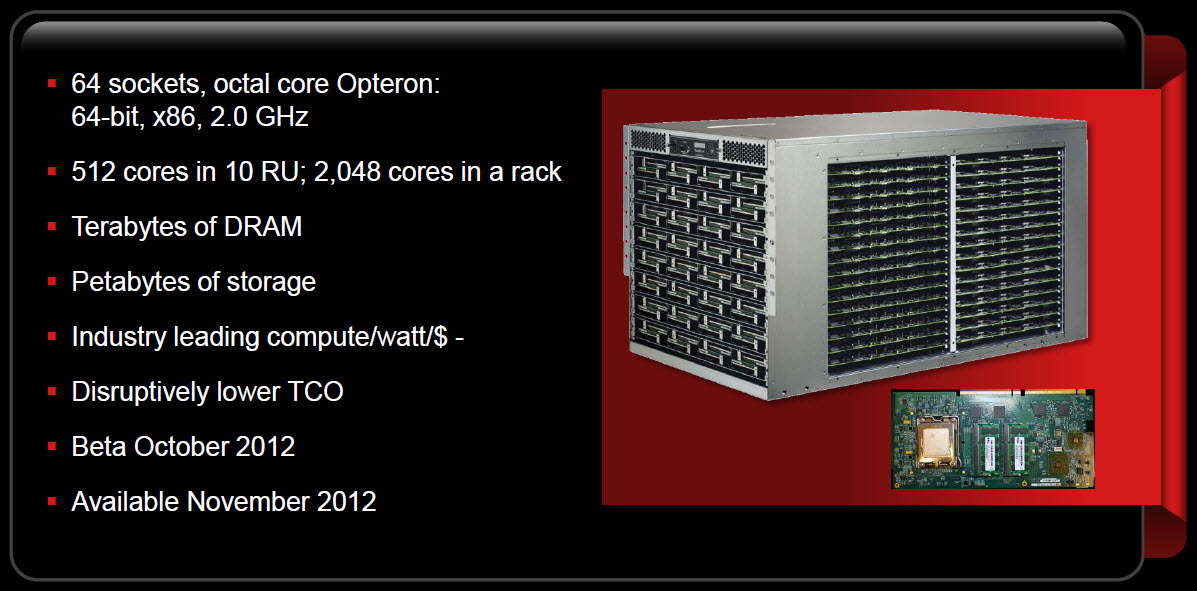

At the other end of the market, servers stand to benefit greatly from HSA. Consider data centers and the continuing growth of cloud computing. With even smallish data centers now hosting more than 10,000 servers each, power efficiency continues to grow in urgency. Generally speaking, hardware accounts for only one-third of a server's total cost over its service life. Another third is spent on electricity, and the remaining third goes toward cooling. If HSA can help improve compute efficiency, allowing systems to complete tasks more quickly so they can turn off large logic blocks or entire cores, then power consumption can decline drastically.
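To put rough numbers on that one-third split, here is a minimal Python sketch. Only the three-way split comes from the paragraph above. The function name, the $9,000 lifetime cost, the 25% power reduction, and the idea that cooling cost falls in direct proportion to power draw are all illustrative assumptions, not figures from AMD or the HSA Foundation.

```python
def lifetime_cost_after_savings(total_cost, power_reduction, cooling_tracks_power=True):
    """Estimate a server's lifetime cost after a fractional cut in power draw.

    Uses the article's rule of thumb: one-third hardware, one-third
    electricity, one-third cooling. Whether cooling scales one-to-one
    with power is an assumption, so it is exposed as a switch.
    """
    if not 0.0 <= power_reduction <= 1.0:
        raise ValueError("power_reduction must be between 0 and 1")

    hardware = total_cost / 3      # unaffected by power savings
    electricity = total_cost / 3   # shrinks with power draw
    cooling = total_cost / 3       # assumed to shrink with power draw

    electricity *= 1 - power_reduction
    if cooling_tracks_power:
        cooling *= 1 - power_reduction

    return hardware + electricity + cooling


# Hypothetical server: $9,000 lifetime cost, 25% lower power draw.
optimistic = lifetime_cost_after_savings(9000, 0.25)
conservative = lifetime_cost_after_savings(9000, 0.25, cooling_tracks_power=False)
print(optimistic, conservative)
```

Under these assumptions, a power cut touches at most two-thirds of the lifetime bill, which is why efficiency gains matter more at data center scale than the purchase price alone would suggest.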

"You can only pack processors so densely," says Hegde. "HSA allows you to process more densely and at a lower power envelope. I don’t need to tell you that CUDA has been doing HPC applications for five years. All those applications are so much easier with HSA because HSA is heterogeneous between GPU and CPU. Nvidia always leans toward the GPU. And certainly, there are some embarrassingly parallel applications out there, but the vast majority of applications are not, including many HPC applications. So don’t think that HSA is just about client; it’s an architecture that spans many platforms."


mayankleoboy1

Won't the OS have to evolve along with HSA to support it? Can unified memory space and DMA be added to Windows just with a newer driver?

With Haswell coming next year, Intel might just beat AMD at HSA. They need to deliver a competitive product.

Reynod

Great article ... well balanced.

I think you were being overly kind about the current CEO's ability to guide the company forward.

Dirk Meyer's vision is what he is currently leveraging anyway.

A company like that needs executive leadership from someone with engineering vision ... not a beancounter from retail sales of grey boxes.

History will agree with me in the end ... life in the fast lane on the cutting edge isn't the place for accountants and generic managers to lead ... it's for a special breed of engineers.


jamesyboy

AMD is the jack-of-all-trades and the master of none. Even the so-called "balancing" that they're supposed to be doing is already being done better by Nvidia, ARM, and now Intel with Medfield. AMD doesn't stand a chance trying to bring an ARM-like balance to the x86 field. I have no idea what they were thinking when they decided that they'd rather be stuck in between mobile and desktop. They have all of this wonderful IP, all those wonderful engineers. I fear that what's best for AMD will be to leave the x86 battlefield altogether, become a company like Qualcomm or Samsung, and leverage their GPU IP into the ARM world. I fear this because a world where Intel is the only option is one that's far worse off for the consumer.

They don't have the efficiency of Ivy Bridge or Medfield, they don't have the power of Ivy Bridge, and they're missing out on this round of the discrete graphics battle (they were ahead by so far, but Nvidia seems to have pulled an ace out of their butt with the 600 series). So what exactly IS AMD doing well? HTPC CPUs? Come on! Adoption of the system they're proposing with HSA is between 5 and 10 years off... and because they moved too early, and won't be able to compete until then, they have to give the technology away for free to attract developers.

Financially, this is a company's (and a CEO's) worst nightmare... they're too far ahead of their time, and the hardware just isn't there yet.

This will end up being just like the tablet in the late '90s and early '00s. It won't catch on for another decade, and another company will spark and take advantage of the transition properly, much to AMD's chagrin.

I'm not sure if it was the acquisition of ATI that made AMD feel like it was forced to do this so early, but they aren't going to force the market to do anything. This work should have been done in parallel while making leaps and bounds within the framework of the current model.

You can't lead from behind.

I've always been a fan of AMD. They've brought me some of the nicest machines I've ever owned... the ones that had me, and still have me, most excited. But I have bought, and always will buy, what's fastest or best at the job I need the rig for. And right now... and for the foreseeable future, AMD can't compete on any platform, in any field, anywhere, at any time.

AMD just bet its entire company, the future of ATI (or what was the lovely discrete line at AMD), the future of their x86 platform, and their manufacturing business all on something that it wasn't sure it would even be around to see. They bet the farm on a dream.

Nonetheless, I disagree that you were being overly kind about the CEO's ability to lead the company. I think you're being overly kind for thinking this company has a viable business model at all. They'll essentially have to become a KIRF (sell products that are essentially a piece o' crud, dirt cheap) of a company to stay alive.

This is mostly me ragin' at the fail. The writer of this article deserves whatever you journalists have for your own version of a Nobel.

This was a seriously thorough analysis, and by far the best tech piece I've seen all year. We need more long-form journalism in the world, for I hear way too many people shouting one-line blurbs with zero understanding of the big picture. But I have to say that, while this article is 98% complete, you missed speaking about the fact that this company is a company... an enterprise that survives only with revenue.

A Bad Day

I really enjoyed this article.

Now, does anyone want to play Crysis in software rendering with max eyecandy?

army_ant7

@jamesyboy:

It's not over until the fat lady sings. As I read your post, I felt that you were missing a (or the) big point of the APU and this article.

It's about how software is developed nowadays and how there is such a huge reserve of potential performance waiting to be tapped into. I could imagine that if future software bites into this "evolution" toward more GPGPU programming, then I would expect a huge jump in performance even on the current, or shall I say currently being phased out, Llano APUs.

Yes, current discrete GPU systems would improve significantly in performance as well, I would think, but to the same degree that APUs would improve, especially with the new technologies to be implemented like unifying memory spaces, etc.? I don't think so.

I'm not saying that you're totally wrong. AMD might end up croaking, but we can't say for certain 'til it happens. Don't you agree? :-) (I'm not picking any fights BTW. Just sharing my thoughts.)

blazorthon (replying to jamesyboy)

Funny, but last I checked, AMD's Radeon 7970 GHz Edition is the fastest single-GPU graphics card for gaming right now, not the GTX 680 anymore. Furthermore, AMD can compete in many markets in both GPU and CPU performance and price. AMD's FX series has great highly threaded integer performance for its price (much more than Intel) and the high-end models can have one core per module disabled to make them very competitive with the i5s and i7s in gaming performance. Going into the low end, the FX-4100 and Llano/Trinity are excellent competitors for Intel. Some of AMD's APUs can be much faster in both CPU and GPU performance than some similarly priced Intel computers, especially in ultrabooks and notebooks where Intel uses mere dual-core CPUs that either lack Hyper-Threading or have such a low frequency that Hyper-Threading isn't nearly enough to catch AMD's APUs. Is this always the case? No, not at all. However, you ignore this when it happens (which isn't rare) and you ignore many other achievements of AMD.

As of right now, there is no retail Nvidia card that has better performance for the money (at least when overclocking is concerned) than some comparably performing AMD cards anymore. The GTX 670 can't beat the Radeon 7950 in overclocking performance and it can't beat the 7950 in price either. The GTX 680 is no more advantageous against the Radeon 7970 and 7970 GHz Edition. I'm not saying that these cards don't compete well or that they don't have great performance for the money (that would be lying), but they don't win outside of power consumption, which, although important, isn't significant enough of an advantage when the numbers are this close.

Whether or not AMD will fail as a company remains to be seen. Maybe they will, maybe they won't. However, if you want to say that they will, then the supporting info that you give should be more accurate.

army_ant7 (replying to blazorthon)

Interesting. I didn't know that. :-) Is this generally true about the whole GCN lineup vs. the whole Kepler lineup? I'm talking about overclocking performance, of course, since by default the high-end Nvidia cards are more recommended, well, at least the GTX 670. :-)