Intel Xeon and AMD Opteron Battle Head to Head

Memory Interface

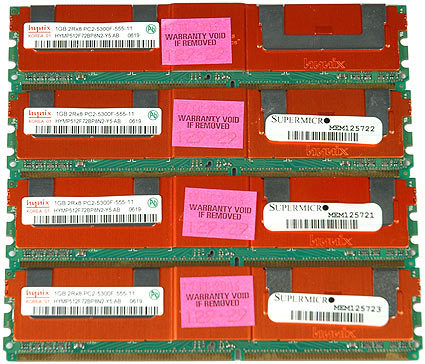

Our Intel test system uses four 1 GB DDR2-667 FB-DIMMs. The theoretical throughput for this setup is 21.4 GB/s with the 1333 MHz FSB Woodcrest, and 17.4 GB/s with the 1066 MHz FSB Dempsey processor.

Our AMD system has four 1 GB DDR-400 modules, so its theoretical throughput should be 2 x 6.4 GB/s, since each CPU has its own integrated memory controller. The next-generation Opteron, based on the AM2 platform, makes use of DDR2-800, which theoretically doubles the throughput.
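The figures above can be roughly reproduced with simple arithmetic: transfers per second, times bytes per transfer, times the number of parallel channels. The sketch below is only an illustration. It assumes a 64-bit (8-byte) data path per channel, four FB-DIMM channels and two independent front-side buses on the Intel platform, and dual-channel memory per Opteron socket. None of those details are spelled out in the text above, and the function and variable names are made up for this example.

```python
def peak_bandwidth_gbs(mega_transfers, channels=1, bytes_per_transfer=8):
    """Theoretical peak throughput in GB/s (1 GB = 10**9 bytes).

    mega_transfers: nominal data rate in millions of transfers per second
    channels: number of parallel channels or buses (assumed, see lead-in)
    bytes_per_transfer: width of one channel in bytes (assumed 64-bit)
    """
    return mega_transfers * 1e6 * bytes_per_transfer * channels / 1e9


# Intel side: assumed four FB-DIMM channels and two independent FSBs.
fbdimm_ddr2_667 = peak_bandwidth_gbs(667, channels=4)
fsb_1333 = peak_bandwidth_gbs(1333, channels=2)
fsb_1066 = peak_bandwidth_gbs(1066, channels=2)

# AMD side: assumed dual-channel memory per socket.
ddr_400_per_socket = peak_bandwidth_gbs(400, channels=2)
ddr2_800_per_socket = peak_bandwidth_gbs(800, channels=2)

results = [
    ("4x DDR2-667 FB-DIMM channels", fbdimm_ddr2_667),
    ("2x 1333 MHz FSB", fsb_1333),
    ("2x 1066 MHz FSB", fsb_1066),
    ("DDR-400 dual channel, per socket", ddr_400_per_socket),
    ("DDR2-800 dual channel, per socket", ddr2_800_per_socket),
]
for label, value in results:
    print(f"{label}: {value:.1f} GB/s")
```

Under these assumptions the 1333 MHz configuration comes out at roughly 21.3 GB/s and each DDR-400 Opteron socket at 6.4 GB/s, in line with the numbers quoted above. The 1066 MHz bus works out to about 17.1 GB/s, slightly below the 17.4 GB/s quoted. All of these are peak values; sustained throughput in practice is lower.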

The Opteron's integrated memory controllers (IMC) result in much lower memory latency, while Intel's answer is a larger, shared 4 MB L2 cache, which greatly reduces average memory access time. Which of these two methods is superior is controversial.

The advantage of Intel's approach is that keeping the memory controller in the chipset gives it greater flexibility as to what type of memory can be used, whereas supporting a different memory type with an integrated memory controller would require a redesign of the CPU. Given that Intel's Xeon processors share the same architecture as its desktop CPUs, it is more efficient to have one core architecture that can be used with standard DDR2 as well as the servers' FB-DIMM modules. The fact that cache uses less power than an integrated memory controller is also a plus in a market where power consumption is a significant factor. Having said this, it is well known that an IMC results in significant performance gains, but is it worth the additional cost and power requirements? Intel has decided that it is not.
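One common way to reason about this trade-off is the textbook average memory access time (AMAT) formula: hit time plus miss rate times miss penalty. The sketch below uses it to show how a lower cache miss rate can offset a higher memory latency, and vice versa. Every number in it is a hypothetical placeholder chosen for illustration, not a measurement of either platform, and the function names are invented for this example.

```python
def amat_ns(hit_time_ns, miss_rate, miss_penalty_ns):
    """Average memory access time: hit time + miss rate * miss penalty."""
    return hit_time_ns + miss_rate * miss_penalty_ns


def break_even_miss_rate(hit_time_ns, miss_penalty_ns, target_amat_ns):
    """Miss rate at which a design reaches the given target AMAT."""
    return (target_amat_ns - hit_time_ns) / miss_penalty_ns


# HYPOTHETICAL values, not benchmark results:
# design A = larger cache (fewer misses), memory reached through the chipset
# design B = smaller cache (more misses), integrated memory controller
design_a = amat_ns(hit_time_ns=5.0, miss_rate=0.02, miss_penalty_ns=100.0)
design_b = amat_ns(hit_time_ns=5.0, miss_rate=0.04, miss_penalty_ns=60.0)

# Miss rate design B would need in order to match design A's average.
needed_b = break_even_miss_rate(5.0, 60.0, design_a)

print(f"design A: {design_a:.2f} ns average")
print(f"design B: {design_b:.2f} ns average")
print(f"design B break-even miss rate: {needed_b:.3f}")
```

With other placeholder values the comparison can just as easily tip the other way, which is one reason the question does not have a single answer: the outcome depends on how well a given workload fits in cache.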
