Is This Even Fair? Budget Ivy Bridge Takes On Core 2 Duo And Quad

Reader requests affect much of the work we do, and we constantly receive email asking for this one: compare Intel's older Wolfdale- and Yorkfield-based designs against today's budget-friendly Ivy Bridge-based processors. Well, you asked, and we deliver.

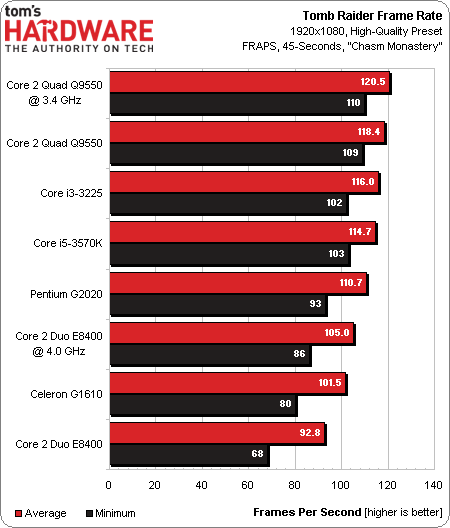

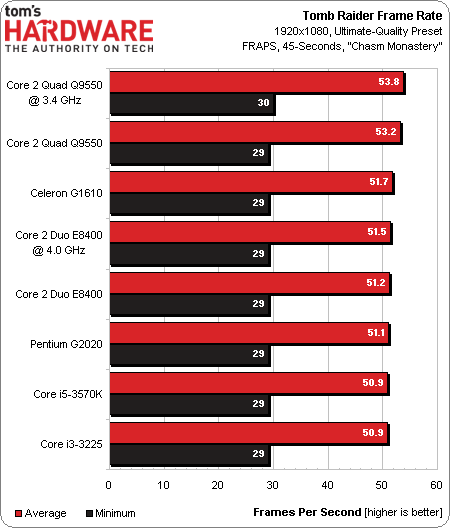

Results: Tomb Raider

Tomb Raider, one of AMD’s latest Gaming Evolved titles, is in my opinion one of the biggest hits thus far in 2013. It takes powerful graphics hardware to deliver playable performance using Ultimate quality settings, which enable realistic TressFX hair. Having dumped quite a bit of time into both playing the game and analyzing its performance, I felt it was beneficial to test two different game levels for today’s story.

Our 45-second custom run of the “Chasm Monastery” level contains a short cinematic that punishes graphics processors far more forcefully than the built-in benchmark, although mostly when TressFX hair is enabled. This manual run-through is easily repeatable and great for testing graphics cards.

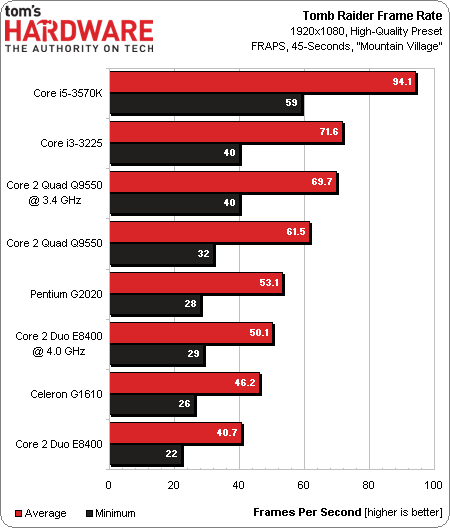

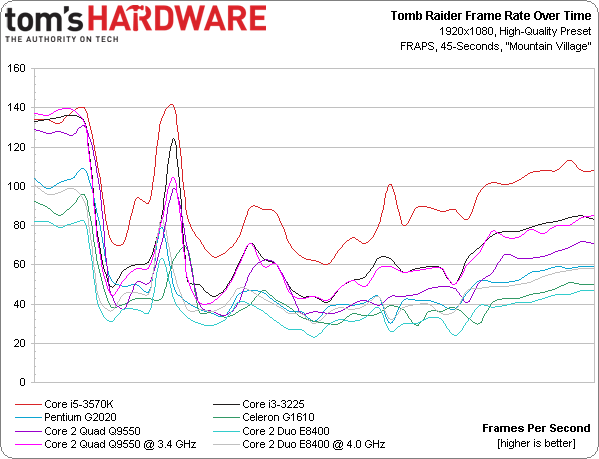

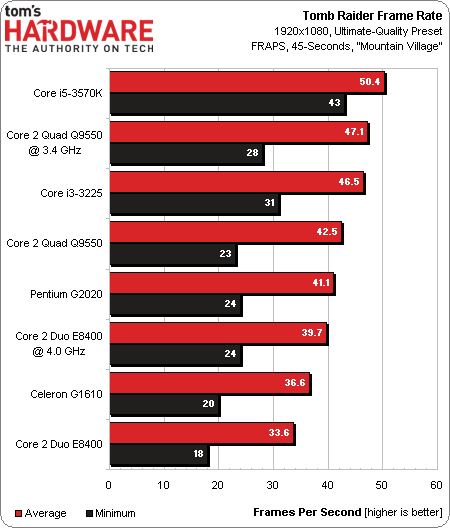

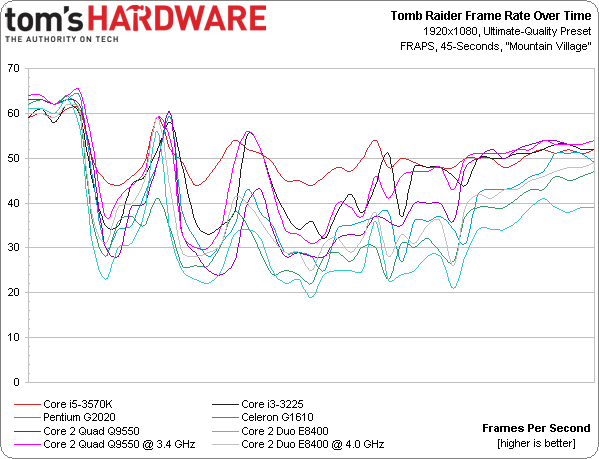

Of course, as with many games, the hardware demands fluctuate from one map to the next. Outdoor areas encountered in the “Mountain Village” level offer a far better look at the game’s CPU demands. You’ll see less of this one on Tom’s Hardware, as it requires more user control and also overwrites the saved game, requiring somewhat tedious save slot juggling before each run. But used together, these two benchmarks provide a worst-case look at both the game’s CPU and GPU requirements.

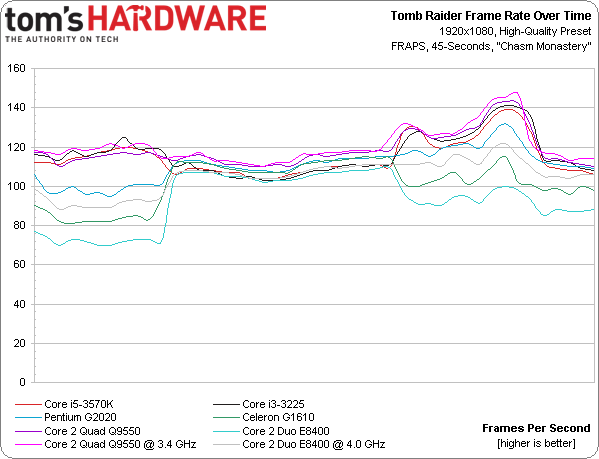

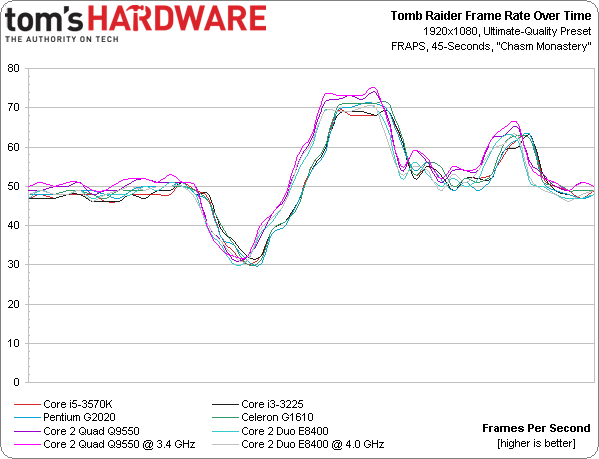

Only normal hair effects are enabled at the High quality preset, and that flat area in the middle of our line graphs, where the dual-core processors receive a performance boost, is the cinematic sequence. All of our tested dual-core processors fly through this part of the game, yielding similar performance.

However, frame rates plummet once we step outdoors and overlook “Mountain Village”. This performance hit is most noticeable on the dual-core processors, and at times the Core 2 Duo E8400 makes it difficult to precisely control Lara’s maneuvers.

TressFX hair enabled by the Ultimate quality preset completely changes the flat cinematic portion of our run, and the mighty Radeon HD 7970 drops to 30 FPS, no matter the processor pairing. Once the camera zooms off of Lara, frame rates spike before control is handed back to the user. Similar cinematic sequences are unavoidable, and a big part of the game, which is why we’re taking the time to demonstrate this behavior within a CPU shootout.

Without a doubt, it takes powerful graphics hardware to crank out Ultimate details, but parts of this game really smack the processor as well. Game play is adversely affected by our two slowest dual-core chips; the Core i3 and overclocked Q9550 are about the least I’d want when playing through these areas of the game. But it’s the Core i5-3570K that earns respect for delivering far more consistent frame rates.
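For readers who want to put a number on "consistent" with their own hardware, here is a minimal Python sketch. It assumes you have logged per-frame render times in milliseconds with a capture tool of your choice. The function name `summarize_run` and the sample values are hypothetical and are not taken from our test data or our tooling. The idea is simply that two runs can share nearly the same average frame rate while one of them has much worse slow frames.

```python
def summarize_run(frame_times_ms):
    """Summarize one benchmark run from per-frame render times (milliseconds).

    Hypothetical helper for illustration; not the method used for the charts.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    # Average FPS is frames rendered divided by total elapsed seconds.
    total_seconds = sum(frame_times_ms) / 1000.0
    avg_fps = len(frame_times_ms) / total_seconds

    # The single slowest frame gives the instantaneous minimum frame rate.
    min_fps = 1000.0 / max(frame_times_ms)

    # 99th-percentile frame time, nearest-rank method with integer math:
    # rank = ceil(0.99 * n), then take that element of the sorted list.
    ordered = sorted(frame_times_ms)
    rank = (99 * len(ordered) + 99) // 100
    p99_frame_ms = ordered[rank - 1]

    return {"avg_fps": avg_fps, "min_fps": min_fps, "p99_frame_ms": p99_frame_ms}


# Made-up sample data: a perfectly steady run and one with two slow frames.
steady = [20.0] * 100
spiky = [20.0] * 98 + [50.0] * 2

for name, run in (("steady", steady), ("spiky", spiky)):
    stats = summarize_run(run)
    print(name, {key: round(value, 1) for key, value in stats.items()})
```

In this made-up example, the averages differ by less than 2 FPS, yet the spiky run's 99th-percentile frame time is 50 ms versus 20 ms. That kind of gap is what you feel as stutter when trying to control Lara.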


ASHISH65: Wow! this is the review i am waiting from long time. Really good one for budget gamers.

DarkSable: Now this is cool stuff.

Also, amoralman, did you read this? It's basically assuring you that your C2D is still awesome as a budget processor.

Steelwing: Very nice review! I've got a C2D E6600 (2.4 GHz) and had been considering the Core i5-3570K (or possibly wait for a Haswell i5) and was wondering about the performance differences. My CPU is still good for a lot of apps, but I can definitely see a reason to upgrade.

AMD Radeon: pentium dual core G2020 is the minimum i can recommend to budget gamers. i often listed it in sub 450 gaming PC

lpedraja2002: Excellent article, I'm glad I have a more accurate idea on where I stand based on CPU performance, I'm still using my trusty Q6600, G0 @ 3.2ghz. Its good that Tom's still hasn't forgotten that a lot of enthusiast still are rocking Core 2 architecture lol. I think I can manage until Intel releases their next revolutionary CPU.

assasin32: I been wanting to see one of these for a long time but never thought I get to see it. I just wish they had the good ol e2160, and q6600 thrown into the mix. I have the e2180 OC to 3ghz. It's still chugging along surprisingly enough, I just realized how old the thing was last night after thinking about how long I've had this build and looking up when the main components were produced. Safe to say I got my use out of that $70 cpu, did a 50% OC to it :) and it still had room to go but I wanted to keep the voltage very low.

jrharbort: I've always been curious about how well my own Core 2 Duo P8800 (45nm & 2.66GHz) would stand up against modern ivy bridge offerings. And even though I'm talking about the mobile space, I'm guessing the gains would be comparable to those seen by their desktop counterparts. Each day I'm reminded more and more that I seriously need to move on to a newer system, especially since I work with a lot of media production software. Thanks for the article, it provided some interesting and useful insight.

smeezekitty: Kind of interesting that the old Core 2s beat the I5 in tombrader with TressFX on.

Also holy crap on 1.45 vcore on the C2D

Proximon: I would not have predicted this. Not to this extent. I hope we can make these broader comparisons across years more frequently after this. I predict this will be a very popular article.