High-Powered Web Servers from HP and IBM

Water cooling for Web 2.0

Using less power to start with means the iDataPlex needs less cooling, again up to 40% less. Add the optional water-cooled rear-door heat exchanger and you can do away with air conditioning altogether. The exchanger fits on the back of the rack because that face uses the rack's longest dimension (47”), giving more length for heat exchange, and McKnight says it’s far more efficient than cooling the air.

“In a data center, the air conditioning is 50 feet away, so you blow cool air at great expense of energy under the floor, past all the cables and floor tiles,” McKnight said. “It’s like painting by taking a bucket of paint and throwing it into the air.”

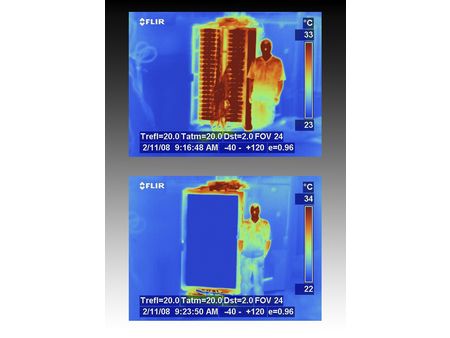

The heat exchanger pipes the chilled water or refrigerant that’s already in the air conditioning system directly to the rack through a hose, saving 20% to 60% in cooling costs. McKnight set up an iDataPlex rack in his lab with each server drawing around 390W, for a total of 32kW dissipated by the rack. The temperature was 72 degrees Fahrenheit at the front of the rack and 130 degrees behind it.
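The lab figures are easy to sanity-check. The sketch below is back-of-the-envelope arithmetic on the numbers McKnight quotes, not IBM's sizing method: it assumes every server draws the same ~390W and ignores switches, fans and power-supply losses. It counts how many such servers fit under the 32kW the exchanger handled and converts the quoted Fahrenheit temperatures to Celsius.

```python
# Back-of-the-envelope check of the lab figures quoted above.
# Assumption: every server draws the same ~390 W; switches, fans and
# power-supply losses are ignored.

RACK_BUDGET_W = 32_000  # total rack load the rear-door exchanger handled
SERVER_W = 390          # approximate draw per server in McKnight's test


def servers_within_budget(budget_w: int, server_w: int) -> int:
    """Largest whole number of servers whose combined draw stays within the budget."""
    return budget_w // server_w


def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert a Fahrenheit reading to Celsius."""
    return (temp_f - 32) * 5 / 9


if __name__ == "__main__":
    n = servers_within_budget(RACK_BUDGET_W, SERVER_W)
    print(f"{n} servers x {SERVER_W} W = {n * SERVER_W} W")  # 82 servers x 390 W = 31980 W
    for where, temp_f in [("front of rack", 72), ("behind rack", 130)]:
        print(f"{where}: {temp_f} F = {fahrenheit_to_celsius(temp_f):.1f} C")
```

Roughly 82 servers at 390W each come to just under 32kW, so the per-server and per-rack figures are consistent with each other; the rack ran from about 22°C at the front to about 54°C behind it.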

“We turned on the liquid to cool the exchanger, and in 20 seconds the temperature at the rear of the rack dropped to about 60 degrees, which means the exchanger is cooling the environment around it,” McKnight said. “We had 10,752 servers being cooled entirely by the heat exchanger, and as long as you keep the total rack power at or below 32kW, you can remove all your air conditioning. Compare that to a 1U rack system with the same number of servers at 52kW per rack, and you can have 14 air conditioning units that cost $100,000 each and still be unable to cool those servers.”
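McKnight's rule of thumb is a simple threshold: a rack at or below 32kW can be cooled by the rear-door exchanger alone, while the 52kW 1U rack cannot. The sketch below encodes that threshold and, purely as an illustration, estimates the chilled-water flow needed to carry each load away using the standard heat-balance relation Q = m_dot × c_p × ΔT. The 10 K water temperature rise is a hypothetical value chosen for the example, not an IBM specification, and the flow figure says nothing about the exchanger's real capacity.

```python
# Illustrative check of McKnight's 32 kW rule, plus a rough water-flow estimate.
# Assumptions (hypothetical, for illustration only): water specific heat of
# ~4186 J/(kg*K), ~1 kg of water per litre, and a 10 K rise in water
# temperature across the exchanger.

EXCHANGER_LIMIT_KW = 32.0     # McKnight: at or below this, no room air conditioning needed
WATER_CP_J_PER_KG_K = 4186.0  # specific heat capacity of water
ASSUMED_DELTA_T_K = 10.0      # hypothetical inlet-to-outlet temperature rise


def exchanger_alone_suffices(rack_kw: float, limit_kw: float = EXCHANGER_LIMIT_KW) -> bool:
    """True if the rack load is at or below the quoted exchanger limit."""
    return rack_kw <= limit_kw


def water_flow_litres_per_min(rack_kw: float, delta_t_k: float = ASSUMED_DELTA_T_K) -> float:
    """Flow needed to absorb rack_kw of heat, from Q = m_dot * c_p * delta_T."""
    mass_flow_kg_s = rack_kw * 1000.0 / (WATER_CP_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_s * 60.0  # kg/s -> litres per minute at ~1 kg per litre


if __name__ == "__main__":
    for name, kw in [("iDataPlex rack", 32.0), ("1U rack", 52.0)]:
        print(f"{name}: {kw} kW, exchanger alone: {exchanger_alone_suffices(kw)}, "
              f"water needed: {water_flow_litres_per_min(kw):.1f} L/min")
```

Under these assumptions the 32kW rack needs roughly 46 litres of water a minute; the point is only that heat removal scales linearly with rack power, which is why a hard per-rack ceiling makes sense.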

This is different from water-cooling a desktop or gaming PC, whether with a closed loop of liquid that acts as a heat sink and draws heat away by contact or with a spray-cooling device, because those systems cool only the CPU, usually to allow overclocking. You still need fans to cool components like memory and drives, and you still need to cool the air that the fans draw over those components; the water cooling just compensates for the disproportionate amount of heat an overclocked processor produces, which is more than the fans are designed to cope with. Running the cooling into the individual iDataPlex servers wouldn’t make sense: plumbing every single server and CPU would cost more without being significantly more efficient.

If water cooling is so efficient, why isn’t it used more often in servers? “The biggest issue is an emotional one,” McKnight said. “People say ‘I don’t want water in my data center,’ but you already have water near that data center for the air conditioning, and you may have water on the floor above for the fire extinguishers. Time and time again I’ve spoken to people who have had water damage in their data center, even when they say they don’t have water in the data center.”

Servers Like Cookie Sheets

With the back of the iDataPlex reserved for the heat exchanger, everything in the rack has to be serviced from the front. To make that easier, the power supplies and fans attach directly to the rack chassis, and the servers slide in and out like baking trays in an oven, held in place by two thumb levers. 1U servers are often called pizza boxes, and IBM has nicknamed these “cookie sheets.” The fans are grouped into packs of four, with a single power connector and latch per pack. In 1U servers, fans are usually replaced individually, so each fan needs its own power connector, latch mechanism and rail.


“If you’re buying 10,000 servers, with up to eight fans in a 1U server, you’re spending a lot of money up front for the opportunity to replace a single fan,” McKnight said. “Leaving out the extra power connectors makes the servers cheaper and makes up for having to replace all four fans if one fails. After enough cookie sheets and fans have failed to make it worthwhile, you can return them to IBM for servicing and replacement.”
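The trade-off McKnight describes is largely a matter of counting parts. The sketch below compares how many fan connectors (each with its latch and rail) a fleet needs when every fan is individually replaceable versus when fans come in packs of four. It assumes, hypothetically, the same total number of fans in both designs, using the "up to eight fans" per 1U server from the quote; the article does not say how many fan packs an iDataPlex rack actually holds.

```python
# Counting fan connectors: individually replaceable fans vs. packs of four.
# Hypothetical assumption: both designs use the same total number of fans.

FANS_PER_1U_SERVER = 8  # upper figure McKnight gives for a 1U server
FANS_PER_PACK = 4       # iDataPlex groups fans in packs with one connector and latch


def connectors_needed(total_fans: int, fans_per_unit: int) -> int:
    """One power connector, latch and rail per replaceable unit (ceiling division)."""
    return -(-total_fans // fans_per_unit)


def compare(servers: int) -> dict[str, int]:
    """Connector counts and fans swapped per failure for both designs."""
    total_fans = servers * FANS_PER_1U_SERVER
    return {
        "total_fans": total_fans,
        "individual_connectors": connectors_needed(total_fans, 1),
        "pack_connectors": connectors_needed(total_fans, FANS_PER_PACK),
        # The cost of packs: one failed fan means replacing the whole pack.
        "fans_swapped_per_failure_individual": 1,
        "fans_swapped_per_failure_pack": FANS_PER_PACK,
    }


if __name__ == "__main__":
    print(compare(10_000))
```

For 10,000 servers, packs cut the connector count from 80,000 to 20,000, at the price of swapping four fans whenever one fails, which is the bargain McKnight describes.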

The trays that the cookie sheets fit into can hold a motherboard, disks or PCI connectors for plug-in cards. The motherboards use industry-standard SSI form factors, so although IBM offers only Intel quad-core CPUs today, it can offer AMD chips like Barcelona if demand for them returns. Disks can be 3.5” SAS, 3.5” SATA or twin 2.5” SAS drives for hot swapping, with software or hardware RAID. The drives attach directly to the servers, with up to eight drives per server, and the rack provides Ethernet, InfiniBand or iSCSI connections. The iDataPlex rack has 16U of vertical space for switches between the columns of servers, so you’re not sacrificing server space.
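The per-server options above map naturally onto a small data model. The sketch below is a hypothetical way to represent one server's drive, RAID and network choices and to enforce the eight-drive limit; the class, enum and field names are illustrative inventions and do not come from any IBM configuration tool.

```python
# Hypothetical data model for the per-server options listed above.
# Names are illustrative only; this is not IBM's configurator.
from dataclasses import dataclass, field
from enum import Enum

MAX_DRIVES_PER_SERVER = 8  # "up to eight drives connected to each server"


class Drive(Enum):
    SAS_3_5 = '3.5" SAS'
    SATA_3_5 = '3.5" SATA'
    SAS_2_5 = '2.5" SAS (hot-swap)'


class Raid(Enum):
    SOFTWARE = "software"
    HARDWARE = "hardware"


class Fabric(Enum):
    ETHERNET = "Ethernet"
    INFINIBAND = "InfiniBand"
    ISCSI = "iSCSI"


@dataclass
class ServerConfig:
    drives: list[Drive] = field(default_factory=list)
    raid: Raid = Raid.SOFTWARE
    fabric: Fabric = Fabric.ETHERNET

    def validate(self) -> None:
        """Raise ValueError if the configuration exceeds the eight-drive limit."""
        if len(self.drives) > MAX_DRIVES_PER_SERVER:
            raise ValueError(
                f"{len(self.drives)} drives requested; at most "
                f"{MAX_DRIVES_PER_SERVER} per server"
            )


if __name__ == "__main__":
    ok = ServerConfig(drives=[Drive.SAS_2_5] * 2, raid=Raid.HARDWARE, fabric=Fabric.INFINIBAND)
    ok.validate()  # passes silently
    try:
        ServerConfig(drives=[Drive.SATA_3_5] * 9).validate()
    except ValueError as err:
        print(err)
```

Keeping each choice as an enum makes invalid combinations easy to reject in one place; a real configurator would add the tray-level rules (motherboard, disk or PCI trays) that the article mentions but does not detail.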

These building blocks can be combined into 22 different iDataPlex configurations. IBM pre-assembles the systems, which McKnight claims saves significantly on the cost of configuring and installing commodity servers in racks, on top of a price 25% lower than an equivalent system built from 1U servers. “The system comes configured and pre-built,” McKnight said. “You’re not spending time unboxing servers and fixing them into the racks with screw guns and cabling them up and then spending money to debug any cabling faults.”


pelagius: No offense, but this article is VERY poorly edited. It's almost to the point where it's hard to read.

"then IBM can off them." I assume you meant to say that IBM can offer them. There are a lot more mistakes as well...

This article is full of factual errors. The IBM servers aren't "turned sideways". They're simply shallow-depth servers in a side-by-side configuration. They're still cooled front to back like a traditional server. The entire rack's power consumption isn't 100 watts. It's based on configuration and could easily run 25-30kW. And comparable servers don't necessarily draw more power. IBM has simply cloned what Rackable Systems has been doing for the past 8 years. Dell and HP have also caught on to single-power-supply, non-redundantly configured servers over the past few years. IBM certainly has a nice product, but it's not revolutionary.


You might want to remind your readers that Salesforce.com made their switch right after Michael Dell joined their board... their IT folks think Dell's quality is horrible, but they were forced to use them.


It seems that Salesforce.com needs to do some research on blade systems other than Dell. HP and IBM both have very good blade solutions:

A. IBM and HP blades take less power and cooling than 14-16 1U servers.

B. Most data centers can only get 10 1U servers per rack right now because power is constrained.

It's Salesforce that just blindly buys crappy gear and then justifies it by saying, well, blades don't have the best technology, so I will go and waste millions on Dell's servers (way to help out your biz).

If they would just say "I am too lazy to do any real research, and Dell buys me lunch, so I buy hardware from them," it would be truthful, and then we would not get to blog about how incorrect his statements are.