Benchmarked: How Well Does Watch Dogs Run On Your PC?

Watch Dogs is one of the most anticipated games of 2014. So, we're testing it across a range of CPUs and graphics cards. By the end of today's story, you'll know what you need for playable performance. Spoiler: this game is surprisingly demanding!

Results: Low Detail, 1280x720

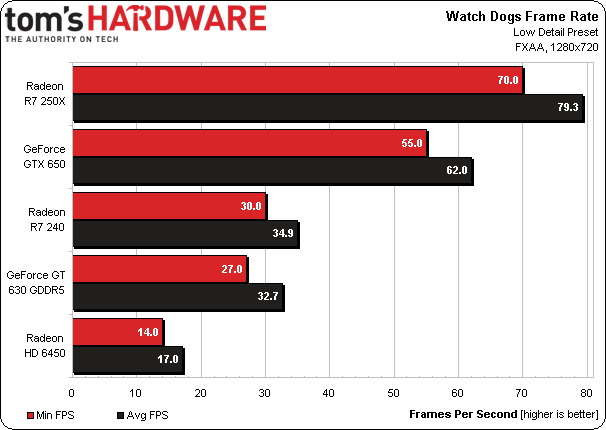

Our first tests were run on low-end graphics cards, ranging from the Radeon HD 6450 and GeForce GT 630 GDDR5 up to the Radeon R7 250X and GeForce GTX 650. We chose the minimum detail settings coupled with FXAA at 1280x720.

Watch Dogs is unplayable on the Radeon HD 6450, but the GeForce GT 630 GDDR5 and Radeon R7 240 squeeze out passable performance with 27- and 30-FPS minimums, respectively. AMD's Radeon R7 250X leads the budget pack, never dropping below 70 FPS.

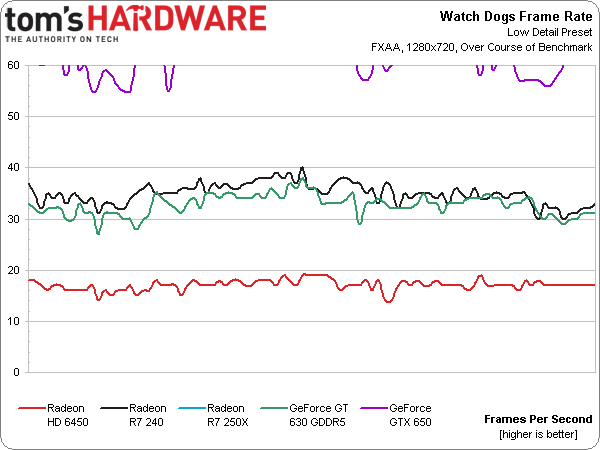

Frame rates over time are fairly consistent with this class of graphics card. Nvidia's GeForce GTX 650 barely drops below the 60 FPS threshold, and the Radeon R7 250X remains above this chart's upper bound.

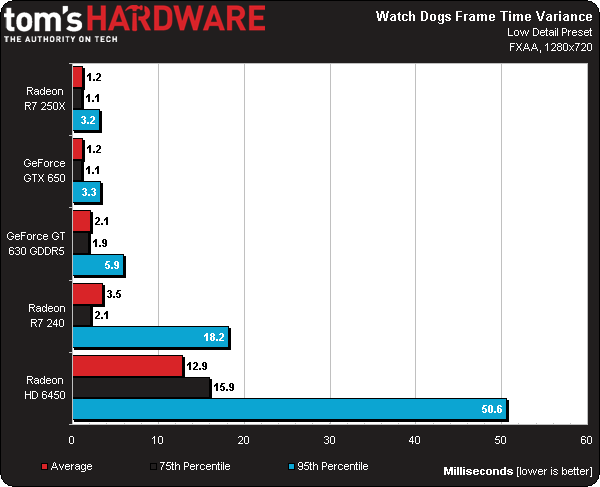

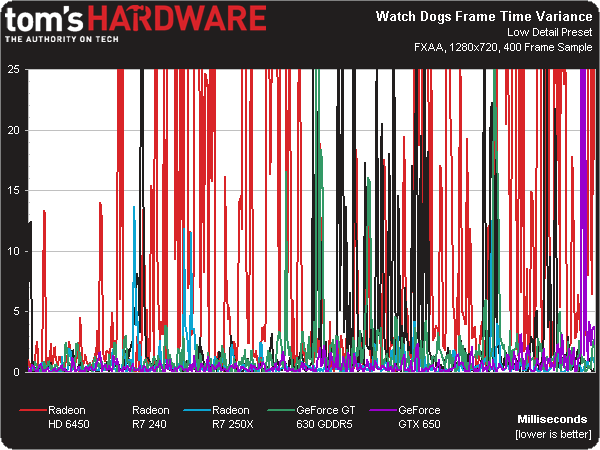

There's a significant amount of frame time variance, which manifests as occasional stutters on the affected cards. AMD's Radeon HD 6450 is affected most, though the Radeon R7 240 also suffers compared to the competition.
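To make the link between frame time variance and stutter concrete, here is a minimal Python sketch. It assumes a hypothetical list of per-frame render times in milliseconds, which is made-up illustrative data and not output from our test runs, and it uses the change between consecutive frame times as one common way to quantify variance. The function and key names are our own for illustration.

```python
from statistics import mean


def frame_stats(frame_times_ms):
    """Summarize a capture of per-frame render times given in milliseconds."""
    if len(frame_times_ms) < 2:
        raise ValueError("need at least two frames to measure variance")

    # Average frame rate over the whole capture.
    avg_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)

    # Slowest single frame, expressed as an instantaneous frame rate.
    # (This is not the same as a per-second minimum FPS figure.)
    worst_frame_fps = 1000.0 / max(frame_times_ms)

    # Change in render time from one frame to the next. Large jumps
    # are what show up on screen as stutter.
    deltas = [abs(b - a) for a, b in zip(frame_times_ms, frame_times_ms[1:])]

    return {
        "avg_fps": avg_fps,
        "worst_frame_fps": worst_frame_fps,
        "mean_delta_ms": mean(deltas),
        "max_delta_ms": max(deltas),
    }


# Hypothetical capture: five quick frames and one 50 ms hitch.
hitchy = frame_stats([10.0, 10.0, 10.0, 50.0, 10.0, 10.0])
print(hitchy)
```

The point of the sketch is that a single long frame barely dents the average frame rate, yet it dominates the frame-to-frame delta, which is why a card can post a reasonable average and still feel uneven.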

Don Woligroski is a former senior hardware editor for Tom's Hardware. He has covered a wide range of PC hardware topics, including CPUs, GPUs, system building, and emerging technologies.

coolcole01 Running on my system with ultra and highest settings and fxaa it is pretty steady at 60-70 fps with weird drops randomly almost perfectly to 30 then up to 60, almost like adaptive sync is on. Currently playing it with the texture at high and HBAO+ and SMAA and it's a pretty rock steady 60fps with vsync, still with the random drops.

edwinjr why no core i5 3570k in the cpu benchmark section?

the most popular gaming cpu in the world.

chimera201 So a Core i5 is enough compared to Ubisoft's recommended system requirement of i7 3770

jonnyapps What speed is that 8350 tested at? Seems silly not to test OC'd as anyone on here with an 8350 will have it at at least 4.6

Patrick Tobin Most 780Ti cards come with 3GB of ram, the Titan has 6GB. This is an unfair comparison as the Titan has more than ample VRAM. Get a real 780Ti or do not label it as such. HardOCP just did the same tests and the 290X destroyed the 780 since the FSAA + Ultra textures started causing swapping since it was pushing past 3GB.

tomfreak If u dont have 780ti, 780, just show us stock Titan speed. Why would u rather show us Titan OCed speed than showing Titan stock speed & all that without showing 290X OCed speed? In fact an OCed Titan does not represent a 780Ti, because it has 6GB VRAM. Vram is a big deal in watchdog. So ur Oced titan does not look like 780ti nor a real titan.

AndrewJacksonZA Hi Don

Please could you include tests at 4K resolution, and also please use a real 780Ti and also a 295X2? Can you not ask another lab to do it, or get one shipped to you please?

+1 also on what @Patrick Tobin said.

I can appreciate that you might've spent a lot of time on this review, and we'd really appreciate you doing the final bit of this review. I know that not a lot of gamers currently game at 4K, but I am definitely interested in it please.

Thank you!

Lee Yong Quan why don't you have the high detail setting? and would a 7790 1gb perform the same as 260x 2gb in medium texture? if not which is better