Increased Linux kernel timer frequency delivers big boost in AI workloads

But moving up from 250 Hz is otherwise underwhelming.

Over the weekend, a Google engineer proposed raising the default Linux kernel timer frequency from 250 Hz to 1,000 Hz. Linux-centric tech site Phoronix quickly followed up with a suite of A/B tests to assess what the change might mean for users. Spoiler alert: The most notable benefits were seen in AI LLM workloads. Elsewhere, the differences might be considered within the margin of error of system benchmarking. Changes in system power consumption were also minimal.

Let's recap the patch statement shared by Google engineer Qais Yousef on Sunday before looking at some measured impacts. As mentioned, Yousef's main proposal was that the Linux kernel should default to a timer frequency of 1,000 Hz. His reasoning was that Linux users would benefit from improved responsiveness and faster workload completion in general.

Yousef also thought that moving to 1,000 Hz would banish the 250 Hz-related issues with "scheduler decisions such as imprecise time slices, delayed load balance, delayed stats updates, and other related complications," reported Phoronix. Toward the end of his patch notes, the engineer openly pondered system power consumption, assuming that "the faster TICK might still result in higher power."
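To put the two frequencies in perspective, the interval between ticks is simply the reciprocal of the timer frequency. The short Python sketch below works that out for both settings; the function name `tick_period_ms` is our own and purely illustrative, not something taken from the patch.

```python
def tick_period_ms(hz: int) -> float:
    """Return the interval between timer ticks, in milliseconds."""
    if hz <= 0:
        raise ValueError("hz must be positive")
    return 1000.0 / hz


# Compare the current default with the proposed one.
for hz in (250, 1000):
    print(f"{hz} Hz -> one tick every {tick_period_ms(hz):g} ms")
```

At 250 Hz the tick arrives every 4 ms, while at 1,000 Hz it arrives every 1 ms, so tick-driven scheduler bookkeeping can run four times as often.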

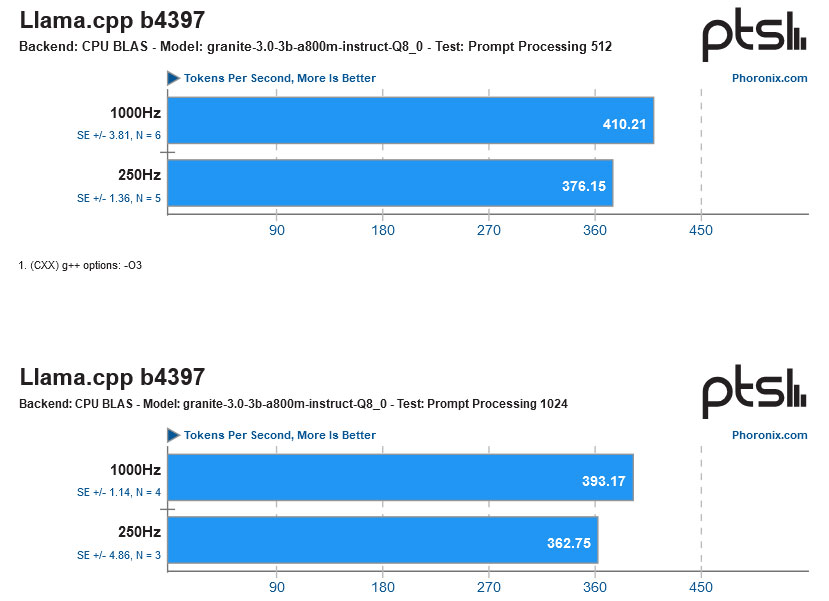

Phoronix's test bed for the A/B kernel timer frequency comparison was a beefy AMD Ryzen 9 9950X (16C/32T) system with 32GB RAM and a Radeon RX 7900 XTX graphics card. The first set of tests measures Llama LLM processing performance in tokens per second. This is probably the greatest showcase for the move to 1,000 Hz, with benefits from the change edging into double figures. The Nginx web server also showed double-figure percentage gains in requests handled per second, benefiting more as the number of connections increased.

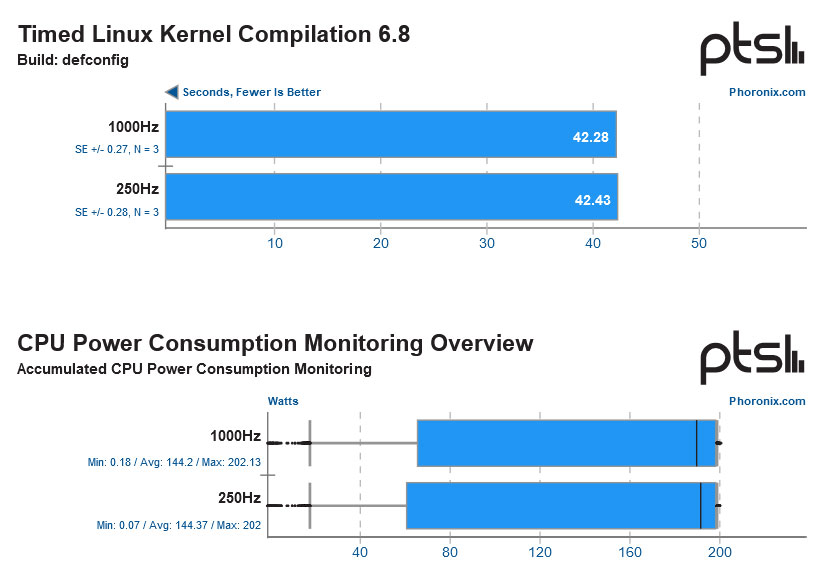

Other test results shared included image processing (250 Hz win), SQL database manipulation (even), gaming (probably unnoticeable but consistent benefit for 1,000 Hz), browsing, rendering, and compiling (all even).

Interestingly, the frequency difference produced only a negligible change in CPU power consumption. We've shared some of the numbers and charts from Phoronix (above), but check out the original post for fuller details.

Lastly, we must highlight that some popular Linux distributions already have a 1,000 Hz default kernel timer frequency. Ubuntu and SteamOS are already in the 1,000 Hz camp, for example. Nevertheless, changing the default would help bring the plethora of other distros in line.
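If you are curious which camp your own system falls into, the kernel build configuration records the value in a `CONFIG_HZ=` line. Here is a minimal Python sketch that pulls that value out of config text. The helper name `parse_config_hz` and the sample text are our own illustrations, and where the config file lives varies by distro (many ship it as `/boot/config-<kernel release>`, but that is an assumption to verify on your system).

```python
import re
from typing import Optional


def parse_config_hz(config_text: str) -> Optional[int]:
    """Return the CONFIG_HZ value found in kernel config text, or None."""
    # Match the exact "CONFIG_HZ=<number>" line, not CONFIG_HZ_250 and friends.
    match = re.search(r"^CONFIG_HZ=(\d+)$", config_text, flags=re.MULTILINE)
    return int(match.group(1)) if match else None


# Illustrative sample only; real config files contain thousands of lines.
sample = """\
# CONFIG_HZ_250 is not set
CONFIG_HZ_1000=y
CONFIG_HZ=1000
"""
print(parse_config_hz(sample))
```

To check a real system, read the config file's contents into a string and pass it to the same function.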

Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.

bit_user

The article said: Elsewhere, the differences might be considered within the margin of error of system benchmarking.

No. Margin of error should be like < 1%. Several other benchmarks had differences that were well above that.

nginx had a case that benefited from the 1kHz tick rate by 8.6%.

Darktable had a case where 250 Hz was 7.5% faster.

A few others in the 2-3% range.

I've seen no compelling explanation for performance changes of such a magnitude. It's generally accepted that a faster tick rate just adds overhead, which is why the default is now 250 Hz and used to be even lower.

The article said: Let's recap the patch statement shared by Google engineer Qais Yousef

You missed a key part of the explanation, which was right in the first paragraph:

"The larger TICK means we have to be overly aggressive in going into higher frequencies if we want to ensure perf is not impacted. But if the task didn't consume all of its slice, we lost an opportunity to use a lower frequency and save power. Lower TICK value allows us to be smarter about our resource allocation to balance perf and power."

Source: https://www.phoronix.com/news/Linux-2025-Proposal-1000Hz

mitch074

To my knowledge, the current default configuration is for the kernel to be tickless on all but the boot CPU core, meaning that this setting should have very little impact on power consumption, as only one core would be affected, and it's the busiest one anyway.

Still, that default would change the system-wide interrupt to being sent once every 3 000 000 cycles, which is equivalent to a Pentium III 750 running a 250 Hz tick.
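The arithmetic behind that comparison can be sketched in a few lines of Python. The 3 GHz clock for a modern core is an assumption made here for illustration (the comment does not state one), and `cycles_per_tick` is a hypothetical helper name.

```python
def cycles_per_tick(cpu_hz: float, tick_hz: int) -> float:
    """Return how many CPU clock cycles elapse between two timer ticks."""
    return cpu_hz / tick_hz


# Pentium III at 750 MHz with a 250 Hz tick.
print(cycles_per_tick(750e6, 250))

# Assumed modern core at 3 GHz (illustrative figure) with a 1,000 Hz tick.
print(cycles_per_tick(3e9, 1000))
```

Under that assumption, both cases work out to 3,000,000 cycles between ticks, which is the equivalence the comment describes.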