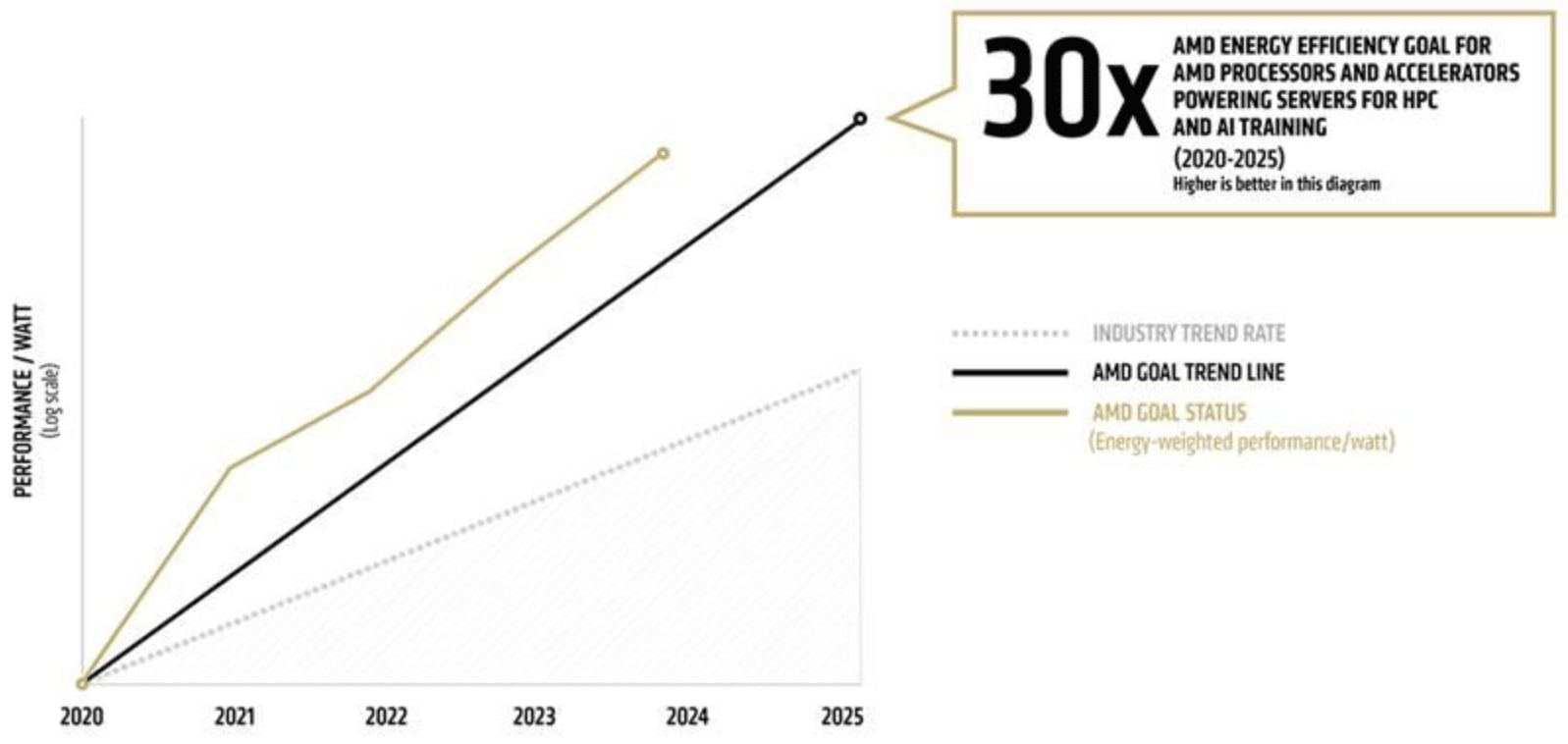

AMD nearly beats 30X power efficiency goal a year early — AMD's new AI servers are 28.3 times more efficient than 2020 versions

The specs of the 2020 AI machine were not disclosed

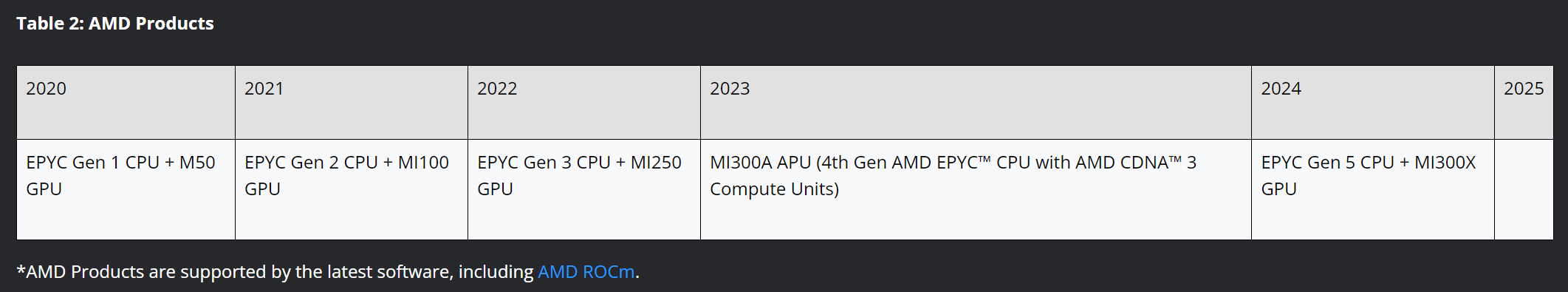

Performance efficiency is key to the rapid increase in the performance of AI and HPC processors, so AMD and other companies fight for it fiercely with every new product generation. Back in 2021, the company set a goal to increase the energy efficiency of its EPYC processors and Instinct accelerators by 30 times by 2025, compared to 2020. It appears that with its latest EPYC 9005-series 'Turin' CPUs and Instinct MI300X GPUs, it has all but achieved that goal a year early.

To prove its point, AMD used a machine equipped with two 64-core EPYC 9575F CPUs, eight Instinct MI300X accelerators, and 2,304 GB of DDR5 memory, and tested its inference performance with the Llama3.1-70B model (vLLM 0.6.1.post2, TP8 Parallel, FP8, continuous batching). Using a complex set of calculations, AMD determined the energy efficiency of this system and compared it to an undisclosed machine from 2020, finding that the new machine is 28.3 times more energy efficient than the old one.

AMD didn't disclose the specifications of its 2020 system, but we can imagine that it is based on the company's EPYC 7002-series processors, which feature the Zen 2 microarchitecture with up to 64 cores per CPU, and Instinct MI100 accelerators, which are based on the CDNA 1 architecture. [EDIT: AMD did divulge the setup here. We've also added the table below to reflect the setups used.]

AMD's Instinct MI100 does not support FP8 (unlike the MI300X, which supports it at the same rate as INT8), though if we compare the INT8 performance of the MI100 (184.6 TOPS) and the MI300X (2615 TOPS, or 5230 TOPS with sparsity), the difference is 14 to 28 times on paper. About the same difference can be observed with FP16, so the comparison is valid. When we factor in dramatically better memory subsystems (32 GB HBM2 at 1.20 TB/s vs. 192 GB HBM3 at 5.30 TB/s) and dramatically better CPUs, it does not come as a surprise that AMD's existing machines are dramatically faster and more performance efficient than its systems from 2020.
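The on-paper ratios in the paragraph above can be checked with a few lines of Python. This is only a sketch of the arithmetic using the peak figures quoted in this article. It is not AMD's efficiency methodology, which also accounts for power draw and measured inference throughput. The `ratio` helper and the constant names are illustrative.

```python
# Peak figures as quoted in the article; names are illustrative.
MI100_INT8_TOPS = 184.6
MI300X_INT8_DENSE_TOPS = 2615.0
MI300X_INT8_SPARSE_TOPS = 5230.0

MI100_HBM_GB, MI300X_HBM_GB = 32, 192
MI100_HBM_BW, MI300X_HBM_BW = 1.20, 5.30  # same unit on both sides


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


dense_ratio = ratio(MI300X_INT8_DENSE_TOPS, MI100_INT8_TOPS)
sparse_ratio = ratio(MI300X_INT8_SPARSE_TOPS, MI100_INT8_TOPS)
capacity_ratio = ratio(MI300X_HBM_GB, MI100_HBM_GB)
bandwidth_ratio = ratio(MI300X_HBM_BW, MI100_HBM_BW)

print(f"INT8 dense:       {dense_ratio:.1f}x")
print(f"INT8 sparse:      {sparse_ratio:.1f}x")
print(f"Memory capacity:  {capacity_ratio:.1f}x")
print(f"Memory bandwidth: {bandwidth_ratio:.1f}x")
```

These are peak-throughput and memory ratios only. A performance-per-watt comparison would additionally divide each system's measured throughput by its power consumption, figures that are not given here.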

AMD itself says that in addition to 'brute force' hardware improvements, its higher performance efficiency was achieved by a combination of architectural advances and software optimizations, which is to be expected.

Just recently, the company introduced its Instinct MI325X accelerators, which are based on the CDNA 3 architecture but feature a 288 GB HBM3E memory subsystem. Next year, the company is set to roll out its Instinct MI355X processors, which will be based on the CDNA 4 architecture and boost FP8 and FP16 compute performance by about 80% compared to the MI325X. In addition to FP8 and FP16, the MI355X will add support for FP4 and FP6 formats for AI, which will increase its peak performance to 9.2 PetaFLOPS (FP4), something that will be useful for many large language models. That said, AMD is more than on track to achieve 30 times higher energy efficiency for its compute platforms by 2025 when compared to 2020.

"With our thoughtful approach to hardware and software co-design, we are confident in our roadmap to exceed the 30x25 goal and excited about the possibilities ahead, where we see a path to massive energy efficiency improvements within the next couple of years," wrote Sam Naffziger, Senior Vice President, AMD Corporate Fellow, and Product Technology Architect at AMD.


AMD still sees room for improvement and even sees a pathway to improving energy efficiency by 100X by 2027.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


abufrejoval I'd be interested in some data points on FP8 vs INT8.

For inference it doesn't seem to make much of a difference in terms of quality from what I've glanced, yet with training my gut feeling would be that it's way too much work thinking which layers might tolerate the reduced precision.

So is FP8 actually used widely in the field? I can see how very specific dense models (e.g. vision) might still work and profit from the speed vs. bfloat16, but those sound like industrial and automotive to me.

Dojo is really big on exploiting many permutations of reduced precision data types, but I've found nothing similar elsewhere.

Do any of the gaming use cases rely on FP8?

edzieba Without disclosing the 2020 comparison system, this is just a pick-your-desired-marketing-multiplier exercise. In 2020, AMD had chips ranging from the Radeon Instinct MI100 all the way down to the teeny little Athlon Gold 7220U. Where in that range you pick from to compare to today likely gives you an order of magnitude range to choose from depending on what performance comparison you want to stick in your powerpoint.

Jame5 "32 GB HBM2 at 1.20 GB/s vs. 192 GB HBM3 at 5.30 GB/s"

Knowing HBM bandwidths, shouldn't that be TB/s?

ottonis An 28x improvement in performance per Watt within just 4 years is an incredible feat.

However, why does AMD not disclose the reference system from 2020 that was used for this comparison? Without knowing what the current machine was compared against, leaves the auditorium wondering why this information is being hidden?

rluker5 So specific hardware is more efficient than general hardware. Good to know.

As far as apples to apples comparisons RDNA2 came out in 2020 and AMD hasn't improved on that efficiency.

Edit: the Ryzen 9000 series is more efficient than the 5000 series from 2020, but not by 30x.

tisOddOne

ottonis said: An 28x improvement in performance per Watt within just 4 years is an incredible feat. However, why does AMD not disclose the reference system from 2020 that was used for this comparison? Without knowing what the current machine was compared against, leaves the auditorium wondering why this information is being hidden?

Even 28X over mi100 should not be surprising, mi100 has no int8 units