Benchmarked: How Copilot+ PCs Handle Local AI Workloads

There aren't many local workloads yet, but Qualcomm performs well on those we tried.

It’s been weeks since Copilot+ PCs, the new generation of Windows laptops with Qualcomm Snapdragon X processors, hit store shelves. We’ve had several in our office and have been running them through many of the same tests we use on typical Intel or AMD-powered laptops, but what we really want to know is how local AI workloads run on these computers.

Unfortunately, we haven’t found many local AI applications we can run on Snapdragon X-powered laptops at this time. There are three exclusive Windows features for Copilot+ PCs: Cocreator, Windows Studio Effects and Live Captions with translation. Whether you think these features are valuable or not, they can’t be used for benchmarking purposes, because they don’t leave behind a repeatable metric. We could take a stopwatch and measure how long it takes Cocreator to build an image after you enter a prompt, but even then, we can’t compare it to the same application on an Intel or AMD-powered PC, because Cocreator is exclusive to Snapdragon X-powered Copilot+ PCs.

So, at the moment, we’ve been able to work with just a couple of real-world AI tests that run on both Snapdragon X-powered laptops and Intel Core Ultra 7 “Meteor Lake” laptops. We don’t currently have a Ryzen 7840U “Phoenix” laptop on hand to test with, but considering that Ryzen “Strix Point” laptops are due out in just a few weeks, those comparisons would soon look old anyway.

| Laptop | CPU |
|---|---|
| Lenovo ThinkPad X1 Carbon (Gen 12) | Intel Core Ultra 7 155H |
| Acer Swift Go 14 | Intel Core Ultra 7 155H |
| Surface Pro | Snapdragon X Elite (X1E-80-100) |
| Surface Laptop 15 | Snapdragon X Elite (X1E-80-100) |

Whisper Transcription

Our first test used the Whisper AI Base English Model to transcribe a 12-minute MP3 audio file into text. Whisper is one of the most practical use cases for local AI, because people who record conversations for work or education – students recording a lecture or journalists recording an interview – really need them turned into copy. OpenAI makes Whisper, but it’s free for anyone to download and use in their own programs.

There are many ways to deploy Whisper, but we used it in a custom Python script, which prints the time before the transcription begins, performs the transcription which it writes to a text file, and then records the time when it’s over. By getting the difference between the times, we can tell how quickly each system executes the test. We’re not judging transcript accuracy, because they’re all using the same model. If we were doing this for our own use, we would prefer to use the Whisper Large model, which is much more accurate than the Base model, but Qualcomm has not made a version of Whisper Large available for Snapdragon yet.
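For readers who want to try something similar, here is a minimal sketch of that kind of timing harness. It is not our actual script: the names `time_transcription` and `fake_transcribe` are hypothetical, and the stand-in function only exists so the example runs without a model installed. In a real run, you would pass in a function that calls Whisper through whichever runtime your hardware needs.

```python
import time
from datetime import datetime
from pathlib import Path
from typing import Callable


def time_transcription(transcribe: Callable[[str], str],
                       audio_path: str,
                       out_path: str) -> float:
    """Print the start time, transcribe, save the text, and return elapsed seconds."""
    print("Before transcription:", datetime.now().strftime("%H:%M:%S"))
    start = time.perf_counter()

    text = transcribe(audio_path)                      # the work being measured
    Path(out_path).write_text(text, encoding="utf-8")  # transcript goes to a text file

    elapsed = time.perf_counter() - start
    print("After transcription:", datetime.now().strftime("%H:%M:%S"))
    print(f"Elapsed: {elapsed:.1f} seconds")
    return elapsed


def fake_transcribe(audio_path: str) -> str:
    # Hypothetical stand-in for a real Whisper call (assumption, not a real model).
    return f"(transcript of {audio_path})"
```

Calling `time_transcription(fake_transcribe, "interview.mp3", "transcript.txt")` prints the two timestamps and the elapsed time. Using `time.perf_counter()` for the measurement avoids errors from wall-clock adjustments, while the printed timestamps make the run easy to match against a screenshot.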

The code is slightly different on the Intel and Arm versions of the script, simply because Intel Meteor Lake uses IPEX, the Intel Extension for PyTorch, to access its GPU, while Snapdragon X requires the QNN Execution Provider for ONNX Runtime to access the NPU. Using the proper runtimes is essential because otherwise our scripts will send the work of transcription (or any other task) to the CPU, when the NPU or GPU would complete the same task much faster and with less power. Running an AI workload on the CPU is generally the slowest option. In our prior series of Meteor Lake tests, we found that the integrated Intel Arc GPU is actually faster than the NPU, while the NPU is the best choice on Snapdragon processors.
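To illustrate why the runtime choice matters, here is a small sketch of how a script might pick an ONNX Runtime execution provider and make a CPU fallback explicit. The helper name `pick_provider` and the preference order are our assumptions for illustration. The provider names are the ones ONNX Runtime uses, and with the library installed, the list of available providers would come from `onnxruntime.get_available_providers()`.

```python
from typing import Sequence

# Assumed preference order for a Snapdragon X system: NPU first, CPU as fallback.
PREFERRED = ("QNNExecutionProvider", "CPUExecutionProvider")


def pick_provider(available: Sequence[str],
                  preferred: Sequence[str] = PREFERRED) -> str:
    """Return the first preferred provider that the runtime reports as available."""
    for name in preferred:
        if name in available:
            if name == "CPUExecutionProvider":
                # Make the slow path visible instead of falling back silently.
                print("Warning: no accelerator provider found, running on the CPU")
            return name
    raise RuntimeError(f"None of {list(preferred)} found in {list(available)}")


# Example lists, written by hand here rather than queried from a real runtime.
print(pick_provider(["QNNExecutionProvider", "CPUExecutionProvider"]))
print(pick_provider(["CPUExecutionProvider"]))
```

The returned name would then be passed in the `providers` list when creating an inference session. The point of the warning is that a silent CPU fallback can make a benchmark look far worse than the hardware deserves.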

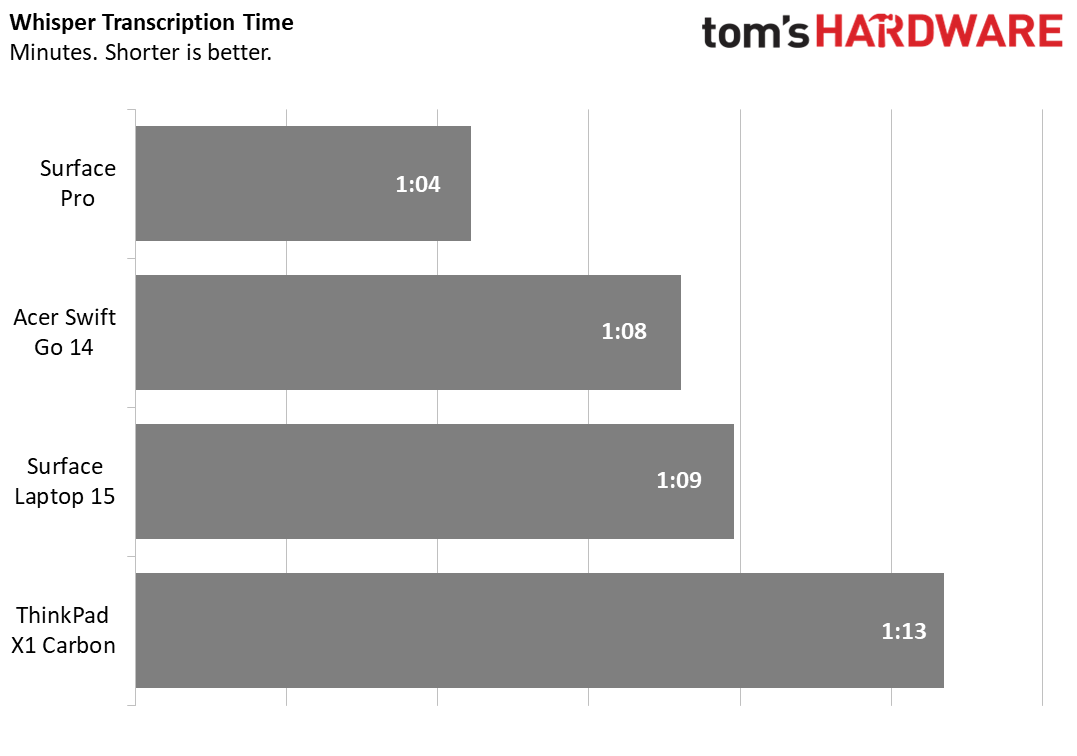

On Whisper, the results were a mixed bag. If we look only at the time it took for our Python script to transcribe the audio file, the Snapdragon laptops were about the same speed as their Intel competitors, give or take a few seconds on each model, with completion times ranging from 1:04 on the Snapdragon X-powered Surface Pro to 1:13 on the Core Ultra 7-powered X1 Carbon. In general, the Snapdragon laptops were a few seconds quicker, but the Surface Laptop 15 took 1:09 while the Acer Swift Go 14 (Core Ultra 7) finished at 1:08.


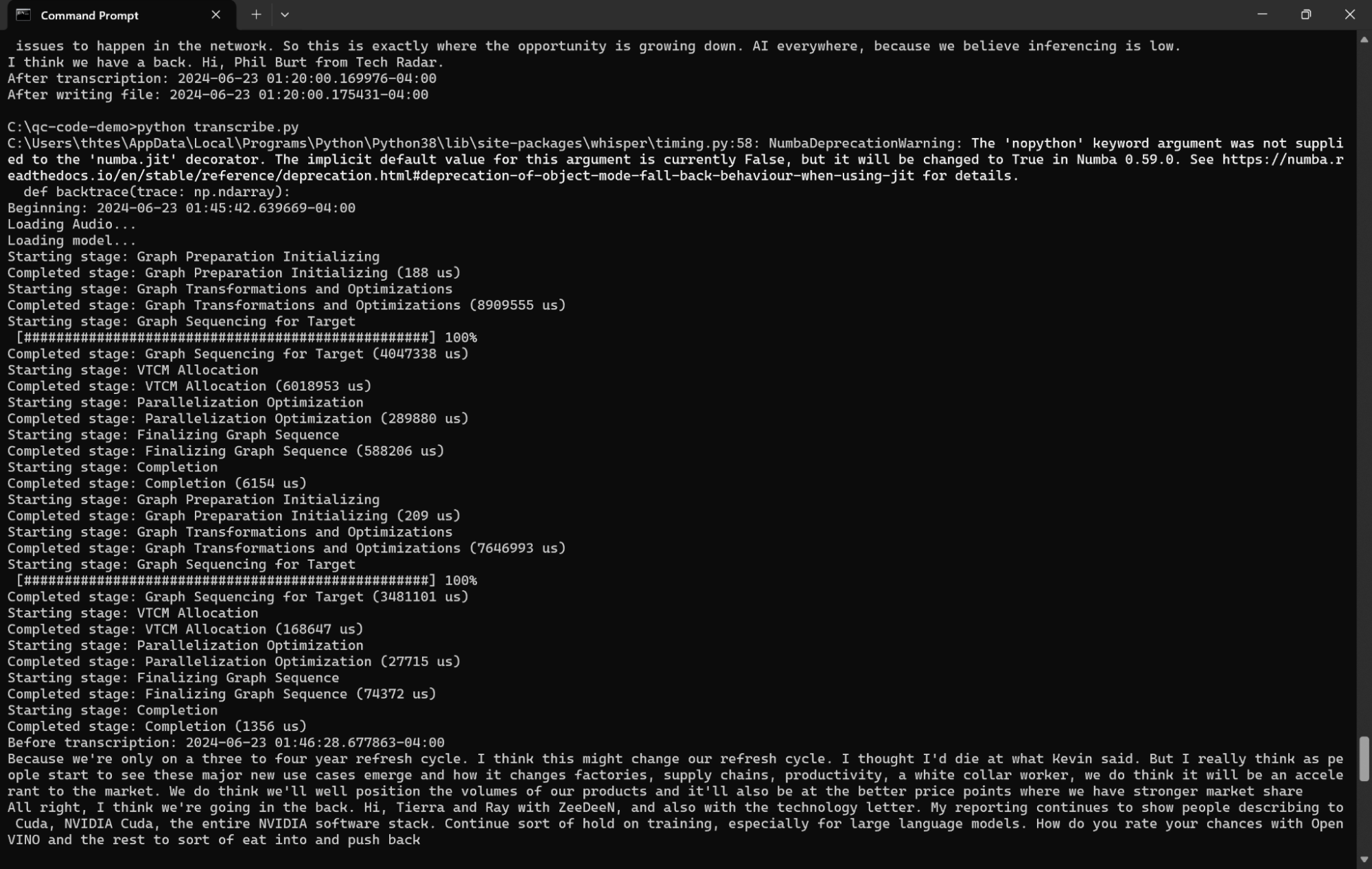

But there’s a huge catch. Using the Python script with the instructions that Qualcomm developers sent me, the Snapdragon X systems take an additional 43 to 47 seconds of preprocessing time before beginning the transcription. This time is taken up converting the MP3 file into a Mel Spectrogram before feeding it to the Whisper model to transcribe. If you look at the screenshot below, you’ll see a huge section of graphing between the line that says “Beginning: 1:45:42” and “Before transcription: 01:46:28.”
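As a quick check on that screenshot, the gap between the two timestamps can be worked out in a few lines. This is a sketch with a hypothetical helper name, `seconds_between`, and it assumes both times fall on the same day.

```python
from datetime import datetime


def seconds_between(start: str, end: str) -> int:
    """Return whole seconds between two H:M:S timestamps on the same day."""
    fmt = "%H:%M:%S"
    delta = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
    return int(delta.total_seconds())


# Timestamps as they appear in the screenshot.
print(seconds_between("1:45:42", "01:46:28"))  # 46
```

That 46-second gap falls inside the 43 to 47-second preprocessing range we saw across runs.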

On an Intel device, there’s no requirement to do this (perhaps it happens automatically) but there’s about a 4 to 7-second delay between launching our Python script and transcription beginning. When you take the spectrogramming into account, Intel completes the job about 40 seconds faster.

Stable Diffusion Image Generation

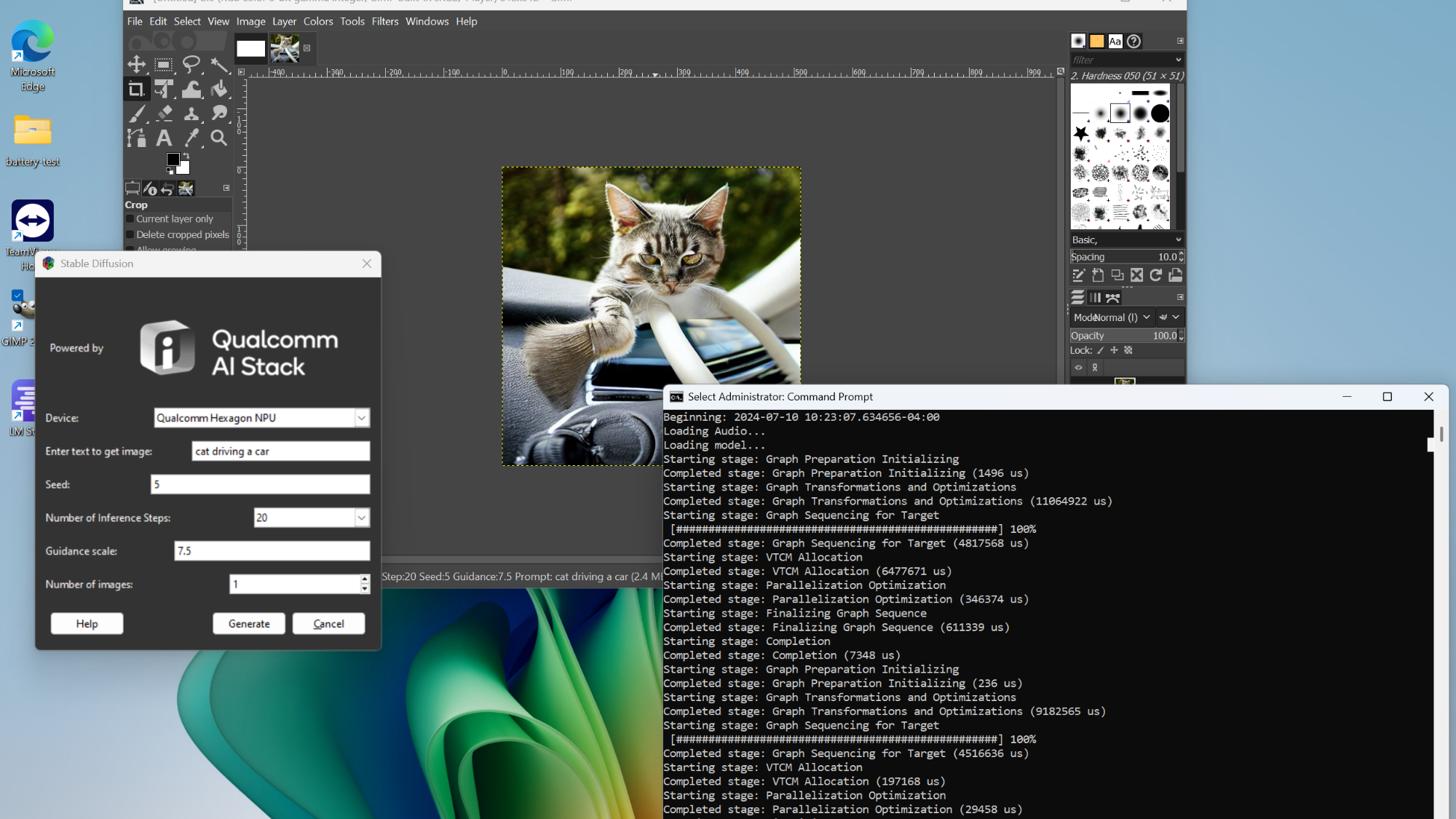

The other workload we benchmarked was Stable Diffusion 1.5, a popular text-to-image generator. Qualcomm still hasn’t come up with an official way on its AI Hub to use Stable Diffusion with the image prompt of your choice (you can only use its one sample prompt), but fortunately a few developers have made a Stable Diffusion 1.5 plugin for GIMP, which we used for testing. On Intel-powered laptops, we were able to use our choice of either Automatic1111, which provides a local UI in your browser for entering prompts, or the OpenVINO GIMP plugin. They each delivered the same results.

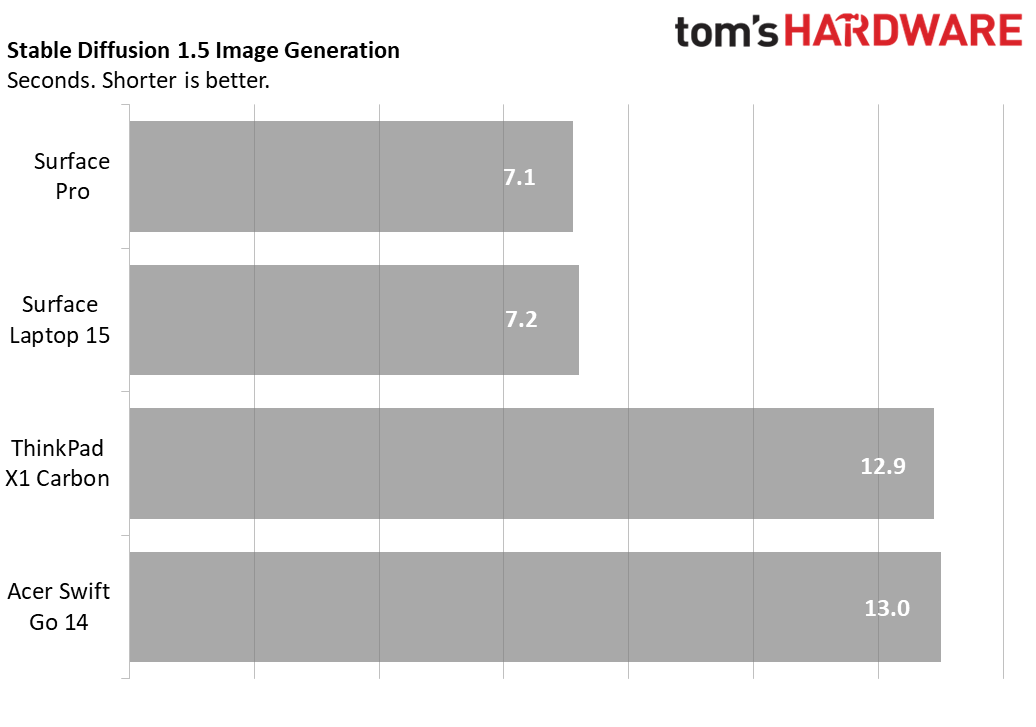

With Stable Diffusion 1.5, it took the Surface Pro and Surface Laptop 15 just 7.1 and 7.2 seconds, respectively, to draw a picture of a cat driving a car (my usual prompt). The two Intel Core Ultra 7-powered laptops we tested, the ThinkPad X1 Carbon (Gen 12) and Acer Swift Go 14, completed the same task in 12.9 and 13 seconds, respectively.

So what we see here is that Qualcomm is pretty much tied with Intel on Whisper, except that the current implementation we used adds a roughly 45-second conversion step. And on Stable Diffusion, where there is no extra conversion step, the Snapdragon chips finished in about 45 percent less time, making them roughly 1.8 times as fast.
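For anyone who wants to check the Stable Diffusion math, here is a short sketch using the times reported above. The helper name `compare` is ours for illustration. It reports the gap two ways, because "percent less time" and "times as fast" are easy to confuse.

```python
def compare(fast_s: float, slow_s: float) -> tuple[float, float]:
    """Return (percent less time taken, speedup factor) for two run times in seconds."""
    time_saved_pct = (slow_s - fast_s) / slow_s * 100
    speedup = slow_s / fast_s
    return time_saved_pct, speedup


# Surface Pro vs. ThinkPad X1 Carbon, then Surface Laptop 15 vs. Acer Swift Go 14.
for fast_s, slow_s in [(7.1, 12.9), (7.2, 13.0)]:
    pct, speedup = compare(fast_s, slow_s)
    print(f"{fast_s} s vs {slow_s} s: {pct:.0f}% less time, {speedup:.2f}x as fast")
```

Both pairings come out to about 45 percent less time, or a speedup of roughly 1.8x.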

The Intel systems offloaded their AI workloads to their Arc GPUs, which works in their favor because their NPUs aren’t as powerful. The Snapdragon systems, by contrast, sent all of the AI workload to their NPUs, which are both powerful and efficient.

Unfortunately, we couldn't find more readily-available AI workloads we could test on both Qualcomm-powered and Intel-powered laptops. However, as time goes by, we expect that there will be more local AI apps and models that run on both platforms, allowing for better comparison.

It looks like Snapdragon X may have a slight leg up on Intel “Meteor Lake” right now, but considering that Intel plans to release a new platform, Lunar Lake, in the next few months, and AMD will be coming out with its Strix Point CPUs in just a few weeks, the x86 companies could pull ahead or at least reach parity very shortly.

Avram Piltch is Managing Editor: Special Projects. When he's not playing with the latest gadgets at work or putting on VR helmets at trade shows, you'll find him rooting his phone, taking apart his PC, or coding plugins. With his technical knowledge and passion for testing, Avram developed many real-world benchmarks, including our laptop battery test.

Blastomonas: I guess that we will be needing a new chart for AI performance. The current offerings don't seem very compelling at the moment.

dehjomz: This just goes to show that AI and all this talk about TOPS/NPUs is a gimmick at the moment.

Alvar "Miles" Udell: I'm disappointed LM Studio wasn't tested. Could have used models such as Microsoft Phi3 and Wizard LM-2. Compare Snapdragon X against current AMD and Intel CPUs, and in the soon to be released update that supports NPUs add those results in.

qwertymac93: No legend for the X axis and not starting at 0 for a time metric is just not good form for a chart. https://cdn.mos.cms.futurecdn.net/TXuQYGXLVBgkHV7z85AnEK-970-80.png

dmitche31958: I'm no fan of AI as I do not personally see any benefit to myself. I do so a lot of downsides. The one no one is talking about is the percentage of CPU usage that I will lose because some crap decides that "I really need to analysis global warming." or other crap. I don't want to pay for this in decreased performance and if and when AI actually hits my PC I will be either turning it off or if the OS won't allow that switching to Linux.