I tested Meteor Lake CPUs on Intel's optimized AI tools: AMD's chips often beat them

The new chips' NPU helps a lot, but AMD's AI-accelerated Ryzen CPUs won on some tests.

Launched just last week, Intel's new generation of "Meteor Lake" mobile CPUs heralds a new age of "AI PCs," computers that can handle inference workloads such as generating images or transcribing audio without an Internet connection. Officially named "Intel Core Ultra" processors, the chips are Intel's first to feature an NPU (neural processing unit) that's purpose-built to handle AI tasks.

But just how well does Meteor Lake handle specific, real-world inference? To find out, we tested Intel's own OpenVINO plugins for Audacity and GIMP, open-source audio and image editors. We also compared the results with those from two laptops that use AMD's AI-accelerated Ryzen 7840U chip -- and found that, on some tests, AMD's processors completed these workloads faster.

To see how much of a generational leap the Meteor Lake chips make, we also included two 13th Gen Intel-powered laptops in our test pool. On the Meteor Lake systems, we ran all tests in CPU-only mode, NPU mode and GPU mode. The tests we conducted were:

Tests We Ran

- OpenVINO Stable Diffusion: Generate images in GIMP.

- OpenVINO Music Generation: A text-to-music feature in Audacity where you can give a prompt and get back a very basic tune.

- OpenVINO Whisper Transcription: Transcribes your audio to text in Audacity.

- OpenVINO Noise Reduction: Removes background noise in Audacity.

You'll notice that all of these plugins have the word "OpenVINO" at the beginning. OpenVINO stands for Open Visual Inference and Neural Network Optimization, a popular AI toolkit made by Intel. Intel is hardly a neutral party, of course; we chose these plugins because they provide real-world, offline workloads, and unfortunately, there are no equivalent plugins optimized for AMD or Nvidia chips.

It's important to note that, while OpenVINO software and plugins will run on any x86 hardware, they are specifically optimized for Intel CPUs and GPUs. So, for example, when I ran these workloads on my desktop PC's RTX 3090 GPU or either AMD laptop's integrated GPU, they ran very slowly because AMD and Nvidia GPUs aren't on OpenVINO's list of supported devices. The GPUs on the AMD Ryzen laptops were so slow to perform these AI tasks that we stopped after a few runs and aren't using the AMD GPU-mode results in our charts (if you are on AMD, simply use CPU mode, which is the default). The integrated GPUs on our Meteor Lake laptops ran the workloads very quickly, however.

The OpenVINO plugins specifically recognized the Meteor Lake laptops' NPU and presented it as a device option. However, they didn't recognize the Ryzen chips' Ryzen AI accelerator and present it as an option. So we don't know if the AMD laptops, which we ran in CPU mode, utilized their AI accelerators at all during testing. Despite Intel having these obvious advantages, AMD's Ryzen chips still outpaced Meteor Lake on two of four tests.
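If you want to check which devices OpenVINO exposes on your own machine, a minimal sketch follows. It assumes the `openvino` Python package is installed and uses the toolkit's `Core().available_devices` property; the two helper functions are names made up for this example, and the device lists in the test calls are illustrative rather than output from our laptops.

```python
from typing import Iterable, List


def list_openvino_devices() -> List[str]:
    """Return the device names OpenVINO reports, or [] if the package is missing."""
    try:
        import openvino as ov  # assumption: the `openvino` pip package is installed
    except ImportError:
        return []
    return list(ov.Core().available_devices)


def device_kinds(names: Iterable[str]) -> List[str]:
    """Hypothetical helper: collapse names such as "GPU.0" and "GPU.1" to "GPU"."""
    return sorted({name.split(".")[0] for name in names})


if __name__ == "__main__":
    kinds = device_kinds(list_openvino_devices())
    print("OpenVINO device types:", kinds or "none found (is openvino installed?)")
    print("NPU offered as an option:", "NPU" in kinds)
```

On a system where the NPU is not recognized, `"NPU"` would simply be absent from the list, which matches what we saw on the Ryzen laptops.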

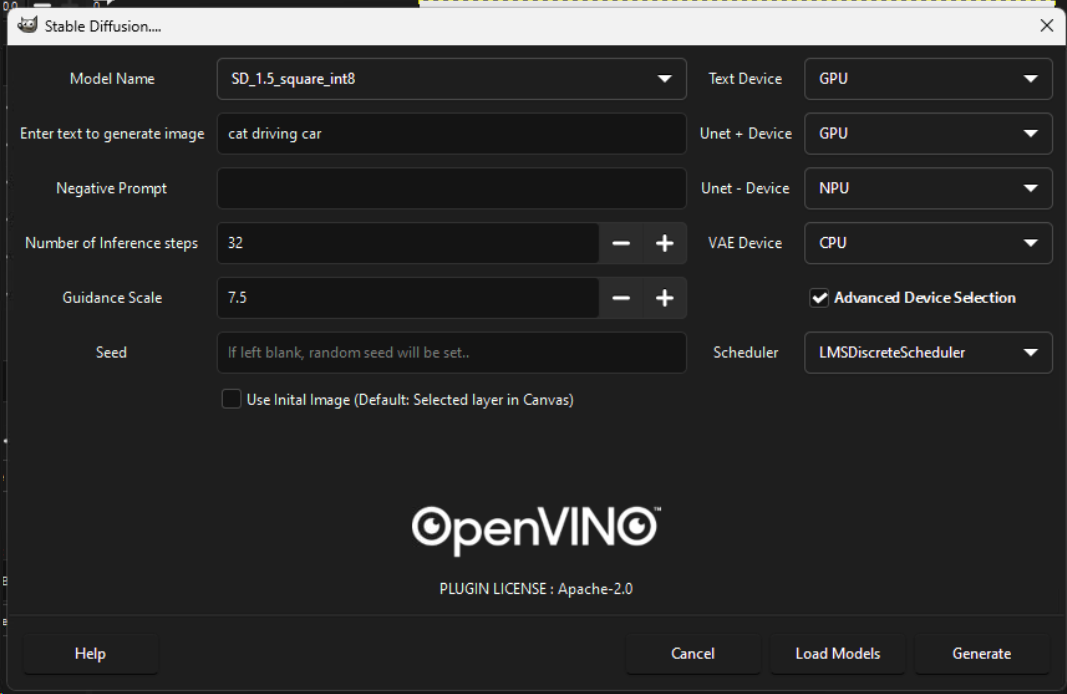

Test 1: GIMP Image Generation

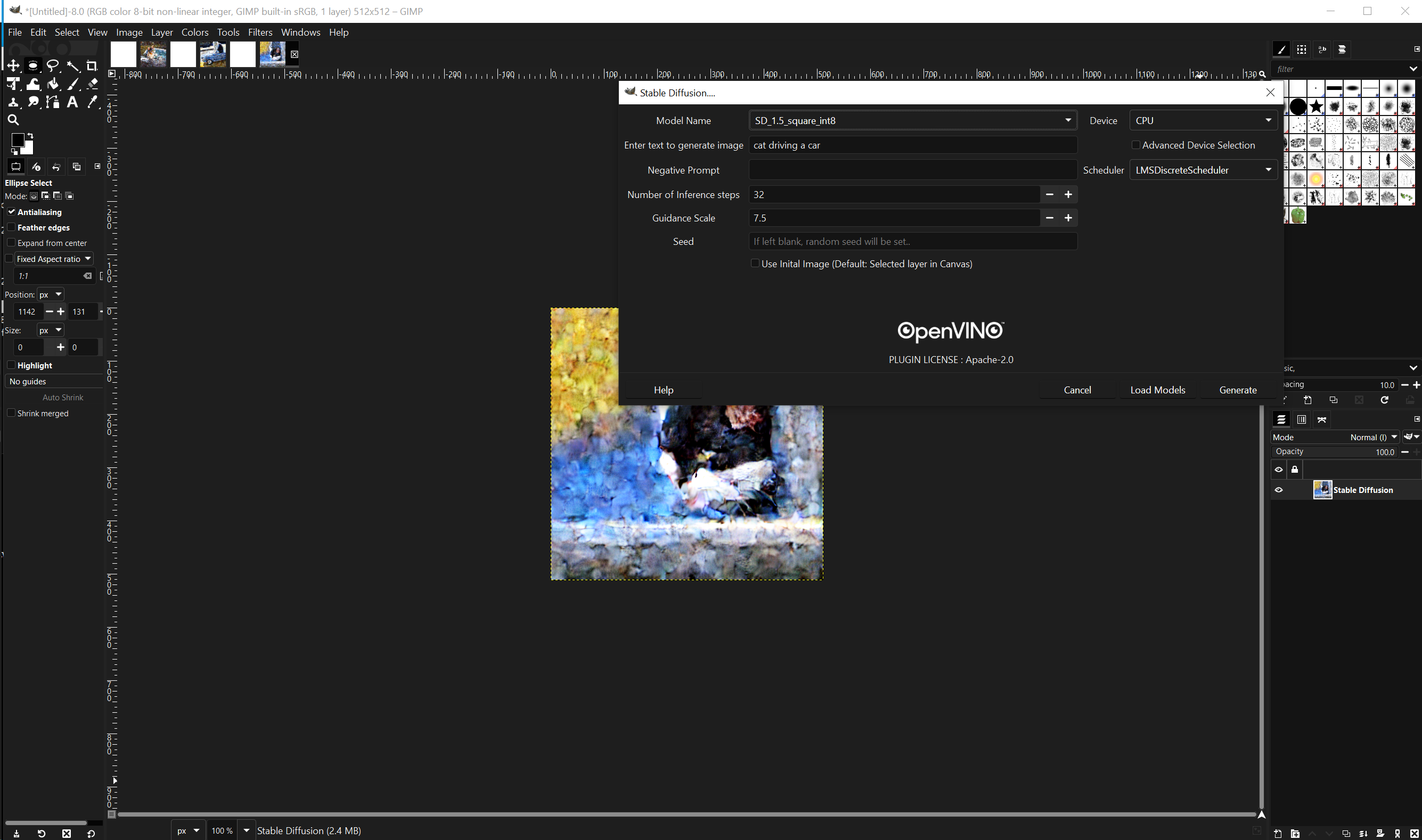

Installing Intel's OpenVINO plugins for GIMP is an odyssey. I won't go into every step (you can see the install instructions here), but it involves creating a Python virtual environment (in a now-outdated version of Python), installing a beta version of GIMP and downloading several gigabytes of training model data.

Once you're set up, GIMP has four OpenVINO plugins: Semantic Segmentation, StyleTransfer, SuperResolution and Stable Diffusion. The first three of these are very basic filters and, in my experience, took only a couple of seconds to activate, with no software timer to measure performance.

Stable Diffusion for GIMP is an image generator that takes anywhere from 16 seconds to a few minutes to output an image. It's effectively a GIMP version of the popular Stable Diffusion tool you can use online or install locally and it puts the image it outputs directly into the image editor's workspace.

There are several different models you can choose among, but I went with SD_1_5_square_int8, because it's one of the few that allows you to use the Meteor Lake NPU as an output device. I found the output of SD_1_5_square_int8 kind of ugly because it gives you what looks like a messy finger painting rather than a photo-realistic image, but the point was seeing how fast it completed each task. My prompt was simply "cat driving a car," something SNL fans can appreciate.

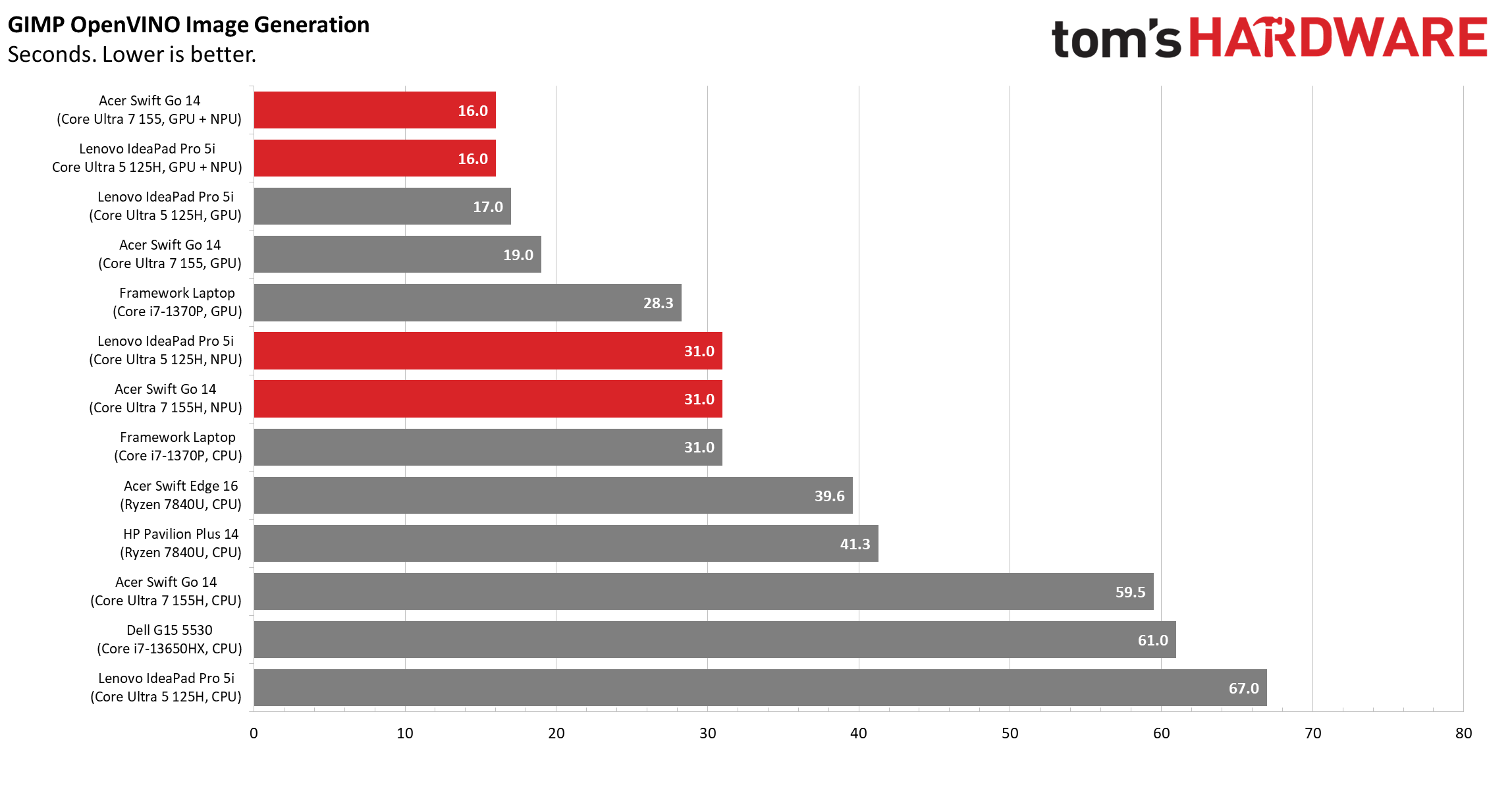

As you can see, the two laptops that fared the best on this test, by far, were the two that have Meteor Lake CPUs: the Acer Swift Go 14 with its Core Ultra 7 155H processor and the Lenovo IdeaPad Pro 5i with its Core Ultra 5 125H chip. Interestingly, they were fastest when the GPU and NPU were combined in settings.

How can you use two different devices in Stable Diffusion? In the SD_1_5_square_int8 model, you can assign a device to each of four settings that handle different parts of the output: Text Device, Unet + Device, Unet - Device and VAE Device. By device, we mean either "CPU," "GPU" or, on Meteor Lake systems only, "NPU." All four settings can use the same device or you can mix them; I found that having NPU as the Unet - Device with GPU as the other three yielded the fastest times.
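One way to picture the per-stage device choice is as a small mapping from pipeline stage to device. The sketch below is illustrative only: the stage keys and the `device_plan` helper are names invented for this example, not identifiers from the GIMP plugin.

```python
# Hypothetical keys for the plugin's four device settings; these labels were
# chosen for this sketch and do not come from the plugin's code.
STAGES = ("text", "unet_plus", "unet_minus", "vae")


def device_plan(default: str, **overrides: str) -> dict:
    """Build a stage -> device mapping, starting from one default device."""
    unknown = set(overrides) - set(STAGES)
    if unknown:
        raise ValueError(f"unknown stage(s): {sorted(unknown)}")
    plan = {stage: default for stage in STAGES}
    plan.update(overrides)
    return plan


# The combination that was fastest in our runs: NPU for Unet -, GPU elsewhere.
fastest = device_plan("GPU", unet_minus="NPU")
print(fastest)
```

Setting every stage to the same device, for example `device_plan("CPU")`, corresponds to the single-device modes in our charts.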

Generating the image took just 16 seconds on both Meteor Lake laptops. Those times rose by a couple of seconds when we used the GPU alone and nearly doubled when we used the NPU. Times with the CPU alone were the highest of all, clocking in at 59.5 and 61 seconds.

The Framework laptop, with its last-gen Core i7-1370P CPU and Intel Iris Xe integrated graphics, fared pretty well in both GPU and CPU modes, while CPU mode alone on the Swift Go 14 and IdeaPad Pro 5i was slower than the results from the AMD-powered laptops. We only ran the AMD-powered laptops and the Dell G15 5530 in CPU mode, as those systems don't have GPUs that run well in OpenVINO.

By the way, this is the kind of result you get when you ask GIMP's Stable Diffusion plugin for a cat driving a car with the SD_1_5_square_int8 model.

If you want better-looking output, use the SD_1_5_square model, which generates photo-realistic images. I didn't use this model for testing, because it doesn't offer NPU support, but when I did test with it on the IdeaPad in CPU mode, it took an average of 120 seconds, more than double the time it took for SD_1_5_square_int8. But with the regular SD_1_5_square, the driving cat looks like this.

Test 2: Whisper Audio Transcription

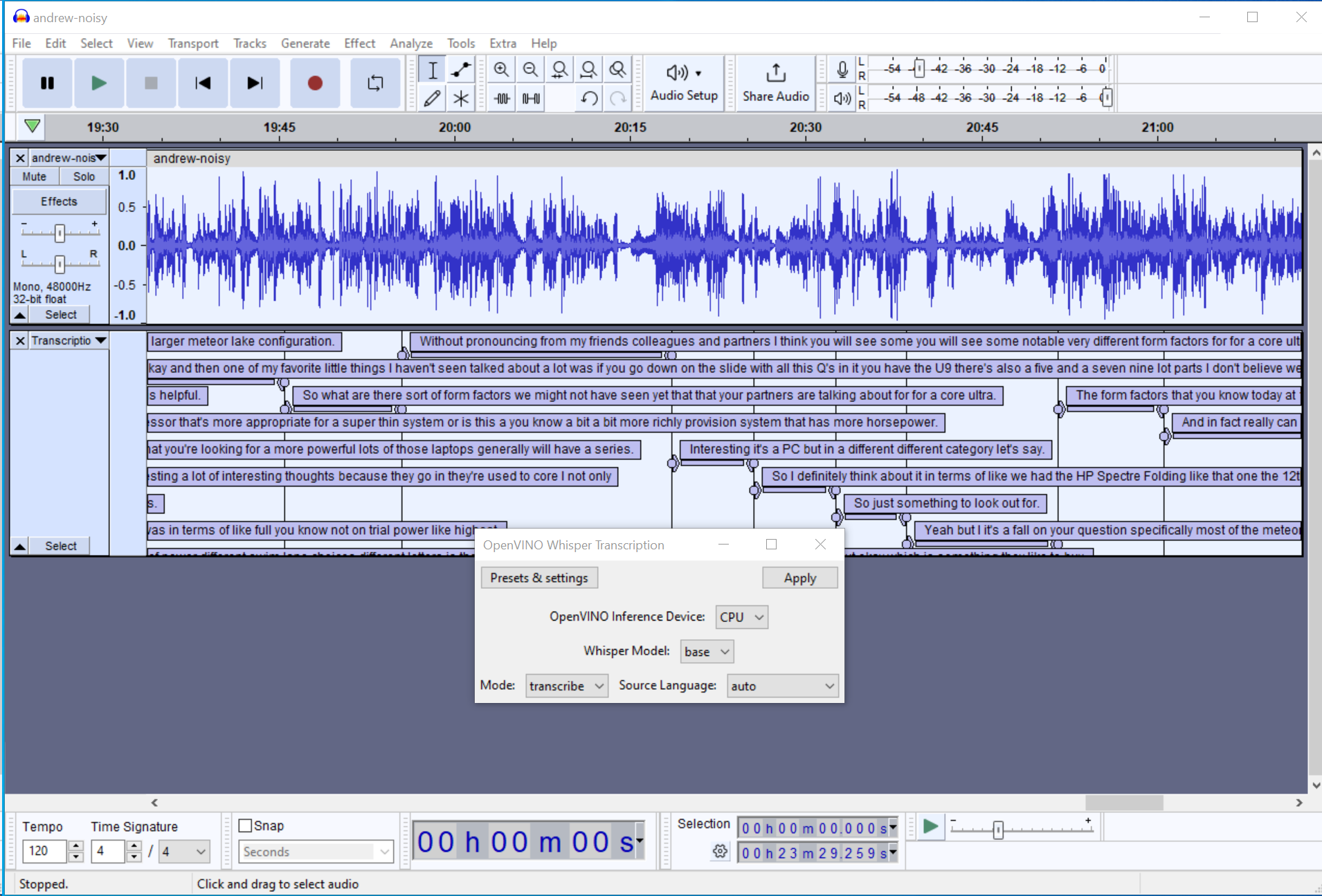

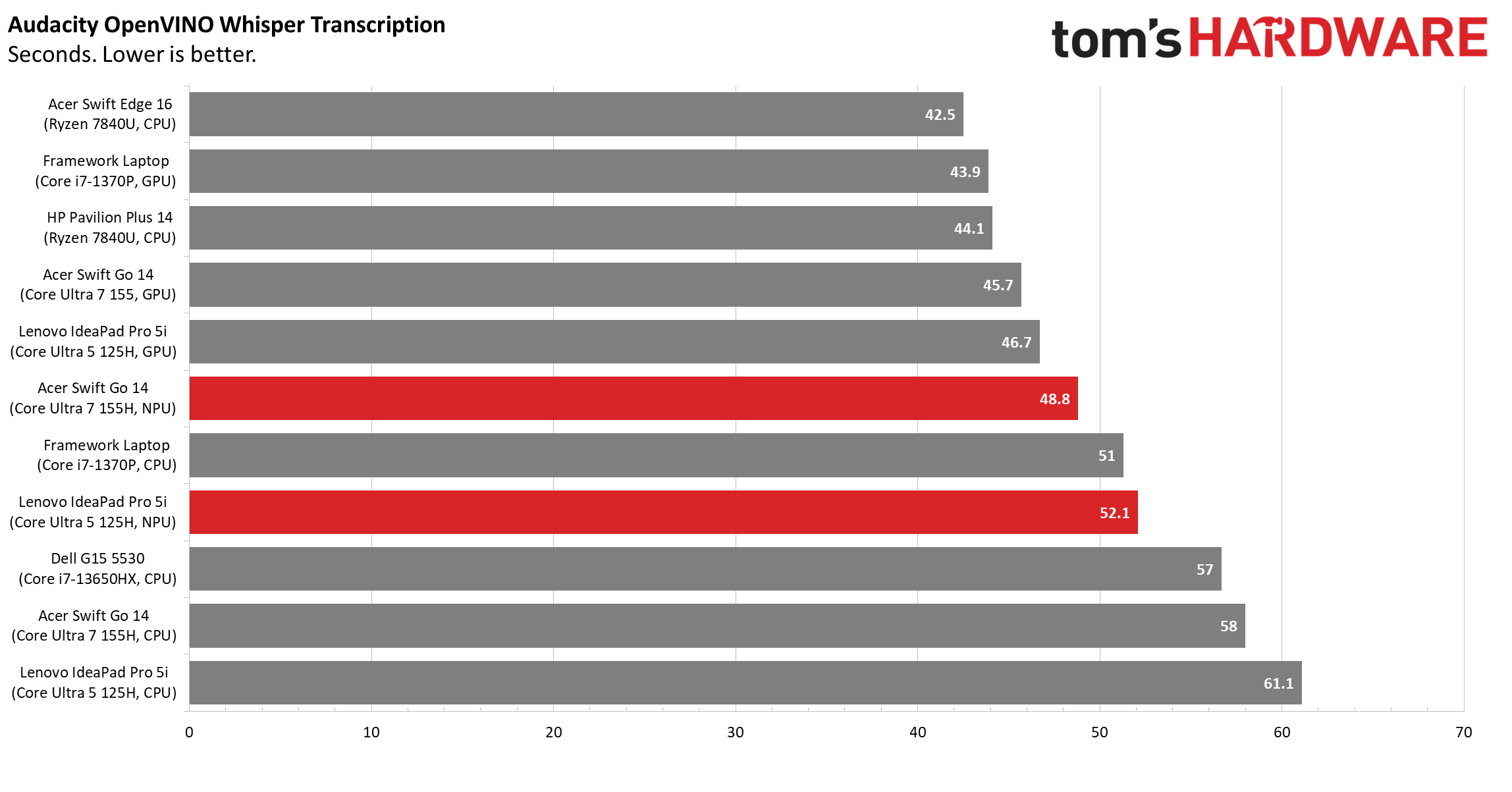

The Audacity OpenVINO Whisper Transcription plugin will use your CPU, GPU or NPU (just one of these at a time) to turn the speech in your clip into a text file with timestamps.

It uses the Whisper "base" transcription model from OpenAI. To be fair, the "base" model is not nearly as good as the "large" model, but we used base as that was what came built into the plugin. We ran the plugin on a 23-minute audio interview that Senior Editor Andrew E. Freedman had recorded.

Times ranged from a brisk 42.5 seconds to a still-pretty-quick 61.1 seconds. That's pretty good considering the 23-minute source clip was roughly 23 to 32 times as long as the processing time. Here, we see the two AMD Ryzen 7840U-powered laptops take the lead, but only by a few seconds.
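To put those times in context, you can divide the clip length by the processing time. This is a minimal sketch that assumes the clip is exactly 23 minutes long (ours was approximately that); the function name is made up for this example.

```python
def speed_vs_realtime(clip_minutes: float, processing_seconds: float) -> float:
    """How many times faster than real time the clip was processed."""
    if processing_seconds <= 0:
        raise ValueError("processing_seconds must be positive")
    return (clip_minutes * 60) / processing_seconds


# Fastest and slowest transcription times from our chart, in seconds.
for seconds in (42.5, 61.1):
    ratio = speed_vs_realtime(23, seconds)
    print(f"{seconds:>5} s -> {ratio:.1f}x real time")
```

Under that assumption, the fastest run works out to roughly 32 times real time and the slowest to roughly 23 times.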

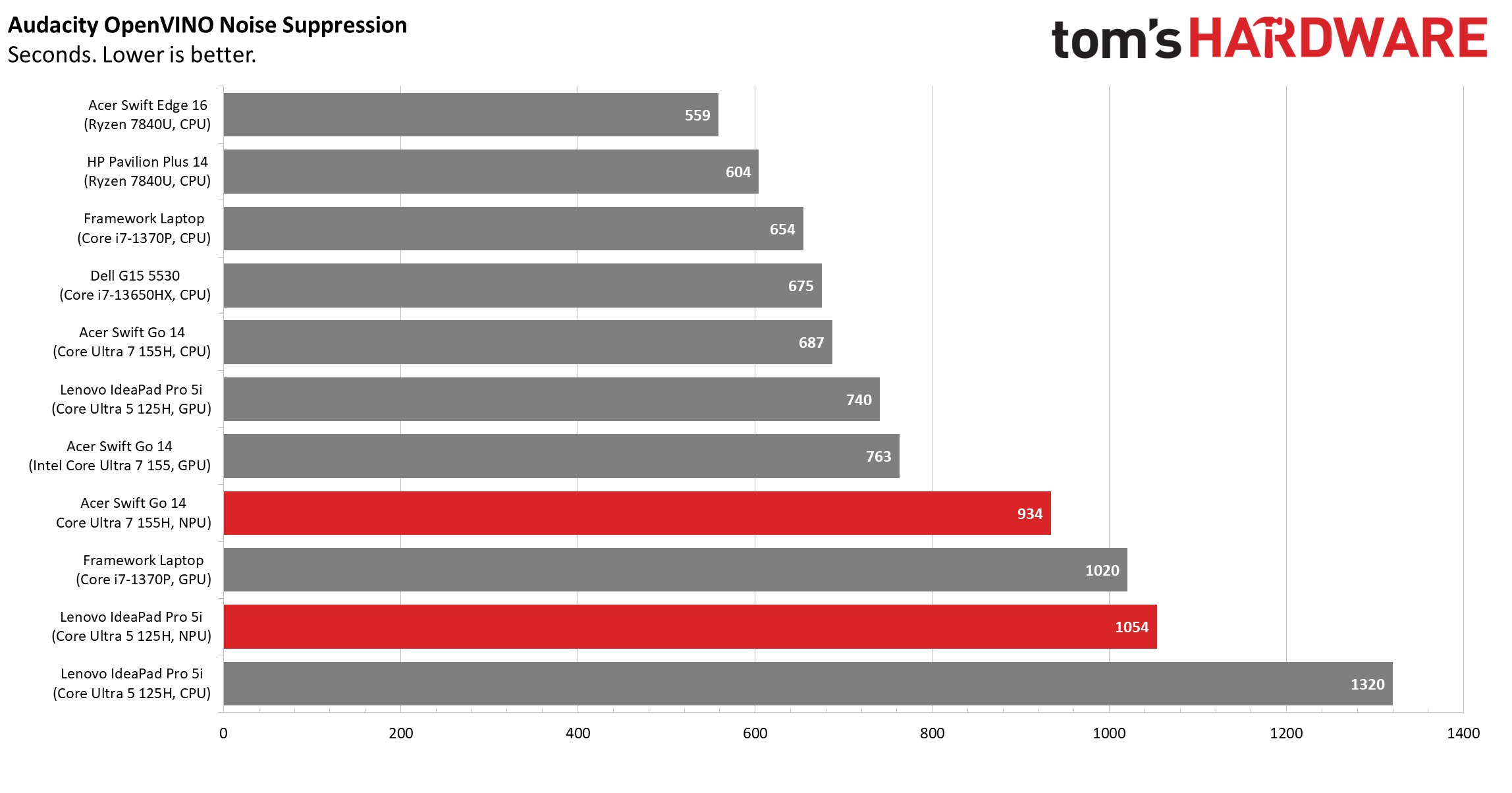

Test 3: Audacity OpenVINO Noise Suppression

Another Audacity plugin, OpenVINO Noise Suppression removes background noise from clips. So we took the same 23-minute audio clip we used for transcription and ran the noise suppression plugin on it. The interview Andrew conducted was in a noisy place so the suppression really helped get rid of background chatter.

When running the noise suppression plugin, you are given a choice of CPU, GPU or NPU as processing device. There's only a single device involved so there's no mixing of the GPU and NPU or CPU and GPU.

This noise suppression process, by far, took the longest of any test we conducted, with times ranging from 559 seconds (9 minutes) all the way up to a whopping 1,320 seconds (22 minutes). Here the AMD Ryzen-powered laptops dominated, hitting the top two spots.

This must be a pretty CPU-intensive workload as we saw laptops in their CPU modes coming up highest. In particular it's interesting that the Core i7-1370P, a 28-watt CPU, does better than the Core Ultra 7 155H, another 28-watt part. The Dell G15 5530 has a 45-watt CPU in the Core i7-13650HX. However, the 28-watt Ryzen 7840U is still the overall winner here.

Running on the NPU here was not helpful. Both the Swift Go and the IdeaPad Pro 5i ran very slowly in NPU mode.

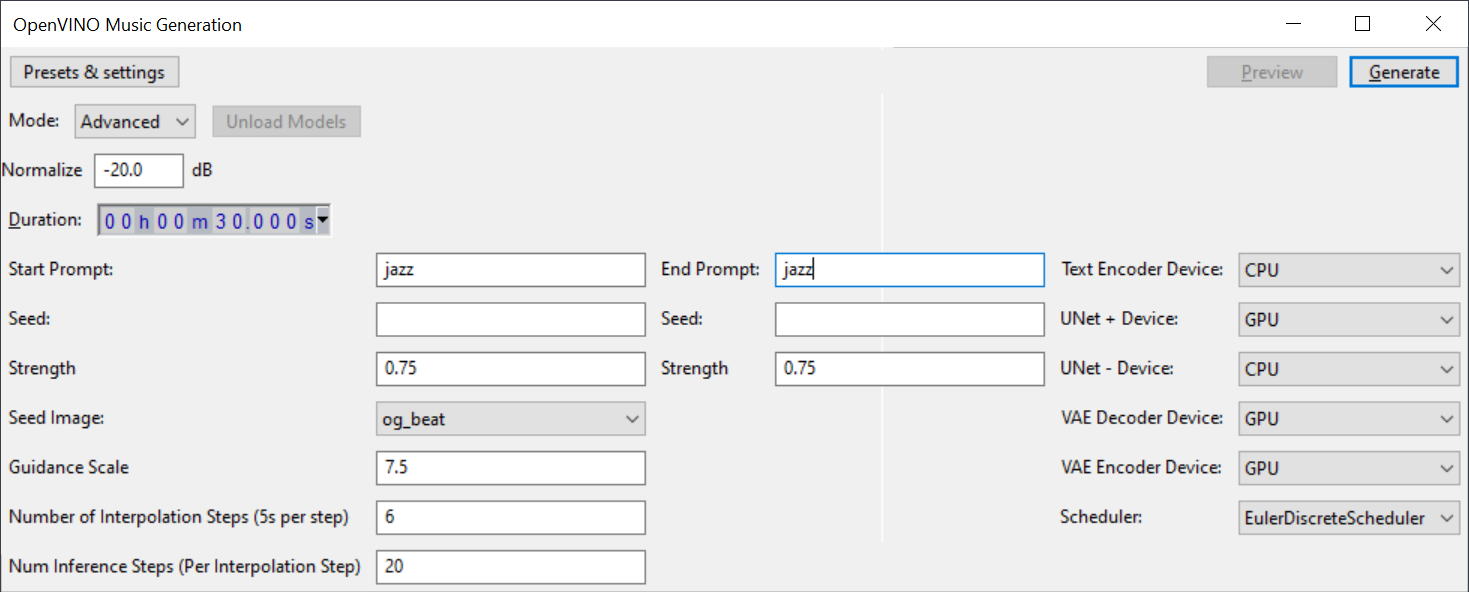

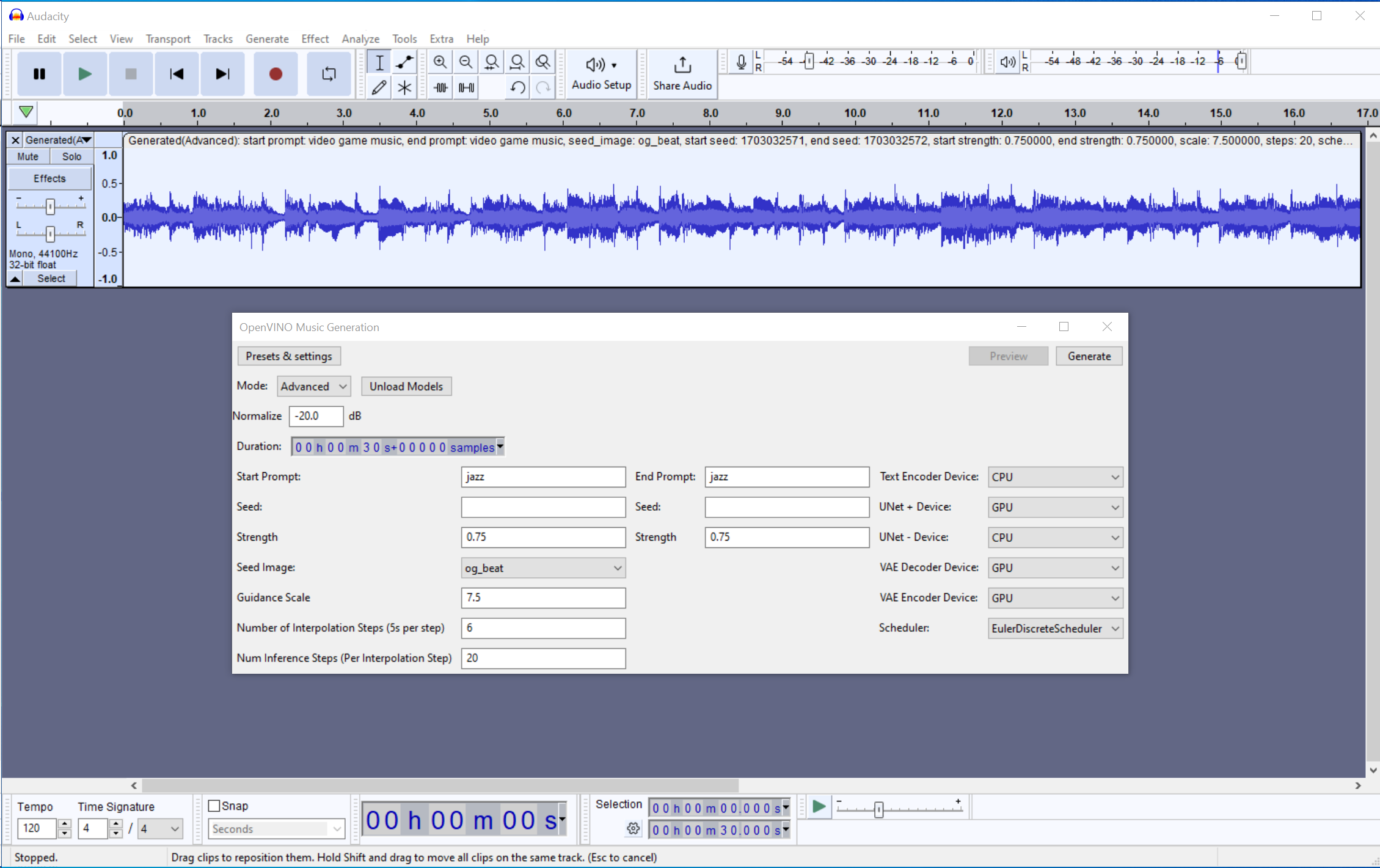

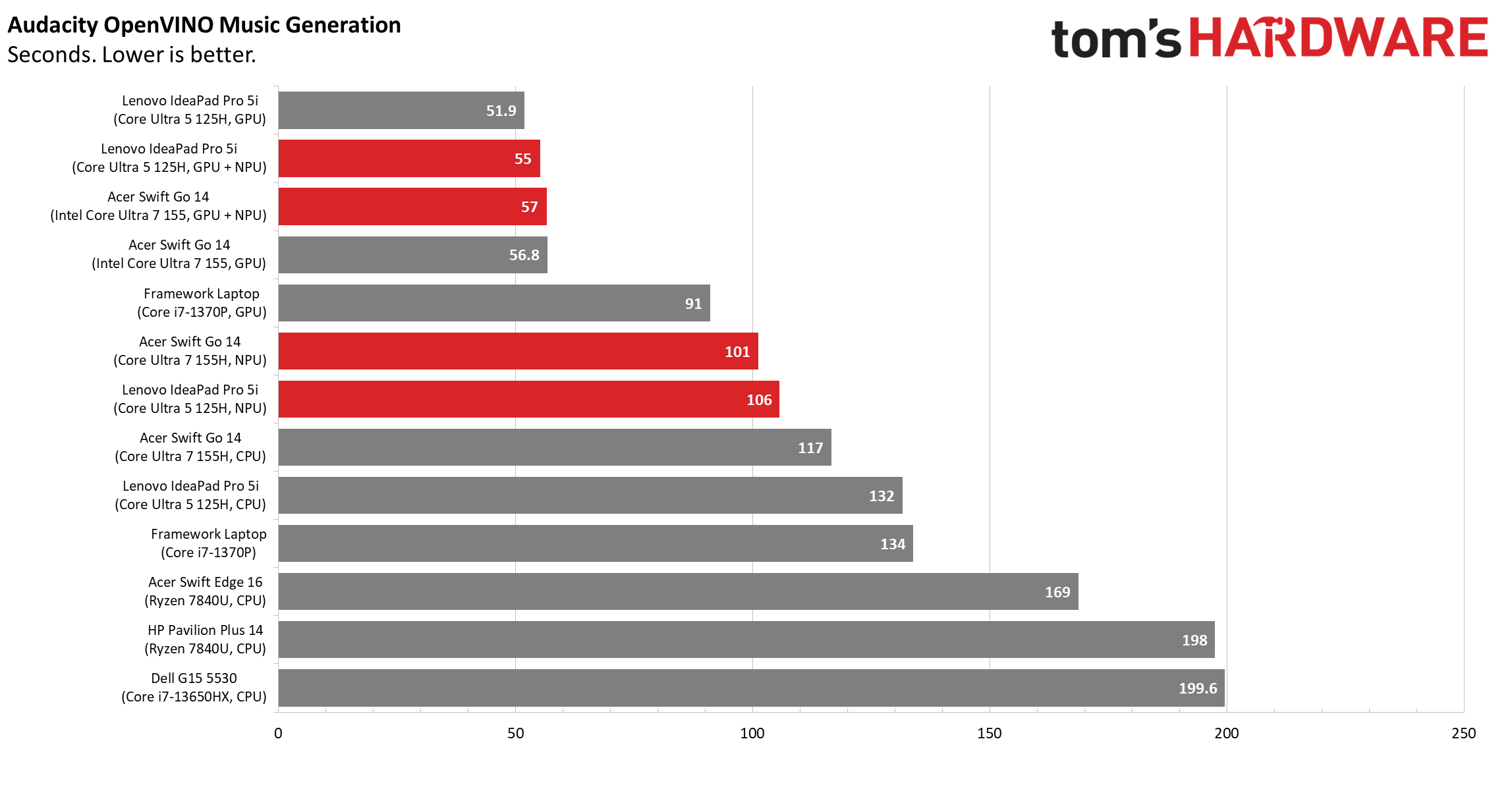

Test 4: OpenVINO Music Generation with Stable Audio

One of the other interesting OpenVINO plugins for Audacity uses Stable Audio, Stability AI's music models, to generate tunes from text prompts. Before you get too excited, I should note that the tunes I got from it were on par with one of the "beats" buttons on a Casio Music Maker from 1986. They were very generic MIDI loops that sounded very synthetic.

I also found that, while you can use anything you want as a prompt, it can't actually mimic the style of any particular artist. When I put in "taylor swift," I got static back. When I entered "Ghostbusters," hoping to get a song like the Ray Parker Jr. ditty, I ended up with some kind of creepy dirge, and when I entered a band name with a music style (ex: "AC/DC rock"), it really only paid attention to the music genre word.

For our tests, I asked for a 30-second song with the prompt "jazz," 6 interpolation steps and 20 inference steps. There are five different device settings here: Text Encoder Device, Unet + Device, Unet - Device, VAE Decoder Device and VAE Encoder Device. And you can choose among CPU, GPU or (on Unet + / -) NPU.
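The rule that NPU is only offered on the two Unet settings can be written down as a small check. This is a sketch under stated assumptions: the stage keys and the `validate_plan` function are hypothetical names for this example, and the plugin itself may enforce the restriction differently.

```python
# Hypothetical keys for the five device settings described above.
STAGES = ("text_encoder", "unet_plus", "unet_minus", "vae_decoder", "vae_encoder")
NPU_STAGES = {"unet_plus", "unet_minus"}  # NPU was only offered on Unet + / -


def validate_plan(plan: dict) -> list:
    """Return a list of problems; an empty list means the plan is acceptable."""
    problems = []
    for stage in STAGES:
        device = plan.get(stage)
        if device not in ("CPU", "GPU", "NPU"):
            problems.append(f"{stage}: missing or unknown device {device!r}")
        elif device == "NPU" and stage not in NPU_STAGES:
            problems.append(f"{stage}: NPU is not offered for this setting")
    return problems


# GPU for every setting except Unet -, which goes to the NPU.
gpu_plus_npu = {stage: "GPU" for stage in STAGES}
gpu_plus_npu["unet_minus"] = "NPU"
print(validate_plan(gpu_plus_npu))
```

A plan that tried to put, say, the VAE decoder on the NPU would come back with one problem listed instead of an empty list.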

Times on this test ranged from 51.9 seconds on the low end to 199.6 seconds on the high end. Here, Meteor Lake won and did so primarily on the strength of its integrated Intel Arc GPUs. The top results were all either in GPU mode or a combination of GPU + NPU (GPU for all settings except Unet -).

On this particular test, AMD's processors did not fare well at all, taking a painful 169 and 198 seconds to complete the 30-second song.

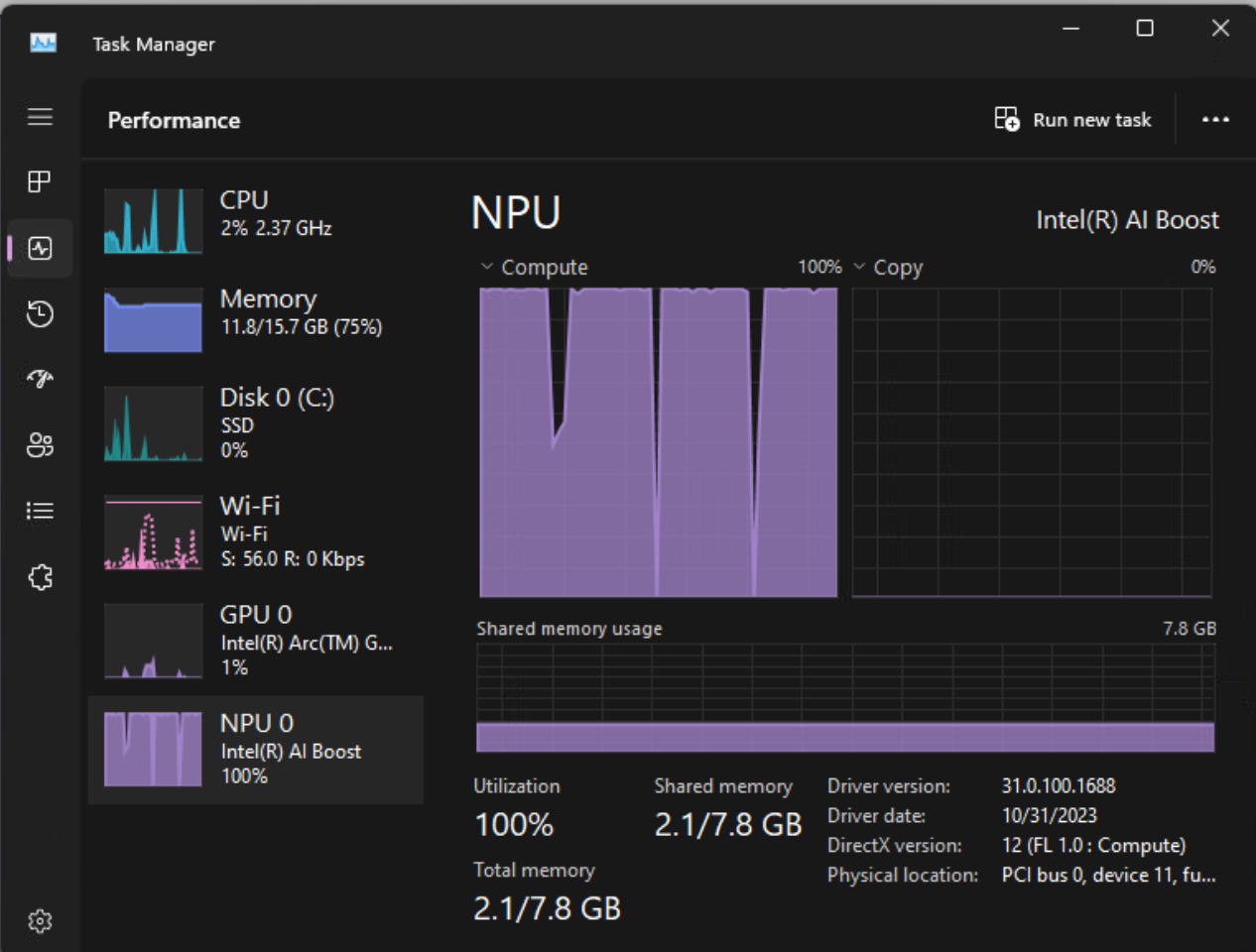

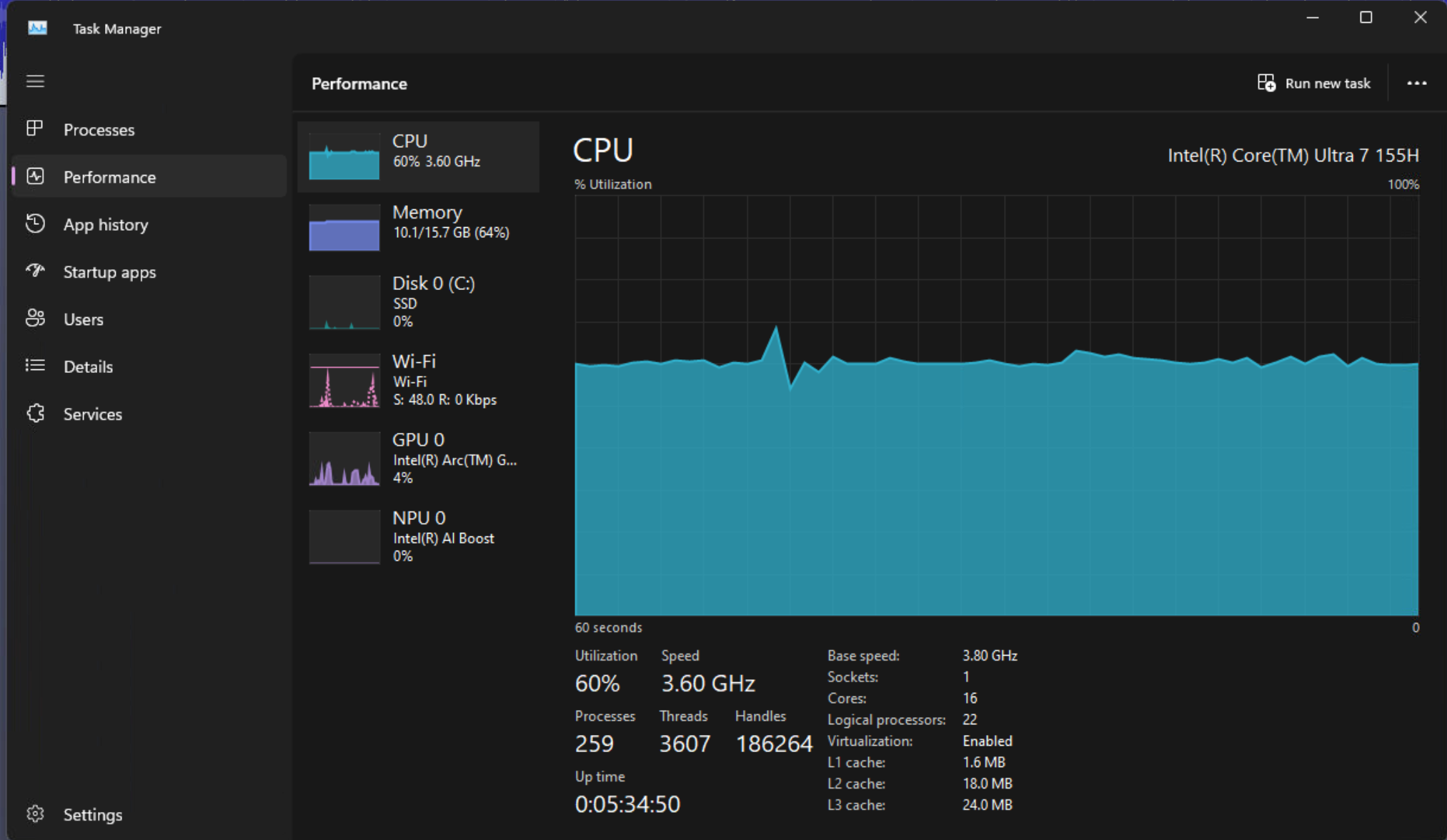

NPU Relieves the CPU

One benefit of the NPU that our test numbers don't reflect is how it takes a load off of your CPU. For example, when I ran an NPU-exclusive workload on the Acer Swift Go 14 and opened up Task Manager, CPU utilization was at a mere 2 percent while the NPU was at 100 percent.

But when I ran the same workload in CPU mode, the processor was pegged at 60 percent during the task. If we were unplugged, relying on the NPU would probably conserve power for battery life. Also, with the NPU taking on the AI workload, the CPU is free to do other things.

Will people be sitting in the airport, far from an outlet and using Audacity to generate AI music? I'm doubtful that will happen much in real life, but there are other AI workloads which could be happening during seemingly "regular" PC use.

For example, Rewind AI is working on a Windows version of its tool, which reads every single pixel on your screen and then uses machine learning to answer questions and offer solutions for your daily life (ex: putting a task on a to-do list because you mentioned it in a chat). If you don't find this kind of screen-reading creepy (I do), you'd need AI processing to handle it 24/7.

CPUs and Laptops Tested

| Processor | Cores | Integrated GPU / AI | Clock Speed | TDP | Laptops Tested |
|---|---|---|---|---|---|
| Intel Core Ultra 7 155H | 16 (6P + 8E + 2 Low-Power) | Intel Arc, NPU | 4.8 GHz Boost | 28W | Acer Swift Go 14 |
| Intel Core Ultra 5 125H | 14 (4P + 8E + 2 Low-Power) | Intel Arc, NPU | 4.5 GHz Boost | 28W | Lenovo IdeaPad Pro 5i |
| AMD Ryzen 7 7840U | 8 | Radeon 780M, Ryzen AI | 5.1 GHz Boost | 28W | Acer Swift Edge 16, HP Pavilion Plus 14 |
| Intel Core i7-1370P | 14 (6P + 8E) | Intel Iris Xe | 5.2 GHz Boost | 28W | Framework Laptop 13 |
| Intel Core i7-13650HX | 14 (6P + 8E) | Intel UHD | 4.9 GHz Boost | 45W | Dell G15 5530 |

All of the CPUs except the Core i7-13650HX have a 28W TDP and all of the Intel ones have some combination of performance and efficiency cores. Despite its higher TDP, however, the Dell G15 5530 didn't do that well on most of the tests, showing the importance of having dedicated cores, an AI accelerator or a higher-quality integrated GPU.

Bottom Line

The workloads we tested were just a small sampling of the many local AI use cases that exist today and a tiny fraction of those which will exist in the future. Some common workloads, such as using ChatGPT, may require cloud services forever, but in the age of the AI PC, more and more ML functions can take place on your computer, which is a good thing for your privacy.

Intel's Meteor Lake chips certainly save on power and system resources with their NPUs, but they aren't the fastest at every workload, even when using Intel's own OpenVINO framework, which is optimized for Intel products only. It would be nice to see more AI apps that are optimized for all kinds of hardware, from AMD's Ryzen AI accelerator to Nvidia's CUDA cores and Intel's NPUs.

So far, it looks like whatever app you choose is going to be biased in favor of one hardware platform or another. But AMD's Ryzen chips should be praised for being so competitive, even when the toolset is made by their competitor.

Avram Piltch is Managing Editor: Special Projects. When he's not playing with the latest gadgets at work or putting on VR helmets at trade shows, you'll find him rooting his phone, taking apart his PC, or coding plugins. With his technical knowledge and passion for testing, Avram developed many real-world benchmarks, including our laptop battery test.

-

xyster More efficient power usage is very welcomed within a laptop, as was the value gained by hardware video acceleration. And just like with HWA, freeing up more CPU capacity for other tasks is going to gain traction in some communities, like live streaming.

Imagine, a green screen, blurred background, or digital background effect on your video streams that adds no additional CPU load? The AV1 encoding would also be handled by the chip, leaving the CPU wide open to keep OBS from crashing.

I always figured the GPU would be the main horse in handling AI workloads, but that perception is changing. It paints a concerning picture for perhaps companies like Nvidia, who might start to lose relevance in the AI space as they did with crypto. The era of being able to game, mine, and train on a single desktop gaming GPU is slowly fading away. The entire China sanctions thing might only accelerate this.

Anyways, I think Intel has done quite well steering its ship in the right direction of late, and OpenVINO is finally maturing into something that's a bit of a blessing for all PC users. If OpenCL got traction instead of CUDA, maybe we'd be here already long ago. I'll wait for more comprehensive tests, but I'm excited to buy a new laptop -- no more 14nm+++! -

domih Readers can also refer to https://www.phoronix.com/review/intel-core-ultra-7-155h-linux

From the article:

<<In fact, out of 370 benchmarks run on both the Ryzen 7 7840U and Core Ultra 7 155H focused strictly on the processor performance, the Ryzen 7 7840U was the best performer 80% of the time!

When taking the geometric mean of all 370 benchmark results, the Ryzen 7 7840U enjoyed a 28% lead over the Intel Core Ultra 7 155H in these Linux CPU performance benchmarks. This was all the while the Ryzen 7 7840U was delivering similar or lower power consumption than the Core Ultra 7 155H with these tests on Ubuntu 23.10 with the Linux 6.7 kernel at each system's defaults. The Core Ultra 7 155H also had a tendency to have significantly higher power spikes than the Ryzen 7 7840U.>>

Chart: https://www.phoronix.com/benchmark/result/intel-core-ultra-7-155h-vs-amd-ryzen-7-7840u-linux-benchmarks/result-1.svgz

As the French say: "y a pas photo" (kind of slang expression which means: no need to use the photo to distinguish the winner on the finish line)

EDIT: Found after I posted this, Tomshardware refers to the Phoronix article there: https://www.tomshardware.com/pc-components/cpus/amds-ryzen-mobile-chips-beat-intels-new-meteor-lake-in-linux-benchmarks-ryzen-7-7840u-and-intel-core-ultra-7-155h-go-head-to-head

EDIT: On the other hand, the INTEL 7 155H with the new iGPU based on Arc Graphics shines and passes AMD RDNA 3 in a majority of benchmarks.

Ref: https://www.phoronix.com/review/meteor-lake-arc-graphics -

usertests

rluker5 said:

> How much power does the NPU consume? Isn't it on the low power SOC?

I'm wondering the same about these NPUs, although I think we can safely assume it's low and more efficient.

It's worth noting that Hawk Point has its "NPU" apparently clocked up to 60% higher than Phoenix for about 40% more performance. It's probably sacrificing a bit of efficiency for more performance.

domih said:

> EDIT: On the other hand, the INTEL 7 155H with the new iGPU based on Arc Graphics shines and passes AMD RDNA 3 in a majority of benchmarks.
>
> Ref: https://www.phoronix.com/review/meteor-lake-arc-graphics

Some of that is very obscure. It will be interesting to see how gaming actually shakes out.

Also, only Meteor Lake-H gets 8 Xe cores (128 EUs), while Meteor Lake-U will only come with 4 Xe cores (64 EUs). So comparing Intel's "U" to AMD's "U" won't go so well. -

ThomasKinsley I'm split on this. On the one hand although Meteor Lake makes some gains it comes up short in expected battery life and core performance. On the other hand the NPU might eventually mature and developers could leverage its use in many more applications after they learn how to code for it. -

bit_user

> The OpenVINO plugins specifically recognized the Meteor Lake laptops' NPU and presented it as a device option. However, they didn't recognize the Ryzen chips' Ryzen AI accelerator and present it as an option. So we don't know if the AMD laptops, which we ran in CPU mode, utilized their AI accelerators at all during testing.

No, if you didn't see it, then Ryzen AI definitely wasn't used by the Ryzen 7840U (i.e. Phoenix). At best, the OpenVINO CPU backend was simply using AVX-512 on the CPU cores (at worst, just AVX2).

Based on specs of both Meteor Lake's NPU and Phoenix' Ryzen AI, I get the sense that they are comparable and AMD might even have a slight edge. I look forward to a proper comparison between them. I hope AMD can facilitate this (e.g. by adding the proper support to OpenVINO). -

rluker5

usertests said:

> I'm wondering the same about these NPUs, although I think we can safely assume it's low and more efficient.
>
> It's worth noting that Hawk Point has its "NPU" apparently clocked up to 60% higher than Phoenix for about 40% more performance. It's probably sacrificing a bit of efficiency for more performance.
>
> Some of that is very obscure. It will be interesting to see how gaming actually shakes out.
>
> Also, only Meteor Lake-H gets 8 Xe cores (128 EUs), while Meteor Lake-U will only come with 4 Xe cores (64 EUs). So comparing Intel's "U" to AMD's "U" won't go so well.

Intel's MTL "U" has a max TDP of 15w while AMD's "U" has a max TDP of 28w. Not as comparable as the same letter suggests. You would have to compare by TDP. -

bit_user @apiltch, the labels in this graphic got truncated, preventing us from seeing whether some of the tests used CPU, GPU, NPU, etc.

-

bit_user

xyster said:

> I always figured the GPU would be the main horse in handling AI workloads, but that perception is changing. It paints a concerning picture for perhaps companies like Nvidia, who might start to lose relevance in the AI space as they did with crypto.

The iGPU in these Meteor Lake processors lacks the XMX cores (analogous to Nvidia's Tensor cores), seen in Intel's dGPUs. I think the jury is still out on whether GPUs can remain relevant, in the AI race.

Anyway, Nvidia, AMD, and Intel have all created separate architectures and product lines for their high end compute & AI products. They don't much resemble consumer GPUs, any more. Intel is also pursuing a parallel track of improving their Gaudi series of dedicated AI accelerators.

xyster said:

> OpenVINO is finally maturing into something that's a bit of a blessing for all PC users. If OpenCL got traction instead of CUDA, maybe we'd be here already long ago.

From what I've seen, OpenVINO's GPU backend actually uses OpenCL. If AMD would beef up their OpenCL support, perhaps it would "just work" on their GPUs, as well. -

bit_user

rluker5 said:

> Intel's MTL "U" has a max TDP of 15w while AMD's "U" has a max TDP of 28w. Not as comparable as the same letter suggests. You would have to compare by TDP.

As usual, the picture is more complex than that.

Processor Base Power 15 W

Maximum Turbo Power 57 W

Minimum Assured Power 12 W

Maximum Assured Power 28 W

Source: https://ark.intel.com/content/www/us/en/ark/products/237329/intel-core-ultra-7-processor-165u-12m-cache-up-to-4-90-ghz.html

I think it will turn out to be implementation-specific, and looking at what sustained power a specific laptop (or mini-PC) utilizes.