Meta will have 350,000 of Nvidia's fastest AI GPUs by end of year, buying AMD's MI300, too

Meta expects to have AI compute equivalent to 600,000 H100 GPUs by the end of 2024.

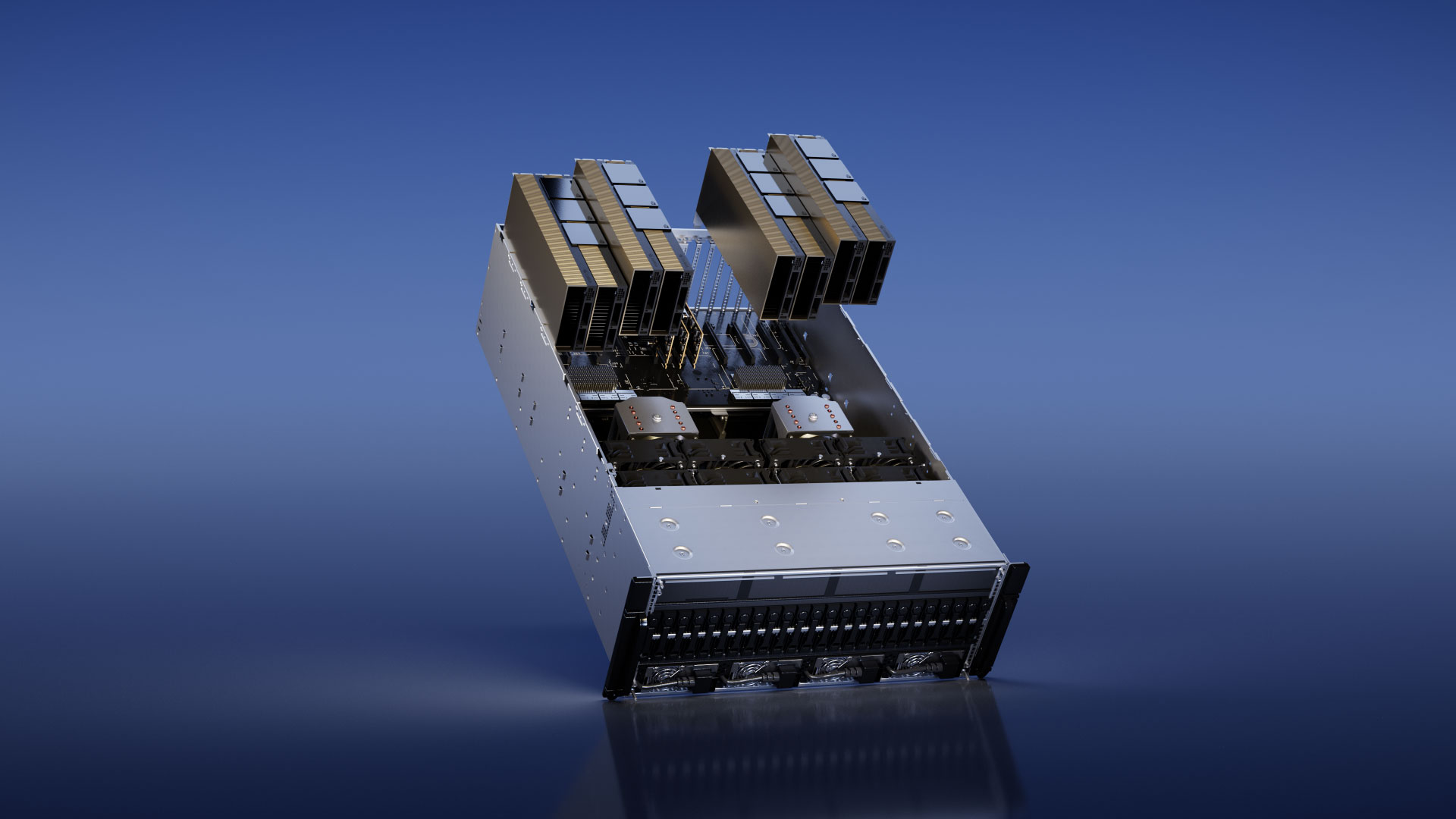

Mark Zuckerberg this week published an update on Meta's artificial intelligence (AI) efforts, focusing in particular on the hardware that powers them. By the end of the year, Meta will have AI hardware with performance equivalent to 600,000 Nvidia H100 GPUs. Meta, known as one of the largest buyers of Nvidia's H100 GPUs, apparently purchases many kinds of AI processors, including AMD's Instinct MI300.


"We are currently training our next-gen model Llama 3, and we are building massive compute infrastructure to support our future roadmap, including 350,000 H100s by the end of this year — and overall almost 600,000 H100s equivalents of compute if you include other GPUs," Zuckerberg wrote in an Instagram post.

According to Omdia, Meta procured some 150,000 Nvidia GPUs in 2023, a volume only Microsoft could match. Google, Amazon, Oracle, and Tencent each obtained only around 50,000 H100 GPUs, per the same Omdia figures. Meta is now on track to buy and install hundreds of thousands more Nvidia H100 GPUs in the coming quarters. Whether Nvidia can meet demand for its AI GPUs and AI-oriented Grace Hopper products is another question.

Dylan Patel, Chief Analyst of SemiAnalysis, projects that Nvidia will be able to increase output of its Hopper-based products (a family that today includes H100, H200, GH100, GH200, and H20) to 773,000 units in Q1 2024 and 811,000 units in Q2 2024. That is impressive growth, considering that the company shipped approximately 300,000 H100s in Q2 2023.

Notably, Zuckerberg says that by the end of 2024 his company will have AI performance equivalent to 600,000 H100 GPUs. This probably indicates that Meta will add some all-new Nvidia Blackwell AI GPUs to its fleet (which is logical, as its software is designed around CUDA-based processors) and will also procure AI hardware from other suppliers. In fact, according to SemiAnalysis, both Meta and Microsoft are buying plenty of AMD's Instinct MI300 processors too.
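To put the gap between the two figures in perspective, here is a back-of-the-envelope calculation. It assumes that "almost 600,000" means exactly 600,000 and that each H100 counts as one H100-equivalent. Neither Meta nor the article specifies how other GPUs are converted into H100-equivalents, so the result is only a rough estimate of how much compute would come from non-H100 hardware.

```python
# Rough estimate only: figures taken from Zuckerberg's post, rounded.
# Assumption: each H100 counts as exactly 1.0 H100-equivalent.
H100_COUNT = 350_000             # H100 GPUs Meta expects by end of 2024
H100_EQUIVALENT_TOTAL = 600_000  # "almost 600,000" treated as 600,000


def non_h100_share(h100s: int, total_equiv: int) -> tuple[int, float]:
    """Return the H100-equivalents not covered by H100s, and their fraction of the total."""
    other = total_equiv - h100s
    return other, other / total_equiv


other, share = non_h100_share(H100_COUNT, H100_EQUIVALENT_TOTAL)
print(f"{other:,} H100-equivalents ({share:.0%}) from other GPUs")
```

Under these assumptions, roughly 250,000 H100-equivalents (about 42% of the total) would come from GPUs other than the H100.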

"While Nvidia has capacity to continue to grow in the 2nd half, the demand modeling we have done indicates a fall off in Hopper demand as hyperscalers shift to looking towards Blackwell," Dylan Patel told Tom's Hardware. He added that Meta and Microsoft are also buying significant volumes of MI300.

Meta's longer-term goal is to build artificial general intelligence (AGI) that can not only learn, generate, and respond, but also possess cognitive capabilities and the ability to reason about its decisions. To achieve this, Meta is bringing its fundamental AI research and generative AI research efforts closer together. Zuckerberg did not disclose whether the existing hardware is sufficient to build working AGI models.


"Our long-term vision is to build general intelligence, open source it responsibly, and make it widely available so everyone can benefit," the head of Meta said. "We are bringing our two major AI research efforts – Fundamental AI Research (FAIR) and Generative AI — closer together to support this."

In addition to building massive AI-oriented datacenters to support its FAIR and GenAI efforts, Meta is also working on AI-enabled devices and plans to expand its lineup of such gadgets.

"Also really excited about our progress building new AI-centric computing devices like Ray Ban Meta smart glasses," Zuckerberg said. "Lots more to come soon."

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.
