How to Make a Good Built-in Game Benchmark

Hint: Extra delays suck

My job consists largely of running gaming benchmarks and then writing about the results. When I look in the benchmark results folders for some of the games I've used a lot and find over 2,000 individual test results, it causes me to reflect. But what hurts more than realizing that I've spent over 200 hours in Shadow of the Tomb Raider (most of that time spent running benchmarks) is knowing just how pointless a lot of that testing was.

This article is less for our normal readers, though hopefully some of you will appreciate my ranting, and more for the game developers. Creating a built-in benchmark takes time and effort, but even after all that effort, some games just cause the people who are most likely to use the feature — people like me — extra pain for no good reason. So, here are the things I wish built-in benchmarks would get right, along with call-outs to some particularly good examples, as well as a public shaming of some egregious games.

1 - Repeatability Is Critical

The first requirement of any good benchmark needs to be repeatability. If I run a benchmark five times in a row, using the same settings, the results should all fall in a narrow range. I always toss the first result since the graphics card hasn't warmed up yet, game data files might not be cached, and there's usually a lot more variability on that first run. The second, third, etc., runs, however, shouldn't have more than about a 1% spread. Unfortunately, that's often not the case.
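To make the "narrow range" idea concrete, here's a minimal Python sketch of that routine: drop the first run, average the rest, and check whether the remaining runs fall within a 1% spread. Measuring spread as the gap between the fastest and slowest run relative to the slowest is an assumption here, as are the function names and the sample fps figures.

```python
from statistics import mean


def spread_percent(results: list[float]) -> float:
    """Gap between the fastest and slowest result, as a percentage of the slowest."""
    return (max(results) - min(results)) / min(results) * 100


def check_runs(runs_fps: list[float], max_spread_pct: float = 1.0) -> tuple[float, bool]:
    """Drop the first (warm-up) run, then return the average of the remaining
    runs and whether their spread stays within max_spread_pct."""
    measured = runs_fps[1:]  # first run: GPU not warmed up, data files not cached
    if len(measured) < 2:
        raise ValueError("need at least two runs after the warm-up run")
    return mean(measured), spread_percent(measured) <= max_spread_pct


# Hypothetical average-fps results from five back-to-back runs at identical settings.
steady = [97.2, 101.4, 101.9, 101.1, 101.6]
noisy = [90.0, 100.0, 105.0, 101.0, 99.0]

print(check_runs(steady))  # average about 101.5, spread about 0.8%: passes
print(check_runs(noisy))   # spread about 6.1%: fails, so more runs are needed
```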

Take Dirt 5 as an example. All of the cars are simulated in the benchmark, and the weather seems to vary a bit as well. Sometimes you get a "perfect" run, and performance might be 5% faster than on a "bad" run. That means repeating the test more times to ensure the results represent what the hardware can do, rather than showcasing whether a particular card got lucky.

Assassin's Creed Odyssey is another terrible example, or at least it was at launch. Weather effects like rain and heavy clouds could drop performance by as much as 20%. The potential for rain was later removed from the benchmark, but the cloud cover still impacts framerates. A clear, sunny day can perform over 10% better than a heavily clouded day.

It's not just weather and time of day effects, though. Gears 5 has an otherwise great built-in benchmark (the Microsoft Store notwithstanding), but it has issues with framerate caps at the beginning of the test about every second or third run. I'm not sure if that was ever fixed, but it was possible to get a 60 FPS cap for the first 10 seconds or so on some runs, which could skew performance downward by 15% or more.

So, skip the randomness for any built-in benchmark, even if that's normally a big part of an actual game. The same cars should win every race, in the same order, like in Forza Horizon 4. The same people should get shot, in the same way, every time a test gets run. I don't want extraneous people or effects that only show up a third of the time, periodic rainfall, etc. Just make it consistent, please.
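For developers, one common way to get that consistency, assuming all of the simulation's randomness comes from a pseudo-random generator under the game's control, is to seed that generator with a fixed value whenever the benchmark runs. The Python sketch below is illustrative only: `simulate_race`, the driver names, and the seed value are hypothetical, and a real engine may have other sources of run-to-run variation (multithreaded timing, for instance) that seeding alone won't fix.

```python
import random
from typing import Optional


def simulate_race(drivers: list[str], seed: Optional[int] = None) -> list[str]:
    """Hypothetical stand-in for race or AI logic: returns a finishing order.

    A fixed seed (benchmark mode) makes every run identical; seed=None
    (normal play) seeds from the OS, so results vary from run to run.
    """
    rng = random.Random(seed)  # private generator, unaffected by other code using random
    order = list(drivers)
    rng.shuffle(order)
    return order


drivers = ["Red", "Blue", "Green", "Yellow", "White", "Black"]
BENCHMARK_SEED = 1234  # arbitrary fixed value

first = simulate_race(drivers, seed=BENCHMARK_SEED)
second = simulate_race(drivers, seed=BENCHMARK_SEED)
print(first == second)  # True: same winners, in the same order, every run
```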

2 - Don't Waste Time

This is actually my biggest pet peeve in built-in benchmarks. As much as I want a reliable test, big time-wasters are the bane of my existence as a graphics card tester. These can range from navigating a few extra screens every time, to long test sequences that stop being meaningful after the first minute or so, to horribly slow loading times. All of these are bad, most are unnecessary at best, and some are outright hostile at worst. Anything that takes more than two minutes basically wastes time providing data that few, if any, people will notice.

Ubisoft is an interesting case when it comes to built-in benchmarks. Most of their big-name games include a built-in benchmark, which I greatly appreciate. For example, all of the recent Assassin's Creed games have one, the Far Cry games have included a test sequence since the second game in the series, and The Division 2 and Watch Dogs Legion also include useful benchmarks. But the games differ greatly in how easy it is to repeat a test.

Far Cry 5 shows you the results at the end; press escape to leave the page, and you're right back at the display settings page where you started. Press F5, and you can quickly run the test again. Great! Assassin's Creed Valhalla is on the other end of the spectrum.

First, the benchmark can take 30–40 seconds to start from the main menu (though at least it's not buried in the video submenu). But at the end of the test, when you're shown the results, pressing escape drops you back to the title screen before the main menu — and takes 15 seconds to get there in the first place. Then, it's another five-second transition to get back to the main menu to restart the benchmark. Basically, it's at least one minute of waiting and navigating menu screens for every 90 seconds of the actual benchmark.
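The arithmetic behind that complaint adds up quickly over a full test session. This sketch uses the rough Valhalla numbers above (about 90 seconds of benchmark and at least a minute of overhead per run) and assumes five runs; the `session_cost` helper is purely illustrative.

```python
def session_cost(benchmark_s: float, overhead_s: float, iterations: int) -> tuple[float, float, float]:
    """Return (total seconds, seconds spent waiting, waiting fraction) for a test session."""
    total = (benchmark_s + overhead_s) * iterations
    waiting = overhead_s * iterations
    return total, waiting, waiting / total


# Approximate Valhalla figures: ~90 s of benchmark plus ~60 s of loading
# and menu navigation per run, repeated five times.
total, waiting, fraction = session_cost(90, 60, 5)
print(f"{total / 60:.1f} min total, {waiting / 60:.1f} min waiting ({fraction:.0%})")
# 12.5 min total, 5.0 min waiting (40%)
```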

The Division 2 has shorter transitions than Valhalla, which is greatly appreciated, and it leaves you on the same Benchmark menu option after you exit the results screen. Press enter twice, and you're back in business. Watch Dogs Legion takes you back to the main menu, but at least the transitions only take a few seconds rather than 20 or 30 seconds. Sadly, the Far Cry 6 benchmark is actually a step backward from the previous games, because it now drops you to the main menu like Legion. <Sigh> But at least it has relatively fast transitions between screens.

I think the best example of getting it right, again, is Forza Horizon 4, which lets you press "Y" on the detailed results screen to immediately restart the test. After the first run, it's literally about five seconds to start another run. Awesome!

Shadow of the Tomb Raider conveniently lets you immediately run another test from the results screen by pressing "R," but it commits other sins. The entire benchmark consists of three separate scenes, with black loading screens in between. The second sequence is useless (too light on the workload), and the third scene is arguably the best since it lasts longer and includes more NPCs, though the first sequence is also decent. Either way, the game wastes two minutes of my time for each benchmark run. It's not the worst example of this, sadly.

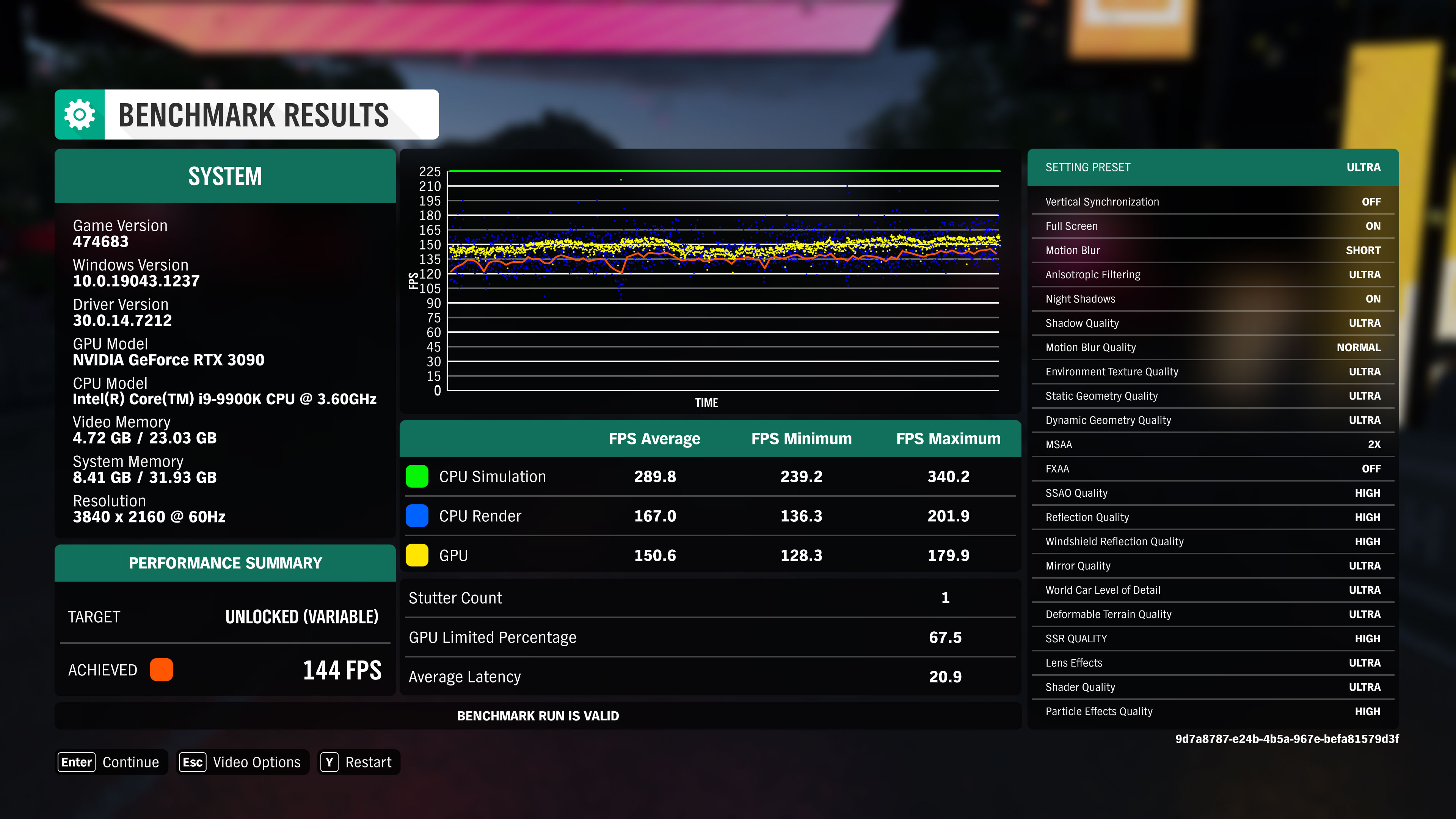

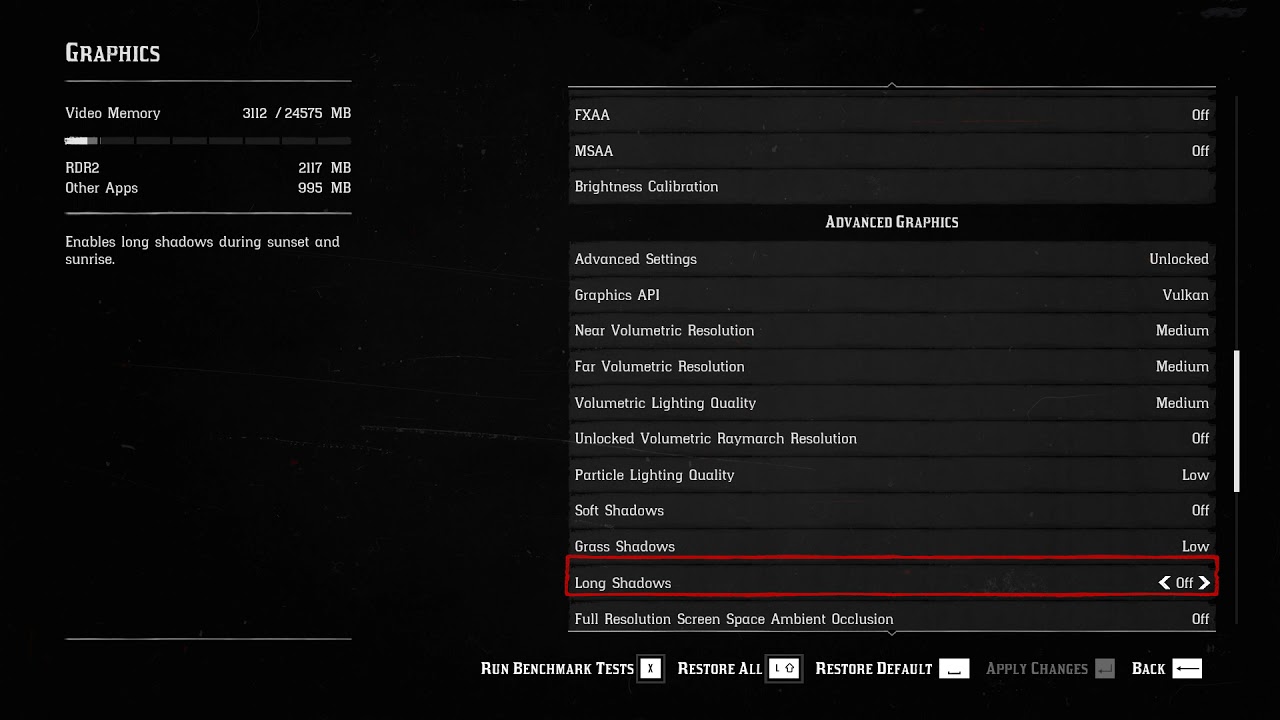

Rockstar earns a special place of disdain in my heart for both Grand Theft Auto V and Red Dead Redemption 2, for exactly the same reasons. First, neither game includes a proper collection of presets; both have dozens of settings, and many of those settings matter very little. Even worse, both games take a virtual eternity to run the benchmark sequence. GTAV requires about four minutes per loop, and RDR2 'wins' as the longest benchmark in my suite at about six minutes per loop, about a minute of which is spent in loading screens and menu navigation. Each game shows four shorter scenes that aren't particularly useful (IMO), followed by a longer fifth scene, the one I actually use for testing.

3 - Needs to Represent Actual Gameplay

This one should be obvious, but a benchmark needs to give a reasonable approximation of the performance people will encounter when actually playing the game. A canned benchmark that runs 25% faster than the game isn't useful. Most games with built-in benchmarks get this right, but there are always exceptions.

Strange Brigade immediately comes to mind as a game with a somewhat questionable built-in benchmark sequence. It's consistent and easy to run, but the test has tons of characters frozen in time while a camera flies through the scene. It's one of the reasons the framerates can hit such high levels — no AI or other calculations need to happen. That doesn't make the benchmark useless, but it does make it less pertinent for anyone who wants to know what hardware is required to hit a certain level of performance.

Using a canned benchmark that properly represents gameplay means using one of the more demanding sequences a user might encounter while playing a game. For example, if some levels run at 120 fps but others only run at 90 fps, use the latter. Otherwise, people should just use 3DMark, which would likely tell them just as much about how well their PC can run games.

Of course it's possible to benchmark just about any game in a way that provides real-world performance metrics. Anything without a built-in benchmark can be manually tested using OCAT, PresentMon, or similar tools. But that means having someone physically sit there and run the exact same test sequence potentially hundreds of times — something I do for certain games. It's far more time-consuming and not necessarily any better than most built-in benchmarks.
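Tools like OCAT and PresentMon record the time taken by each frame, and the useful metrics come from processing that log. Below is a minimal Python sketch, assuming you've already pulled one run's frametimes (in milliseconds) into a list. It reports the average fps and the 99th-percentile frametime converted to fps, one common way to capture stutter that an average hides; tools and outlets define such "low" metrics differently, so treat this as one convention rather than the definitive one.

```python
from statistics import mean


def summarize(frametimes_ms: list[float]) -> dict[str, float]:
    """Average fps plus 99th-percentile frametime (as fps) for one benchmark run."""
    # Average fps = frames / seconds = 1000 / mean frametime in ms.
    avg_fps = 1000.0 / mean(frametimes_ms)
    ordered = sorted(frametimes_ms)
    # Nearest-rank 99th percentile: the ceil(0.99 * n)-th smallest frametime,
    # computed with integer math to avoid floating-point rounding surprises.
    rank = (99 * len(ordered) + 99) // 100
    p99_ms = ordered[rank - 1]
    return {"avg_fps": avg_fps, "p99_fps": 1000.0 / p99_ms}


# Hypothetical run: 98 smooth frames at 10 ms plus two 50 ms stutters.
frames = [10.0] * 98 + [50.0] * 2
print(summarize(frames))
```

With that sample data, the average stays above 90 fps while the 99th-percentile figure drops to 20 fps, which is exactly the kind of hitching a bare average would paper over.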


4 - Keep the Settings Relatively Simple

Presets are great, and while I appreciate being able to fine-tune my settings as much as anyone, it's possible to get far too much of a "good" thing. Basically, if a setting doesn't make more than a 1–2% difference in performance, especially if it's difficult to see the difference in screenshot comparisons, merge it with some other setting. Not including presets makes it easy to accidentally use different settings when testing hardware, leading to non-comparable results.
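One way a developer could apply that 1–2% rule is to measure each setting's isolated performance impact and flag the ones that barely move the needle as candidates for merging. The sketch below assumes a baseline with everything maxed and one measurement per setting with only that setting lowered. The setting names and fps values are made up, and it only covers performance, so the screenshot comparison still has to be done by eye.

```python
def low_impact_settings(
    baseline_fps: float,
    fps_with_setting_lowered: dict[str, float],
    threshold_pct: float = 2.0,
) -> list[str]:
    """Names of settings whose change moves performance by no more than threshold_pct."""
    flagged = []
    for name, fps in fps_with_setting_lowered.items():
        change_pct = abs(fps - baseline_fps) / baseline_fps * 100
        if change_pct <= threshold_pct:
            flagged.append(name)
    return sorted(flagged)


# Hypothetical results: everything at max gives 100 fps; each entry is the fps
# measured after lowering just that one setting.
results = {"shadows": 112.0, "film_grain": 100.4, "reflections": 108.0, "lens_flare": 101.1}
print(low_impact_settings(100.0, results))  # ['film_grain', 'lens_flare']
```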

Provide useful presets as well. Most games have low, medium, high, and ultra as presets, and that's more than sufficient. We don't need very low and very high since those should really be the low and ultra settings. What we do need, however, are extra presets for games that include particularly strenuous features like ray tracing.

Cyberpunk 2077 includes a built-in benchmark now (though it makes you go back to the main menu between iterations), and it has low, medium, high, ultra, DXR-medium, and DXR-ultra presets. Most other games with ray tracing will stick those settings down in an extra section at the bottom, which is less great. Control also gets this right, with a primary preset on the top of its settings page, plus a ray tracing preset below, though again, it's easy to reach the point of settings overload if a developer isn't careful.

Bad examples are particularly numerous. I've already called out Rockstar's GTAV and RDR2 above, and Red Dead Redemption 2 has about 30 settings you can tweak, with a "Quality Preset Level" that actually doesn't select the same settings for every GPU — it seems to base its recommendations mostly on how much VRAM your card has. Two thumbs down! Gears 5 also had up to 28 settings you could tweak, only five of which caused more than a 5% change in performance. Borderlands 3, Assassin's Creed Valhalla, Destiny 2, and many other games have upward of a dozen customizable settings, often with fewer than half having more than a negligible impact on visuals and performance.

Of course, it's possible to go too far in the other direction by not including enough settings to tweak. For example, The Outer Worlds only had six individual settings, with no way to disable anti-aliasing or motion blur. Basically, if a setting can significantly impact performance or visuals, it should be included as something the user can adjust.

Other related complaints include spreading the settings out over multiple menus. Do we really need separate display, graphics, and advanced graphics screens? No, we don't. There's also no reason to put stuff like FidelityFX Super Resolution on a different menu from the resolution section, and please stop using scaling factors as part of the presets (looking at you, Fortnite). When a game says 1920x1080, that should be at 100% scaling by default. I don't mind providing users with the option to tweak that, but let us decide to use upscaling. Otherwise, testers — the ones actually using the benchmark function! — will just need to remember to manually change this every time they use a preset, and we're likely to make mistakes, which wastes time and potentially leads to bad data.

5 - Bonus Points, Extras, and Penalties

The above guidelines are things any developer should consider when including a built-in benchmark in a game. However, they're more of a minimum than a complete list, and there are plenty of other extras that can be useful.

One approach a few games have taken is the standalone benchmark tool. Metro Exodus (and previous Metro games) has a benchmark.exe file that lets you select the settings and then start the benchmark. Even better, it lets you specify the number of iterations, and it spits out a CSV file with all the frametimes for each run. This is a convenient and easy-to-use benchmark; the only real drawback is that you can't remove the defaults from the list, which makes the "run all" option a bit of a waste. Also, it opens a browser window after each settings test and sometimes doesn't give focus back to the game window, which can result in invalid runs.
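Per-frame CSV output is what makes a tool like this so convenient: you can compute whatever metrics you want from the raw data. Here's a small Python sketch that reads one run's log and returns its average fps. The `frametime_ms` column name and the sample log are hypothetical placeholders, and this doesn't reproduce Metro's actual file layout, so adjust the column to match whatever the tool writes.

```python
import csv
import io
from statistics import mean


def avg_fps_from_csv(csv_text: str, column: str = "frametime_ms") -> float:
    """Average fps for one run, read from a per-frame CSV log.

    The default column name is a hypothetical placeholder; each tool names its own.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    frametimes = []
    for row in reader:
        value = (row.get(column) or "").strip()
        if value:  # skip blank or missing cells
            frametimes.append(float(value))
    return 1000.0 / mean(frametimes)


# Tiny hypothetical log: three frames at 10, 20 and 10 ms.
log = "frame,frametime_ms\n1,10.0\n2,20.0\n3,10.0\n"
print(round(avg_fps_from_csv(log), 2))  # 75.0
```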

Final Fantasy XIV also has a standalone benchmark, and it's completely standalone — you don't even need to own the game. That's good, though the benchmark does take over five minutes to run, has black screens during scene transitions (and includes the low fps stutters that happen while loading the new scene in the benchmark results), and I'm not certain if the benchmark is representative of normal gameplay. It doesn't provide a CSV of the results either, instead giving a nebulous overall score.

Another great feature to include is the ability to launch a benchmark from the command line, including specifying the settings to use. The most recent Hitman games have been a good example of this. Ashes of the Benchmark... er... Singularity also had the ability to script things. It's possible but far more complex to manually script benchmarks, and often such scripts can skew the results. Batch files are far better in my book.
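Here's roughly what that kind of batch automation looks like, written as a Python sketch rather than a .bat file. The executable name and every flag (`-benchmark`, `-preset`, `-tag`) are hypothetical placeholders, not the actual command-line syntax of Hitman, Ashes, or any other game; each title defines its own. The script builds one command per preset and iteration, prints it, and (when `dry_run` is off) launches it and waits for it to finish.

```python
import shlex
import subprocess


def build_runs(exe: str, presets: list[str], iterations: int) -> list[list[str]]:
    """One command line per preset and iteration. All flags here are hypothetical."""
    runs = []
    for preset in presets:
        for i in range(1, iterations + 1):
            runs.append([exe, "-benchmark", "-preset", preset, "-tag", f"{preset}-run{i}"])
    return runs


def run_all(runs: list[list[str]], dry_run: bool = True) -> None:
    """Print each command line and, unless dry_run is set, launch it and wait."""
    for cmd in runs:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # blocks until the benchmark process exits


run_all(build_runs("game.exe", ["medium", "ultra"], iterations=3))
```

Passing each command to `subprocess.run` as a list avoids shell-quoting problems; `shlex.join` is only used to print a readable log line.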

On the other side of the fence, let's also note that any modern game that implements a (usually temporary) lock-out feature when it detects too many hardware swaps needs to cut the crap. Denuvo did this, and it made benchmarking games with Denuvo a major pain. We have DRM of various forms built into Steam, Origin, Epic, and other digital platforms. Meanwhile, GoG gained a lot of goodwill by skipping DRM entirely. Either way, there's no sense in locking out a user for using too many different sets of hardware. That's especially true since the platforms don't allow concurrent play from multiple locations anyway.

Framerate caps are another horrible 'feature' that still shows up on occasion, most recently in Deathloop. Games have successfully worked with uncapped framerates since the original Quake and Unreal, so the notion that some developers and engines still require caps strikes me as absurd. Not surprisingly, most games that include a benchmarking utility also feature unlocked framerates, and for people who want to lock things down there's always vsync. We're also not concerned with capped fps in game menus, where caps don't really hurt anything (and may protect your GPU, as in New World). Otherwise, there's no reason for fps caps these days. Monitors already go as high as 360Hz, and we'll probably see even higher refresh rates in the future, so please: Quit locking the framerate to some arbitrary value.

To Err Is Human... (Closing Thoughts)

Look, I get that benchmarks aren't for everyone — some people just want to play games and not worry about settings and performance. While console players obviously fall into that grouping, there are plenty of PC gamers that just want things to work. But if a game is going to go to the trouble of including a built-in benchmark, hopefully these tips will help to make the included benchmark better.

I can think of few things worse than spending dozens of hours or more on something that nobody wants to use. I worked as a software developer in a previous job, and we did precisely that: I worked 50–60 hour weeks for the better part of two years, all the while toiling away on something that was clearly garbage. The product never saw the light of day, never mind the CEO's talk of changing the world. Someone needed to sit him down and say, in effect, we're doing it the wrong way!

That's the point of this piece about gaming benchmarks. If you're going to make one, make it a good one. Repeatability and relevance are important, but please don't waste my time, or the time of hundreds of other people who might use your benchmark. Get to the point of the test, basically. If a single run can't be done in less than two minutes, you're doing it wrong — and that includes all the menu navigation and such.

Let me close with a question for any readers that made it this far. What are some examples of great benchmarks, past or present, that you've seen? Are there any other games that need a public shout-out? Let us know in the comments.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

-

GenericUser: The original F.E.A.R. had a great benchmark in my opinion. It was relatively quick, gave a good sampling of different things to strain the card that would be realistically encountered in the game, gave some nice quick stats at the end (min, max and average framerates), and, most importantly of all, each benchmark run was identical to the last in terms of what occurred.

Partial tangent: I hate games where you choose an "ultra" or similar preset (basically whatever their highest global preset is) and there are a few settings that can still be pushed up one or two more levels. If I pick the preset for the highest thing, I expect every setting to be at its highest. I usually just set everything manually because of this.

Howardohyea: You really have to appreciate all the work reviewers put into benchmarking graphics cards; they definitely deserve more credit.

husker: "This article is less for our normal readers, though hopefully some of you will appreciate my ranting, and more for the game developers." I love a good rant. Coming from a professional just makes it all the better. 🍿