Far Cry 6 Benchmarks and Performance

A bit undercooked

Far Cry 6 has arrived, ushering in a new API and enhanced visuals compared to the last two games in the series. This marks the first time Far Cry has used the DirectX 12 API, a requirement for the DirectX Raytracing options. We've benchmarked over two dozen AMD and Nvidia GPUs, including most of the best graphics cards, to see how they stack up. Surprisingly, performance isn't significantly worse than Far Cry 5, at least not on our test system that appears to impose some CPU bottlenecks.

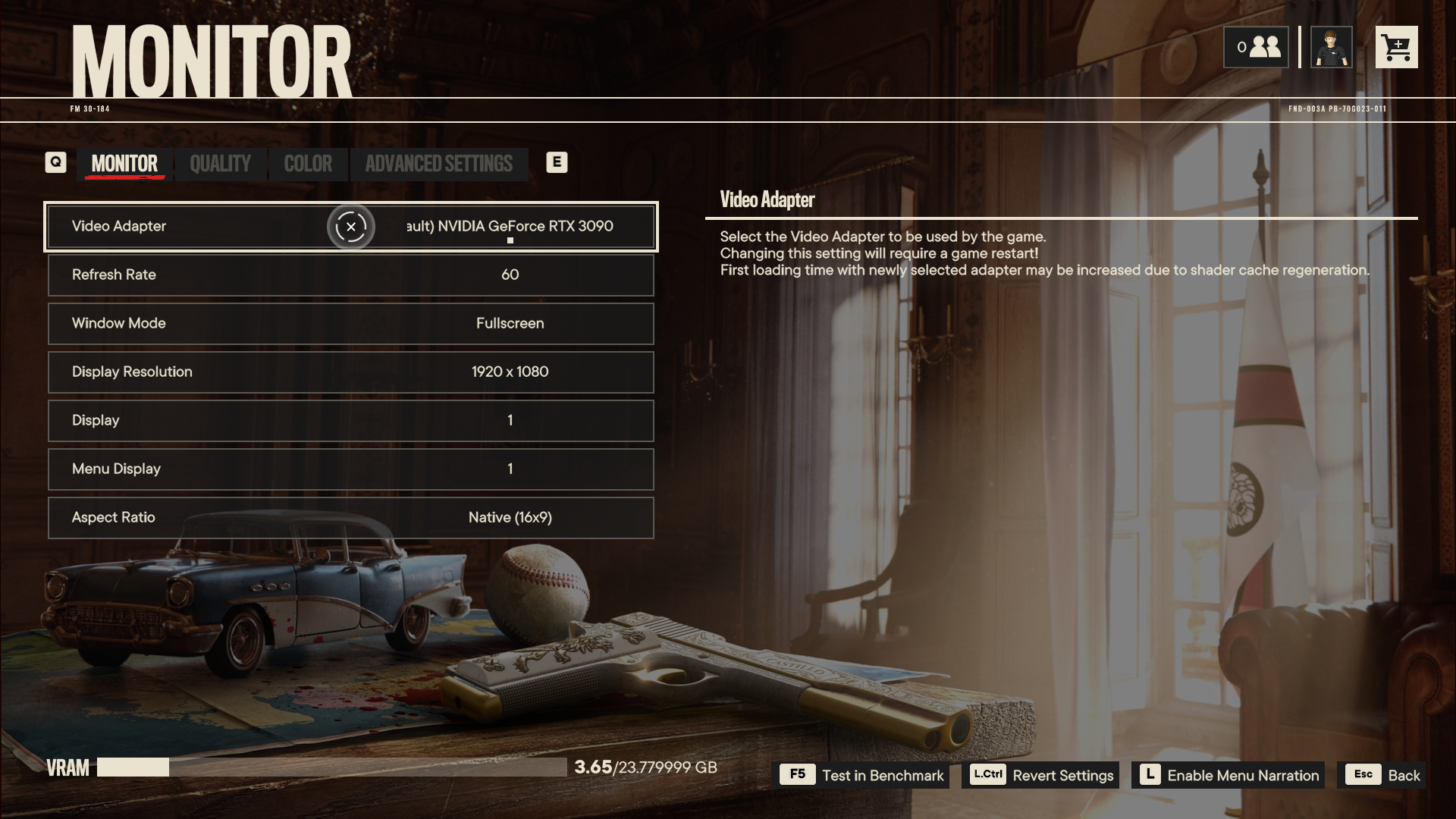

Officially, the Far Cry 6 system requirements look quite tame, at least for the minimum setup. A Radeon RX 460 or GeForce GTX 960 (both with 4GB VRAM) should suffice for more than 30 fps at 1080p low. Stepping up to 1440p ultra at 60 fps moves the needle quite a bit, with a recommended RX 5700 XT or RTX 2070 Super. Meanwhile, ray tracing at 4K ultra drops back to a 30 fps target, and an RX 6800 or RTX 3080 is recommended — and you really do need at least 10GB VRAM to handle native 4K ultra with DXR.

We've used our standard graphics test PC, equipped with a Core i9-9900K CPU, to see how the various GPUs actually stack up. That's a faster CPU than the recommended Core i7-9700 / Ryzen 7 3700, though we still ran into some bottlenecks.

We used Nvidia's most recent 472.12 drivers, which are not 'game ready' for Far Cry 6 — we asked about getting updated drivers but were not provided any in time for this initial look at performance. Meanwhile, AMD provided early access to its 21.10.1 drivers, which it released to the public on October 4, and those are 'game ready.'

Like most recent Far Cry games, Far Cry 6 includes a (mostly) convenient built-in benchmark, though the latest installment actually represents a step back in terms of ease of use (see our guide on how to make a good built-in benchmark for more details). We've used that for our testing, as it minimizes variation and keeps things simple. We tested each card multiple times at each setting, discarding the first run after launching the game, since that run usually scores better as the GPU hasn't warmed up yet and can boost slightly higher.
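The run-handling described above can be sketched in a few lines of Python. This is only an illustration: the article doesn't say how the remaining runs are combined, so averaging them is an assumption, and the function name and sample numbers are hypothetical.

```python
from statistics import mean


def summarize_runs(run_fps, discard_first=True):
    """Combine average-fps results from repeated benchmark passes.

    The first pass after launching the game is dropped (when more than one
    pass exists) because a cold GPU can boost higher and inflate the score.
    Averaging the remaining passes is an assumption, not the article's
    stated method.
    """
    if discard_first and len(run_fps) > 1:
        kept = list(run_fps[1:])
    else:
        kept = list(run_fps)
    return mean(kept)


# Hypothetical results from four passes at one setting
runs = [63.0, 61.0, 60.0, 62.0]
print(f"Reported result: {summarize_runs(runs):.1f} fps")
```

Dropping the first pass trades a little testing time for more repeatable numbers, which matters when cards are separated by only a few fps.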

For this initial look we used a preview version of the game provided by AMD and Ubisoft. We've since gone back to retest a few cards with the public release, and didn't notice any significant changes in performance. We've tested at 1080p, 1440p, and 4K using the medium and ultra presets. We left off the HD textures at medium and enabled them for ultra. We also tested at ultra with DXR reflections and DXR shadows enabled. We enabled CAS as well, mostly because I like the way it looks and it has a negligible impact on performance, left off motion blur, and only tested at native resolution — though I did run some additional tests with FidelityFX Super Resolution to see what sort of scaling users can expect.

We'll provide a deeper dive into the settings and image quality below, but let's get straight into the graphics card benchmarks. We've also added the newly launched Radeon RX 6600 to the charts now.

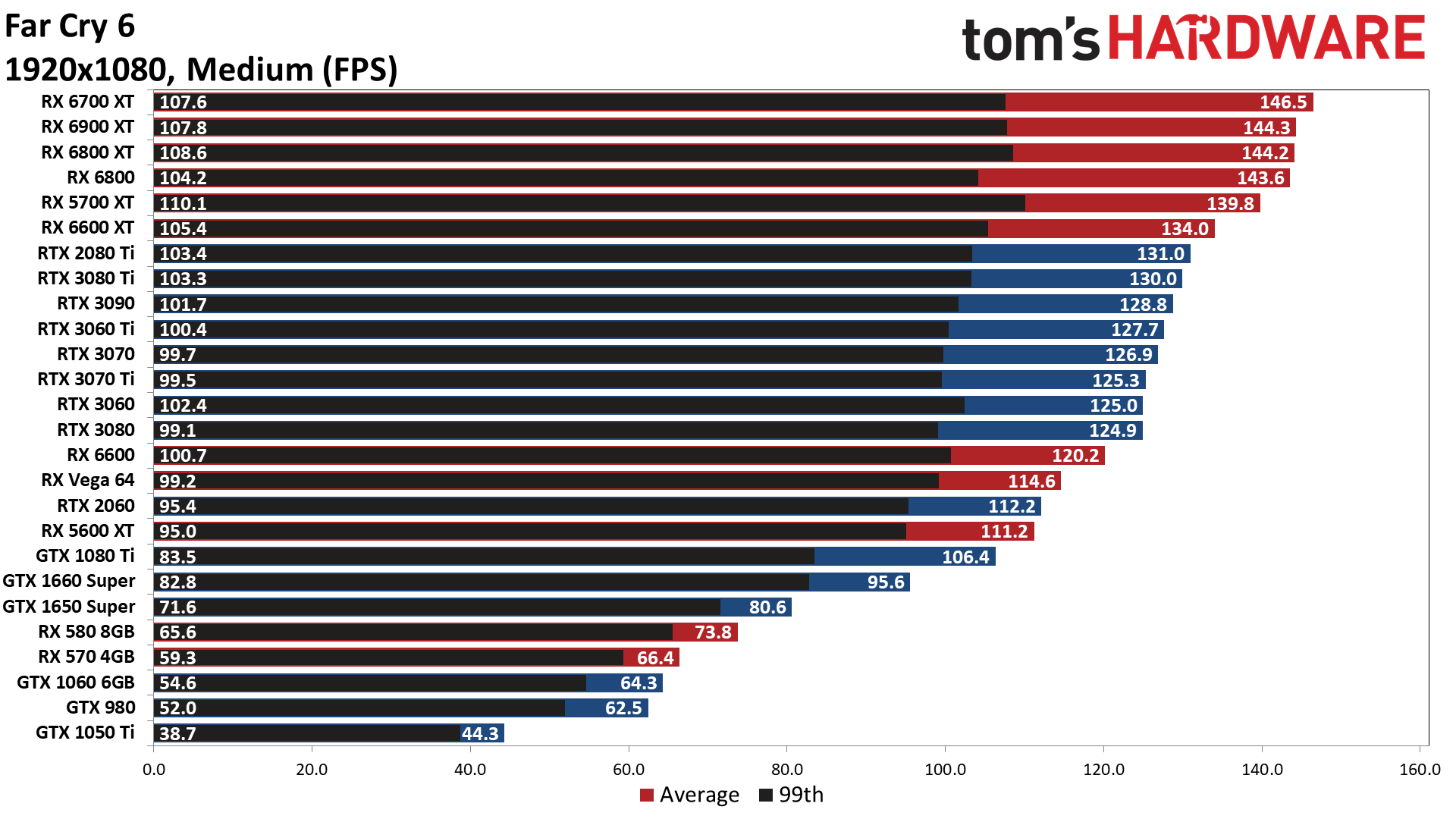

Did we mention that Far Cry 6 is an AMD-promoted game? We can't say for certain what's holding the Nvidia GPUs back, but the fastest cards can't even hit 144 fps at 1080p medium. Actually, if we're being frank, none of the cards maintain close to a steady 144 fps experience — AMD's fastest GPUs average around 144 fps, but minimums all hover in the low 100s. Nvidia's GPUs currently top out at around 130 fps.

There are also some weird variations among the GPUs at this admittedly low setting. Basically, the CPU bottlenecks combined with different GPU core counts and other elements mean that cards that are normally slower can end up outperforming higher-end models. We see that with AMD's RX 6700 XT as well as the RTX 2080 Ti. Again, updated Nvidia drivers, in particular, will likely improve the situation, but this is how things currently stand.

We don't have an RX 460 or GTX 960 still hanging around, so our slowest card for testing is the GTX 1050 Ti. That's generally a bit faster than the GTX 960 4GB, depending on the game code, though looking at the GTX 980 and GTX 1060 6GB, we suspect the 960 would be relatively close to the 1050 Ti. Anyway, it manages a reasonable 44 fps at 1080p medium, and dropping the settings to low should give it an added boost.

If you're after 60 fps or more, just about any reasonable mid-range or higher GPU released in the past five years should more than suffice. The GTX 1060 and RX 570 both clear that mark and most of the other GPUs hit triple digits — which is good, since GPU prices are still all kinds of messed up.

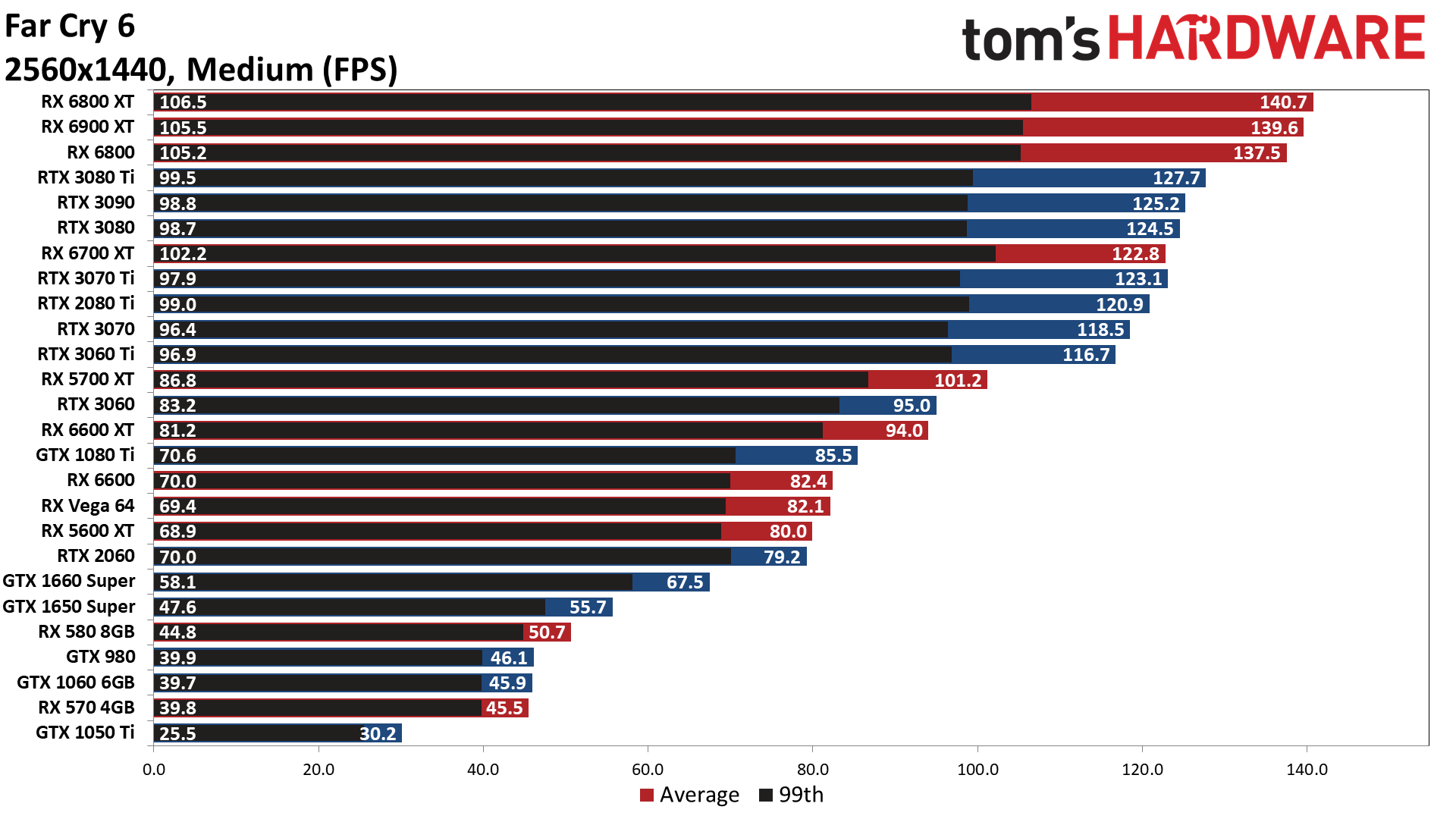

Most of the tested GPUs take a relatively small hit going from 1080p to 1440p at medium settings. We also start to see performance rankings line up more as we'd expect, though the RX 6800 XT still took top honors for AMD, and the RTX 3080 Ti edged out the RTX 3090. Everything from the RTX 2060 and above clears 60 fps, which means cards like the GTX 1080 and 1070 should manage okay as well at these settings.

I should also note that the GTX 1080 Ti showed some odd behavior initially, and I've now retested. I'm not certain what went wrong before, but retesting showed a 20-35% improvement compared to the original numbers. It seems my well-used sample was acting up, though even with the improved results the RTX 2060 still delivered a superior experience at 1080p, which is not normally the case.

This is also the last setting where most of the 4GB cards can deliver a decent experience, and the GTX 1050 Ti basically can't go much further. It performs about the same at 1080p ultra, but the card was never really intended for high-resolution gaming.

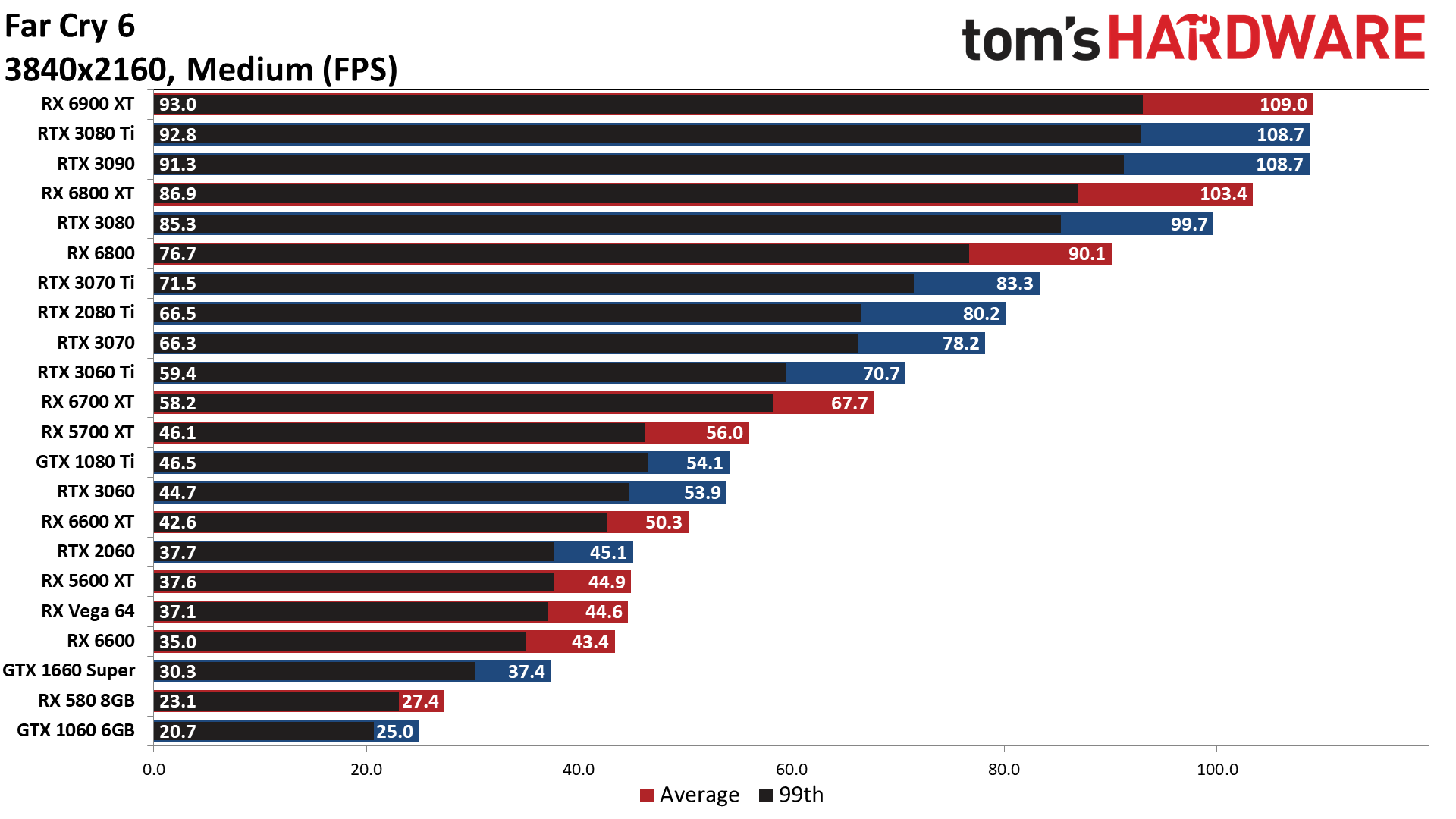

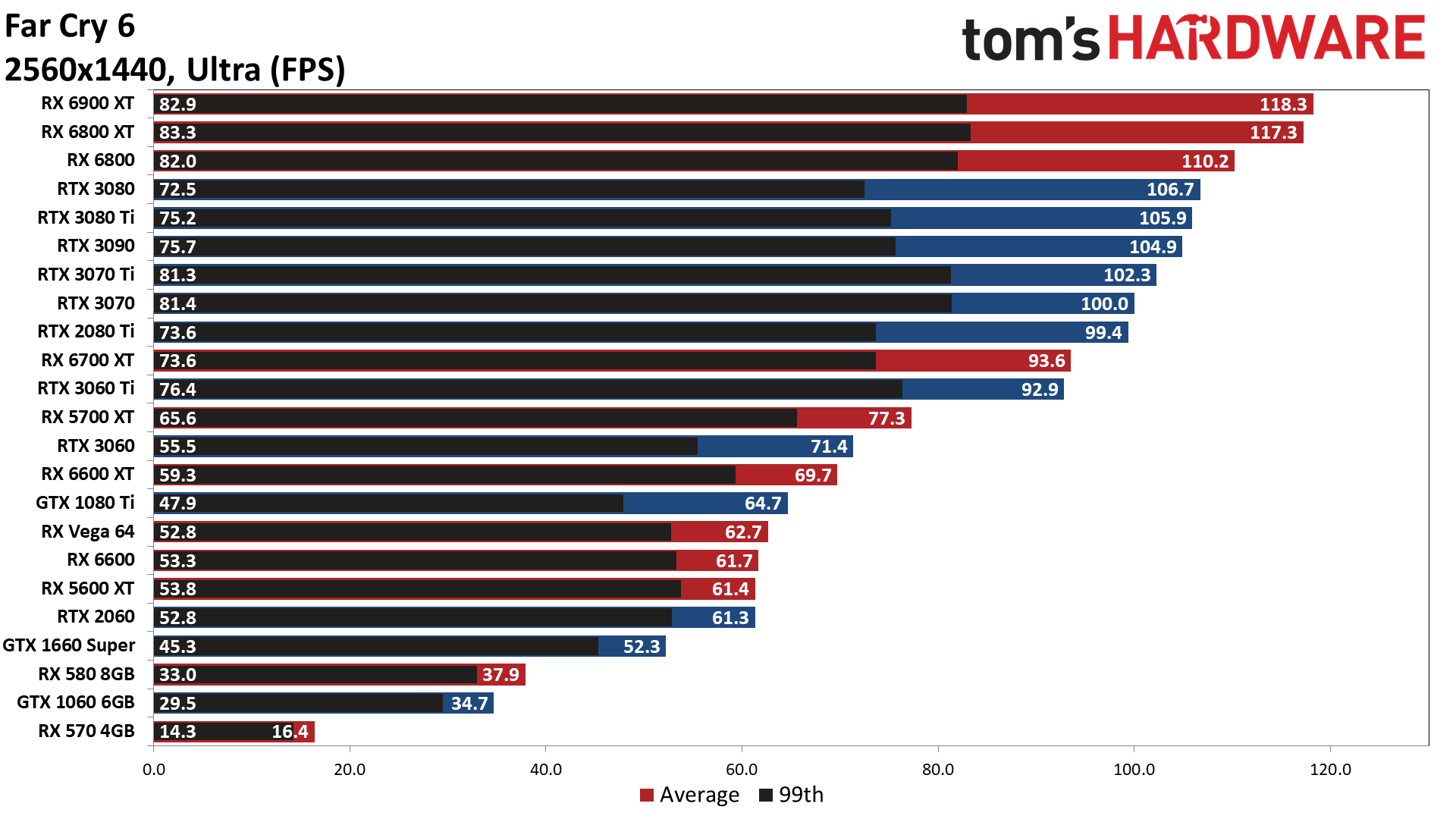

Finally, we get the usual range of performance and rankings that we'd expect, with a few minor quibbles. The RTX 3080 Ti still edges out the 3090 — and we're using the Founders Edition models, so it's not like we have an exceptionally overclocked 3080 Ti, or even a great cooler for that matter — but nearly everything else lands in the usual spot. It's finally worth discussing the AMD vs. Nvidia standings, as CPU bottlenecks aren't a factor here.

The top three cards all hit 109 fps, with AMD still holding a slight edge with the RX 6900 XT over Nvidia's RTX 3080 Ti and RTX 3090. This is also a resolution and setting combination that still runs okay on cards with 6GB VRAM, provided the GPU has enough gas in the tank. We're not using the HD textures for our medium quality testing either, which helps a bit.

4K does tend to penalize AMD's RDNA2 architecture a bit more relative to lower resolutions, as raw memory bandwidth starts to win out over the Infinity Cache. The RX 6700 XT falls below the RTX 3060 Ti, where it basically matched the RTX 3070 Ti at 1440p medium. The RX 6600 XT likewise falls behind the RTX 3060, though it still beats the previous generation RTX 2060 at least.

At the bottom of the chart, the RX 5600 XT and above easily break 30 fps, but both the RX 580 8GB and GTX 1060 6GB come up short. None of the cards with only 4GB VRAM could manage even 20 fps, so we dropped them from the charts.

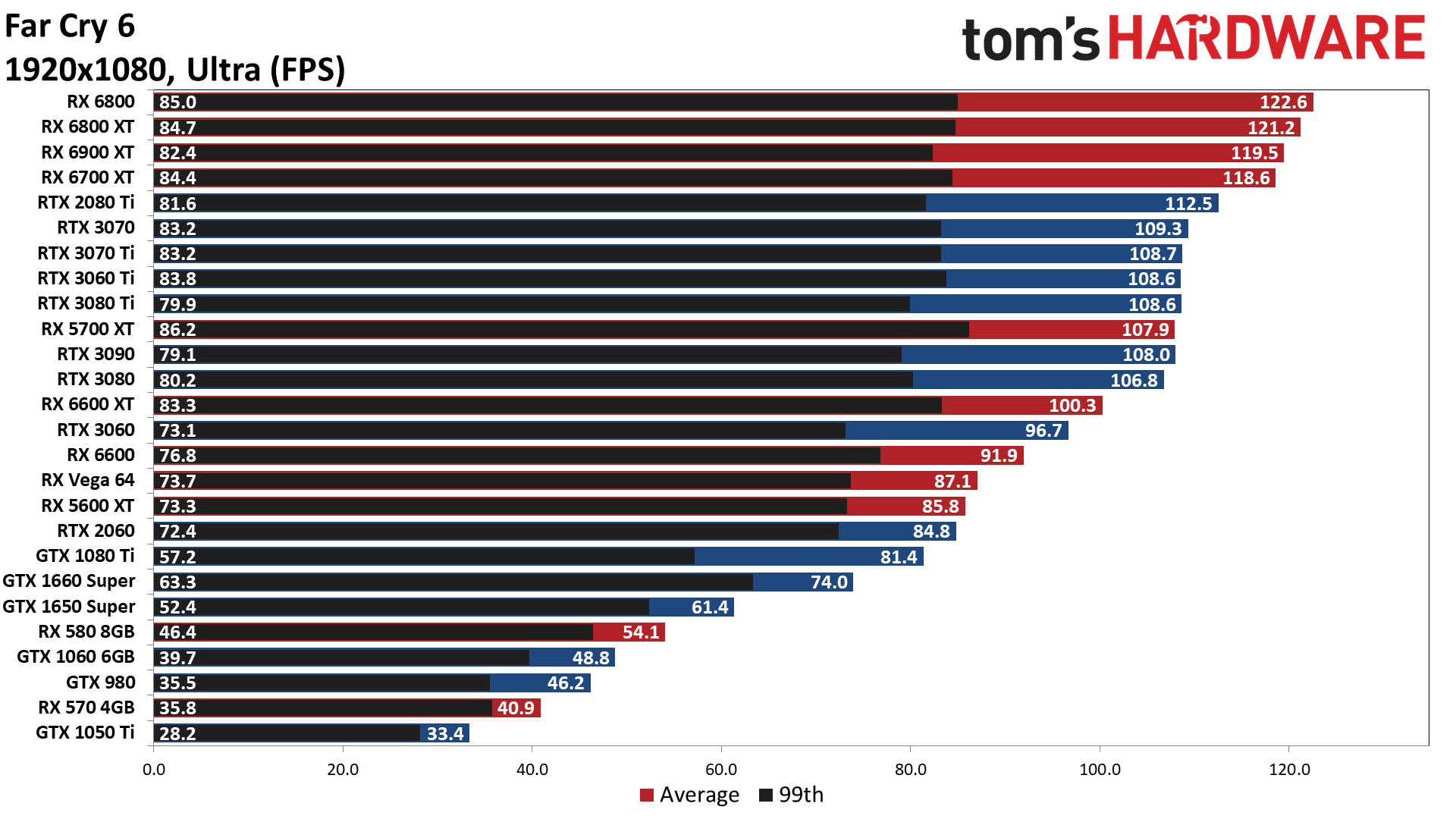

Kicking the settings up to ultra quality at 1080p and enabling the HD texture pack generally results in slightly worse performance than 1440p medium, though interestingly, there are some exceptions to that rule. The bottom half of the chart, roughly, has GPUs that performed better at 1080p ultra, while the cards in the top half appear to hit a different bottleneck.

Ultra quality increases the load on the CPU, so the maximum fps on our i9-9900K drops from around 144 at medium to 123 fps at ultra. Of course, other factors may also come into play, but for the most part, you can choose between 1440p medium and 1080p ultra — or perhaps 1440p ultra with FSR enabled — and get a similar experience.

Depending on your GPU, 1440p ultra roughly equals 4K medium performance, but now we get even more oddities, particularly with the minimum fps on the Nvidia GPUs. Again, these are pretty clearly driver issues that are likely to be ironed out, as the RTX 3080 had the highest average fps but a lower minimum fps than the next six Nvidia GPUs.

AMD's GPUs continue to hold the pole positions, and while the gap isn't quite as egregious as in Assassin's Creed Valhalla — another Ubisoft and AMD-promoted game that favors AMD's latest architecture — it's still larger than in most other recent games.

I also left in one 4GB card here, the RX 570, as an example of how badly performance can tank when you exceed the VRAM. That card did okay at 1080p ultra, but performance drops by more than half moving up to 1440p. Use a card with 6GB, and performance only drops about 25–30%.


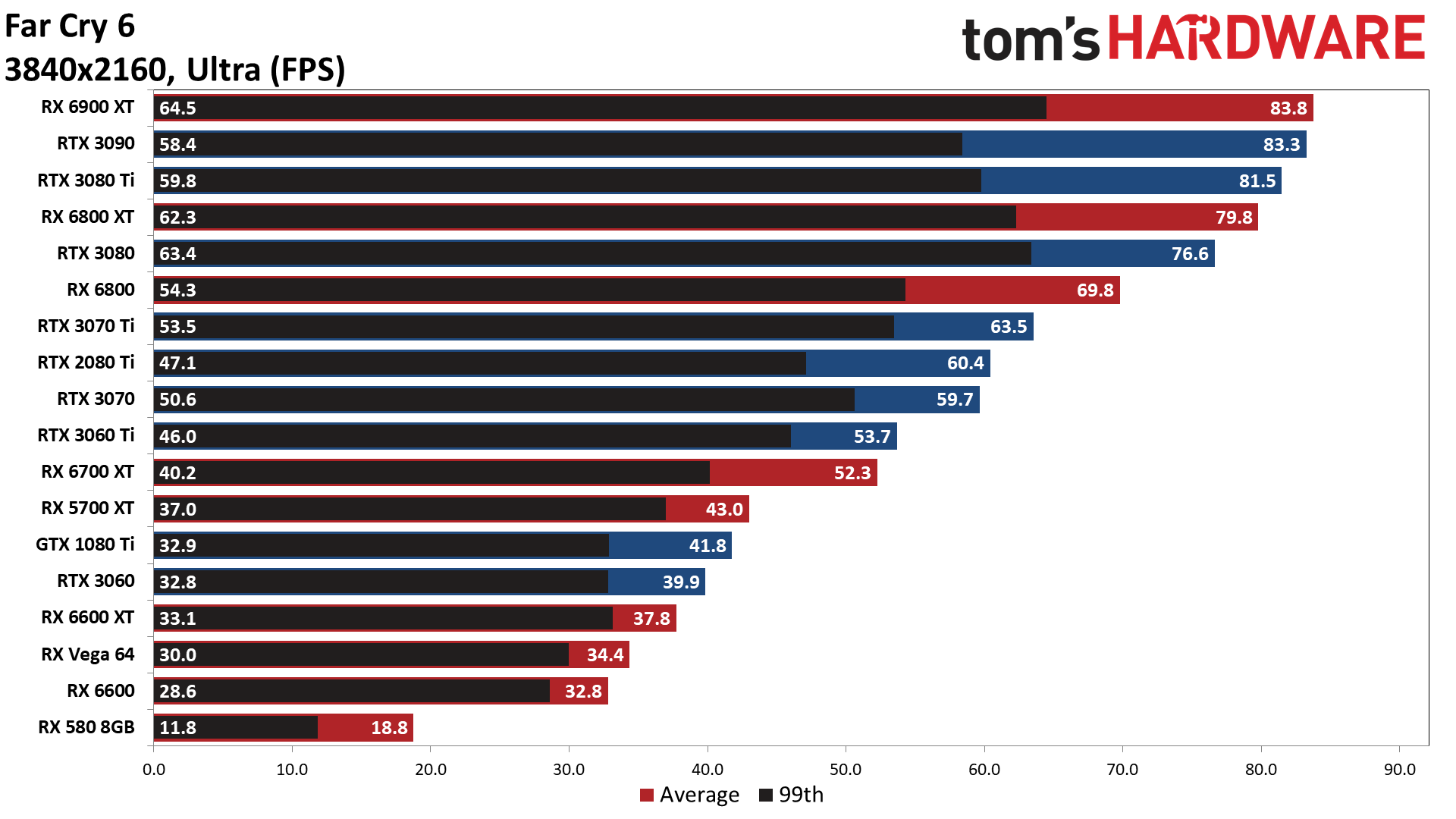

As you'd expect, 4K ultra punishes a lot of the GPUs. In fact, anything with less than 8GB VRAM basically fails to break 30 fps, often badly. So you can generally expect performance to be 40–50% slower than 1440p ultra in GPU limited games, and that's when you don't run out of VRAM.

This is also the first time the RTX 3090 has delivered better performance than the RTX 3080 Ti. While 12GB VRAM should be enough, Far Cry 6 at ultra settings starts to benefit from having 16GB or more.

Sixty fps at 4K is still possible on quite a few cards, though nothing that nominally costs under $500. And we haven't even maxed out the settings yet with ray tracing, so let's just go ahead and do that next.

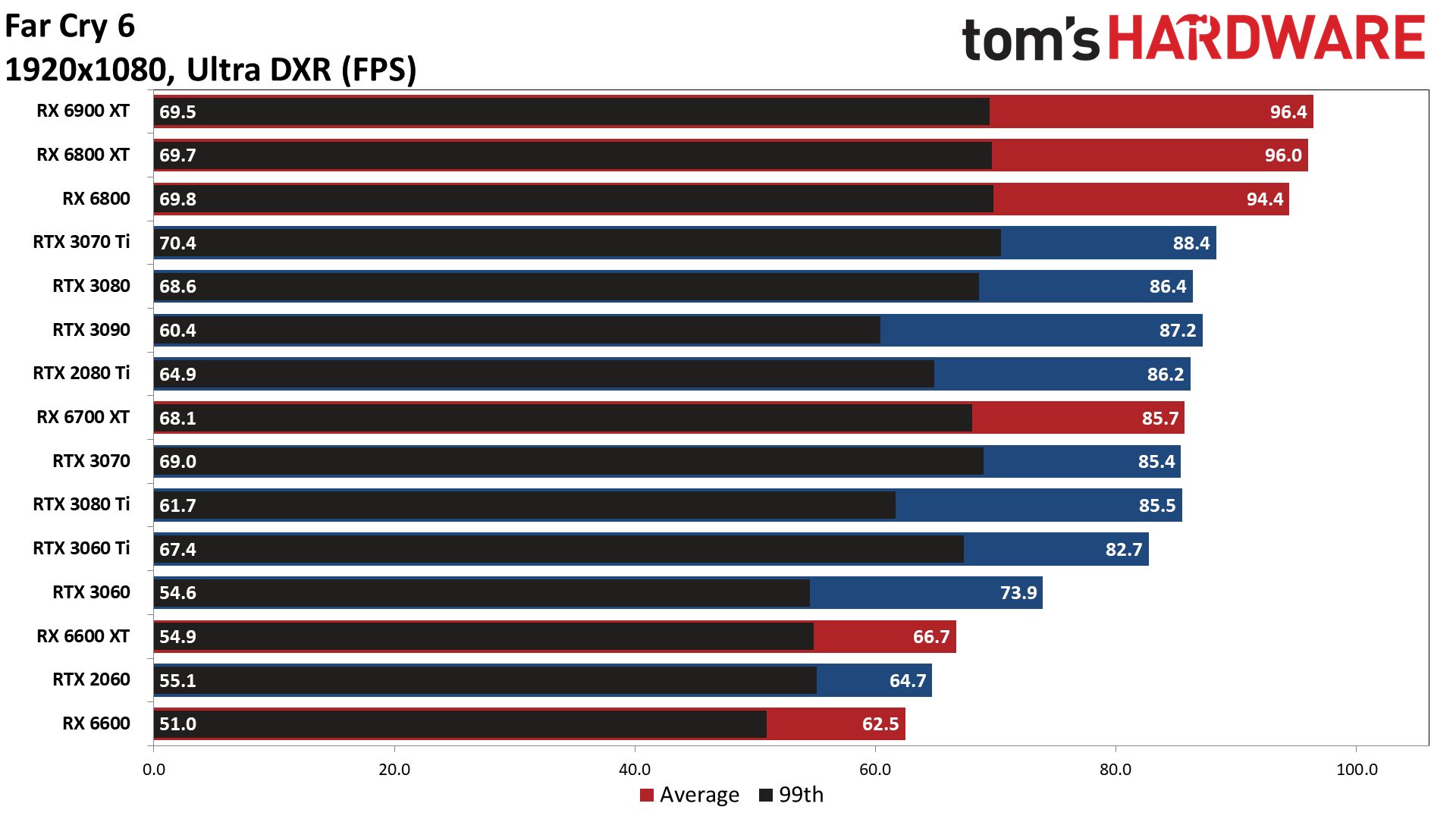

Considering Far Cry 6 has clearly been hitting some CPU bottlenecks at 1080p so far, the penalty for enabling ray traced shadows and reflections perhaps isn't that bad. Of course, part of that is thanks to the hybrid reflections that the game uses, combining SSR (screen space reflections) with RT to reduce the performance hit.

Considering the developers decided to not support ray tracing on the latest consoles, we were more than a bit surprised to see just how well Far Cry 6 runs with all the settings cranked to 11. Even the old RTX 2060 still managed to break 60 fps, which makes us wonder why Ubisoft didn't include RT (and FSR) on the PS5 and Xbox Series X — both of those should be more potent than the RX 6600 XT, which also delivered over 60 fps.

Compared to running without the DXR effects, performance drops about 20% on most of the GPUs. There are also still CPU limits in play, as evidenced by the wall at around 110 fps on the Nvidia GPUs and 120 fps on the top AMD GPUs. That's more than a bit surprising since ray tracing usually pushes the bottleneck so far toward the GPU side of the equation that the CPU no longer matters. But in open world games like the Far Cry series, even with all the graphics effects turned on, 1080p can still end up being CPU limited.

We're planning to do some additional testing and look at CPU scaling, but we couldn't get that done in time for the initial launch. Plus, we'll likely need to redo a lot of these tests once updated drivers come out and the game gets a few patches, but so far, it looks like Far Cry 6 continues the series' legacy of being CPU limited.

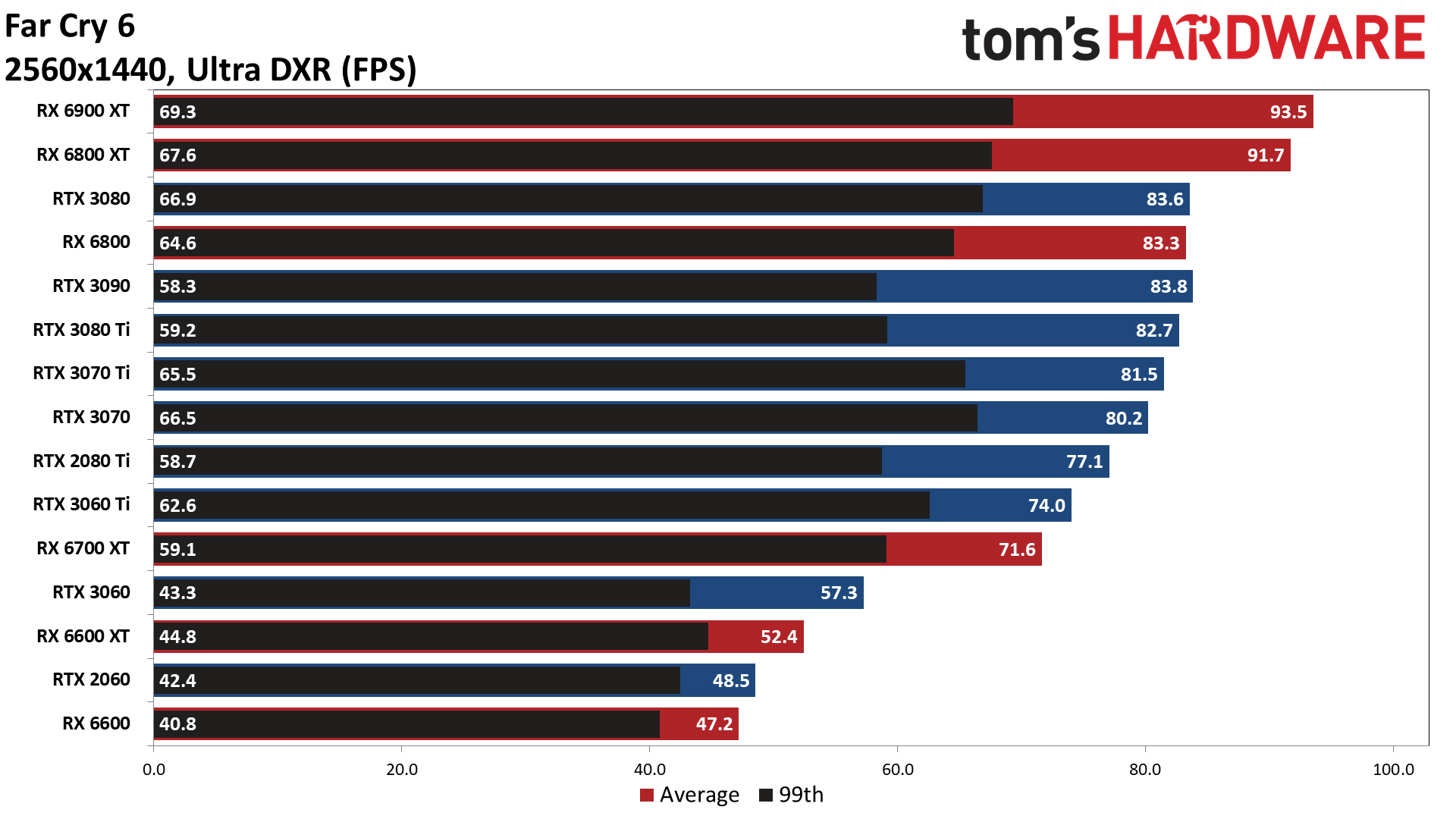

All GPUs technically remain playable even at 1440p with DXR enabled, though the RTX 2060, RTX 3060, and RX 6600 XT all fall below 60 fps now. The RTX 2080 Ti also shows yet again that the Ampere architecture really did improve performance in a lot of ways, with the RTX 3070 coming in 3 fps above the former heavyweight champion.

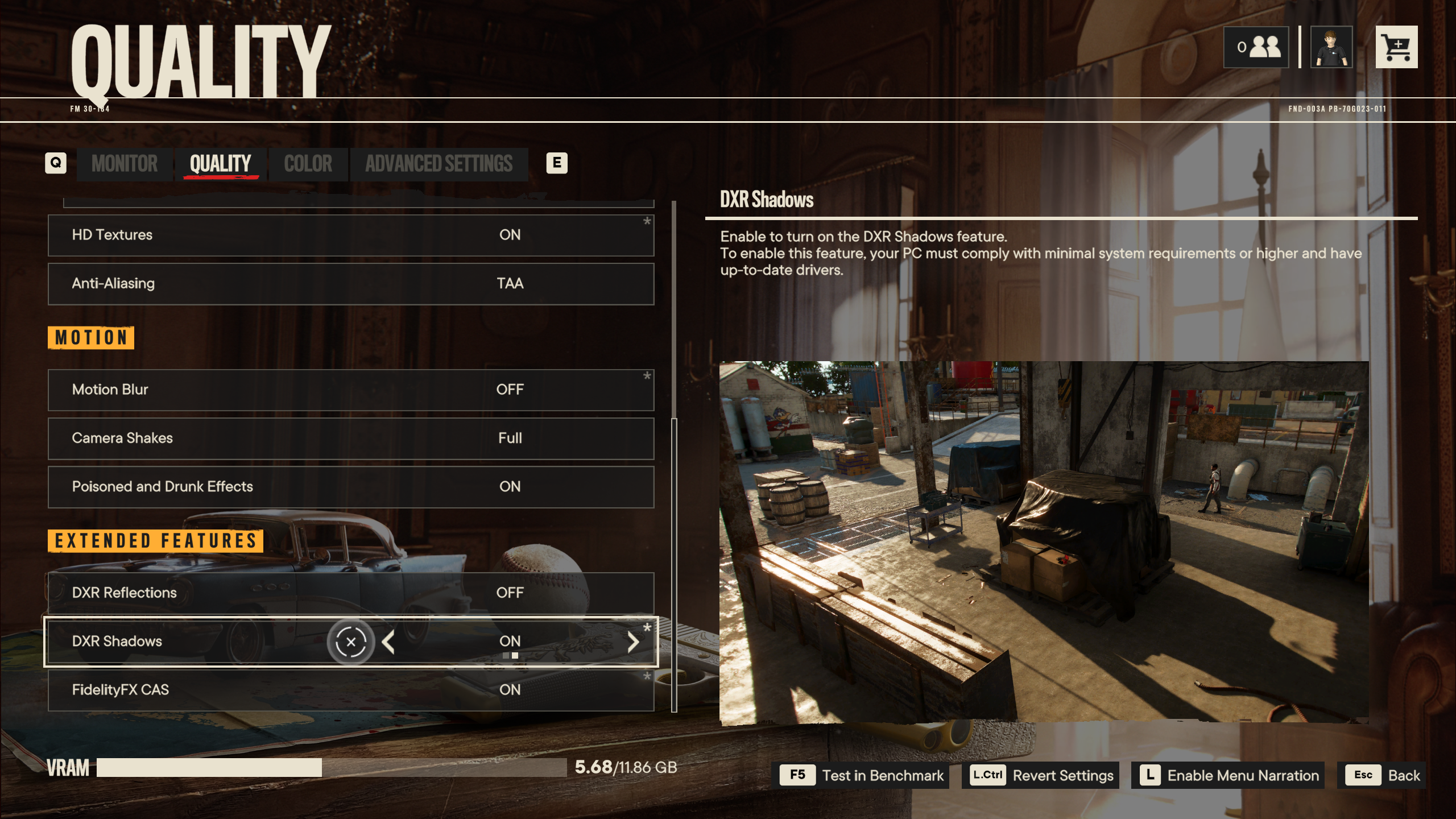

We should note that the RT effects really aren't all that visually impressive. That partly explains the relatively small performance hit. Certain things look better with DXR enabled, but you can easily turn off all the ray tracing stuff and not really feel like you're missing out. SSR still provides simulated reflections on puddles and such, so all you get are a few other objects reflected that SSR doesn't handle.

The shadows, on the other hand, remain mostly a waste of computational effort in my book. Sure, they're likely more accurate, but the default shadow mapping techniques still look fine to my eyes. The result of doing less complex ray tracing work is that Nvidia's RT hardware in the RTX 30-series cannot shine quite so brightly, so AMD's top RX 6000 cards still hold the top spots.

And not to beat a dead horse, but drivers are yet again a concern for Nvidia. The RTX 3080 takes the top spot, at 1440p with DXR, which basically makes no sense. But the Nvidia GPUs are limited to under 90 fps by the CPU or some other factor, while AMD's GPUs can hit closer to 100 fps.

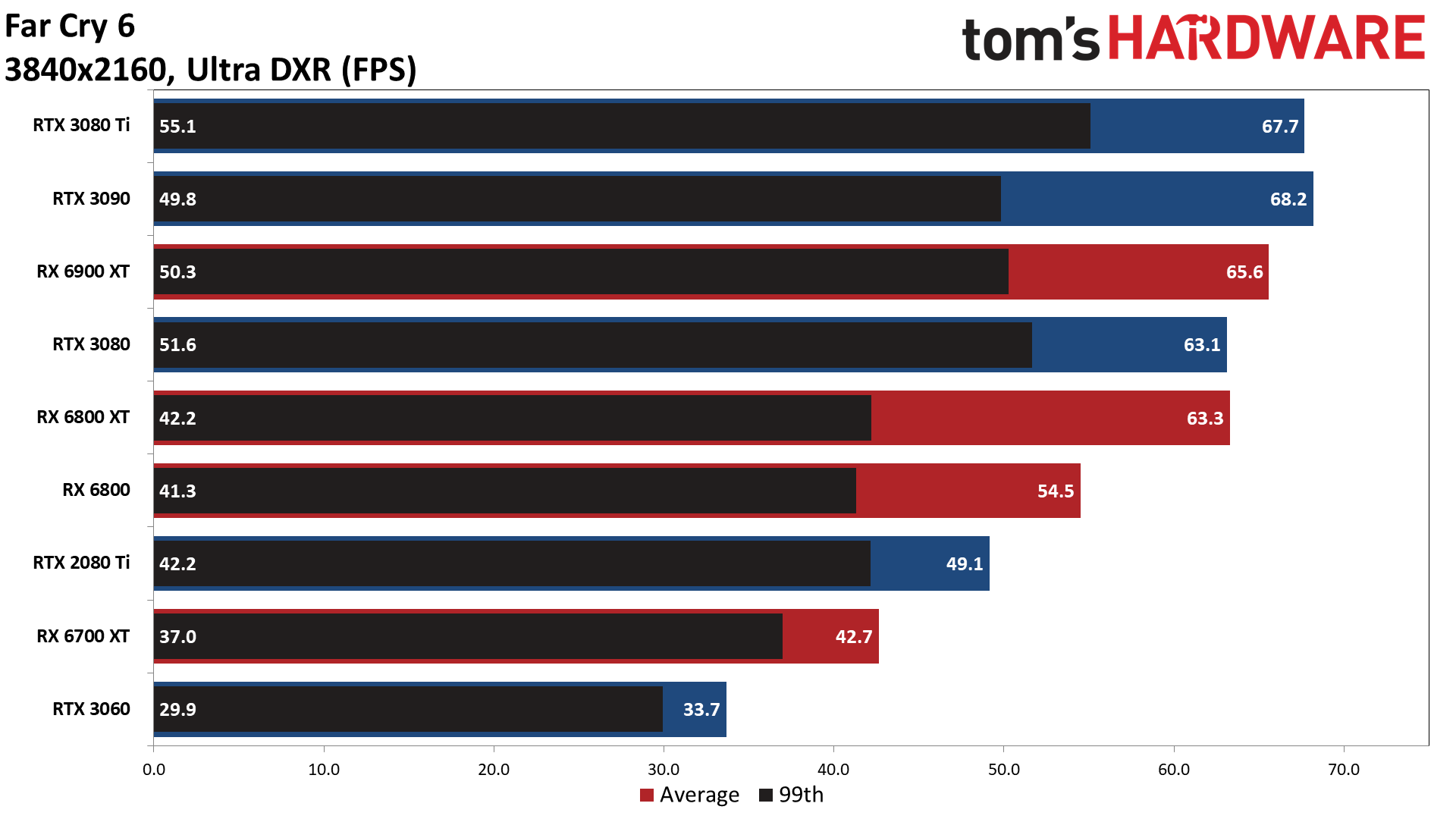

Last but not least, we have truly maxed out settings at 4K ultra with DXR. For the first time, Nvidia's RTX 3080 Ti and RTX 3090 claim the top spots, and the RTX 3080 edges the RX 6800 XT thanks to its higher minimum fps — though minimum fps still varies quite a bit more than we're used to and will likely improve with a patch or two.

Far Cry 6 says you need at least 11GB VRAM for 4K ultra with DXR, and it's mostly correct, though 10GB apparently will suffice. The 8GB cards, meanwhile, all dropped below 20 fps, or even 10 fps in several attempts to get the benchmark to run. You pretty much don't want to even try 4K with ray tracing in this game unless you have a card with at least 10GB VRAM. Or maybe we'll see drivers and patches improve this as well.

The game does have a memory usage bar, which suggests 4K ultra DXR only needs 6.82GB VRAM. That clearly isn't accurate, however, based on what happened to the 8GB GPUs we tried.

Far Cry 6 Settings Analysis

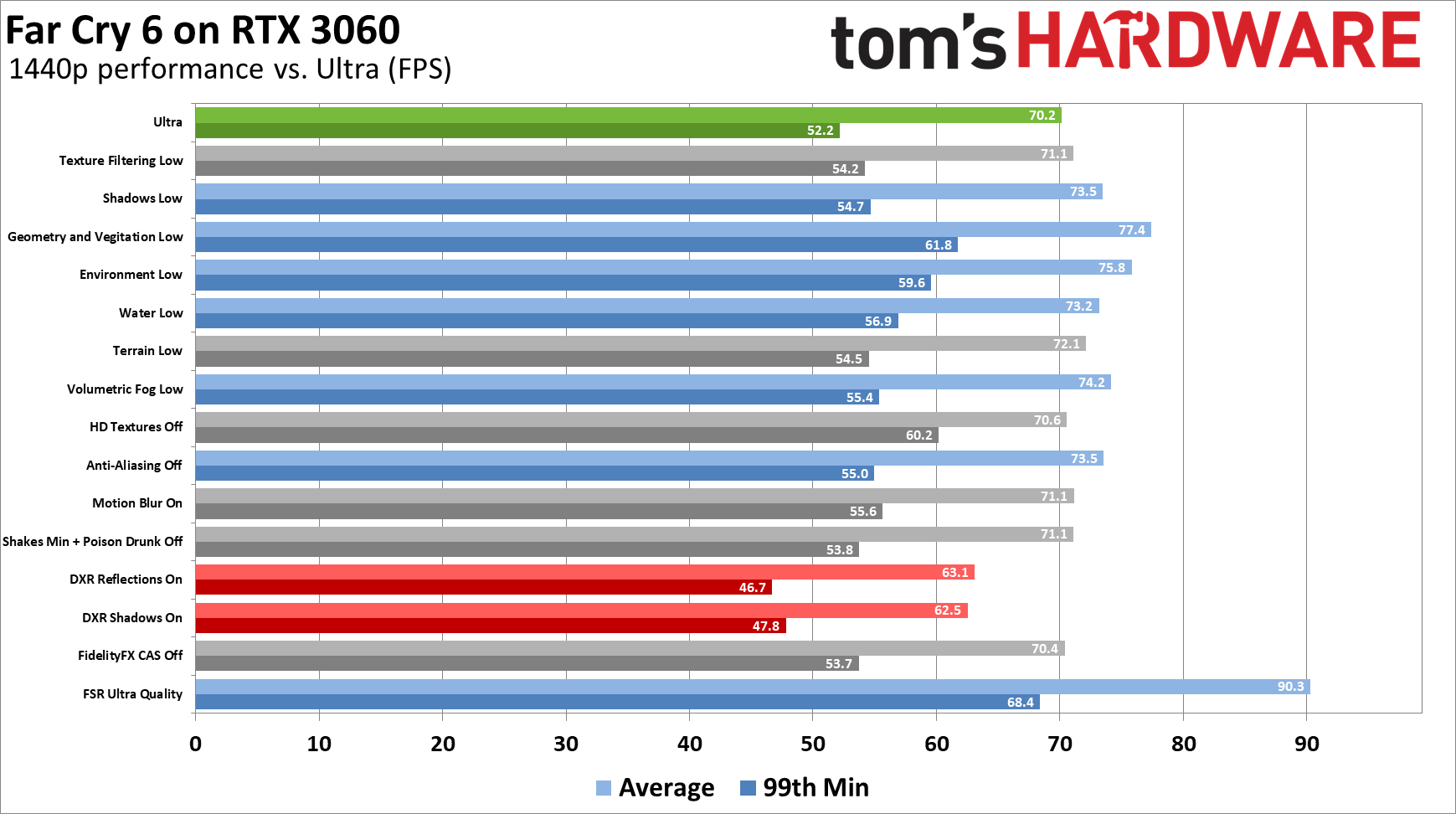

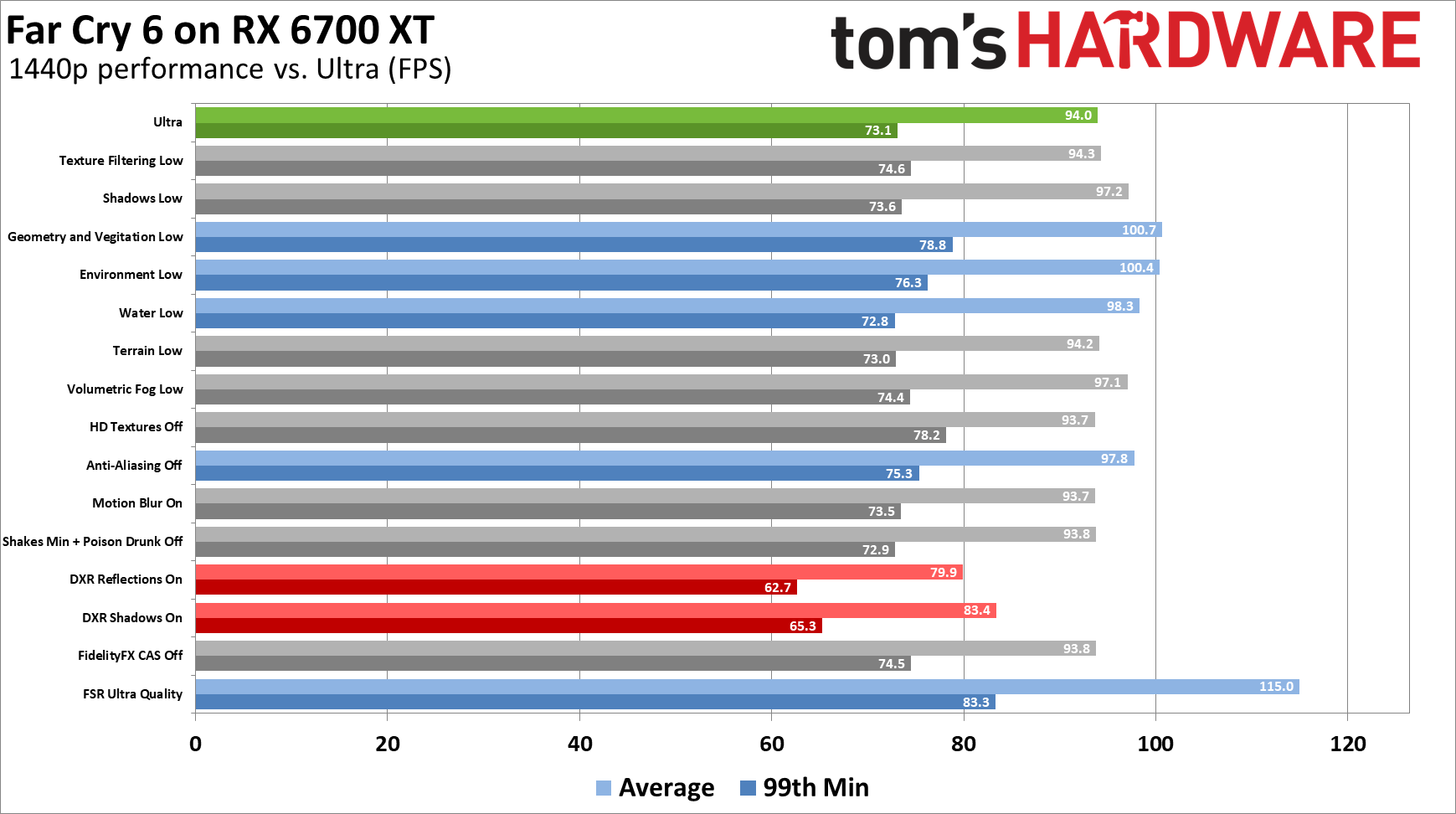

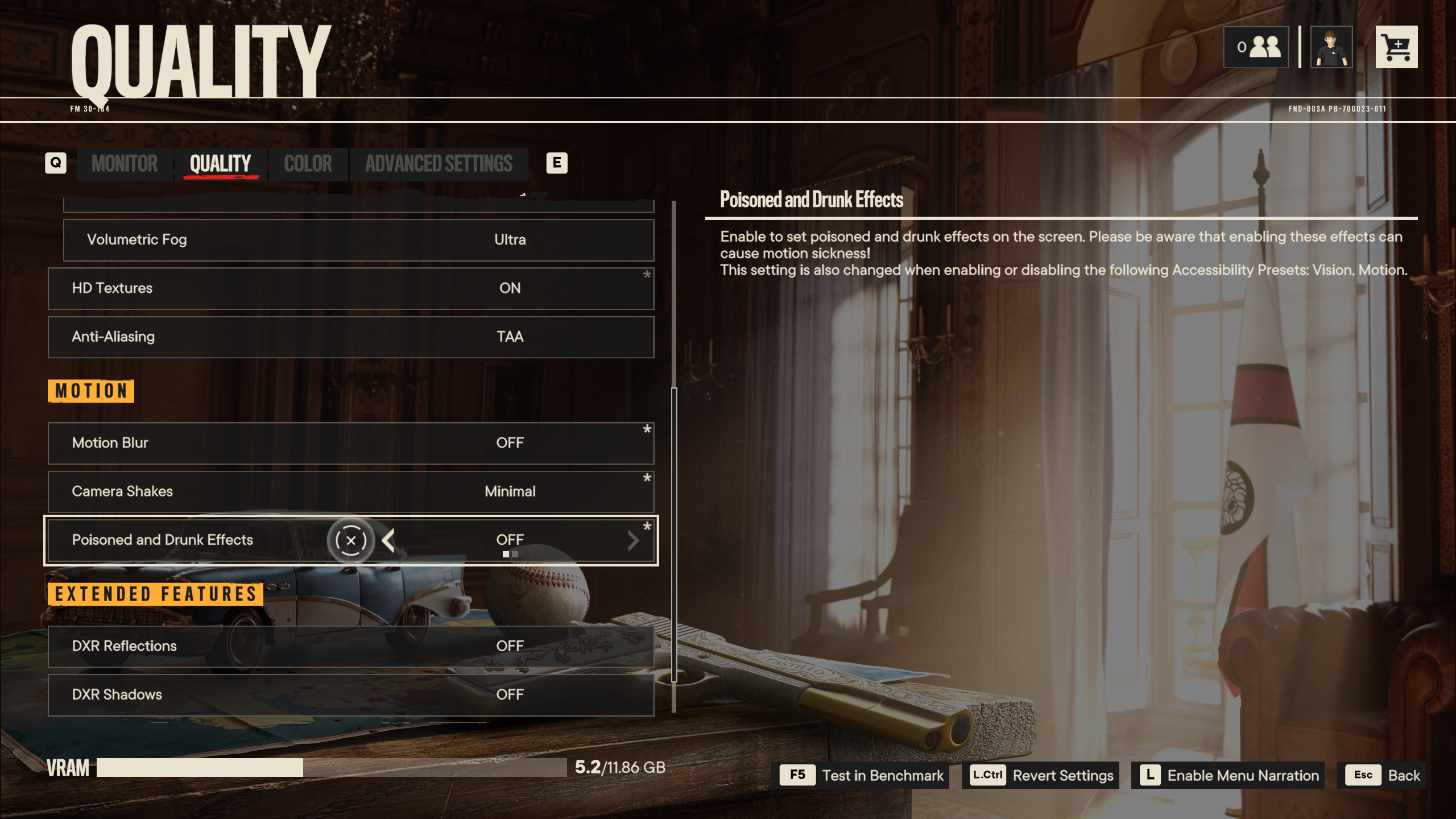

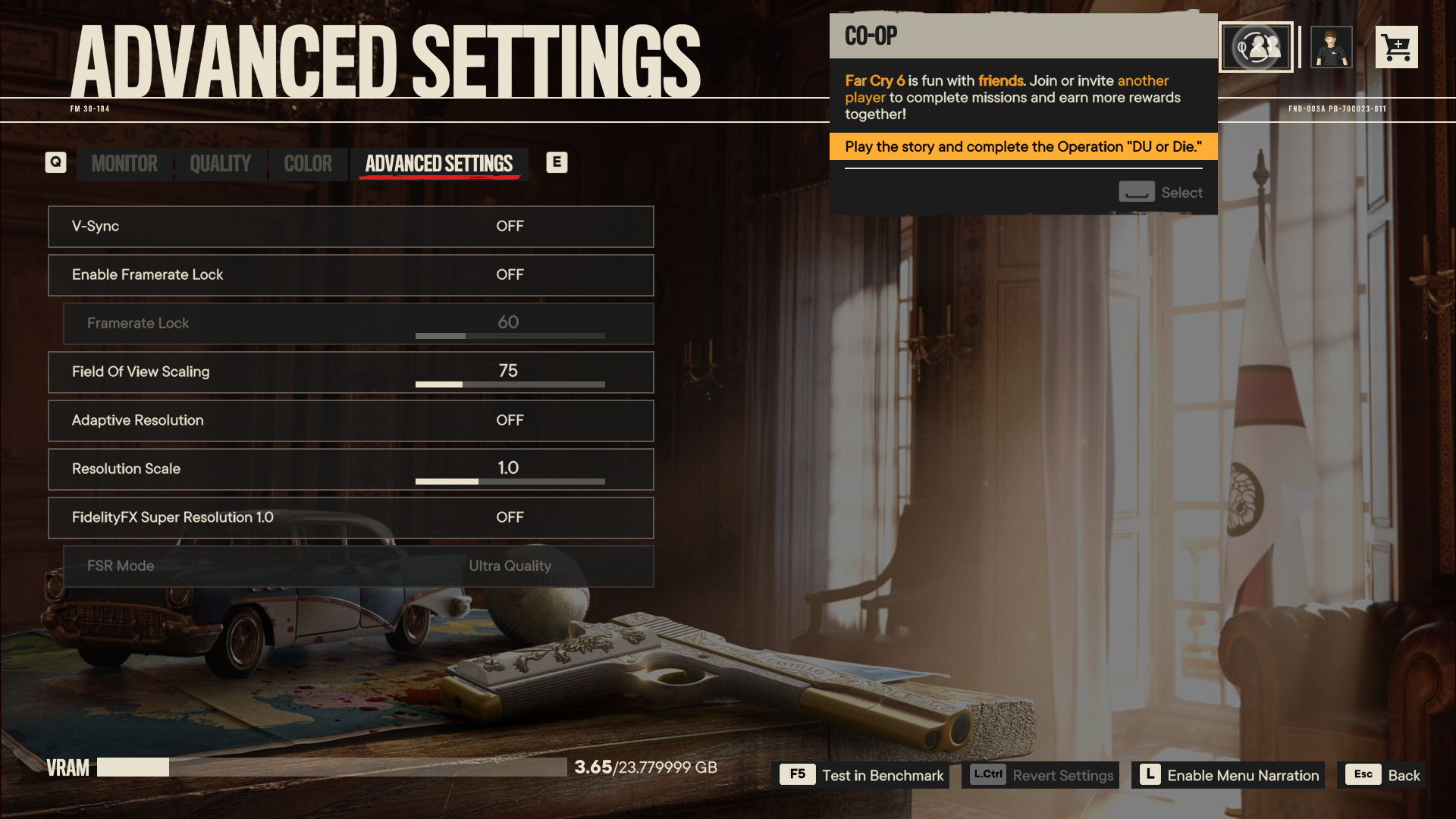

Far Cry 6 has about a dozen graphics settings you can tweak, depending on how you want to count and what GPU you're using. Here we've taken the RTX 3060 and RX 6700 XT (both with 12GB VRAM, so that we won't hit memory bottlenecks) and tested performance using the ultra quality preset with HD textures enabled. Then we've gone through and turned each individual setting to its minimum value and run the benchmark again to see how performance changed. In some cases (motion blur, DXR reflections, and DXR shadows) we've turned on a setting that was previously off, which typically drops performance.

The charts are color coded: the ultra preset is in green, settings that caused more than a 4% increase in framerates are in blue, and settings that caused more than a 4% drop in performance are in red. All the gray bars are settings that caused less than a 4% change in performance, which basically means you shouldn't worry about tweaking those unless you specifically don't like the way they make the game look.
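For anyone repeating this kind of settings sweep on their own hardware, the 4% rule above is easy to express in code. The sketch below is illustrative only; the function name and the sample fps values are hypothetical, not our measured results.

```python
def classify_setting(baseline_fps, test_fps, threshold=0.04):
    """Bucket a single setting change relative to the ultra-preset baseline.

    Returns "blue" for a gain larger than the threshold, "red" for a drop
    larger than the threshold, and "gray" for anything in between.
    """
    change = (test_fps - baseline_fps) / baseline_fps
    if change > threshold:
        return "blue"
    if change < -threshold:
        return "red"
    return "gray"


# Hypothetical numbers: ultra preset baseline of 100 fps
print(classify_setting(100.0, 107.0))  # setting turned down, clear gain
print(classify_setting(100.0, 89.0))   # DXR option turned on, clear drop
print(classify_setting(100.0, 101.5))  # within run-to-run noise
```

The gray band exists because changes of a few percent are hard to separate from normal run-to-run variation.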

You can see the screenshots and descriptions of the various settings in the image gallery. Based on the lack of performance impact, we'd leave Texture Filtering, Terrain, and FidelityFX CAS enabled. We'd also leave off motion blur, though some people like the effect and it doesn't impact performance. Camera shakes and poison/drunk effects also didn't impact performance in the benchmark sequence, and we would leave them on unless you experience discomfort from the effects.

Of the remaining settings, some had more of a performance impact on AMD than on Nvidia, and vice versa. DXR (ray tracing) obviously causes a significant drop in performance and you'll want a potent GPU if you plan on enabling those options. Interestingly, both the hybrid shadows and hybrid reflections caused about a 10–11% drop in performance on the RTX 3060, while on the RX 6700 XT reflections caused a slightly larger 15% drop. Of the two DXR options, reflections tends to be more visually impactful — while playing, it's difficult to tell if DXR shadows are on or off.

Outside of ray tracing, the geometry and vegetation setting is your best bet for improving performance. Actually, that's not quite correct as your best option to boost performance with a minimal loss to visual fidelity is to enable FidelityFX Super Resolution and set it to ultra quality. We'll discuss that a bit more in a moment. Turning geometry and vegetation down to low does result in a clear drop in image quality, however, so you'll probably want to keep that at medium or high if possible.

Environment also has a larger effect on performance, and stepping that down to high or medium can buy you a few extra fps. Volumetric fog is another relatively demanding setting, though it impacted the RTX 3060 more than the RX 6700 XT — possibly because the 6700 XT simply has more available compute to begin with. Turning water down a notch or two doesn't radically change the way the water looks, so that's another reasonable option. Shadows also impacted the RTX 3060 more than the 6700 XT, and we'd try to leave that set as high as possible.

Turning off anti-aliasing can boost performance 4–5%, but we really don't like all the jaggies that creates and would leave TAA enabled. If you opt to enable FSR, the ability to change the AA setting gets disabled.

Last, let's quickly talk about the HD texture pack. Even at 1080p, enabling HD textures pushes the VRAM requirement above 4GB, in theory. If you have enough VRAM, downloading and enabling the HD textures requires about 40GB of extra storage space, and visually it doesn't make a massive difference. Even with it on, there are still some noticeably low-res textures for some surfaces (including on Guapo the crocodile). As far as performance goes, HD textures didn't drop average framerates, but on both GPUs they did cause more stuttering and hitching, which is why the 99th percentile fps improved quite a bit with the texture pack turned off.
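To see why stutter can hurt the 99th percentile figure without moving the average much, here is a minimal sketch that derives both numbers from a list of frame times. It assumes one common definition (the fps equivalent of the nearest-rank 99th percentile frame time); the exact method behind the charts may differ, and the frame-time data is made up for illustration.

```python
def fps_summary(frame_times_ms):
    """Return (average fps, 99th percentile fps) for a list of frame times.

    Average fps is total frames divided by total time. The percentile value
    uses the nearest-rank 99th percentile frame time, so 99% of frames were
    at least this fast. This definition is an assumption.
    """
    ordered = sorted(frame_times_ms)
    n = len(ordered)
    avg_fps = 1000.0 * n / sum(ordered)
    rank = (99 * n + 99) // 100  # integer ceiling of 0.99 * n
    p99_frame_time = ordered[rank - 1]
    return avg_fps, 1000.0 / p99_frame_time


# Hypothetical capture: mostly 10 ms frames with two 50 ms hitches
smooth = [10.0] * 100
hitchy = [10.0] * 98 + [50.0] * 2

print(fps_summary(smooth))
print(fps_summary(hitchy))
```

In the made-up capture with hitches, the average only falls by a few fps while the percentile figure collapses, which is the same pattern as a card that stutters while streaming in textures.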

Far Cry 6 FidelityFX Super Resolution Performance Scaling

Let's talk a bit more about FSR scaling. We tested both the 6700 XT and 3060 above, but we also wanted to check how it affects performance with DXR enabled, which can be particularly useful at 4K on the 8GB cards.

Enabling FSR ultra quality boosted performance on the RX 6600 XT by 16% at 1080p (78 fps), and the ultra quality mode is nearly indistinguishable from native rendering. At 1440p FSR increased performance by nearly 20% (63 fps), while at 4K performance shot up 472% (42 fps)! That's mostly because the 8GB card couldn't handle native 4K DXR.

We also tested using the other FSR modes at 4K, though it should be noted the loss in visual fidelity does become more noticeable as you move up the scaling factor. Quality mode increased performance by 576% (50 fps), Balanced mode improved that to 54 fps (627%), and Performance mode broke the 60 fps mark and hit 62 fps, over 8x faster than native 4K rendering. Again, that's all because of the lack of VRAM, but it does show that clever algorithms can fix what was initially unplayable.
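As a quick sanity check on those percentages, a gain of N% means the result is (1 + N/100) times the baseline, so the native 4K DXR result implied by each FSR figure can be backed out. The helper below is a hypothetical illustration using only the numbers quoted above.

```python
def implied_native_fps(fsr_fps, gain_percent):
    """Back out the baseline fps from an upscaled result and its % gain."""
    return fsr_fps / (1 + gain_percent / 100)


# (mode, fps with FSR, quoted gain in percent) for the RX 6600 XT at 4K DXR
results = [
    ("ultra quality", 42, 472),
    ("quality", 50, 576),
    ("balanced", 54, 627),
]

for mode, fps, gain in results:
    native = implied_native_fps(fps, gain)
    print(f"{mode}: implies about {native:.1f} fps native")

# Performance mode hit 62 fps; compare against the implied native result
native = implied_native_fps(42, 472)
print(f"performance: about {62 / native:.1f}x native")
```

All three figures point to a native result of roughly 7 fps, which is consistent with performance mode's 62 fps being more than 8x faster.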

Generally speaking, if your GPU has enough VRAM, FSR ultra quality should boost performance by about 20%, provided CPU bottlenecks don't get in the way. That happens on the RTX 3090, as you'd expect. FSR ultra quality boosted performance by 23%, taking the card right near the ~85 fps limit of the CPU at maximum settings. Quality, balanced, and performance modes on the 3090 only improved framerates by another 1–2 fps.

As we noted above, enabling FSR ultra quality is practically free performance with very little loss in visual fidelity, particularly if you're running at 1440p or 4K, though the lower render resolution at 1080p can result in a few visible differences. You can also skip FSR and just use 'normal' resolution scaling, though it's not clear whether Far Cry 6 uses some proprietary scaling algorithm or temporal scaling. Note that the console versions of the game don't use FSR, so perhaps the adaptive resolution scaling ends up being just as good, or at least more flexible.
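To put numbers on the render resolutions involved, here is a small sketch using the per-axis scale factors AMD publishes for FSR 1.0 (1.3x for ultra quality, 1.5x for quality, 1.7x for balanced, 2.0x for performance). The rounding is an assumption, so an actual implementation may land a pixel off in some modes.

```python
# Per-axis scale factors for the FSR 1.0 quality modes
FSR_SCALE = {
    "ultra quality": 1.3,
    "quality": 1.5,
    "balanced": 1.7,
    "performance": 2.0,
}


def render_resolution(out_width, out_height, mode):
    """Approximate internal render resolution for a given output and mode.

    Rounding to the nearest pixel is an assumption for illustration.
    """
    scale = FSR_SCALE[mode]
    return round(out_width / scale), round(out_height / scale)


for mode in FSR_SCALE:
    w, h = render_resolution(3840, 2160, mode)
    print(f"4K {mode}: {w} x {h}")

# At a 1080p output, ultra quality renders well below 1080p
print(render_resolution(1920, 1080, "ultra quality"))
```

At 4K, quality mode renders at 1440p and performance mode at 1080p, while ultra quality at a 1080p output has far fewer source pixels to work with, which is why differences are easier to spot there.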

Far Cry 6 VRAM Requirements

You've seen all the benchmarks above, but with the official launch now completed, there are people talking about the high VRAM requirements and saying you can't use the HD texture pack on cards with only 4GB VRAM. That's definitely false, as you can see the GTX 980, GTX 1650 Super, and RX 570 all have 4GB VRAM and still successfully ran the benchmark at 1080p ultra with the HD texture pack enabled.

But that's only the benchmark. What about actually playing the game? As you'd expect, depending on the area of the map you're in, performance can be lower (or higher!) than in the benchmark. I set about 'testing' Far Cry 6 by playing through the first few hours of the game, all at 1080p ultra running on the GTX 1650 Super. It generally ran fine, though there are some caveats to that.

For example, the longer you play, the more likely the game is to experience slowdowns, and at times potentially massive drops in framerates. Some of the cutscenes exhibited this problem as well, where the scene would play at maybe 10-15 fps, but after the scene ended performance would go back up to normal.

While the GTX 1650 Super achieved 61 fps in the benchmark sequence, testing during regular gameplay showed average fps results ranging from about 40 fps to as high as 65 fps. And then I cleared out one of the camps (after playing for a few hours) and suddenly performance plummeted to around 20 fps. I was worried it was just a very demanding area of the game, but after exiting and restarting, performance returned to normal again, even in that same area.

The settings menu shows an estimate of how much VRAM the game needs, but it's not fully accurate, and longer playing sessions are likely to need more VRAM. It's possible there are also bugs that may be affecting performance over time, but the fact that I was able to play through the entire prologue (up until you reach the main island) without much difficulty means 4GB is enough.

Still, the HD texture pack won't do a lot at 1080p anyway, since lower resolution mipmaps will usually get selected. If you're running a card with only 4GB (or less) VRAM, don't feel bad about turning off HD textures and tweaking the settings.

Initial Thoughts on Far Cry 6

We still have more testing to do, but we also want to give Nvidia and Ubisoft a chance to release updated drivers and patch the game, respectively. We tested with early access code, and we know there's a day zero patch incoming, though it isn't clear how much that will affect performance.

For all the enhancements, there are also some image artifacts in the game that aren't particularly pleasing. For example, the built-in benchmark does a flyby of a bunch of wet streets, and one of the things that really stands out is the halos around a lot of objects, particularly the cars. Those are present whether or not you use ray tracing, and hopefully a patch will get rid of those as well, as I found them more distracting than the jaggies you get without anti-aliasing.

Performance was generally decent, with the right settings, and most people with a gaming PC built within the past five years should be fine. Of course, you'll need to drop down from ultra quality if you're sporting a slower GPU, and you might not get a steady 60 fps if you're lacking in the CPU department, but most GPUs should manage okay. I'll be looking at testing more cards in the future, and I'm also planning to replace Far Cry 5 in my GPU benchmarks at some point, but I'll wait for drivers and patches to stabilize before retesting everything yet again.

As for the game itself, I'll leave critiquing that to others. It definitely feels like yet another Far Cry, though, with an absolutely over-the-top villain that needs to be put down by revolutionaries. I haven't gotten too far into the story (busy benchmarking, as you might imagine), but basically, it swaps out the religious crazies from Far Cry 5 and replaces them with equally stereotypical bad guy dictator armies.

If you're looking for an open-world game with guns, vehicles, and a deluge of things to do, Far Cry 6 should scratch that itch. Just don't go in looking for deep character development, as the exposition was already full of cliches.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


boju People will play a buggy game (what's new?) on release. I honour the brave first wave of players because they'll get the ball rolling and get most bugs addressed by the time i pick it up.

RodroX Nice review, I bet drivers and overall game patches will help fix some strange behaviors we see in some of the graphs.

I would love to see some follow up data with a R7 5800X at stock settings or with PBO enabled since it's an 8c/16t like the i9 9900K.

Anyways, thanks for the data!

court655 Incredibly lame that they make an engine that goes out of its way to nerf Nvidia performance just because it's an "AMD titled game" so the AMD cards can be on top despite the clear performance difference in RT & Rasterizing performance between cards.

RodroX

court655 said: Incredibly lame that they make an engine that goes out of its way to nerf Nvidia performance just because it's an "AMD titled game" so the AMD cards can be on top despite the clear performance difference in RT & Rasterizing performance between cards.

Im not so sure the engine "nerf" nvidia GPUs. You know, nerf is such a big word. Perhaps they did kept RT to a minimum to make AMD gpus look better. Maybe even kept it that way so its "easy" on PS5 and Xbox X/S hardware.

hotaru.hino The discrepancy between the RTX 2060 and GTX 1080 Ti is interesting to me. Which got me thinking: I wonder if Far Cry 6 uses hardware features introduced in Turing. Another curious case I found where Turing utterly wrecks Pascal was AnandTech's benchmarks of Wolfenstein II. The RTX 2080 and the GTX 1080 Ti are supposedly on par with each other, but for some reason, the RTX 2080 commands a 50% lead over the 1080 Ti in the game. I'm also pretty certain Wolfenstein II was an AMD sponsored title as well.

But seeing a RTX 2060 dominate a 1080 Ti raises even more questions. Especially considering RDNA1 also dominates against the 1080 Ti, even though some most of the features in Turing weren't introduced until RDNA2.

JarredWaltonGPU

hotaru.hino said: The discrepancy between the RTX 2060 and GTX 1080 Ti is interesting to me. Which got me thinking: I wonder if Far Cry 6 uses hardware features introduced in Turing. Another curious case I found where Turing utterly wrecks Pascal was AnandTech's benchmarks of Wolfenstein II. The RTX 2080 and the GTX 1080 Ti are supposedly on par with each other, but for some reason, the RTX 2080 commands a 50% lead over the 1080 Ti in the game. I'm also pretty certain Wolfenstein II was an AMD sponsored title as well.

But seeing a RTX 2060 dominate a 1080 Ti raises even more questions. Especially considering RDNA1 also dominates against the 1080 Ti, even though some most of the features in Turing weren't introduced until RDNA2.

I'm pretty sure there's either a drivers issue, or my 1080 Ti is having problems. I tested it multiple times and couldn't get a "clean" run -- there were periodic large dips every 5 seconds or so, which I didn't see on the 1060 or any other GPU. I may go back and rerun those tests after doing a driver wipe today, just to verify they're still happening. I'll toy with the fan settings and other stuff as well, and make sure some throttle wasn't kicking in.

court655 said: Incredibly lame that they make an engine that goes out of its way to nerf Nvidia performance just because it's an "AMD titled game" so the AMD cards can be on top despite the clear performance difference in RT & Rasterizing performance between cards.

I'm not sure how much of it is intentionally hurting Nvidia performance versus just using DX12 code that's more optimized for AMD hardware. We've seen that a lot in the past, where generic DX12 code seems to favor AMD GPUs and Nvidia needs a lot more fine tuning.

RodroX said: Im not so sure the engine "nerf" nvidia GPUs. You know, nerf is such a big word. Perhaps they did kept RT to a minimum to make AMD gpus look better. Maybe even kept it that way so its "easy" on PS5 and Xbox X/S hardware.

Remember that PS5 and Xbox X/S don't have FSR or RT support, though maybe that will come with a patch.

RodroX

JarredWaltonGPU said: .... Remember that PS5 and Xbox X/S don't have FSR or RT support, though maybe that will come with a patch.

Wait, Im not a console guy so, Are you talking about RT support for this game, or in general?, Cause far as I know both consoles support RT and there are many titles with it already, right?

FSR is a different thing.

VforV

court655 said: Incredibly lame that they make an engine that goes out of its way to nerf Nvidia performance just because it's an "AMD titled game" so the AMD cards can be on top despite the clear performance difference in RT & Rasterizing performance between cards.

The engine is the one from Watch Dogs Legion (it's actually much older, but has been improved over time), but that game, Watch Dogs Legion, is an nvidia sponsored title, it has RTX and DLSS and no FSR, so no extra performance for Radeon and guess what? Nvidia has all the advantages there and wins vs AMD.

The only thing different this time is that AMD sponsored FC6 and it does not have DLSS, that can help only nvidia.

Also AMD released new Radeon drivers boosting performance especially for this game's launch.

There is no consipiracy here and no intentional nerfing, it's just not intentionally pro-nvidia either this time around.

JarredWaltonGPU said: I'm not sure how much of it is intentionally hurting Nvidia performance versus just using DX12 code that's more optimized for AMD hardware. We've seen that a lot in the past, where generic DX12 code seems to favor AMD GPUs and Nvidia needs a lot more fine tuning.

Remember that PS5 and Xbox X/S don't have FSR or RT support, though maybe that will come with a patch.

I'm pretty confident a patch will come later to enable RT in FC6 on the consoles. It's very doable at 1080p upscaled as Insomniac did with their games. Ubisoft would be really stupid not to do that after they add the necessary improvement patches.

JarredWaltonGPU

RodroX said: Wait, Im not a console guy so, Are you talking about RT support for this game, or in general?, Cause far as I know both consoles support RT and there are many titles with it already, right?

FSR is a different thing.

RT and FSR in Far Cry 6. They’re absent on the latest consoles for some reason. I wrote this last week, though the headline workshop maybe ended up too inflammatory. LOL

https://www.google.com/amp/s/www.tomshardware.com/amp/news/far-cry-6-no-ray-tracing-on-consoles

RodroX

JarredWaltonGPU said: RT and FSR in Far Cry 6. They’re absent on the latest consoles for some reason. I wrote this last week, though the headline workshop maybe ended up too inflammatory. LOL

https://www.google.com/amp/s/www.tomshardware.com/amp/news/far-cry-6-no-ray-tracing-on-consoles

lol Ok, I missed that article!

As you wrote, perhaps they enable it with future updates/patches.