Time To Upgrade: 10 SSDs Between 240 And 256 GB, Rounded Up

Should You Care About Over-Provisioning On A SandForce-Based SSD?

Now that SandForce allows its partners to disable over-provisioning (both Adata and Transcend choose to do so), you're probably wondering why an SSD vendor would or wouldn't take that option. After all, the Iometer results you've already seen aren't affected much. It'd be easy to conclude, then, that setting aside capacity on a SandForce-based drive has no benefit. Might as well make that space available for user data, right?

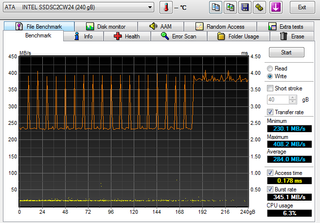

Not necessarily. SSDs based on SandForce's hardware perform their garbage collection duties in the foreground, as you're writing to them. On an over-provisioned drive, that reserved space is used for moving data around on the drive.
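To put a rough number on how much space is at stake, here is a minimal sketch that compares a drive exposing 240 GB with one exposing 256 GB. The 256 GB raw-flash figure is an assumption made for the example (it ignores the GiB/GB difference and anything the controller keeps for its own use), and `spare_fraction` is a hypothetical helper, not something from a vendor tool.

```python
def spare_fraction(raw_gb: float, user_gb: float) -> float:
    """Return the reserved capacity as a fraction of user-visible capacity."""
    if user_gb <= 0 or raw_gb < user_gb:
        raise ValueError("expected 0 < user_gb <= raw_gb")
    return (raw_gb - user_gb) / user_gb


RAW_GB = 256.0  # assumed raw flash capacity, for illustration only

for user_gb in (240.0, 256.0):
    reserved = RAW_GB - user_gb
    pct = 100 * spare_fraction(RAW_GB, user_gb)
    print(f"{user_gb:.0f} GB exposed: {reserved:.0f} GB reserved ({pct:.1f}% spare)")
```

Under those assumptions, the 240 GB configuration keeps 16 GB in reserve, while the 256 GB configuration hands all of it to the user.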

In the screenshot above, we've filled Intel's SSD 520 with incompressible data. Then, we write 128 KB blocks back in a sequential access pattern. Performance starts slow, but picks up as the drive leverages its over-provisioned capacity to achieve higher transfer rates.
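For readers who want to try something similar at home, the sketch below approximates that procedure: one pass of random (and therefore incompressible) data, followed by a second sequential pass in 128 KB blocks over the same file. It is not the tool we used for the charts. It writes to an ordinary file through the file system and the operating system's cache, so the numbers it reports won't match a raw-device benchmark, and `sequential_write_mb_s` is a hypothetical helper name.

```python
import os
import tempfile
import time

BLOCK = 128 * 1024  # 128 KB transfer size, as in the test described above


def sequential_write_mb_s(path, total_bytes, block_size=BLOCK):
    """Sequentially write random data to path; return average MB/s."""
    # Overwrite in place if the file exists, so the second pass reuses the space.
    mode = "r+b" if os.path.exists(path) else "wb"
    elapsed = 0.0
    remaining = total_bytes
    with open(path, mode) as f:
        while remaining > 0:
            # Random bytes are incompressible; generate them outside the timed region.
            chunk = os.urandom(min(block_size, remaining))
            start = time.perf_counter()
            f.write(chunk)
            elapsed += time.perf_counter() - start
            remaining -= len(chunk)
        start = time.perf_counter()
        f.flush()
        os.fsync(f.fileno())  # ask the OS to push the data to the device
        elapsed += time.perf_counter() - start
    return (total_bytes / 1e6) / elapsed


if __name__ == "__main__":
    size = 8 * 1024 * 1024  # tiny demo size; a real test would cover the whole drive
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "fill.bin")
        print(f"first pass:  {sequential_write_mb_s(target, size):.1f} MB/s")
        print(f"second pass: {sequential_write_mb_s(target, size):.1f} MB/s")
```

The demo size is deliberately small. To see the behavior in the charts, the first pass would have to fill nearly the entire drive, which is slow and adds wear, so treat this as an illustration of the access pattern rather than a benchmark.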

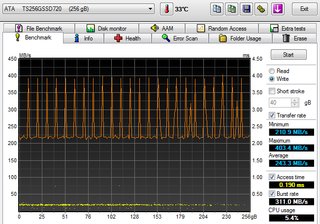

On the drive without over-provisioning, performance never picks up because there is no scratch space to use for shuffling data around. Once you've written to every memory cell, writing back a second time only happens as fast as the controller is able to free up capacity.
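The effect of scratch space can be illustrated with a toy model. The sketch below simulates a generic flash layer that picks the block with the fewest live pages whenever it runs low on erased blocks, then counts how many flash page writes each host write costs. This is not SandForce's algorithm (which also compresses data), it uses random overwrites rather than our sequential test, and every name and size in it is hypothetical. The model also needs at least three spare blocks to make progress, so "no over-provisioning" is approximated by that minimum.

```python
import random


def flash_writes_per_host_write(num_blocks, pages_per_block, spare_blocks,
                                host_writes, seed=0):
    """Toy model: fill the drive once, overwrite randomly, return flash/host write ratio."""
    if not 3 <= spare_blocks < num_blocks:
        raise ValueError("toy model needs 3 <= spare_blocks < num_blocks")
    rng = random.Random(seed)
    logical_pages = (num_blocks - spare_blocks) * pages_per_block
    contents = [[] for _ in range(num_blocks)]  # logical page held by each programmed slot
    valid = [0] * num_blocks                    # live pages per block
    where = {}                                  # logical page -> (block, slot)
    free = set(range(1, num_blocks))            # erased blocks
    active = 0                                  # block currently being programmed
    flash_writes = 0

    def program(lp):
        nonlocal active, flash_writes
        if len(contents[active]) == pages_per_block:
            active = free.pop()                 # move on to an erased block
        old = where.get(lp)
        if old is not None:
            valid[old[0]] -= 1                  # the previous copy becomes stale
        contents[active].append(lp)
        where[lp] = (active, len(contents[active]) - 1)
        valid[active] += 1
        flash_writes += 1

    def collect():
        # Pick the in-use block with the fewest live pages, move them, erase it.
        victim = min((b for b in range(num_blocks) if b != active and b not in free),
                     key=valid.__getitem__)
        for slot, lp in enumerate(list(contents[victim])):
            if where[lp] == (victim, slot):
                program(lp)
        contents[victim] = []
        free.add(victim)

    def host_write(lp):
        while len(free) < 2:                    # foreground cleanup before the write
            collect()
        program(lp)

    for lp in range(logical_pages):             # first pass: fill every logical page
        host_write(lp)
    flash_writes = 0                            # only count the overwrite phase
    for _ in range(host_writes):
        host_write(rng.randrange(logical_pages))
    return flash_writes / host_writes


if __name__ == "__main__":
    for spare in (3, 8, 32):
        ratio = flash_writes_per_host_write(128, 32, spare, 10000)
        print(f"{spare:2d} spare blocks of 128: {ratio:.2f} flash writes per host write")
```

In this model, the less spare space there is, the more live data the controller has to shuffle before it can accept each new write, which is the same squeeze that keeps the second chart from recovering.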

In this chart, we're demonstrating the performance of Transcend's SSD720, though the same theory holds true for Adata's XPG SX900, too. The SSD320 and SP900 would give us similar behavior, but because they employ slower asynchronous NAND, their charts shift down by around 40 MB/s.

The other thing to keep in mind is that we're hitting these drives with serious workloads. In the real world, you shouldn't see anything of the sort unless your SSD is pretty much full.


mayankleoboy1 get the cheapest, biggest you possibly can. Benchmarks exaggerate the difference between SSD's. -

A Bad Day I agree. Unless if you're buying a glorified USB stick (there is a 128 GB stick) or an SSD with an OC'ed processor, the main factor that consumers should be concerned about is price per gigabyte. -

A Bad Day EDIT: And reliability.

"In order to install a new firmware that significantly boost performance and stability, you must backup all of your data because it will be wiped." -

Tanquen Yea, it’s getting a little out of hand. For 90% of the things 90% of people do on their PC, 200MBs+ read and write speeds just don’t mean much. There are too many other bottle necks going on. I messed around with a RAM drive using most of my 64GB of RAM and the read and write speeds are fun to test (4000MBs or so) but games and VMware sessions I launched from the RAM disc saw no noticeable improvement in launch times or anything else. Same goes for my 830 SSD drive. It’s fast but games and software I use for SCADA development just don’t see any real benefit. They are cool if you want to open 10 sessions of MS Word and 15 Internet Explorer and a bunch of other stuff at the same time but if you just open one instance of Excel and use it and the Photo Shop and use it and then a web browser and use it, you’ll never really see the difference. You have to benchmark it or have two PCs setting right next to each other to see that something started or saved a split second faster.

At least with my 64GB of RAM and actually get 64GB of RAM unlike HDs and SSDs.

-

tomfreak unless the sandforce drive is priced a lot cheaper than the similar capacity non-sandforce SSD. I always choose the non-sandforce SSD. 16GB is a big deal in SSD. -

stoogie until theres affordable 512gb ssd's then i wont get 1, my c drive is 360gb~ and i have 11tb -

sna (quoting Tanquen's comment above)

to see the difference you will need to put the system itself on RAM Disk. not only the installed programs.

-

Where are Samsung SSDs ? Especialy model Samsung 830- 256GB which is on sale in Europe for 160-180€. That is best offer, reliable, faster than basic 840. Get some MB with Z77 chipset and you can RAID them with TRIM support. 2x256 for 330€ is so awesome with 1035Mb/s read in RAID 0. I tested it on Gigabyte Z77-UP4 TH, its a shame that there are only 2x6Gbit ports so 1x840 Pro + 2x830 in RAID 0 is impossible on this MB without SATA2 speed loss on remaining SATA ports. This was my scenario for fast gaming /500GB Steam inventory/ : Raptor, later RAID 0 HDDs, later Velociraptor, next 128GB SSD + 1GB Samsung HDD cached by OCZ Synapse 64GB /totaly unreliable/. So I ended up with 1x boot SSD + 2x SSD in RAID 0. Maybe I am little bit offtopic but any ideas how to "live" with increased Steam inventory and keep it fast enough ? Steamover SW is not reliable for me. Thanks for nice article.

-

ojas "When Thomas, Don, and Paul prioritize the parts for their quarterly System Builder Marathon configurations (the next of which is coming soon, by the way)"

Wait, SBMs are fine, but where oh where went BestConfigs? -

-Fran- I went for a Vertex4 and placed it in my notebook. What a boost. I'd say it also helped improve battery life.

For a Desktop, I don't have a particular use TBH. I have a RAID0 with 2x512GB WD's and it works amazingly good (and fast as well). I'd say, for desktops, SSDs are still not viable because of price, unless you clench your teeth with loading times or such, hahaha.

Cheers!
