
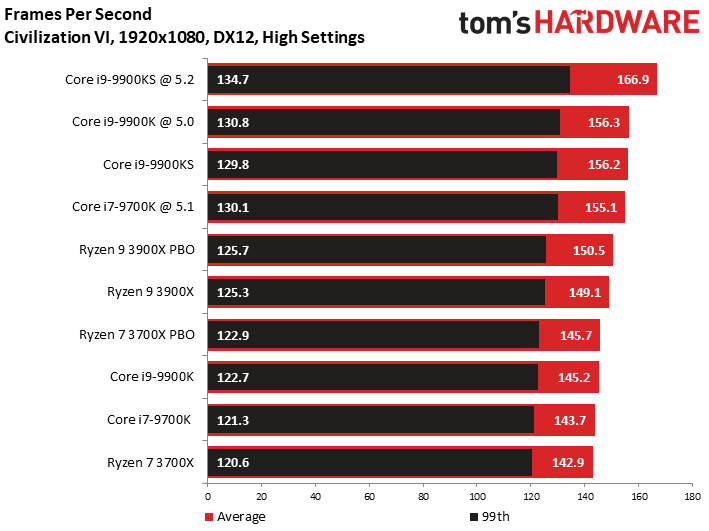

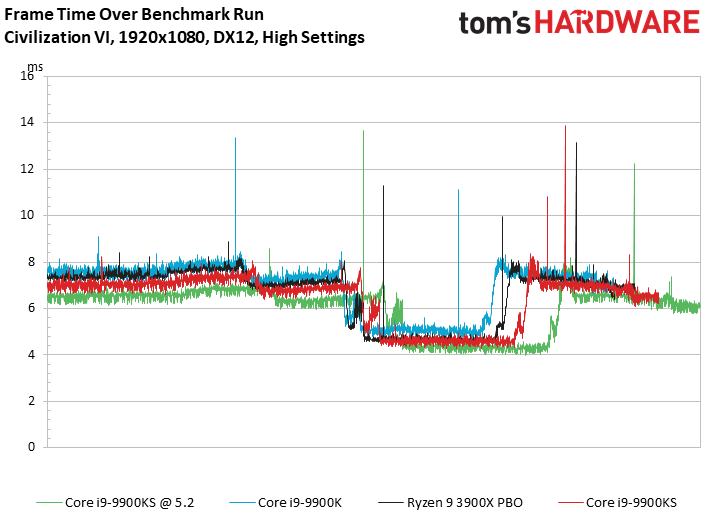

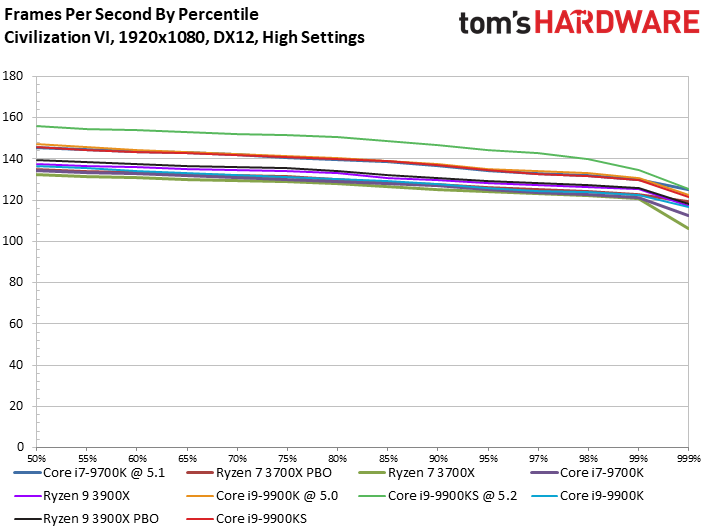

Civilization VI

The Civilization VI benchmark prizes per-core performance, and that plays extremely well to the overclocked Core i9-9900KS, which takes a big lead over the -9900K. The -9900KS is also impressive at stock settings, where it outstrips the Ryzen competition, but it is important to remember that these deltas will shrink at higher resolutions.

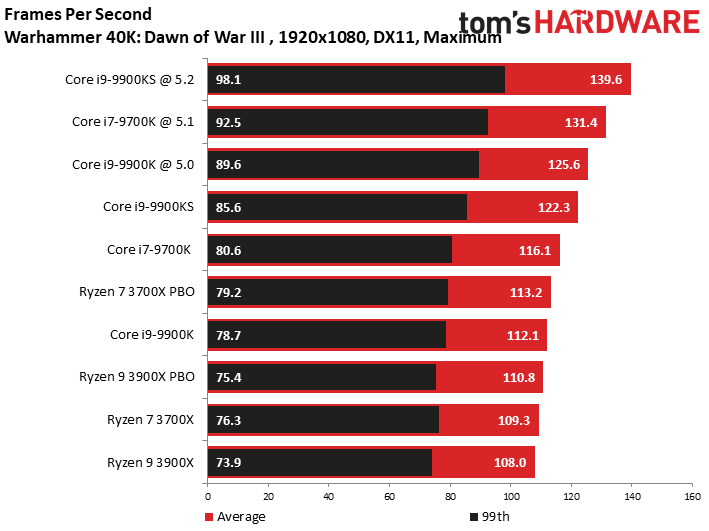

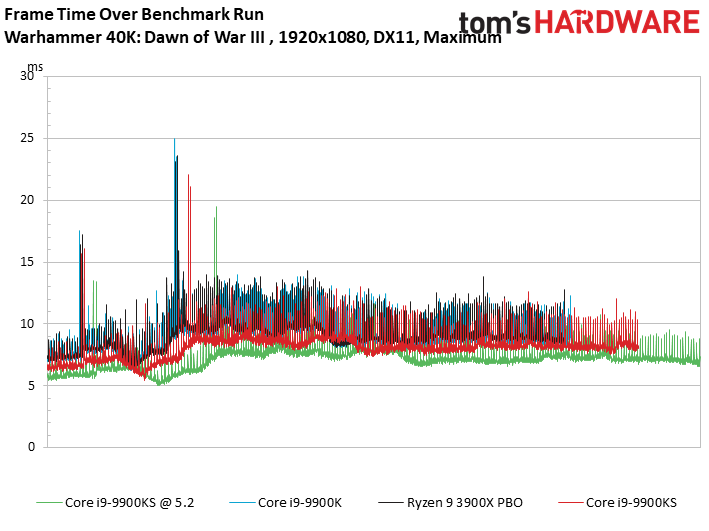

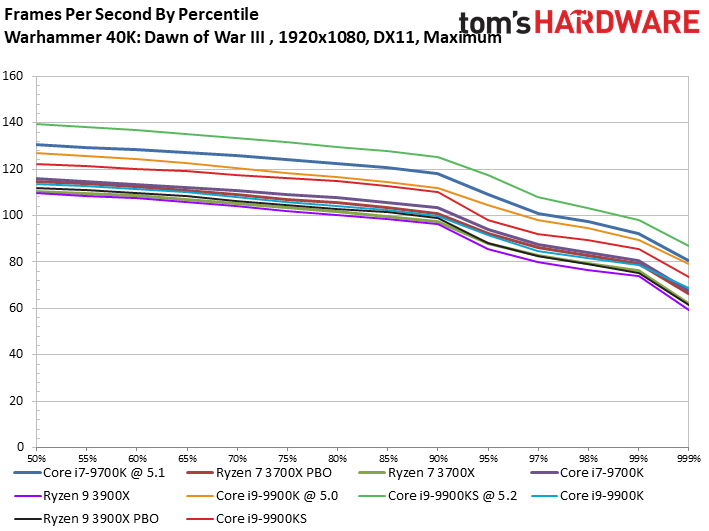

Dawn of War III

The Warhammer 40,000 benchmark responds well to threading, but it's clear that Intel's clock speed advantage has an impact. The Core i9-9900KS again notches a big win after overclocking, but the Core i7-9700K offers a pretty compelling value alternative for mainstream gamers.

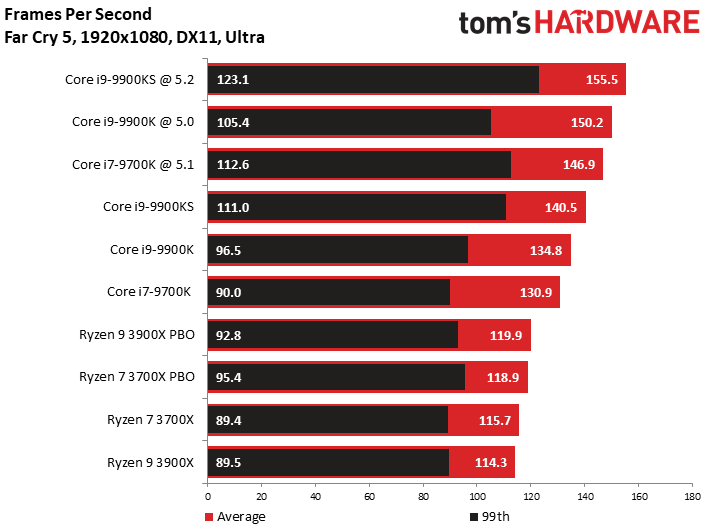

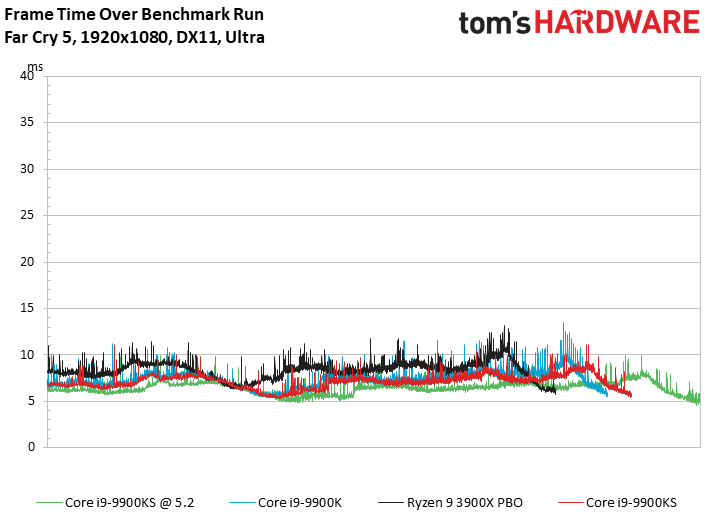

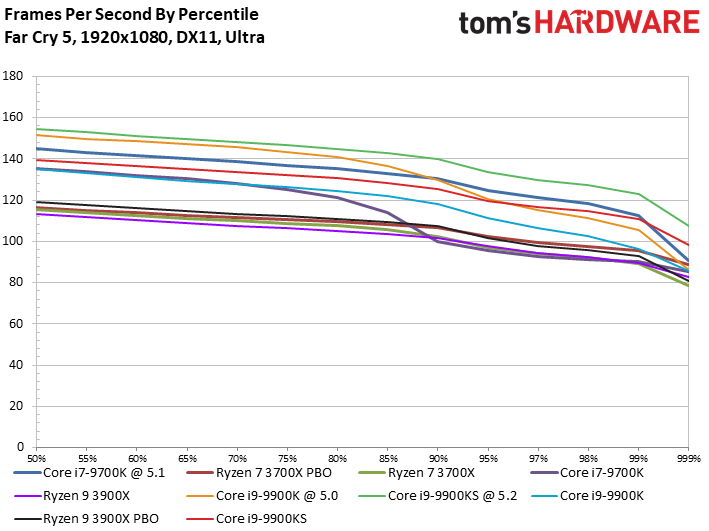

Far Cry 5

We see much the same trend with the Far Cry 5 benchmark results. AMD's Ryzen processors aren't as nimble in this benchmark and land at the bottom of the chart.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


Aspiring techie

If my memory serves me correctly, the Stockfish chess engine got stomped by Google's DeepMind AlphaZero, not the other way around.

joeblowsmynose

I just watched Steve Burke's review, and he notes some pretty interesting caveats with this chip ...

The title of this article: "5.0 GHz on All the Cores, All the Time" --- is not true if it is used with a motherboard manufacturer that stuck to Intel's TDP guidelines, like Asus did.

On Asus boards, this chip only boosts all cores to 5 GHz for a limited time, then drops back down to maintain reasonable power consumption, as per Intel's own TDP specification for this processor. So basically, Intel gave mobo makers specs to keep TDP at ~127W, but really it was just their way of lying about TDP while deferring that misinfo to the mobo makers. The mobo maker that actually chose not to allow a misleading TDP now gets punished ... sounds like an Intel move.

So I assume this will mean that Asus is going to be pissed with Intel, since Gigabyte and MSI boards will let it suck all the power it needs to maintain 5 GHz -- completely disregarding the TDP is the only way it boosts to 5 GHz on all cores, full time.

So how this chip performs has far more to do with the motherboard than the chip. This is stupid.

As an aside question ... what's the cooling power required for OCing? The OC testing here was done using 720mm worth of radiators on a custom loop - what's next ... LN2 testing? ;) We know the limit is somewhere between the H115i and the dual-rad custom loop, but I wonder where that is. A lot of cooling for any OCing anyway, it seems ... (but expected).

colson79

I find it sort of annoying how all these reviews always talk about how much better gaming performance is on Intel but leave out the fact that this is only at 1080p or lower. They push Intel like it's the only choice for gamers without mentioning the fact that almost every game has the same performance at resolutions over 1080p. I haven't gamed at 1080p for years. I think a lot of less informed people completely skip AMD as an option because these review sites push the Intel 1080p benchmarks so hard. At a minimum, I think they should include 2K and 4K benchmarks in their CPU reviews.

PCWarrior

joeblowsmynose said: I just watched Steve Burke's review, and he notes some pretty interesting caveats with this chip ... On Asus boards, this chip only boosts all core @5ghz for a limited time then drops back down to maintain reasonable power consumption, as per Intel's own TDP specification for this processor.

If "out of the box" was literally meant to mean "no BIOS fiddling whatsoever," then stock behaviour should also be with the XMP profile disabled, as you need to get into the BIOS in order to enable it. And on ASUS boards, the moment you go to enable XMP, it prompts you to load optimised defaults, which removes power limits. It should also be pointed out that no-power-limits and MCE are not the same thing and are not viewed as the same thing by Intel. Reviewers like Steve Burke from Gamers Nexus and Der8auer seem to conflate the two. They are NOT the same. No-power-limits sticks to the stock turbo frequency tables (for example, the regular 9900K still only boosts to the stock all-core turbo of 4.7 GHz, but instead of doing so for only 25 seconds, it does so indefinitely). MCE, on the other hand, means both no-power-limits AND making the all-core turbo boost equal to the single-core turbo boost (for example, for the regular 9900K, MCE enabled means boosting to 5 GHz on all cores indefinitely). For warranty purposes, MCE is considered an overclock by Intel. No-power-limits is NOT considered an overclock by Intel. It is stock, and it is left to the motherboard vendor how the settings are configured out of the box.
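The three board behaviours described in the comment above can be sketched as a small Python model. This is only an illustration of the distinction being drawn, not Intel's actual turbo algorithm: the 4.7 GHz, 5.0 GHz and 25-second figures are the ones quoted in the comment for the regular 9900K, while the `BoardPolicy` names, the `all_core_clock_ghz` function and the `sustained_ghz` value are hypothetical placeholders, since the real post-turbo clock depends on workload, cooling and the board's power settings.

```python
from enum import Enum


class BoardPolicy(Enum):
    """Hypothetical labels for the three motherboard behaviours in the comment."""
    POWER_LIMITS_ENFORCED = "power limits enforced"
    NO_POWER_LIMITS = "no power limits"
    MCE = "MCE enabled"


# Figures quoted in the comment for the regular Core i9-9900K.
ALL_CORE_TURBO_GHZ = 4.7
SINGLE_CORE_TURBO_GHZ = 5.0
TURBO_WINDOW_S = 25.0


def all_core_clock_ghz(policy, seconds_under_load, sustained_ghz=4.0):
    """Return the modelled all-core clock after a given time under load.

    sustained_ghz is a placeholder assumption, not a measured figure: it
    stands in for whatever clock fits inside the long-term power limit.
    """
    if policy is BoardPolicy.MCE:
        # MCE: no power limits AND all-core turbo raised to the single-core turbo.
        return SINGLE_CORE_TURBO_GHZ
    if policy is BoardPolicy.NO_POWER_LIMITS:
        # Stock turbo table, but the turbo window never expires.
        return ALL_CORE_TURBO_GHZ
    # Limits enforced: stock all-core turbo only inside the turbo window.
    if seconds_under_load <= TURBO_WINDOW_S:
        return ALL_CORE_TURBO_GHZ
    return sustained_ghz


if __name__ == "__main__":
    for policy in BoardPolicy:
        clocks = [all_core_clock_ghz(policy, t) for t in (10, 60, 600)]
        print(f"{policy.value}: {clocks}")
```

In this simplified view, the only difference between the first two policies is whether the turbo window ever ends, and MCE differs by also raising the all-core target.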

PCWarrior

colson79 said: I find it sort of annoying how all these reviews always talk about how much better gaming performance is on Intel but leave out the fact that that is only 1080P or lower.

They use 1080p because it is currently the highest resolution at which a top GPU still leaves a definite CPU bottleneck, which is what makes it a CPU test. It is not a CPU test when there is a GPU bottleneck. With current GPUs, even the likes of the 2080 Ti, you can have an i3-8100 and still do as well in 4K gaming as with a 9900K. However, fast forward to the future, and with something like a 3080 Ti or a 4080 Ti you will have a CPU bottleneck across all games at 1440p and probably even at 4K. With a 3080 Ti or a 4080 Ti you will get, at 1440p and 4K, the same fps that you currently get with a 2080 Ti at 1080p. You can verify this by going backwards to a lower resolution: with a 2080 Ti, you get the same fps at 720p and 1080p, because in a CPU-bottleneck situation fps can only increase with a better CPU, not a better GPU.
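The bottleneck argument in this reply boils down to "delivered fps is capped by whichever of the CPU or GPU is slower." A minimal Python sketch of that reasoning follows. The function names and every frame-rate number in it are made up for illustration and are not measurements from this review.

```python
def delivered_fps(cpu_fps_cap, gpu_fps_cap):
    """Frame rate is limited by the slower of the two components."""
    return min(cpu_fps_cap, gpu_fps_cap)


def bottleneck(cpu_fps_cap, gpu_fps_cap):
    """Name the component that is currently limiting the frame rate."""
    return "cpu" if cpu_fps_cap <= gpu_fps_cap else "gpu"


# Hypothetical caps: how many fps each CPU could feed the GPU, and how many
# fps the same GPU could render at each resolution. All numbers are invented.
CPU_CAPS = {"faster CPU": 160, "slower CPU": 120}
GPU_CAPS = {"1080p": 200, "1440p": 140, "4K": 70}

if __name__ == "__main__":
    for resolution, gpu_cap in GPU_CAPS.items():
        for cpu, cpu_cap in CPU_CAPS.items():
            fps = delivered_fps(cpu_cap, gpu_cap)
            limit = bottleneck(cpu_cap, gpu_cap)
            print(f"{resolution:>5} | {cpu}: {fps} fps ({limit}-bound)")
```

With these invented caps, the two CPUs are separated at 1080p, the gap narrows at 1440p, and both land on the same GPU-bound figure at 4K. That is the pattern both sides of the thread describe: the CPU difference is real, but it only shows when the GPU is not the limit.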

joeblowsmynose

PCWarrior said: If "out of the box" was literally meant to mean "no BIOS fiddling whatsoever," then stock behaviour should also be with the XMP profile disabled [...]

A complicated way to lie about TDP ... lol. Whatever you say, it's disingenuous.

TJ Hooker

joeblowsmynose said: On Asus boards, this chip only boosts all core @5ghz for a limited time then drops back down to maintain reasonable power consumption, as per Intel's own TDP specification for this processor. So basically, Intel gave mobo makers specs to keep TDP ~127w but really it was just their way of lying about TDP but deferring that misinfo to the mobo makers. The mobo maker that actually chose not to allow a misleading TDP now gets punished ... sounds like an Intel move.

FYI, this applies to all Intel CPUs with Turbo Boost, not just the 9900KS. Officially they're all supposed to have a limited-duration boost, but nearly all mobos remove this limit. And they've been doing so for some time, so it seems Intel doesn't really care.

Which makes sense, as it improves their benchmark scores, and if anyone complains about power draw they can just point to their stated rules for power levels and blame the mobo manufacturers, even though they've implicitly given them permission to do this by allowing it to go on in a widespread fashion.

TJ Hooker

Last page:

"Bear in mind that we tested with an Nvidia GeForce GTX 1080 at 1920x1080 to alleviate graphics-imposed bottlenecks."

Should say 2080 Ti.

Soaptrail

PCWarrior said: They use 1080p because this is currently the highest resolution where with a top GPU there is definitely a cpu bottleneck and it is therefore a cpu test. [...]

Yes, but they should include a couple of 1440p and 4K benchmarks for context. It could still be beneficial for buyers to get a cheaper option like the AMD Ryzen 5 3600 and upgrade in a couple of years rather than buy the 9900KS and stick with it for 6 years.