Talking Heads: VGA Manager Edition, September 2010

CPU/GPU Hybrids And Performance Integrated Graphics

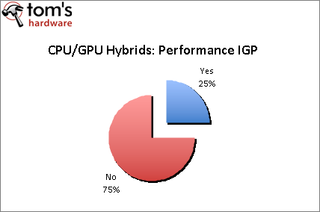

Question: CPU/GPU hybrid designs like Sandy Bridge and Llano potentially eliminate the need for a separate graphics card. Historically, integrated graphics have been inadequate for everything above productivity-oriented desktops. Do you think the integrated graphics processors in the first generation of CPU/GPU hybrids are powerful enough to replace low-end to mid-range discrete graphic solutions?

- The definition of "powerful enough" really depends on what kinds of applications are applied. Integrated graphics are good enough for 2D and some 3D applications. However, when more complicated 3D applications are required, integrated graphics may not be enough. It is always the software and hardware trading game. For basic use, it is enough.

- CPU/GPU hybrid designs might have an impact on the low-end graphics card market, but they will never replace mid-range discrete graphic solutions, at least for the time being. Intel's solution is still unsatisfactory due to poor drivers. AMD might be better on the driver side, but we still do not see the level of performance that can be matched against a mid-range graphics card. Although CPU/GPU hybrid designs provide a step up from historical IGP solutions, discrete graphics cards, on the other hand, are also improving at high speed.

- Generally, if users have low-end to mid-range discrete graphics cards for their home media solutions, CPU/GPU hybrids may be powerful enough to provide a fluent video experience. But for mid-range gaming products, it remains to be seen whether CPU/GPU hybrids have enough processing capabilities.

- The design concepts in Fusion products especially include a multi-processing GPU-style architecture more than capable of delivering mainstream graphics performance, and also using the GPU architecture for general-purpose applications that benefit from a multi-stream architecture, like rendering, video transcoding, and the many other applications that can be accelerated by ATI Stream today.

- The integrated graphics is only for low-end graphic solutions.

- Discrete graphics cards should still have better performance, and end-users should consider the total cost of the whole PC. A CPU/GPU hybrid solution should carry a higher cost premium than a normal one.

- For very low-end systems, these architectures should help bring down the cost of the total system. However, I don't see this concept ever replacing mid-range graphics solutions.

It is a mixed bag here. AMD- and Nvidia-exclusive vendors don’t lean one way or the other. More than likely, our question was subject to different interpretations. The delta between the low-end and mid-level discrete solutions is actually quite large. And as one person pointed out, it also depends on how we are defining market spaces and what we mean by “powerful enough.” Does this mean powerful relative to this generation or the generation that is to be concurrently available with Sandy Bridge and Llano?

Looking back, we should have asked two questions here. Our actual aim was to gauge whether anyone felt as if consumers might rely more heavily on upcoming integrated graphics engines, forgoing discrete cards altogether. After all, this is the very question analysts have been dying to ask. Even though we are withholding company names, most of our responses were given in an even more unofficial capacity because of the level of technical details disclosed. We have already had Sandy Bridge samples in our labs and have seen the Intel roadmaps even past 2012. Short of a pricing problem, Sandy Bridge and its successors have the potential to match everything below today’s $75 discrete graphics space.

However, it is not sufficient to deliver mainstream graphics performance on par with today’s graphics solutions. Today’s $75 graphics card will inevitably be tomorrow’s $50 graphics card, so these hybrids will need to evolve in tandem with the discrete space if they plan to eat into nontraditional market spaces. If the two companies want to demonstrate tangible value in what they’re doing (a single-chip solution capable of general-purpose processing and graphics), then they have to take two steps forward. This is in addition to making sure that software developers adopt the processor extensions necessary to capitalize on the performance that these hybrids can provide.

It is important to point out that, in the discrete mid-range segment, driver quality can provide critical and noticeable benefits to the user experience. In the past, Intel was slow to provide driver-based improvements on a regular basis. There needs to be an abrupt change here. Intel has a good opportunity to put an emphasis on software development, an often-understated necessity given AMD’s software resources. To the company’s credit, we recently had a discussion with software engineers who were previously working on Larrabee and are now focused on improving the integrated graphics drivers. However, there is still a lot of room for improvement, especially if they want to compete with AMD’s Fusion campaign.

Perhaps the most interesting comment was the idea that, as a total platform, Sandy Bridge and Llano will actually cost more. If AMD and Intel both want success, this can’t occur. The idea of integration is supposed to drive the cost of the system down. A hybrid that delivers the equivalent of a $60 graphics solution is going to be a hard sell if the graphics portion adds a $100 premium. One of our sources has access to early pricing information, so we are left wondering if the total cost of ownership concern is perhaps also tied to an upgrade. If getting these next-gen CPU/GPU hybrids means buying a new processor, a new motherboard, and, if you’re a true gamer, adding a graphics card to the equation as well, then yes, we’re looking at an expensive proposition. The average age of the PC may very well continue to rise. If this is the case, we certainly could understand this argument, as Sandy Bridge and Llano platforms compete with their predecessors.

Yet research in one begets the other, so we will continue to see integrated graphics processors advancing alongside discrete graphics. They are already somewhat competitive in mainstream applications. IGP solutions started to really eat their way into environments normally addressed by low-end graphics cards years back (for example, ATI’s RS300). The big question now is whether they can make serious inroads into environments where we’d normally expect to see mid-range graphics cards.


The most realistic appraisals of the situation were responses #4 and #7. If you concede that IGP solutions can be competitively priced, then replacing low-end discrete graphics is a foregone conclusion once the right factors line up: driver support, discretionary spending, economic climate, etc. If hybrids can’t be priced at the same cost/performance ratio as their discrete brethren, both companies have some serious explaining to do to customers and shareholders. Early reports from some of our inquiries regarding Llano suggest performance in the range of the mainstream Radeon HD 5550, or possibly beyond. Given what this means for Bulldozer, once you factor in regular driver enhancements, AMD can potentially pull out a bazooka to counter Intel’s Uzi. Better yet, in 2011, we estimate IGP can cause entry-level and low-to-mid-range desktop prices to fall $35-65 further, which is good news for consumers all around. However, the success of IGP solutions for mainstream (mid-range) desktops continues to be unclear. We say “unclear” because we are referring to the current sweet spot for mainstream discrete graphics: $125 to $175 solutions.

An increasing number of consumers are engaging in multitasking workloads with heavy 2D and 3D content interaction. To what extent does this trend continue? Is DX11 gaming going to be made possible in the mainstream space, or will entry-level solutions continue to be too anemic to drive DX11-class effects at “reasonable” resolutions? Does this mean playing at 25+ frames per second or just getting by at sub-20? What about 3D content creation? Despite a majority of the world’s PCs still using Windows XP (according to the same Microsoft partner conference mentioned earlier), the upgrade to Windows 7 is geared specifically for a more multimedia-heavy experience.

There seem to be two likely scenarios for discrete graphics vendors:

- In the future, the performance demands of the mainstream market eventually migrate closer to what we expect from the high-end space. Almost a mesh of the two, something like splitting the current high-end market into high-low and high-high spaces, with a few offerings peppered in the middle.

- The other possibility is that we see a more granular mainstream market. Something more tiered in scale, where graphic card companies offer solutions for the spectrum that Sandy Bridge and Llano cannot occupy.

Right now, it is hard to see how this is going to play out. A successful launch of the new Fermi-based DX11 cards and AMD’s late-Q4 offerings could be indicative of the mainstream market’s eventual move as far as (performance) demands go.

At the end of the day, as our own Don Woligroski says, “The prospect of playable 720p DirectX 11-class visuals with inexpensive integrated logic cannot be understated.” For the vast majority of mainstream gamers, an AMD IGP solution on par with the Radeon HD 5550’s performance provides muscle to spare. World of Warcraft gamers definitely won’t complain. In that context, AMD will definitely have a game changer that Intel and Nvidia will need to address, with or without programmable logic hitting the market in 2011. Keep in mind that while all the buzz is about Llano, it is only because AMD is on the marketing warpath. Intel is going to provide strong competition with its high-end 2000-series. That said, given the progression of beta transcoding driver performance we’ve been tracking over the past few months, we would be more comfortable seeing roughly another 15% improvement in Intel’s final product before it takes on the next generation of discrete graphics. Nvidia’s recent price cuts don’t really change the landscape of the industry; they just change the terms of the battle. As far as we are concerned, this is still a three-way bar fight, except two people just picked up billiard cues.


- Who knows, by 2020 AMD may have purchased Nvidia and renamed GeForce to GeRadeon... And talk about integrating RAM, processor, graphics, and hard drive in a single chip and naming it "MegaFusion"... But there will still be Apple selling Apple TV without 1080p support, and yeah, free bumpers for your iPods (which won't play songs if touched by hands!!!)

- Kelavarus: That's kind of interesting. The guy talked about Nvidia taking chunks out of AMD's entrenched position this holiday with new Fermi offerings, but seemed to miss the fact that, most likely, by the holiday AMD is already going to be starting to roll out their new line. Won't that have any effect on Nvidia?

- The problem I see is that while AMD, Intel, and Nvidia are all releasing impressive hardware, no company is making impressive software to take advantage of it. In the simplest example, we all have gigs of video RAM sitting around now, so why has no one written a program that allows it to be used when not doing 3D work, such as a RAM drive or pooling it in with system RAM? Similarly with GPUs, we were promised years ago that PhysX would lead to amazing advances in AI and game realism, yet it simply hasn't appeared.

  The anger that people showed towards Vista and its horrible bloat should be directed at all major software companies. None of them have achieved anything worthwhile in a very long time.

- corser: I do not think that including an IGP on the processor die and connecting them means that discrete graphics vendors are dead. Some people will have graphics requirements that overwhelm the IGP and will connect an 'EGP' (External Graphics Processor). Uhmmmm... maybe I created a whole new acronym.

  Since the start of that idea, I have believed that an IGP on the processor die could serve to offload math operations and complex transformations from the CPU to the IGP, freeing CPU cycles for what the CPU is intended to do.

  Many years ago Apple did something similar with their Quadra models, which sported a dedicated DSP to offload some tasks from the processor.

  My personal view on all this hype is that we're moving to a different computing model: from a point where all the work was directed to the CPU, we are taking small steps toward specialized processors around the CPU doing part of its work (think of the first fixed-instruction graphics accelerators, sound cards that offload the CPU, PhysX, and others).

  From a standalone CPU -> SMP -> A-SMP (Asymmetric SMP).

- silky salamandr: I agree with Scort. We have all this fire sitting on our desks and it means nothing if there's no software to utilize it. While I love the advancement in technology, I really would like devs to catch up with the hardware side of things. I think everybody is going crazy adding more cores and having an arms race as a marketing tick mark, but there are no devs stepping up to write for it. We all have invested so much money into what we love, but very few of us (not me at all) can actually code. With that being said, most of our machines are "held hostage" in what they can and cannot do.

  But great read.

- corser: Hardware is always out well before software starts to take advantage of it. It has been like this since the start of computing.

- Darkerson: Very informative article. I'm hoping to see more stuff like this down the line. Keep up the good work!

- jestersage: Awesome start for a very ambitious series. I hope we get more insights, and soon.

  I agree with Snort and silky salamandr: we are held back by developments on the software side. Maybe it's because developers need to take backwards compatibility into consideration. Just take games, for example: developers would like to keep the minimum and even recommended specs down so that they can get more customers to buy. So we see games made tough for the top-end hardware that, thru tweaks and reduced detail, can still be played on a 6-year-old Pentium 4 with a 128MB AGP card.

  From a business consumer standpoint, and given that I work for a company that still uses tens of thousands of Pentium 4s for productivity-related purposes, I figure that adoption of the GPU/CPU in the business space will not happen for another 5-7 years AFTER they launch. There is simply no need for an i3 if a Core 2-derivative Pentium Dual-Core or Athlon X2 can still do spreadsheets, word processing, email, research, etc. Pricing will definitely play into the timelines as the technology ages (or matures), but both companies will have to get money to pay for all that R&D from somewhere, right?

- smile9999: Great article, btw. What I got out of all this is that the hybrid CPU/GPU model seems more of a gimmick than an actual game changer. The low-end market has a lot of players; IGPs are a major player in that field and they are great at it, and if that wasn't enough, there are still the Nvidia and ATI offerings. So I don't think it will really shake the water as much as they predict.
