MIT Attacks Wireless VR Problem With 'Millimeter Waves' Solution

Technology advances at an incredible pace these days. Less than a year ago, the world was anxiously awaiting notice of the launch dates for the Oculus Rift and HTC Vive consumer VR systems. Now, less than eight months after the magical technology of virtual reality was bestowed upon the world, it appears that cutting the cord is the next frontier.

The Rift and Vive offer incredible experiences, but there’s no denying that both would be much improved without the cables that leave you tethered to your computer. However, wireless VR wasn’t a feasible option when the Rift and Vive were in development. The bandwidth demands of high-quality, position-tracked VR exceed the limits of conventional Wi-Fi and Bluetooth, so the idea of wireless HMDs was shelved in order to avoid delaying the release of consumer VR hardware.

Fortunately, thanks to the hard work of multiple companies, and some of the gifted minds at MIT, wireless VR is coming along faster than many expected. In September, we reported that a company called Quark VR is working closely with Valve to build a wireless system for the Vive, and on November 11, HTC China opened pre-orders for a limited run of wireless upgrade kits for the Vive from a company called TPCAST. Intel also recently demonstrated an experimental wireless VR headset system. And now, researchers at the Computer Science and Artificial Intelligence Laboratory at MIT (CSAIL) are working on a solution for wireless data transmission suitable for virtual reality systems.

“It’s very exciting to get a step closer to being able to deliver a high-resolution, wireless-VR experience,” said MIT professor Dina Katabi, whose research group has developed the technology. “The ability to use a cordless headset really deepens the immersive experience of virtual reality and opens up a range of other applications.”

The team at MIT believes that “millimeter waves” (mmWaves) are the solution for replacing the HDMI cable with a wireless signal. MmWaves are signals that operate in the 24GHz and higher range. These high-frequency radio signals can transmit “billions of bits per second,” which makes them suitable for high-bandwidth tasks such as transmitting low-latency stereoscopic video signals.
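To put “billions of bits per second” in context, here is a rough back-of-the-envelope sketch of the raw video data rate a headset like the Rift or Vive needs. It assumes the combined 2160x1200 panel resolution at 90Hz used by both headsets and 24 bits per pixel, and it ignores blanking intervals, compression, and protocol overhead; the function name is made up for illustration.

```python
def uncompressed_video_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Raw (uncompressed) video data rate in gigabits per second."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


# Combined panel resolution and refresh rate of the first consumer Rift and Vive.
# 24 bits per pixel is an assumption (8 bits per color channel).
rate = uncompressed_video_gbps(2160, 1200, 90)
print(f"{rate:.2f} Gbit/s")  # prints "5.60 Gbit/s"
```

Under those assumptions the stream needs several gigabits per second, which is why a multi-gigabit link is attractive as a cable replacement.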

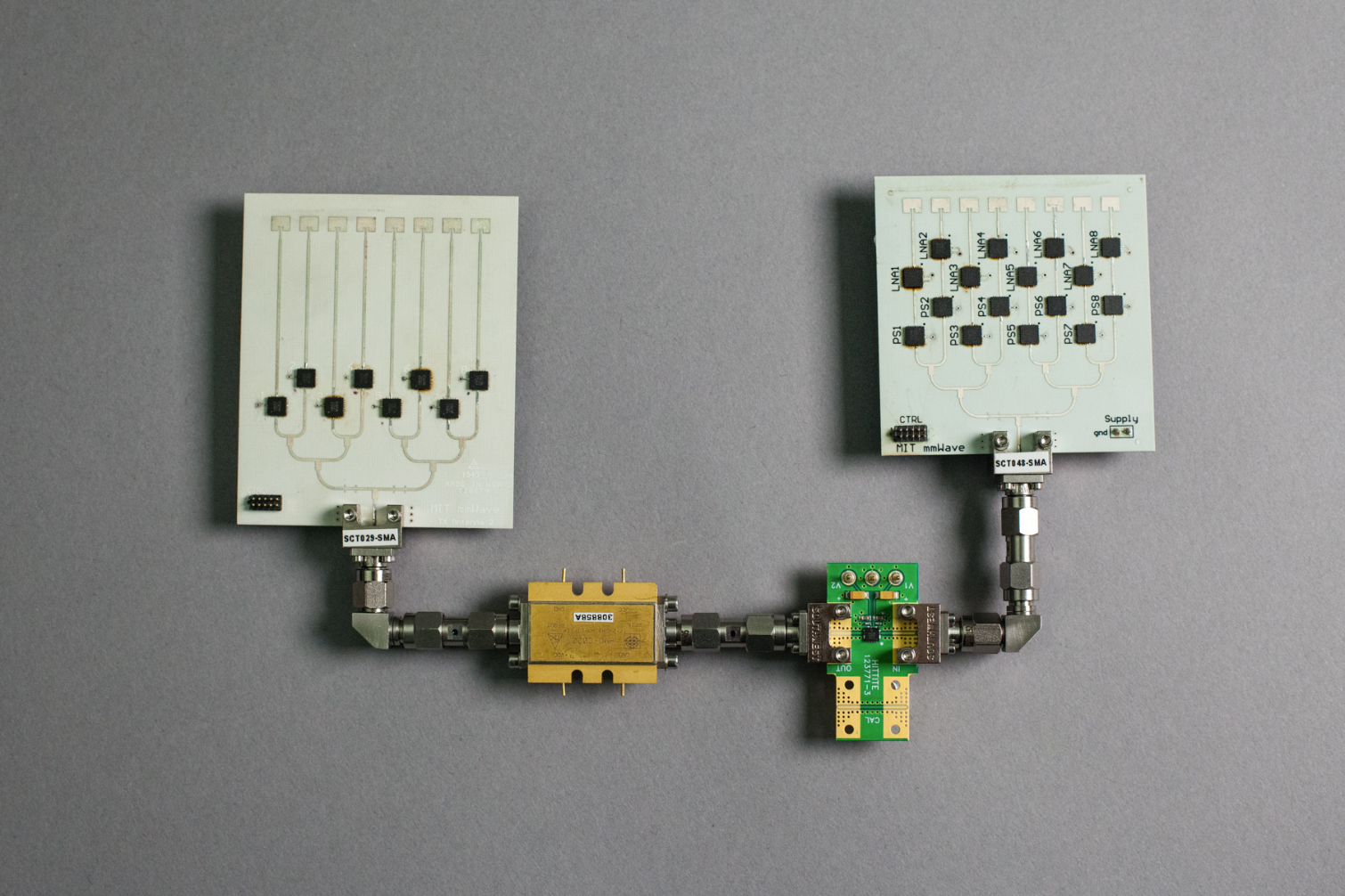

MIT's solution isn’t as simple as plugging a transmitter into the computer and strapping a receiver onto the headset, though. MmWave antennas are directional, and the beams that they cast are narrow, so they aren’t well suited for room-scale VR. However, the team at MIT is developing a system called MoVR that can follow the receiver’s movement automatically, which enables wireless room-scale VR tracking.

MoVR doesn’t receive or transmit the VR data; it simply reflects the signal from the transmitter towards the receiver. The MoVR system calculates the angle of reflection at startup and calibrates itself to reflect the signal where it needs to be. The researchers said that calibration is the most time-consuming part of the process. Once it has the angle calibrated, the MoVR system uses the position data from the VR HMD via Bluetooth to determine the best angle at any given moment.
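The geometry behind steering a reflected beam toward a moving headset can be sketched in a few lines. This is not MIT's algorithm, only a minimal 2D illustration under simple assumptions: positions are floor-plan coordinates in meters, the reflector can aim independently toward the transmitter and toward the headset, and the function names are hypothetical.

```python
import math


def bearing_deg(origin, target):
    """Direction of the ray from origin to target, in degrees
    counterclockwise from the +x axis."""
    dx = target[0] - origin[0]
    dy = target[1] - origin[1]
    return math.degrees(math.atan2(dy, dx))


def reflector_angles(transmitter, reflector, headset):
    """Return (incoming, outgoing) bearings as seen from the reflector."""
    incoming = bearing_deg(reflector, transmitter)  # fixed once calibrated
    outgoing = bearing_deg(reflector, headset)      # changes as the user moves
    return incoming, outgoing


# Hypothetical room layout: transmitter at the PC, reflector on a wall.
transmitter = (0.0, 0.0)
reflector = (4.0, 0.0)

# Each new headset position (reported by the tracking system) gives a new
# outgoing angle, while the incoming angle stays the same.
for headset in [(4.0, 3.0), (1.0, 3.0)]:
    incoming, outgoing = reflector_angles(transmitter, reflector, headset)
    print(f"headset at {headset}: in {incoming:.1f} deg, out {outgoing:.1f} deg")
```

The incoming angle only has to be found once because the transmitter and reflector do not move, which is consistent with the one-time calibration step described above; only the outgoing angle has to track the headset.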

Data transmission via mmWave is fast. MIT said its system could transmit the VR signal in under 10ms, and reflecting the wireless signal has no effect on the data rate.

Unlike the Quark VR and TPCAST wireless systems, MIT's MoVR wireless system is just experimental. The researchers didn’t indicate specific plans to introduce the technology as a consumer product. For now, MIT is focusing on refining the technology. Future goals include shrinking the hardware to “be as small as a smartphone” and creating the ability to have more than one wireless headset in the same space.

This may be the year of VR, but 2017 is shaping up to be the year of wireless VR.

Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.

-

nutjob2 "Now, less than eight months after the magical technology of virtual reality was bestowed upon the world..."

Another Millennial who thinks the world was created the day he was born. -

kcarbotte 18868154 said: "Now, less than eight months after the magical technology of virtual reality was bestowed upon the world..."

Another Millennial who thinks the world was created the day he was born.

Well, that's the first time anyone has ever referred to me as a millennial. lol -

clonazepam I'm confused. I've seen testing on a standard PC with a wired USB mouse where they hooked up an LED directly to the mouse. Then they taped the LED to the monitor. Using a high-speed camera, they recorded the latency between clicking the mouse (LED light) and the action taking place on the screen.

Now we're talking about a wireless controller to the PC, and then to the headset. Also, headset movement to the PC and then back. What kind of round trips are we talking about?

I think a 60 hz monitor, v-sync with double or triple buffer sees a latency of around 140ms average. The average latency for 144fps, 144Hz vsync, double triple buffer is around 80ms or thereabouts. If you just forego vsync altogether on the 144Hz and shoot for 300fps, you're looking at about 40ms avg.

Where does all of this tech land compared to that? Perhaps they aren't even aiming for a gaming environment anyway, so it'd be fine, if a little frustrating like the latency when trying to bring up your cable or satellite service's guide. -

none12345 "I think a 60 hz monitor, v-sync with double or triple buffer sees a latency of around 140ms average. The average latency for 144fps, 144Hz vsync, double triple buffer is around 80ms or thereabouts. If you just forego vsync altogether on the 144Hz and shoot for 300fps, you're looking at about 40ms avg."

Just changing the monitor in your example wouldn't affect the latency numbers as much as you indicate.

60Hz = 16.66ms, 144Hz = 6.94ms, 300Hz = 3.33ms. In all three cases, with vsync, that's on top of whatever delay you get from the game engine to the graphics driver to the link to the monitor, as well as the delay from the mouse click or key press until the game engine registers it. Changing the monitor wouldn't affect any of that. If you have a GPU rendering 60 fps, you are seeing a new frame every ~16.66ms, but with triple buffering it's at least 50ms old. If we had FreeSync/G-Sync, throw on another 10ms or so; if we have neither, add the above monitor numbers. Then throw on, say, another 1/4 to 1/2 of that again for the image to actually stabilize, and say another 10ms for the mouse click or key press to be recognized by the game engine.

The 80ms guess is probably pretty good if you have a GPU rendering 60 fps and a 60Hz monitor: 10ms mouse/keyboard + 50ms triple buffering + 16.66ms cycle time + 4ms image stabilization + say 10ms everything else.

However, changing the monitor only affects the 16.66+4ms number above. A 300Hz monitor would only be, say, 3.33ms + 1.6 = 5ms, so you'd only save 15ms and be at about 65ms or so.

What would cut off a lot more latency would be changing the GPU to a 300 fps GPU on the same crappy 60Hz monitor. Now you can cut into that huge 50ms delay in the triple buffer and drop it to 10ms, and on the same crappy 60Hz monitor you are down to a ~40ms delay. Of course, it would make no sense to buy a GPU that can do 300 fps and cheap out on a 60Hz monitor!

Do both and you could get down to about 25ms. Even though the GPU can render in 3ms and the monitor can display in 3ms, there are still other sources of latency you haven't fixed.

Of course, don't forget human reaction time: add about 250ms for average human reaction time... so, umm, oops, none of the above mattered because humans aren't very quick by comparison! -
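The latency arithmetic in the comment above can be reproduced with a small sketch. It is only a model of that comment's reasoning, not a measurement: the function names are hypothetical, the 10ms input and 10ms "everything else" figures are taken from the comment, triple buffering is assumed to cost three GPU frame times, and image stabilization is assumed to be a quarter of the refresh interval.

```python
def frame_interval_ms(rate_hz):
    """Time between frames (or refreshes) at the given rate."""
    return 1000.0 / rate_hz


def click_to_display_ms(gpu_fps, monitor_hz, input_ms=10.0, other_ms=10.0,
                        buffered_frames=3, settle_fraction=0.25):
    """Sum the latency components listed in the comment (all assumptions)."""
    buffer_ms = buffered_frames * frame_interval_ms(gpu_fps)  # triple buffering
    refresh_ms = frame_interval_ms(monitor_hz)                # one refresh cycle
    settle_ms = settle_fraction * refresh_ms                  # image stabilization
    return input_ms + buffer_ms + refresh_ms + settle_ms + other_ms


for gpu_fps, monitor_hz in [(60, 60), (60, 300), (300, 60), (300, 300)]:
    total = click_to_display_ms(gpu_fps, monitor_hz)
    print(f"GPU {gpu_fps:>3} fps, monitor {monitor_hz:>3} Hz: {total:5.1f} ms")
```

Under these assumptions the totals land roughly 10ms above the rounded figures in the comment, but the savings between scenarios line up: about 17ms from the faster monitor (the comment rounds this to 15ms) and 40ms from the faster GPU.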

clonazepam Yeah, reaction time matters when doing something for the first time. It's fine until you begin predicting when to enter your input, which can be down to just a few frames. Now it matters quite a bit. -

anbello262 I would actually like to have a link or more info about those numbers. From my understanding, the real numbers are a lot lower than yours, but I might be believing some incorrect rumours. Still, I believe that claiming "input lag" for a simple mouse click to be over 100ms is far from reality. Having more than 100ms total input lag for a simple mouse click is very easily noticeable. I think you're off by a factor of 5 at least.

Maybe, when you are saying "average" you are also taking into account very slow TVs that are not designed to be used with any kind of fast input instruction? -

clonazepam 18869009 said: I would actually like to have a link or more info about those numbers. From my understanding, the real numbers are a lot lower than yours, but I might be believing some incorrect rumours. Still, I believe that claiming "input lag" for a simple mouse click to be over 100ms is far from reality. Having more than 100ms total input lag for a simple mouse click is very easily noticeable. I think you're off by a factor of 5 at least.

Maybe, when you are saying "average" you are also taking into account very slow TVs that are not designed to be used with any kind of fast input instruction?

I'm skeptical as well.

https://www.youtube.com/watch?v=L07t_mY2LEU

-

TJ Hooker 18869045 said: I'm skeptical as well.

https://www.youtube.com/watch?v=L07t_mY2LEU

Those results just don't make sense to me. Fast-sync as I understand it, and how it is explained in the video, is the very definition of triple buffering. Yet somehow triple buffering doubles input lag compared to fast-sync.

Also, he states that G-sync/free-sync add input lag, without providing any evidence/reasoning behind that. I don't think that's the case unless you're trying to drive fps higher than monitor refresh rate.

All that being said, biggest difference in input lag was only like 60 ms, which I'm pretty sure is insignificant given the limitations of human reaction time. -

SockPuppet None of you seem to understand what is happening here.

In VR, you have a movement-to-photon budget of 20ms. From the time the user moves their head to the time the screen updates and fires a photon at the user's eyeball, you have a MAXIMUM of 20ms. If you go over that, your brain realizes that something isn't right, decides that the most likely cause of the disconnect between what you see and how you moved is food poisoning, and empties your stomach in response.
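A budget like this is easiest to reason about by adding up each stage between head movement and display. The sketch below does only that; the 20ms figure comes from the comment above, while the stage names and timings are hypothetical values chosen purely for illustration, not measurements of any real headset or wireless link.

```python
MOTION_TO_PHOTON_BUDGET_MS = 20.0  # figure quoted in the comment above


def remaining_budget_ms(stage_latencies_ms, budget_ms=MOTION_TO_PHOTON_BUDGET_MS):
    """Headroom left after summing every stage (negative means over budget)."""
    return budget_ms - sum(stage_latencies_ms.values())


# Hypothetical stage timings in milliseconds, for illustration only.
stages = {
    "head tracking": 2.0,
    "render (one 90Hz frame)": 1000.0 / 90,
    "wireless link": 5.0,
    "display scan-out": 1.5,
}

headroom = remaining_budget_ms(stages)
status = "within" if headroom >= 0 else "over"
print(f"{status} budget, headroom {headroom:.2f} ms")
```

The point of the exercise is that a wireless link does not get the whole budget to itself: whatever it adds comes out of the same total that tracking, rendering, and the display already share.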