QiVARI Eye Tracking Tech Taking On Tobii, Fove In AR/VR HMDs

In the burgeoning VR market, eye tracking may become one of the more coveted features for upcoming VR HMDs. We've seen solutions from the likes of Tobii and Fove, and both hold a great deal of promise as they continue to develop, but at CES we encountered another, more unlikely contender.

At this point it's a proof of concept more than anything, but two companies -- EyeTech Digital Systems and Quantum Interface -- are partnering to create QiVARI, which they hope will become industry-leading eye tracking technology.

A New Market

I found Keith Jackson, EyeTech's director of sales and marketing, and Jonathan Josephson, Quantum Interface's COO/CTO, in the Sands at CES. Unlike so many of the huge, multi-story booths (yes, multi-story, with rooms) we see at the show, the two companies were sharing a small booth. However, the size of the booth belied the passion and apparent bona fides of its denizens. Jackson, Josephson, and the rest of their respective crews are not fresh-faced youngsters hawking their first pretty cool idea; they're industry veterans looking to combine their expertise and IP and push into the consumer VR/AR market.

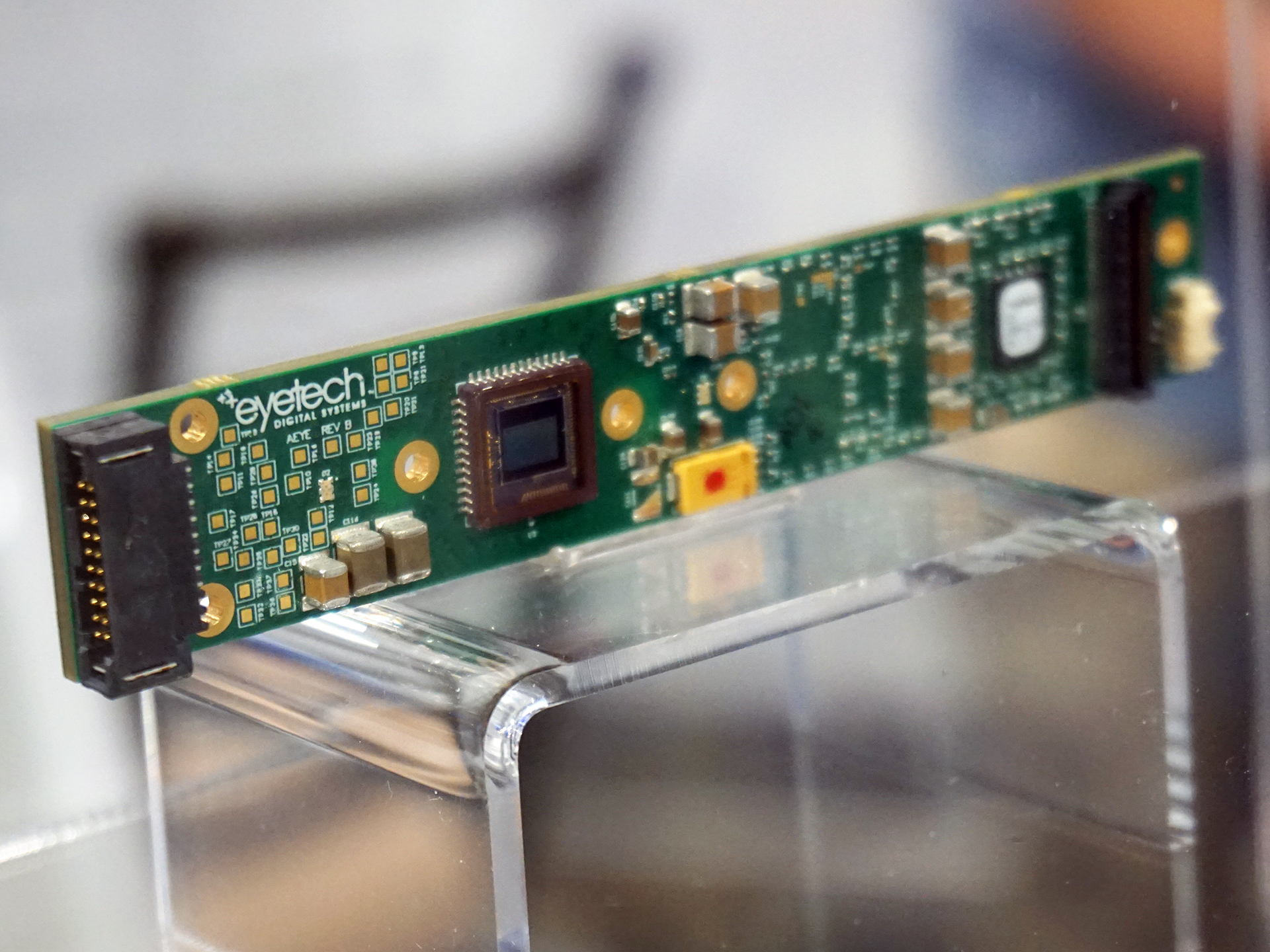

EyeTech is providing the hardware -- the PCB, the sensors and so on -- and QI is bringing its predictive software and UI to the table. The package is basically a vision processor.

Eyes, The Window To Fast And Intuitive Navigation

We currently have a number of means of input and navigation for our devices. There's the tried-and-true mouse and keyboard, as well as touch screens, voice input, and body tracking and gesture control like the Kinect or Leap Motion. QI's Josephson, though, said that eye tracking is dynamic, and therefore preferable in many situations to touch, gesture and voice.

I pressed him on that point; how are the aforementioned methods of input not dynamic? After all, they all relate to natural functions of the body, just like eye tracking. However, QiVARI has an edge, he explained, because its software is predictive.


QiVARI employs three keys:

- Change of motion (angle, speed, etc.)
- The user is the origin of the movement
- Vector drawing, which is extrapolated to predict what the user will do

Together, those three aspects of the software and UI are supposedly able to register what your eye wants to do and use vectoring to take you there before you realize what's happening. It tries to stay a step ahead of you, so even though there is latency ("one over the frame rate," or roughly 17 ms at 60 fps), the software smooths things over so that ideally, you'll never notice it.
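To make the idea of extrapolating a gaze vector more concrete, here is a minimal Python sketch. It is not QiVARI's algorithm, which the companies did not detail; it assumes the simplest possible approach, constant-velocity (linear) extrapolation from the two most recent gaze samples. The function names `frame_latency_ms` and `predict_gaze` are hypothetical and exist only for illustration.

```python
from typing import Tuple

Point = Tuple[float, float]  # (x, y) gaze position in screen units


def frame_latency_ms(fps: float) -> float:
    """Duration of one frame in milliseconds ("one over the frame rate")."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def predict_gaze(prev: Point, curr: Point, dt_ms: float, lookahead_ms: float) -> Point:
    """Assumed model: extend the motion vector between two samples forward in time.

    prev and curr are gaze samples taken dt_ms apart; the result is where the
    gaze would land lookahead_ms later if it kept the same speed and direction.
    """
    if dt_ms <= 0:
        raise ValueError("dt_ms must be positive")
    vx = (curr[0] - prev[0]) / dt_ms  # velocity in units per millisecond
    vy = (curr[1] - prev[1]) / dt_ms
    return (curr[0] + vx * lookahead_ms, curr[1] + vy * lookahead_ms)


if __name__ == "__main__":
    latency = frame_latency_ms(60)  # one frame at 60 fps
    print(f"one frame at 60 fps: {latency:.1f} ms")
    # Look one frame ahead to mask that latency.
    print(predict_gaze((100.0, 100.0), (110.0, 105.0), latency, latency))
```

Looking ahead by exactly one frame is one plausible way to hide a one-frame delay; a real system would presumably also account for changes in angle and speed rather than assume straight-line motion.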

Jackson said that this technology primarily differs from Tobii's in that Tobii uses two cameras and does all the processing on the PC, whereas QiVARI has a single camera and performs all the processing on-device. However, at some point, QiVARI will likely employ a two-camera setup for better accuracy, as well.

Getting Here And There

The group showed me a demo of a mocked-up interface. You bring up the menu just by looking at the button you want. From the main menu, you can look in eight different directions (L, R, up, down, upper right, upper left, lower right, lower left), and you get three options at each location. Therefore, from a single menu, you have quick access to 24 total items. Imagine the multiplicity from there.
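The arithmetic behind that menu can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the demo's actual code: it assumes the eight directions are equal 45-degree sectors around the menu center, that y increases upward, and that every item opens another menu of the same shape. `direction_for` and `reachable_items` are hypothetical names.

```python
import math

# Eight directions, listed counterclockwise starting from "right".
DIRECTIONS = [
    "right", "upper right", "up", "upper left",
    "left", "lower left", "down", "lower right",
]
OPTIONS_PER_DIRECTION = 3  # three options at each location, per the demo


def direction_for(dx: float, dy: float) -> str:
    """Map a gaze offset from the menu center to one of eight 45-degree sectors."""
    angle = math.degrees(math.atan2(dy, dx)) % 360.0
    # Shift by half a sector so that "right" spans -22.5 to +22.5 degrees.
    index = int(((angle + 22.5) % 360.0) // 45.0)
    return DIRECTIONS[index]


def reachable_items(levels: int) -> int:
    """Items reachable after `levels` menus, assuming each item opens a full menu."""
    return (len(DIRECTIONS) * OPTIONS_PER_DIRECTION) ** levels


if __name__ == "__main__":
    print(direction_for(1.0, 0.2))   # a glance mostly to the right
    print(reachable_items(1))        # items on a single menu
    print(reachable_items(3))        # items three levels deep
```

Under those assumptions, a single menu offers 8 × 3 = 24 items, and three nested levels would offer 24³ = 13,824.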

How fast is it? Josephson told me that he can whip through three menu levels in 1/100th of a second.

Other controls include zoom, pan and scroll. (The software uses the device's camera and gyroscope to create X and Y axes.) You can also adjust the DPI, just like on a mouse, which seems like a crucial feature.


The HMD: Proof Of Concept, Work In Progress

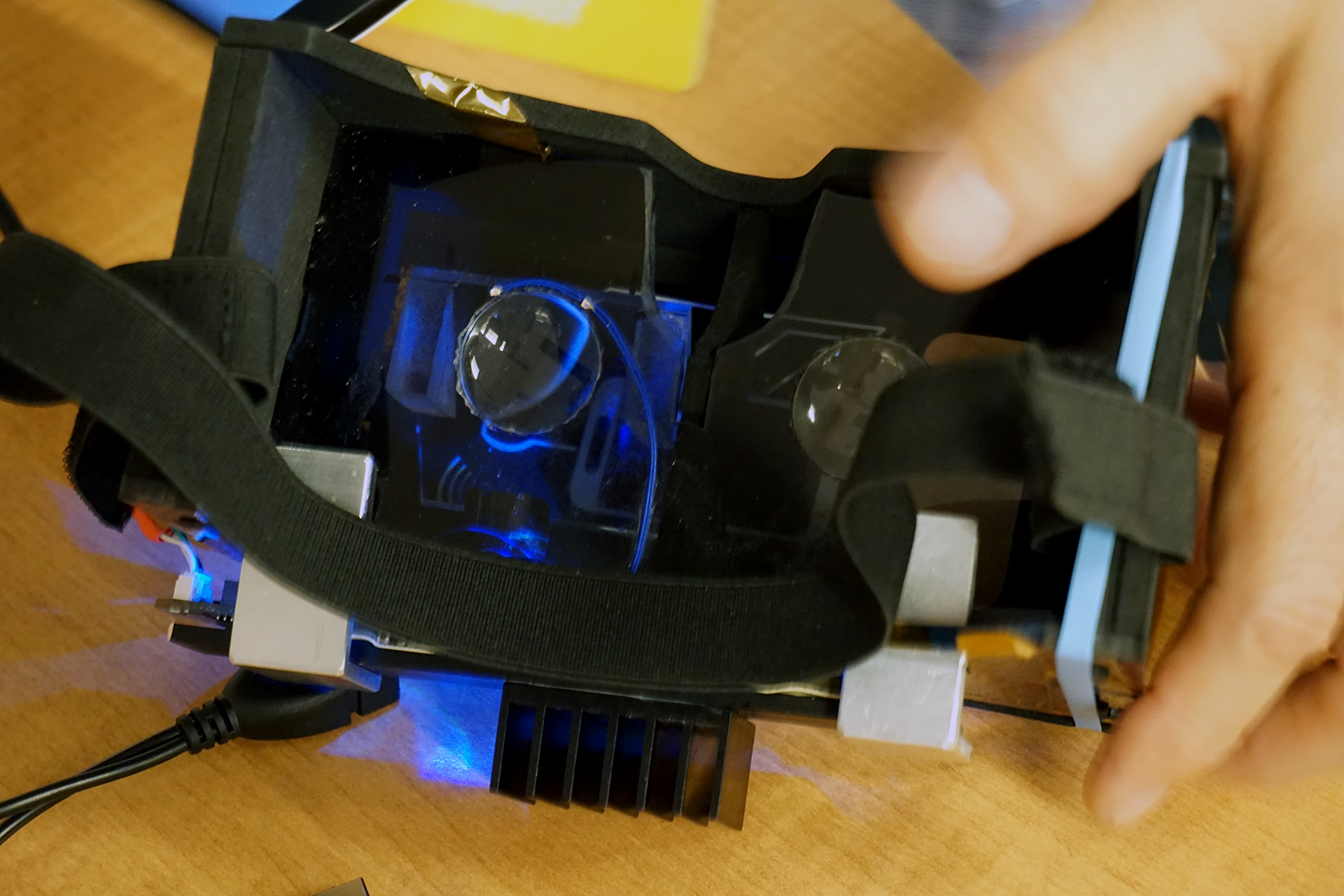

The QiVARI technology is compatible with Google Cardboard, and the team(s) have created their own HMD prototype to show off the eye tracking technology. It's a fresh prototype -- so fresh, in fact, that I was the first journalist to try it. A PR rep walked into our meeting straight from picking it up from the post office, and they opened it in front of me.

It's...not pretty. It's big and clunky and kludgy-looking, but then again, it's just a prototype. Further, I don't believe that these guys are interested in making an HMD; they want to get their tech into other HMDs, just as Tobii, for example, is looking to do.

I had little time to hunker down and get a clean calibration with the device, but I did have a chance to see how it all works. With the HMD on, you calibrate by staring at a dot that appears at various points on the display. After you've looked at enough dots, you're ready to go. (They plan to add a voice control aspect to the calibration for more accuracy.)

My calibration wasn't set especially well, but it was good enough to let me move through some menu items. The demo they had on the HMD was quite simple, and deterministic; you could select only a couple of paths through the levels. Because my calibration was off, I struggled somewhat, but I could certainly see (pun) how intuitive and fluid the technology could be. You just look, and there your cursor is, selecting things for you.

It's possible that I could have adjusted to it with time, but the DPI felt too high. Just like a mouse with the DPI cranked to the proverbial 11, I didn't have enough control (eyeballs are really active, go figure). I'm a lower-DPI-type user anyway, so perhaps my mouse predilections followed my eyes. In any case, the DPI of this eye tracking is adjustable, so if and when I have a chance to try QiVARI out again, I'll dial it down.

The EyeTech and Quantum Interface guys didn't give me a timeline for when QiVARI would be polished, nor when we might see it on shipping devices, but they're clearly both passionate and confident about what it can offer the VR/AR world. They have a similar product coming for car HUDs called QiHUD.

Seth Colaner is the News Director for Tom's Hardware. Follow him on Twitter @SethColaner.

Seth Colaner previously served as News Director at Tom's Hardware. He covered technology news, focusing on keyboards, virtual reality, and wearables.