AVIVO HD vs. PureVideo HD: What You Need to Know about High-Definition Video

CPU Usage Benchmarks, Continued

However, the GeForce 8800 GTX and 8600 GTS seem to have lowered CPU utilization significantly; the CPU appears to work about 20% less with these cards. Of course, VC-1 isn't particularly difficult to decode, and even without acceleration the E4300 CPU performs well.

As a point of interest, the GeForce 8500 GT seems to require slightly more CPU resources to play VC-1 video than any of the other cards. This is a strange result, and we're not quite sure what to make of it.

With the Windows XP results analyzed, let's see if anything changes when we run the same test in Windows Vista:

Here the results are almost a carbon copy of the Windows XP test, with one notable difference: the GeForce 8500 GT now provides the same decoding prowess as the 8800 GTX and 8600 GTS.

In any case, it seems that VC-1 decoding favors the GeForce line at this point (save the anomaly with the 8500 GT in Windows XP). But as we said, the VC-1 codec doesn't really tax new low-end CPUs like the E4300. Let's see if the situation changes when we play an HD video encoded with the resource-hogging H.264 codec:

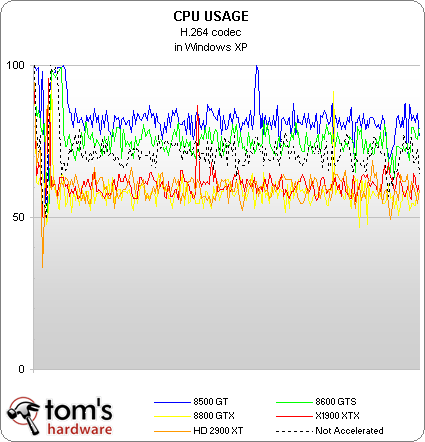

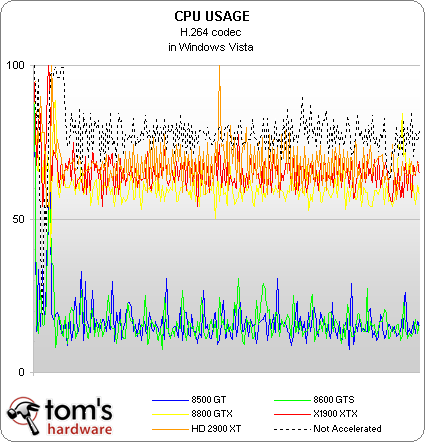

The H.264 Codec in Windows XP and Windows Vista:

First off, note the CPU utilization for non-accelerated H.264 video: it's much higher than for VC-1, hovering at about 75% on our E4300 test CPU. That's pretty high, and it tells us that H.264 playback will probably stutter on older CPUs, many of which are still in plenty of people's systems.


In Windows XP, we see some interesting reversals. The Radeon X1900 XTX and HD 2900 XT now assist in the decode process, taking a sizable load off the CPU. The GeForce 8800 GTX also assists with video decoding, with results essentially identical to those of the Radeon cards.

Nvidia representatives told us that the 8500/8600 drivers do not yet accelerate H.264 decoding in Windows XP, and here is the proof. In fact, these cards actually seem to require additional CPU resources to play HD video, which is quite puzzling. Let's see what happens when we move to Windows Vista:

Impressive! The new 8500 GT and 8600 GTS really show their stuff, lowering CPU utilization from around 80% to around 20%. This is a compelling result, and it shows that, yes, these new GeForce cards should make HD video a reality on older systems. The only problem: for now, that older machine needs to run Windows Vista to see the benefits, and I doubt many people with old single-core CPUs are going to upgrade to Vista. Hopefully, Nvidia will soon release Windows XP drivers for the 8500/8600 cards that expose this decoding capability.

Ironically, folks with older CPUs will probably keep running Windows XP in the meantime, and in that case the Radeon X1900/HD 2900 and GeForce 8800 GTX provide more effective video acceleration. But I'm not going to advise owners of old platforms to buy a high-end graphics card. If you have an older single-core CPU and want to watch HD DVD or Blu-ray discs, your best bet is to wait until Nvidia enables 8500/8600 decode acceleration in Windows XP.

Don Woligroski is a former senior hardware editor for Tom's Hardware. He has covered a wide range of PC hardware topics, including CPUs, GPUs, system building, and emerging technologies.