Nvidia GeForce GTX 1080 Pascal Review

Power Consumption Results

Test Setup

If you'd like to read more about our power testing setup, check out The Math Behind GPU Power Consumption And PSUs. We're also adding two more measurement series, with 500ns and 10ms time intervals, to meet any challenges GPU Boost 3.0 might throw at us. Also, our current clamps were recalibrated for accuracy and speed.
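Each rail's power is the product of its voltage and current. The following minimal sketch turns paired probe samples into power readings and coarsens a fine-grained trace into longer windows. It assumes the coarse series is built by averaging blocks of fine samples. The function names and sample values are hypothetical and are not taken from our measurement software.

```python
from statistics import mean


def rail_power(volts, amps):
    """Instantaneous power P = V * I for each pair of simultaneous samples."""
    return [v * i for v, i in zip(volts, amps)]


def block_average(samples, factor):
    """Average consecutive, non-overlapping groups of `factor` samples.

    Models a longer measurement interval built from shorter ones
    (an assumption); any trailing partial group is dropped.
    """
    return [
        mean(samples[k:k + factor])
        for k in range(0, len(samples) - factor + 1, factor)
    ]


# Hypothetical 12V rail: steady voltage, current with two short spikes
volts = [12.0] * 8
amps = [10, 30, 10, 10, 12, 28, 10, 10]

fine = rail_power(volts, amps)   # peaks at 360 W
coarse = block_average(fine, 4)  # [180.0, 180.0]
print(max(fine), max(coarse), mean(fine), mean(coarse))
```

The average stays the same, but the short spikes disappear from the coarse series. This is why short intervals matter when looking for peaks.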

Our test equipment hasn't changed, though.

| Power Consumption | |
|---|---|
| Test Method | Contact-free DC Measurement at PCIe Slot (Using a Riser Card); Contact-free DC Measurement at External Auxiliary Power Supply Cable; Direct Voltage Measurement at Power Supply; Real-Time Infrared Monitoring and Recording |
| Test Equipment | 2 x Rohde & Schwarz HMO 3054, 500MHz Digital Multi-Channel Oscilloscope with Storage Function; 4 x Rohde & Schwarz HZO50 Current Probe (1mA - 30A, 100kHz, DC); 4 x Rohde & Schwarz HZ355 (10:1 Probes, 500MHz); 1 x Rohde & Schwarz HMC 8012 Digital Multimeter with Storage Function; 1 x Optris PI640 80Hz Infrared Camera + PI Connect |

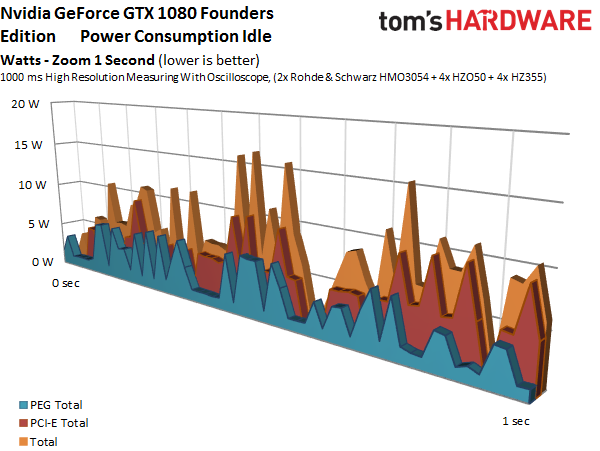

Before we get going, we’d like to note that power consumption measurements at idle always pose a challenge. Even an empty desktop might see sporadic load fluctuations. Consequently, we use a long-term measurement and then choose a representative two-minute sample for our test.

Please note that the minimum and maximum values in the following tables don't always occur at the same time. This is why the individual rails' minimum and maximum figures don't necessarily add up to the totals for all of the rails.
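Here is a toy example with made-up numbers that shows why. The total's extremes come from the summed trace, while each rail's extremes can fall at different instants. Averages are unaffected, because the mean of a sum equals the sum of the means.

```python
from statistics import mean

# Hypothetical readings (W) for two rails, sampled at the same four instants
slot = [20, 40, 10, 30]
aux = [100, 110, 150, 90]
total = [s + a for s, a in zip(slot, aux)]  # [120, 150, 160, 120]

# The rails peak at different instants, so their extremes don't sum to the total's
print("max:", max(slot) + max(aux), "vs", max(total))     # 190 vs 160
print("min:", min(slot) + min(aux), "vs", min(total))     # 100 vs 120
# Averages, by contrast, are additive
print("avg:", mean(slot) + mean(aux), "vs", mean(total))  # 137.5 vs 137.5
```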

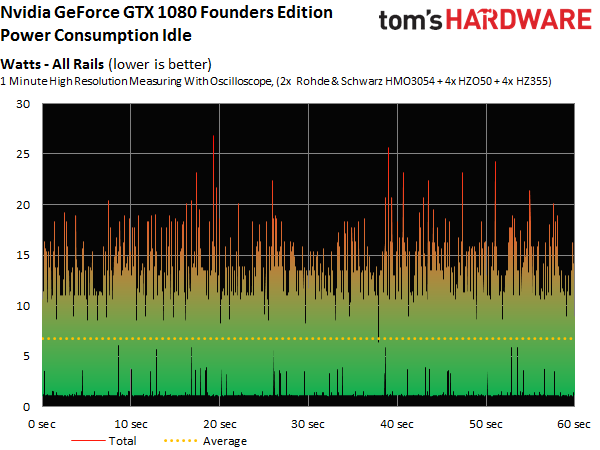

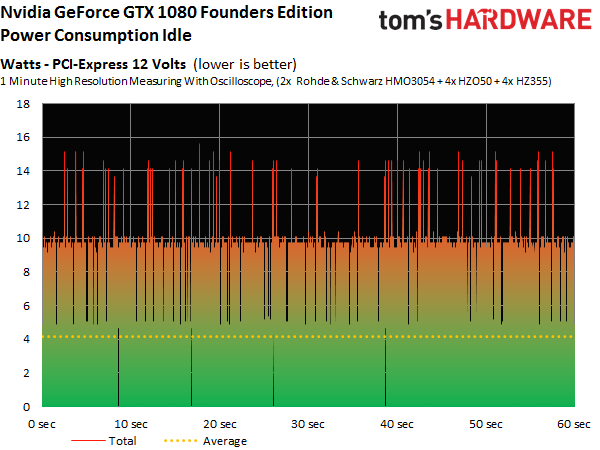

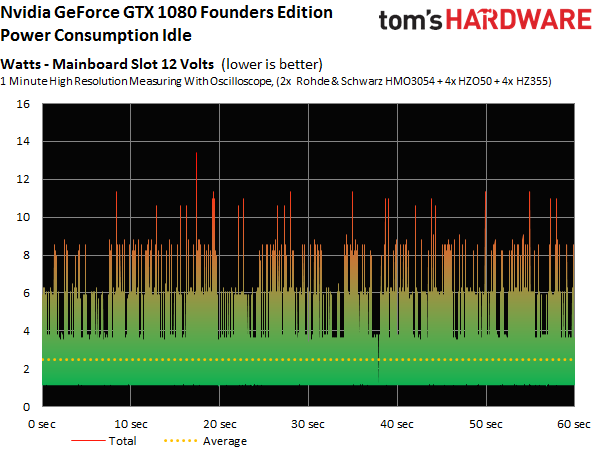

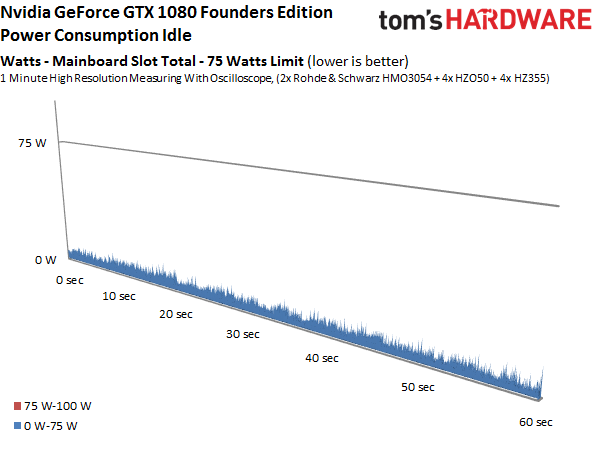

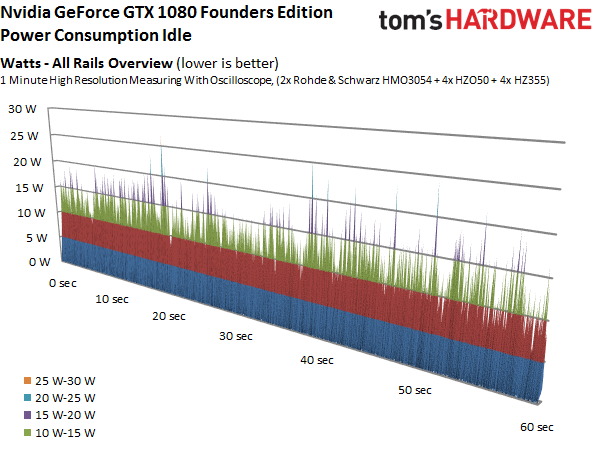

Idle Power Consumption

Idle power consumption looks great. Altogether, our measurements indicate 6.8W. Some of this goes toward the fan, the memory and the voltage converters, which means that we’re probably looking at approximately 5W for the GPU alone. That's a fantastic result.

| Rail | Minimum | Maximum | Average |
|---|---|---|---|
| PCIe Total | 0W | 16W | 4W |
| Motherboard 3.3V | 0W | 0W | 0W |
| Motherboard 12V | 1W | 13W | 3W |
| Graphics Card Total | 1W | 27W | 7W |

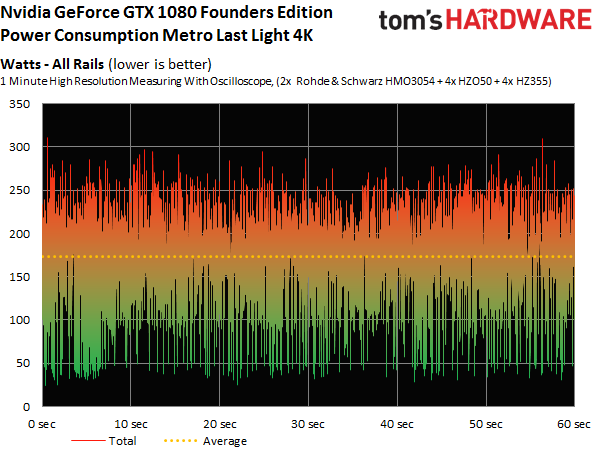

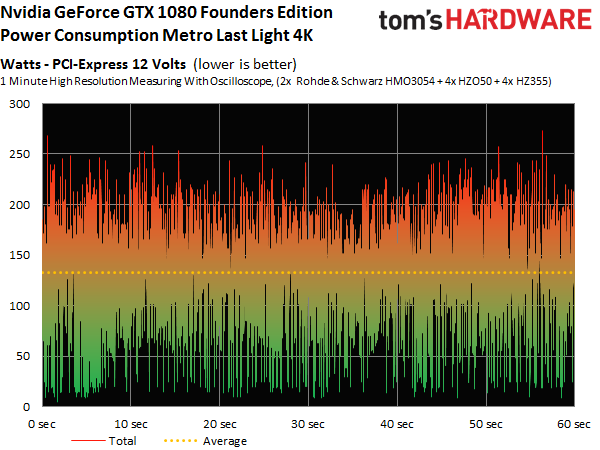

Gaming Power Consumption

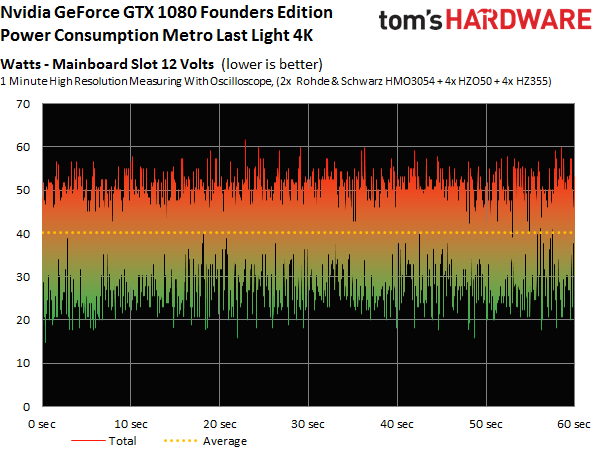

The numbers get more interesting as we measure power consumption while running a loop of Metro: Last Light at Ultra HD. After the graphics card warms up to a toasty 84 degrees Celsius (10 degrees under its thermal threshold), power consumption registers 173W. Prior to the warm-up phase, we were seeing 178W, which is the limit defined in Nvidia's BIOS. In other words, the company was right on with its 180W TDP rating.

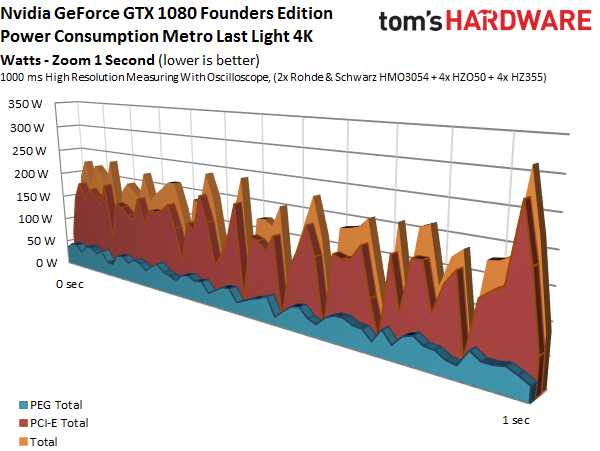

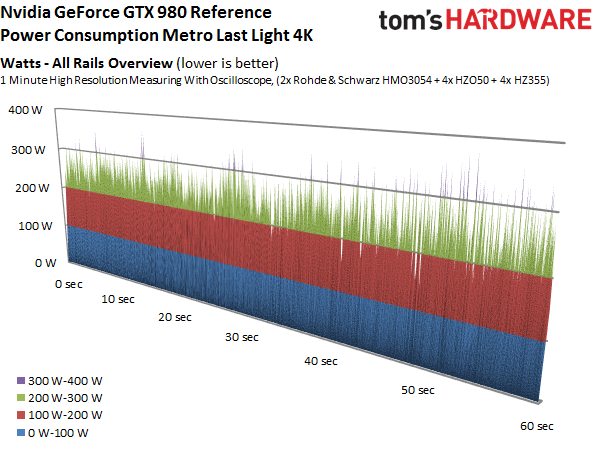

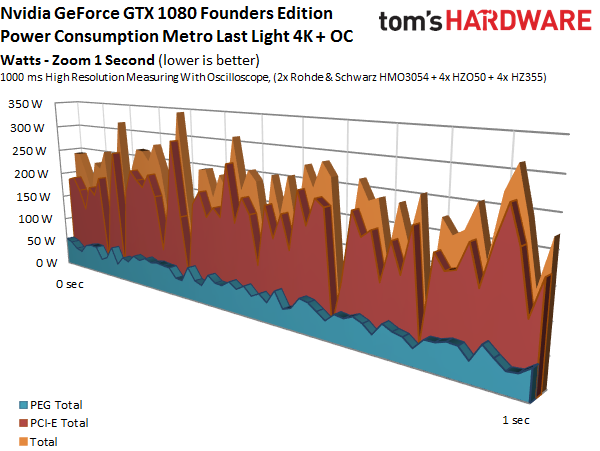

Next we compare the GeForce GTX 1080 to its predecessor, Nvidia's reference GeForce GTX 980. In the same loop, the older board consumes 180W. Now, Nvidia promises that GPU Boost 3.0 yields improved power delivery with fewer voltage fluctuations. Here's the direct comparison:

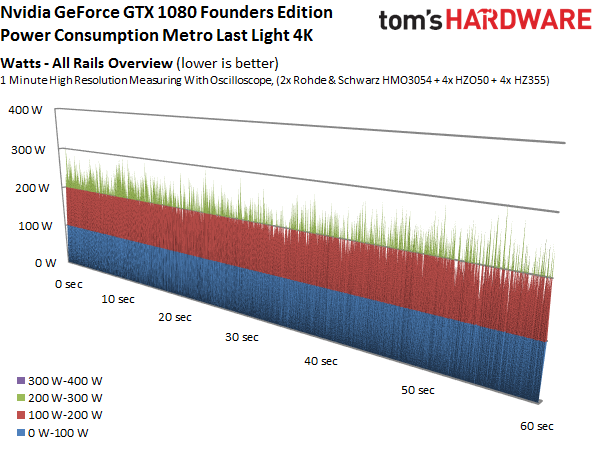

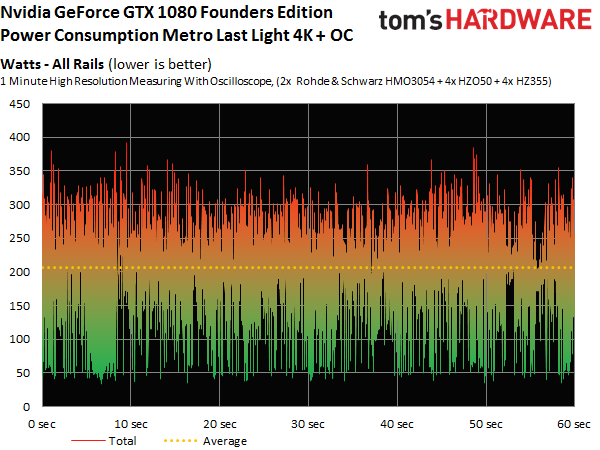

Spikes above 300W are now largely a thing of the past: Nvidia's GeForce GTX 1080 barely has any, whereas they were much more frequent on the 980. Overall, even though the two cards post almost identical averages, the GeForce GTX 1080's curve is both smoother and denser.
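One simple way to quantify "spikier" versus "smoother" is to count samples above a threshold and measure the spread around the mean. The sketch below does this for two made-up traces with identical averages. The traces and the threshold constant are illustrative only. Population standard deviation is our choice of smoothness metric and is not necessarily what is behind the charts.

```python
from statistics import mean, pstdev

SPIKE_THRESHOLD_W = 300.0  # illustrative threshold matching the text


def spike_count(trace, threshold=SPIKE_THRESHOLD_W):
    """Number of samples strictly above the threshold."""
    return sum(1 for p in trace if p > threshold)


def summarize(trace):
    """Average power, spike count and population standard deviation (spread)."""
    return mean(trace), spike_count(trace), pstdev(trace)


# Hypothetical traces (W) with identical averages but different spikiness
newer = [170, 180, 175, 185, 305, 165]
older = [90, 310, 90, 305, 75, 310]

for name, trace in (("newer", newer), ("older", older)):
    avg, spikes, spread = summarize(trace)
    print(f"{name}: avg {avg:.1f} W, {spikes} spike(s) > 300 W, spread {spread:.1f} W")
```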


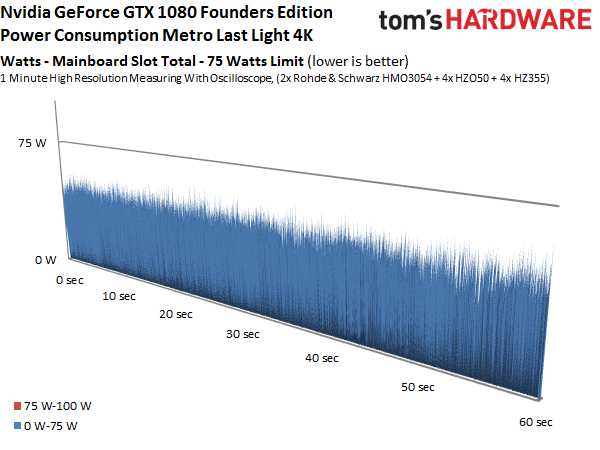

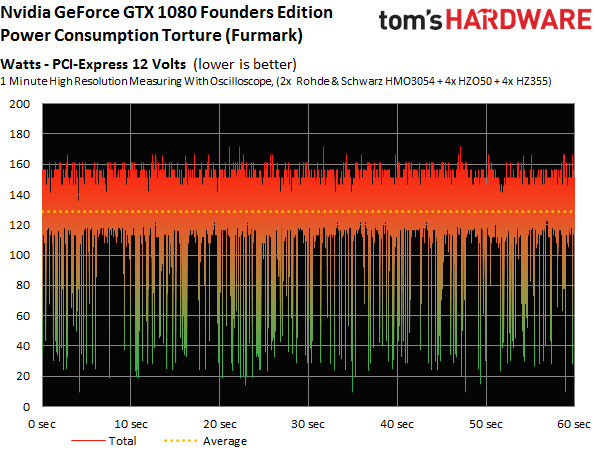

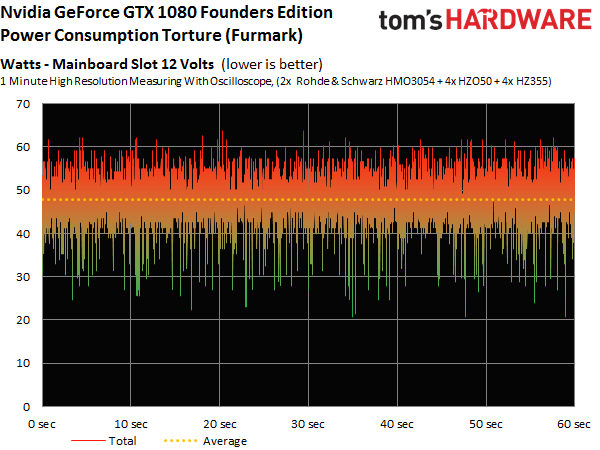

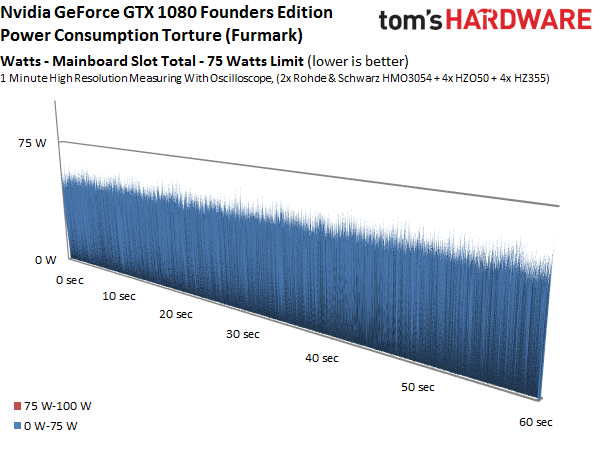

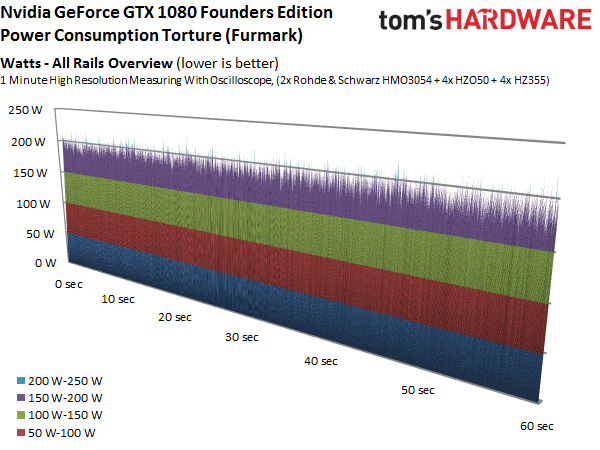

For those of you who enjoy the gory details, you'll find them in the following picture gallery:

| Rail | Minimum | Maximum | Average |
|---|---|---|---|
| PCIe Total | 5W | 273W | 133W |
| Motherboard 3.3V | 0W | 0W | 0W |
| Motherboard 12V | 15W | 62W | 40W |
| Graphics Card Total | 24W | 311W | 173W |

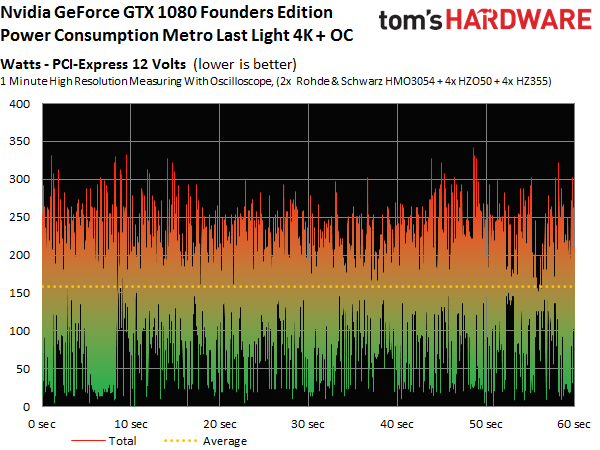

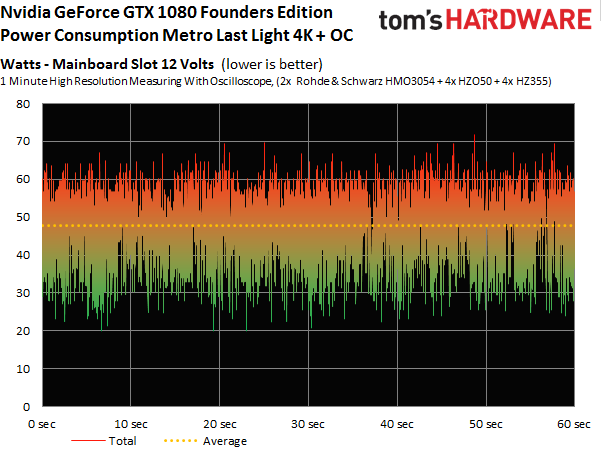

Our analysis shows that only about 40W comes from the motherboard's slot. Meanwhile, the eight-pin auxiliary power connector supplies 133W of its 150W rated maximum. This means that power consumption through the PCIe cable won't be an issue. After all, we never saw any problems from Nvidia's Quadro M6000 when that cable had to supply 170W.

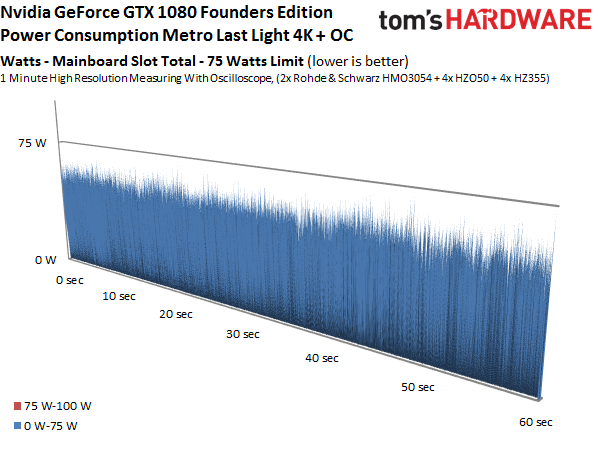

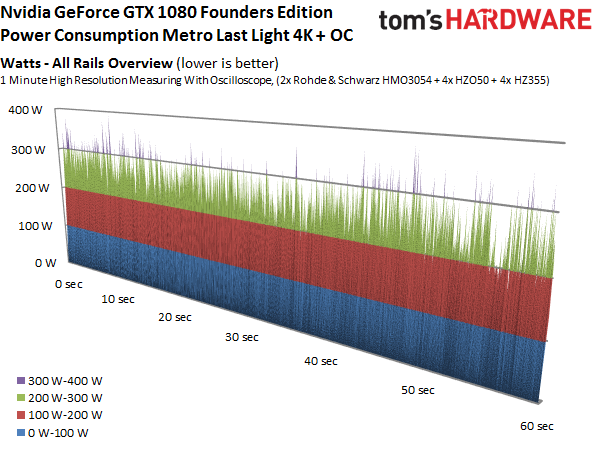

Gaming Power Consumption With Overclocking

Now let's switch to gaming under the highest overclock we could achieve with our GeForce GTX 1080 sample. Getting there required setting the power target to its 120% maximum and increasing the base clock to facilitate a 2.1GHz GPU Boost frequency.

It comes as no surprise that consumption rises 19%, from 173W to 206W. That's not particularly good news for enthusiasts concerned about their eight-pin power connectors, but it's still a fairly realistic goal that shouldn't cause any damage to your hardware.

| Rail | Minimum | Maximum | Average |
|---|---|---|---|
| PCIe Total | 25W | 342W | 158W |
| Motherboard 3.3V | 0W | 0W | 0W |
| Motherboard 12V | 20W | 72W | 48W |
| Graphics Card Total | 35W | 392W | 206W |
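The connector concern comes down to simple arithmetic. The sketch below computes the overall increase and the eight-pin connector's average load as a share of its 150W rating, using the "PCIe Total" averages (the auxiliary cable) from the stock and overclocked tables. The 216W power-target ceiling assumes that the 120% target is applied to the 180W rating. That assumption is labeled in the code.

```python
EIGHT_PIN_RATING_W = 150.0  # rated maximum of the eight-pin connector (from the text)
DEFAULT_LIMIT_W = 180.0     # assumption: the 120% power target scales this figure


def pct_change(old, new):
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


def connector_load(avg_w, rating_w=EIGHT_PIN_RATING_W):
    """Average connector draw as a percentage of its rating."""
    return avg_w / rating_w * 100.0


stock_total, oc_total = 173.0, 206.0  # graphics card totals (W)
stock_aux, oc_aux = 133.0, 158.0      # "PCIe Total" (auxiliary cable) averages (W)

print(f"Total power: +{pct_change(stock_total, oc_total):.1f}%")
print(f"Eight-pin load: {connector_load(stock_aux):.1f}% stock, "
      f"{connector_load(oc_aux):.1f}% overclocked")
print(f"Assumed 120% power-target ceiling: {DEFAULT_LIMIT_W * 1.2:.0f} W")
```

Based on these averages, the overclocked card's eight-pin draw (about 105% of rating) slightly exceeds the connector's 150W specification. The stock card stays at roughly 89%.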

A more detailed efficiency comparison will have to wait for boards from Nvidia's partners with better-performing coolers. So, at this point, we're limiting ourselves to a brief overview.

For now, here is how gaming performance and power consumption scale with our overclock under different loads:

| Benchmark | FPS (Original) | Power (Original) | FPS (OC) | Power (OC) | Increase in FPS | Increase in Power |
|---|---|---|---|---|---|---|
| Metro: Last Light @ UHD | 54.1 | 173W | 58.8 | 206W | +8.6% | +19.1% |
| Metro: Last Light @ FHD | 145.0 | 166W | 154.3 | 191W | +6.4% | +15.1% |
| Thief @ UHD | 59.2 | 170W | 64.8 | 200W | +9.5% | +17.7% |
| Thief @ FHD | 109.9 | 146W | 116.2 | 164W | +5.7% | +12.3% |
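The percentage columns follow directly from the raw figures. The same inputs also give frames per watt, which makes the efficiency cost of the overclock explicit. FPS per watt is a metric we derived for this sketch. When recomputed from the rounded values shown, two rows come out at +8.7% (Metro: Last Light @ UHD, FPS) and +17.6% (Thief @ UHD, power) instead of the listed +8.6% and +17.7%. This is presumably because the table was calculated from unrounded measurements.

```python
# Figures copied from the table: (fps_stock, watts_stock, fps_oc, watts_oc)
RESULTS = {
    "Metro: Last Light @ UHD": (54.1, 173, 58.8, 206),
    "Metro: Last Light @ FHD": (145.0, 166, 154.3, 191),
    "Thief @ UHD": (59.2, 170, 64.8, 200),
    "Thief @ FHD": (109.9, 146, 116.2, 164),
}


def scaling(fps0, w0, fps1, w1):
    """Percent change in FPS, power and FPS per watt from stock to overclocked."""
    fps_gain = (fps1 / fps0 - 1) * 100
    power_gain = (w1 / w0 - 1) * 100
    efficiency_change = ((fps1 / w1) / (fps0 / w0) - 1) * 100
    return round(fps_gain, 1), round(power_gain, 1), round(efficiency_change, 1)


for name, row in RESULTS.items():
    fps, power, eff = scaling(*row)
    print(f"{name}: FPS {fps:+.1f}%, power {power:+.1f}%, FPS/W {eff:+.1f}%")
```

In every case, power rises roughly twice as fast as frame rate, so FPS per watt drops by about 6 to 9 percent.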

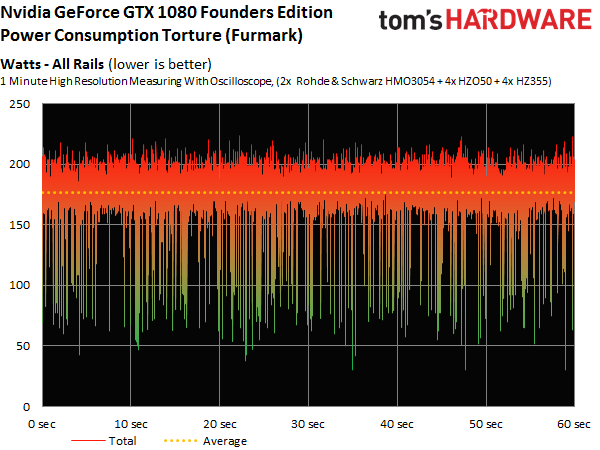

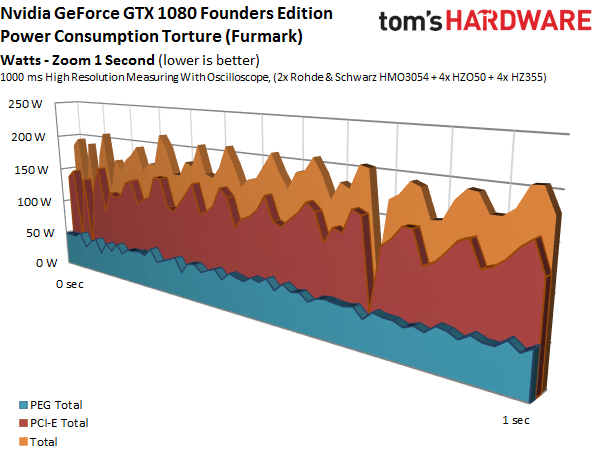

Stress Test Power Consumption

Let’s explore what happens when the GPU really heats up, forcing the card to enforce its power target.

Our 176W result lands just under Nvidia's power limit. The card does have to pull back its clock rates quite a bit to keep power consumption at this level during a stress test, though. This is equally due to the temperature limit and the PWM controller's power limit.

| Rail | Minimum | Maximum | Average |
|---|---|---|---|
| PCIe Total | 10W | 172W | 128W |
| Motherboard 3.3V | 0W | 1W | 0W |
| Motherboard 12V | 21W | 64W | 48W |
| Graphics Card Total | 31W | 224W | 176W |
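Because only the averages are additive, the four power tables can be cross-checked in two ways. First, the rail averages should sum to the card total, allowing 1W for rounding. Second, the total's peak should stay at or below the sum of the rail peaks. The sketch below runs both checks on data copied from the tables. The 1W tolerance is our own assumption about rounding.

```python
# (minimum, maximum, average) in W per rail, copied from the four tables
TABLES = {
    "Idle": {"PCIe Total": (0, 16, 4), "Motherboard 3.3V": (0, 0, 0),
             "Motherboard 12V": (1, 13, 3), "Graphics Card Total": (1, 27, 7)},
    "Gaming": {"PCIe Total": (5, 273, 133), "Motherboard 3.3V": (0, 0, 0),
               "Motherboard 12V": (15, 62, 40), "Graphics Card Total": (24, 311, 173)},
    "Gaming (OC)": {"PCIe Total": (25, 342, 158), "Motherboard 3.3V": (0, 0, 0),
                    "Motherboard 12V": (20, 72, 48), "Graphics Card Total": (35, 392, 206)},
    "Stress test": {"PCIe Total": (10, 172, 128), "Motherboard 3.3V": (0, 1, 0),
                    "Motherboard 12V": (21, 64, 48), "Graphics Card Total": (31, 224, 176)},
}
RAILS = ("PCIe Total", "Motherboard 3.3V", "Motherboard 12V")


def check(table, tolerance_w=1):
    """Return (averages add up, peak bound holds, summed rail peaks minus total peak)."""
    _, total_max, total_avg = table["Graphics Card Total"]
    avg_sum = sum(table[r][2] for r in RAILS)
    max_sum = sum(table[r][1] for r in RAILS)
    averages_add_up = abs(avg_sum - total_avg) <= tolerance_w
    # The total's peak can never exceed the sum of the per-rail peaks
    peak_bound_holds = total_max <= max_sum
    return averages_add_up, peak_bound_holds, max_sum - total_max


for name, table in TABLES.items():
    print(name, check(table))
```

All four tables pass. The gap between the summed rail peaks and the total's peak ranges from 2W at idle to 24W while gaming.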

Bottom Line

Without overclocking, the card's average power consumption never crosses the 180W boundary. In fact, according to the engineers we asked, exceeding it would be impossible. We repeatedly double-checked our results using different intervals, and the only series that gave us somewhat higher readings was the one taken at 10ms intervals, likely because that measurement is less accurate.

