Nvidia GeForce GTX 1080 Pascal Review

Simultaneous Multi-Projection And Async Compute

The Simultaneous Multi-Projection Engine

Certain parts of GP104 affect the performance of every game we test today—the increased core count, its clock rate and Nvidia’s work to enable 10 Gb/s GDDR5X. Other features can’t be demonstrated yet, but have big implications for the future. Those are the tough ones to assign value to in a review. Nevertheless, the Pascal architecture incorporates several capabilities that we can’t wait to see show up in shipping games.

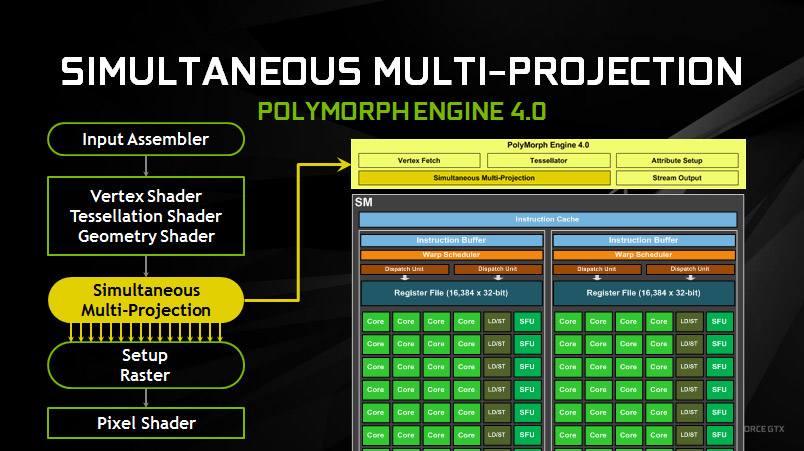

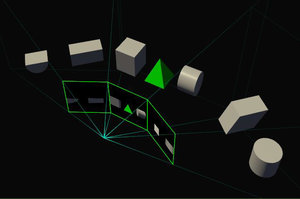

Nvidia calls the first its Simultaneous Multi-Projection Engine. This feature is enabled through a hardware block added to GP104’s PolyMorph Engines. This piece of logic takes the geometry data and processes it through as many as 16 projections from one viewpoint. Or it can offset the viewpoint for stereo applications, replicating geometry as many as 32 times in hardware and without the expensive performance overhead you’d incur if you tried to achieve the same effect without SMP.
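To make the replication idea concrete, here is a minimal Python sketch of what SMP does conceptually. Each incoming vertex position, already processed once, is fanned out to every configured projection, once per projection center. The limits (16 projections, two centers for stereo) are our reading of Nvidia's figures, with 16 × 2 accounting for the 32 copies mentioned above. The function and constant names are hypothetical, and the sketch models the bookkeeping, not Nvidia's hardware.

```python
from typing import Callable, List, Sequence, Tuple

Vec4 = Tuple[float, float, float, float]  # clip-space (x, y, z, w)
Transform = Callable[[Vec4], Vec4]

MAX_PROJECTIONS = 16  # "as many as 16 projections" per viewpoint
MAX_CENTERS = 2       # assumption: two projection centers for stereo (16 x 2 = 32)

def smp_replicate(position: Vec4,
                  centers: Sequence[Transform],
                  projections: Sequence[Transform]) -> List[Vec4]:
    """Fan one already-processed vertex out to every (center, projection) pair.
    The vertex itself is not re-processed; only the cheap per-view transforms run."""
    if len(projections) > MAX_PROJECTIONS or len(centers) > MAX_CENTERS:
        raise ValueError("more views than the SMP limits described in the article")
    copies = []
    for center in centers:
        shifted = center(position)
        for project in projections:
            copies.append(project(shifted))
    return copies

# Usage: two hypothetical eye offsets (32 mm each way) times 16 identical projections.
def eye(offset: float) -> Transform:
    return lambda p: (p[0] - offset, p[1], p[2], p[3])

views = smp_replicate((0.0, 0.0, 0.5, 1.0), [eye(-0.032), eye(0.032)], [lambda p: p] * 16)
print(len(views))  # 32
```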

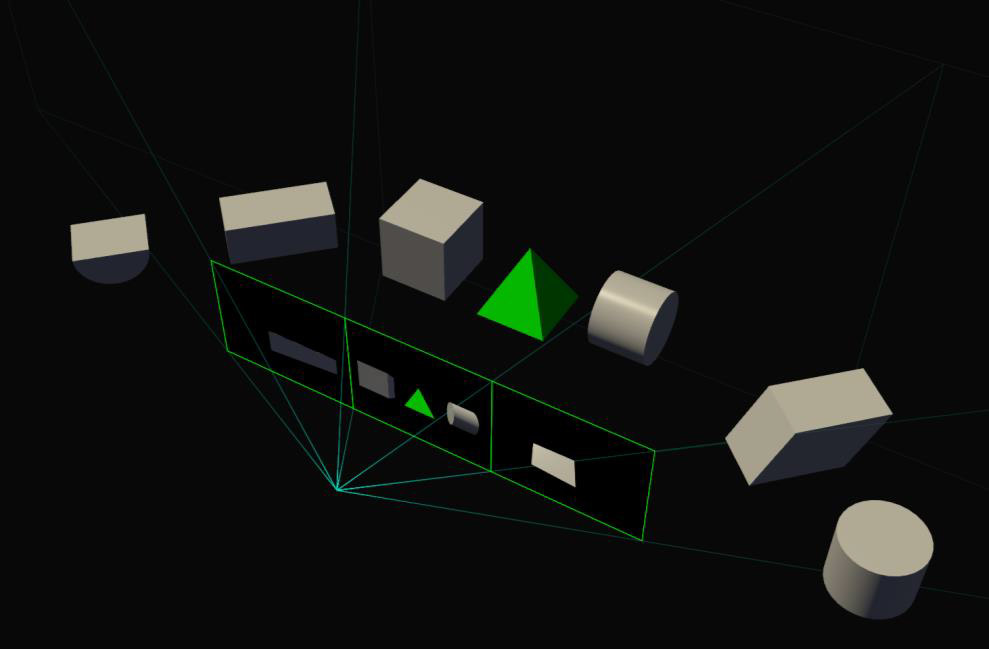

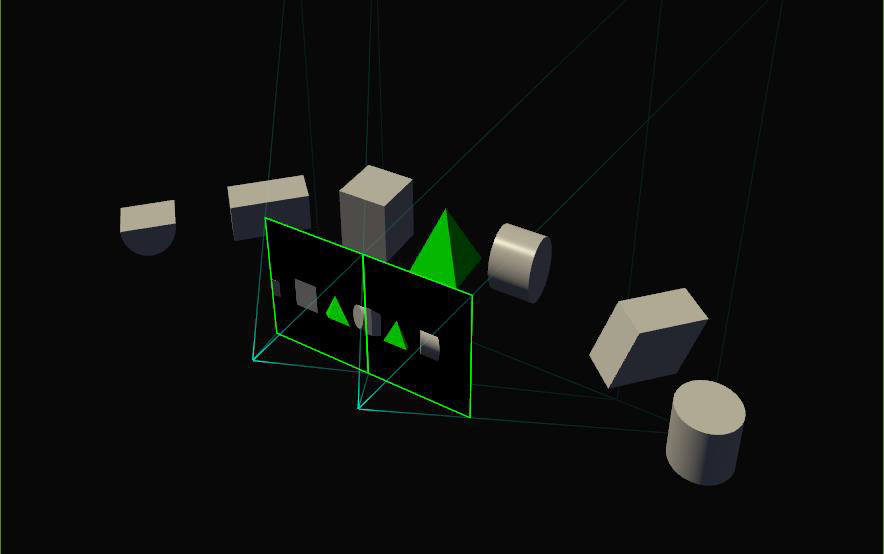

Alright, so let’s back up a minute and give this some context. I game almost exclusively on three monitors in a Surround configuration. But my monitors are tilted inward to “wrap” around my desk, if only for office productivity reasons. Games don’t know this, though. A city street spanning all three displays bends at each bezel, and a circular table on the periphery appears distorted. The proper way to render for my configuration would be one projection straight ahead, a second projection to the left, as if out of a panoramic airplane cockpit, and a third projection on the right oriented similarly. The previously-bent street could be straightened out this way, and I’d end up with a much wider field of view. The entire scene still has to be rasterized and shaded, but you save the setup, driver and GPU front-end overhead of rendering the scene three times.
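The three-projection setup described above can be sketched in a few lines of Python. This assumes a hypothetical layout in which each panel covers 60 degrees of horizontal view and the outer panels are turned 60 degrees relative to the center one, so the three view frusta meet exactly at the bezels. Each vertex is handled once and then projected into every monitor's view. A point sitting on the center/right bezel lands on the right edge of the center image and the left edge of the right image, so the two images agree at the seam instead of bending there.

```python
import math
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]

MONITOR_YAWS_DEG = (-60.0, 0.0, 60.0)  # hypothetical: left, center, right panels
MONITOR_HFOV_DEG = 60.0                # hypothetical: each panel spans 60 degrees

def project_x(point: Vec3, yaw_deg: float, hfov_deg: float) -> Optional[float]:
    """Horizontal NDC coordinate of `point` for a camera at the origin turned by
    yaw_deg (positive = right), or None if the point misses that screen.
    World axes: +x to the right, +z straight ahead."""
    px, _, pz = point
    yaw = math.radians(yaw_deg)
    # Express the point in the turned camera's frame.
    x_cam = px * math.cos(yaw) - pz * math.sin(yaw)
    z_cam = px * math.sin(yaw) + pz * math.cos(yaw)
    if z_cam <= 0:
        return None  # behind this camera
    ndc = (x_cam / z_cam) / math.tan(math.radians(hfov_deg) / 2)
    return ndc if abs(ndc) <= 1.0 + 1e-9 else None

def surround_positions(point: Vec3) -> List[Optional[float]]:
    """One vertex in, one horizontal position per monitor projection out."""
    return [project_x(point, yaw, MONITOR_HFOV_DEG) for yaw in MONITOR_YAWS_DEG]

# A point on the center/right bezel, 30 degrees right of straight ahead.
bezel = (math.sin(math.radians(30)), 0.0, math.cos(math.radians(30)))
print(surround_positions(bezel))  # left misses; center ~ +1.0; right ~ -1.0
```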

The catch is that an application must support wide FOV settings and use SMP API calls. That means game developers have to embrace the feature before you can enjoy it. We’re not sure how much effort will go into accommodating the relatively few folks gaming in Surround. But there are other applications where it makes sense to implement this functionality immediately.

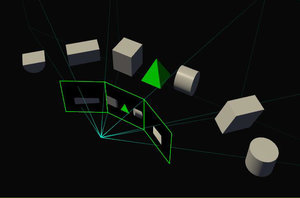

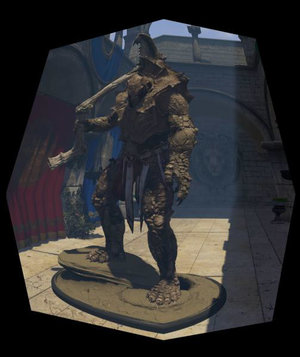

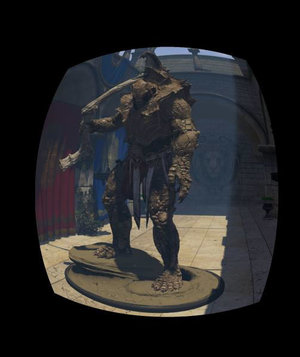

Take VR as an example. You already need one projection for each eye. Today, games simply render to the two screens separately, incurring all of the aforementioned inefficiencies. But because SMP supports a pair of projection centers, they can both be rendered in one pass using a feature Nvidia calls Single Pass Stereo. Vertex processing happens once, and SMP kicks back two positions for each vertex corresponding to your left and right eyes. From there, SMP can apply additional projections to enable a feature referred to as Lens Matched Shading.
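Here is a rough Python model of why Single Pass Stereo saves work. The expensive per-vertex stage (a hypothetical `transform` stand-in) runs once per vertex, and both eye positions are derived from its output. For simplicity, we assume the eyes differ only by a horizontal offset of half the interpupillary distance each way; real headsets also apply per-eye projection matrices, which this sketch leaves out. All function names here are illustrative.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]

def transform(v: Vec3, stats: Dict[str, int]) -> Vec3:
    """Hypothetical stand-in for the expensive per-vertex work (skinning, etc.)."""
    stats["vertex_invocations"] += 1
    return v  # identity here; real work would produce a view-space position

def eye_position(v: Vec3, eye_x: float) -> Vec3:
    """Position relative to an eye sitting at (eye_x, 0, 0): a horizontal shift only."""
    return (v[0] - eye_x, v[1], v[2])

def render_two_pass(vertices: List[Vec3], ipd: float, stats: Dict[str, int]):
    # Today's approach: the whole geometry pass runs once per eye.
    left = [eye_position(transform(v, stats), -ipd / 2) for v in vertices]
    right = [eye_position(transform(v, stats), ipd / 2) for v in vertices]
    return left, right

def render_single_pass_stereo(vertices: List[Vec3], ipd: float, stats: Dict[str, int]):
    # Single Pass Stereo idea: process each vertex once, emit two positions.
    left, right = [], []
    for v in vertices:
        shaded = transform(v, stats)
        left.append(eye_position(shaded, -ipd / 2))
        right.append(eye_position(shaded, ipd / 2))
    return left, right

verts = [(0.0, 0.0, -1.0), (0.5, 0.2, -2.0), (-0.3, 0.1, -1.5)]
two, one = {"vertex_invocations": 0}, {"vertex_invocations": 0}
assert render_two_pass(verts, 0.064, two) == render_single_pass_stereo(verts, 0.064, one)
print(two["vertex_invocations"], one["vertex_invocations"])  # 6 3
```

Both paths produce identical eye positions; the only difference is how many times the per-vertex work runs.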

Briefly, Lens Matched Shading attempts to make VR more efficient by avoiding much of the work that’d normally go into rendering a traditional planar projection before it is bent to match the distortion of an HMD’s lenses (a process that wastes pixels out where the bend is most pronounced). By using SMP to divide each eye’s display region into quadrants, the GPU can approximate this distortion. So instead of rendering a square projection and manipulating that, the GPU creates images that closely match the lens distortion filter. This keeps it from generating more pixels than needed. And so long as developers match or exceed each HMD’s sampling rate requirements per eye, you won’t be able to tell a difference in quality.
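One way to express a per-quadrant projection that approximates the lens warp is to grow the homogeneous w coordinate with distance from the center of the view, mirrored in each quadrant. The Python sketch below uses that formulation as an assumption; it is not necessarily Nvidia's exact math, and the coefficients `a` and `b` are hypothetical. Points near the center keep their usual pixel density, while points toward the edges are squeezed onto fewer pixels, which is where the savings come from.

```python
def lens_matched_ndc(x: float, y: float, w: float, a: float, b: float):
    """Divide by a 'modified' w that grows toward the edges of the view.
    abs() mirrors the coefficients so each of the four quadrants gets its own
    outward-tilted plane; a = b = 0 gives the ordinary planar projection."""
    w_mod = w + a * abs(x) + b * abs(y)
    return x / w_mod, y / w_mod

def relative_density(x: float, w: float, a: float) -> float:
    """Horizontal pixel density along y = 0 relative to the planar projection.
    d/dx [x / (w + a|x|)] = w / (w + a|x|)^2, versus 1/w without the warp."""
    return (w / (w + a * abs(x)) ** 2) * w

A = B = 0.5  # hypothetical coefficients; real presets would be tuned per headset
print(lens_matched_ndc(0.0, 0.0, 1.0, A, B))  # center unchanged: (0.0, 0.0)
print(lens_matched_ndc(1.0, 0.0, 1.0, A, B))  # edge pulled in to about 0.667
print(relative_density(0.0, 1.0, A), relative_density(1.0, 1.0, A))  # 1.0 vs ~0.44
```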

When you combine Single Pass Stereo and Lens Matched Shading, Nvidia claims it’s possible to see a 2x performance improvement in VR compared to a GPU without SMP support. Some of this comes from gains related to pixel throughput. Using Lens Matched Shading to avoid working on pixels that don’t need to be rendered, Nvidia’s conservative preset knocks a 4.2 MPix-per-frame (Oculus Rift) workload down to 2.8 MPix, giving the GPU 1.5x the effective shading headroom (4.2 / 2.8 = 1.5). Then, by processing geometry once and shifting it in hardware (rather than redoing everything for your second eye), Single Pass Stereo effectively eliminates half of the geometry work being done today. For those of you who were awestruck by Jen-Hsun’s slide showing “2x Perf and 3x Efficiency Vs. Titan X” during the 1080 livestream, now you know what went into that math.
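The 1.5x figure is simply 4.2 / 2.8. The Python sketch below pairs that pixel-side saving with Single Pass Stereo halving the geometry work, using a toy Amdahl-style model in which some hypothetical fraction of frame time is geometry and the rest is pixel shading. It ignores everything else a real frame does (and any raw throughput difference between GPUs), so treat its output as an illustration of how the two savings combine, not a prediction.

```python
STANDARD_MPIX = 4.2  # Nvidia's figure for a standard Rift workload
LMS_MPIX = 2.8       # Nvidia's conservative Lens Matched Shading preset

pixel_speedup = STANDARD_MPIX / LMS_MPIX  # ~1.5x fewer pixels to shade
geometry_speedup = 2 / 1                  # two geometry passes become one

def combined_speedup(geometry_fraction: float) -> float:
    """Toy model: geometry_fraction of frame time is geometry work, the rest is
    pixel work. The split is hypothetical; nothing else in the frame is modeled."""
    new_time = (geometry_fraction / geometry_speedup
                + (1 - geometry_fraction) / pixel_speedup)
    return 1 / new_time

print(f"pixel-side gain: {pixel_speedup:.2f}x")
for f in (0.0, 0.3, 0.5, 1.0):
    print(f"geometry share {f:.0%}: ~{combined_speedup(f):.2f}x")
```

In this model, the combined gain lands between 1.5x (purely pixel-bound) and 2x (purely geometry-bound), depending on the split.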


Asynchronous Compute

The Pascal architecture also incorporates some changes relating to asynchronous compute, which is timely for several reasons relating to DirectX 12, VR and AMD’s architectural head-start.

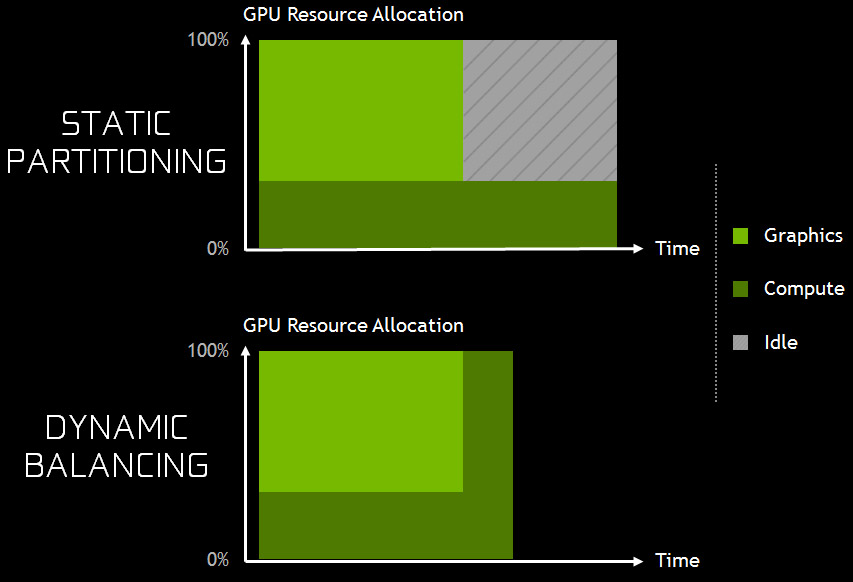

With its Maxwell architecture, Nvidia supported static partitioning of the GPU to accommodate overlapping graphics and compute workloads. In theory, this was a good way to maximize utilization, so long as both segments were active. If you set aside 75% of the processor for graphics and that segment went idle waiting for the compute side to finish, you could burn through whatever gains might have been possible by running those tasks concurrently. Pascal addresses this with a form of dynamic load balancing. GPU resources can still be allocated, but if the driver determines that one partition is underutilized, it’ll allow the other to jump in and finish, preventing a stall from negatively affecting performance.
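The difference between static partitioning and Pascal's dynamic load balancing can be illustrated with a simple Python model. It assumes work is perfectly divisible and that idle units can be reassigned instantly, neither of which holds exactly on real hardware; the unit count, work sizes and function names are hypothetical. With a 75/25 split and equal graphics and compute work, the static case waits on the starved compute partition, while the dynamic case lets the idle graphics units pitch in.

```python
def _split(units: float, gfx_share: float):
    gfx_units = units * gfx_share
    return gfx_units, units - gfx_units

def static_partition_time(gfx_work: float, compute_work: float,
                          units: float, gfx_share: float) -> float:
    """Maxwell-style static split: each side only ever uses its own units,
    so the frame waits on whichever partition finishes last."""
    gfx_units, compute_units = _split(units, gfx_share)
    return max(gfx_work / gfx_units, compute_work / compute_units)

def dynamic_partition_time(gfx_work: float, compute_work: float,
                           units: float, gfx_share: float) -> float:
    """Same starting split, but once one side runs dry its units join the other
    (idealized: perfectly divisible work, instant reassignment)."""
    gfx_units, compute_units = _split(units, gfx_share)
    t_gfx = gfx_work / gfx_units
    t_compute = compute_work / compute_units
    t_first = min(t_gfx, t_compute)
    # Work the slower side still has left when the faster side goes idle.
    if t_gfx <= t_compute:
        leftover = compute_work - compute_units * t_first
    else:
        leftover = gfx_work - gfx_units * t_first
    return t_first + leftover / units

# Hypothetical GPU with 20 units, 75% reserved for graphics, equal workloads.
print(static_partition_time(30, 30, 20, 0.75))   # 6.0
print(dynamic_partition_time(30, 30, 20, 0.75))  # 3.0
```

With those numbers, the static split takes 6 time units and the dynamic version 3, because graphics finishes its share early and its idle units help with the remaining compute work.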

Nvidia also improved Pascal’s preemption, that is, its ability to interrupt one task quickly in order to service a more time-sensitive workload. As you know, GPUs are highly parallel machines with big buffers intended to keep all of those parallel execution resources busy. An idle shader does you no good, so by all means, queue up work to feed through the graphics pipeline. But not everything a GPU does, especially nowadays, is as tolerant of delays.

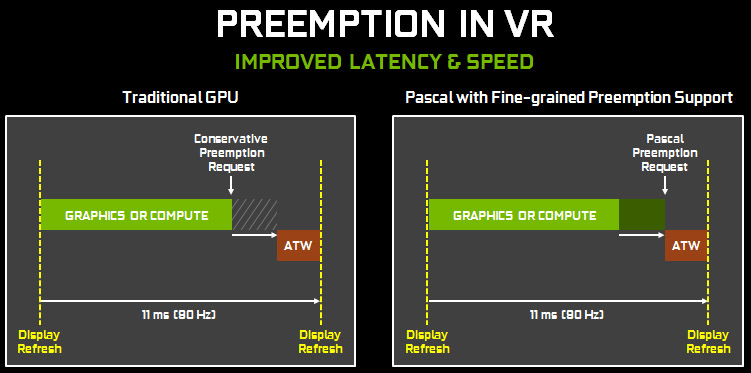

A perfect example is the asynchronous timewarp feature Oculus enabled for the launch of its Rift. In the event that your graphics card cannot get a fresh frame out every 11ms on a 90Hz display, ATW generates an intermediate frame using the rendering thread’s most recent work, correcting for head position. But it has to have enough time to create a timewarped frame, and unfortunately graphics preemption isn’t particularly granular. In fact, the Fermi, Kepler and Maxwell architectures support draw-level preemption, meaning they can only switch at draw call boundaries, potentially holding up ATW. Preemption requests consequently have to be made early in order to guarantee control over the GPU in time to get a warped frame out ahead of the display refresh. This is a bummer because you really want ATW to do its work as late as possible before that refresh.

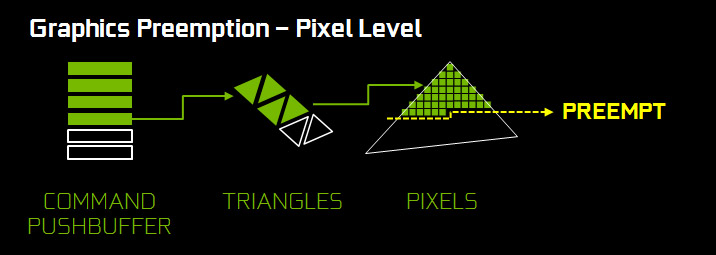

Pascal implements far more granular pixel-level preemption for graphics, so GP104 can stop what it’s doing at a pixel level, save the pipeline’s state off-die and switch contexts. Instead of the millisecond-class preemption we’ve seen Oculus write about, Nvidia is claiming less than 100µs.
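To see why preemption granularity matters for ATW, here is a small Python budget calculation. It asks how late in a 90Hz refresh interval ATW can request the GPU and still finish its warp before the next refresh. The 1ms warp cost and 3ms worst-case draw call are hypothetical numbers chosen for illustration; the 0.1ms figure is Nvidia's sub-100µs claim used as an upper bound.

```python
REFRESH_HZ = 90
FRAME_BUDGET_MS = 1000 / REFRESH_HZ  # ~11.1 ms between refreshes at 90 Hz

def latest_atw_request_ms(warp_ms: float, preemption_latency_ms: float,
                          budget_ms: float = FRAME_BUDGET_MS) -> float:
    """Latest time after the previous refresh at which ATW can request the GPU
    and still finish its warp before the next refresh, assuming the worst-case
    wait for preemption is preemption_latency_ms."""
    return budget_ms - warp_ms - preemption_latency_ms

WARP_MS = 1.0              # hypothetical cost of the timewarp pass
DRAW_LEVEL_WORST_MS = 3.0  # hypothetical longest draw call (must finish before switching)
PIXEL_LEVEL_MS = 0.1       # Nvidia's "less than 100 µs", used as an upper bound

draw_level = latest_atw_request_ms(WARP_MS, DRAW_LEVEL_WORST_MS)
pixel_level = latest_atw_request_ms(WARP_MS, PIXEL_LEVEL_MS)
print(f"draw-level:  request by {draw_level:.1f} ms")
print(f"pixel-level: request by {pixel_level:.1f} ms")
```

Under those assumptions, draw-level preemption forces the request at roughly 7.1ms into the 11.1ms interval, while pixel-level preemption pushes it out to about 10.0ms, letting ATW sample head position later.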

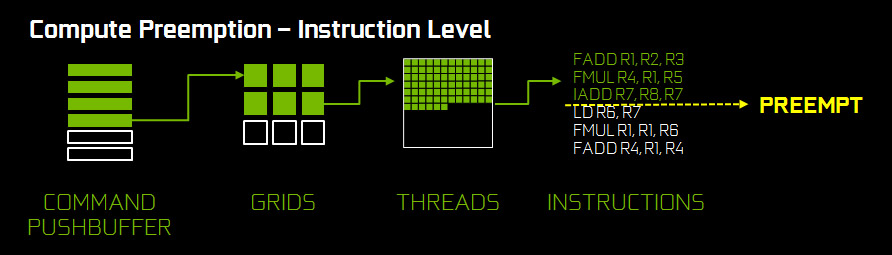

The Maxwell architecture already supported the equivalent of pixel-level preemption on the compute side by enabling thread-level granularity. Pascal has this as well, but adds support for instruction-level preemption in CUDA compute tasks. Nvidia’s drivers don’t include the functionality right now, but it, along with pixel-level preemption, should be accessible through the driver soon.


JeanLuc: Chris, were you invited to the Nvidia press event in Texas?

About time we saw some cards based on a new process; it seemed like we were going to be stuck on 28nm for the rest of time.

As usual, Nvidia is creaming it up in DX11, but DX12 performance does look ominous IMO. There's not enough gain over the previous generation, and it makes me think AMD's new Polaris cards might dominate when it comes to DX12.

slimreaper: Could you run an Otoy Octane bench? This really could change the motion graphics industry!?

F-minus: Seriously, I have to ask: did Nvidia instruct every single reviewer to bench the 1080 against stock Maxwell cards? 'Cause I'd like to see real-world scenarios with an OCed 980 Ti, because nobody runs stock or even buys stock, if you can even buy stock 980 Tis.

cknobman: Nice results, but honestly they don't blow me away.

In fact, I think Nvidia left the door open for AMD to take control of the high-end market later this year.

And fix the friggin' power consumption charts; you went with about the worst possible way to show them.

FormatC: Stock 1080 vs. stock 980 Ti :)

Both cards can be overclocked, and if you have a real custom 1080 in your hand, an overclocked 980 Ti looks even worse next to an overclocked 1080 than the stock 980 Ti does next to the stock 1080 in this review. :)

Gungar: @F-minus, I saw the same thing. The GTX 980 Ti overclocks way better than the 1080; I am pretty sure that OC vs. OC, there is nearly no performance difference. (disappointing)

toddybody:
> @F-minus, I saw the same thing. The GTX 980 Ti overclocks way better than the 1080; I am pretty sure that OC vs. OC, there is nearly no performance difference. (disappointing)

LOL. My 980 Ti doesn't hit 2.2 GHz on air. We need to wait for more benchmarks... I'd like to see the G1 980 Ti against a similar 1080.

F-minus: Exactly, but it seems like Nvidia instructed every single outlet to bench the reference 1080 only against stock Maxwell cards, which is honestly <Mod Edit> - pardon. I bet an OCed 980 Ti would come super close to the stock 1080, which at that point makes me wonder why even upgrade now. Sure, you can push the 1080 too, but I'd wait for a price drop or at least the supposedly cheaper AIB cards.

FormatC: I have a handpicked Gigabyte GTX 980 Ti Xtreme Gaming Waterforce at 1.65 GHz in one of my rigs; it's slower.