OpenCL In Action: Post-Processing Apps, Accelerated

We've been bugging AMD for years now, literally: show us what GPU-accelerated software can do. Finally, the company is ready to put us in touch with ISVs in nine different segments to demonstrate how its hardware can benefit optimized applications.

Benchmark Results: ArcSoft Total Media Theatre SimHD

As noted earlier, and in our Radeon HD 7970/7950 launch coverage, AMD hasn't yet ironed out the kinks with hardware acceleration in its newest architecture. So it wasn't much of a surprise that SimHD wouldn't work with our 7900-series boards. Instead, we dropped in a Radeon HD 5870, which is currently compatible. Its 1600 shaders operating at 850 MHz still deliver respectable performance in today's games, so we're curious to see what this now fairly mainstream card can do in a compute workload.
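For a rough sense of scale, the card's theoretical single-precision peak follows directly from the shader count and clock quoted above. The sketch below assumes each stream processor completes one multiply-add per clock, counted as two floating-point operations; the helper name `peak_sp_gflops` is ours, and a real workload such as SimHD's upscaler will land well below this ceiling.

```python
def peak_sp_gflops(stream_processors: int, clock_mhz: int, flops_per_clock: int = 2) -> float:
    """Theoretical peak single-precision throughput in GFLOPS.

    Assumption: every stream processor retires one multiply-add per clock,
    which counts as 2 floating-point operations.
    """
    # shaders * FLOPs per clock * clock (MHz) gives MFLOPS; divide by 1000 for GFLOPS
    return stream_processors * flops_per_clock * clock_mhz / 1000.0


# Radeon HD 5870 figures from the text: 1600 shaders at 850 MHz
print(f"Radeon HD 5870 peak: {peak_sp_gflops(1600, 850):.0f} GFLOPS")  # prints 2720 GFLOPS
```

This is a paper ceiling only; memory bandwidth and how well the software keeps the shaders busy decide how much of it an application actually sees.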

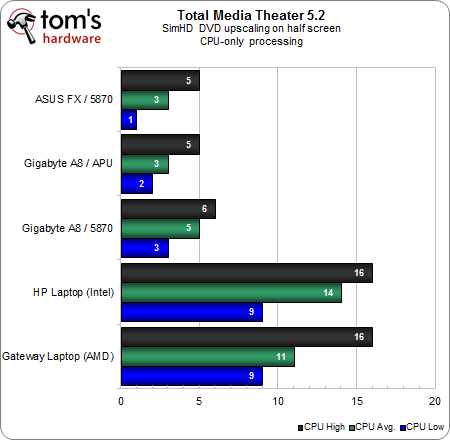

In this first test result set, we ran all of the post-processing on the CPU, with hardware acceleration disabled. Low, average, and high CPU utilization values are shown (in percent) to indicate the range of processor resources consumed by SimHD’s upscaling. Both mobile platforms are roughly comparable, though AMD's average utilization is likely lower as a result of its four physical cores.
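We don't detail the sampling method here, but low, average, and high figures of this kind come from a series of periodic utilization readings taken during playback. A minimal sketch, using a hypothetical helper `summarize_utilization` and made-up sample values (not data from these tests):

```python
from statistics import mean


def summarize_utilization(samples: list[float]) -> tuple[float, float, float]:
    """Return (low, average, high) for CPU-utilization samples given in percent."""
    if not samples:
        raise ValueError("need at least one utilization sample")
    return min(samples), mean(samples), max(samples)


# Hypothetical once-per-second readings (percent) captured while upscaling
readings = [22.0, 31.5, 27.0, 40.0, 29.5]
low, avg, high = summarize_utilization(readings)
print(f"low {low:.0f}%  avg {avg:.1f}%  high {high:.0f}%")
```

Reporting the range alongside the average matters because short spikes (the "high" value) can cause dropped frames even when average load looks comfortable.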

The desktop numbers are more interesting. Yes, overall utilization on the desktop is roughly one-half to one-third of the mobile figures, which is telling given that both mobile processors carry 35 W TDPs, versus 100 W for the A8 APU and 125 W for the FX CPU.

But also, notice how there is very little difference between the A8 APU and the higher-end FX chip. Although the FX has more transistors dedicated to general-purpose processing, a nice large shared L3 cache, and higher clock rates, it isn't able to demonstrate an advantage over the more mainstream A8. Clearly, there's some other bottleneck in play.

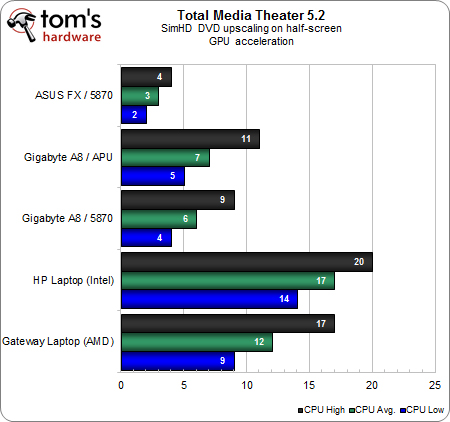

With GPU acceleration enabled, the APU-driven notebook starts to exhibit a performance advantage over the CPU-only model, though perhaps not to the extent we were expecting. Any advantage is notable on a battery-powered piece of hardware; however, the more significant gains start cropping up when you have the resources of a desktop-class configuration to throw at the upscaler.

Clearly, the biggest gains are available to those who use more potent discrete graphics on a platform with a fast CPU to accelerate demanding workloads. Testing the same graphics card alongside AMD's A8 doesn't yield as compelling a gain, likely due to a processor bottleneck.
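One simple way to reason about that bottleneck is a slowest-stage model: if the CPU and GPU work on different frames at the same time, throughput is set by whichever stage takes longer per frame, so a faster GPU stops helping once the CPU stage dominates. This is an illustrative assumption, not a description of how SimHD actually schedules its work, and the per-frame costs below are hypothetical.

```python
def pipelined_fps(cpu_ms_per_frame: float, gpu_ms_per_frame: float) -> float:
    """Frames per second when CPU and GPU stages overlap (pipelined).

    Throughput is limited by the slower stage: whichever takes longer
    per frame is the bottleneck.
    """
    return 1000.0 / max(cpu_ms_per_frame, gpu_ms_per_frame)


# Hypothetical per-frame costs; the GPU stage is identical in both cases.
gpu_ms = 20.0
fast_cpu_fps = pipelined_fps(cpu_ms_per_frame=15.0, gpu_ms_per_frame=gpu_ms)  # GPU-bound
slow_cpu_fps = pipelined_fps(cpu_ms_per_frame=40.0, gpu_ms_per_frame=gpu_ms)  # CPU-bound
print(f"fast CPU: {fast_cpu_fps:.1f} fps, slow CPU: {slow_cpu_fps:.1f} fps")
# prints: fast CPU: 50.0 fps, slow CPU: 25.0 fps
```

In the CPU-bound case, swapping in an even faster graphics card would leave the result unchanged, which is the pattern a processor bottleneck produces.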

When it comes to balancing performance against price, though, the A8 leaning on its integrated Radeon HD 6550D graphics engine is the real winner here. Consider that the A8-3850 sells for $135. AMD's idea has to be one of combining two technologies at an affordable price, where in the past you would have needed a separate entry-level CPU and graphics card to achieve the same thing (and the Radeon HD 5870 wasn't a cheap board, either).




bit_user, quoting amuffin: "Will there be an OpenCL vs. CUDA article coming out anytime soon?"

At the core, they are very similar. I'm sure that Nvidia's toolchains for CUDA and OpenCL share a common backend, at least. Any differences between versions of an app coded for CUDA vs. OpenCL will have a lot more to do with how much effort its developers spent optimizing it.


bit_user: Fun fact: the president of Khronos (the industry consortium behind OpenCL, OpenGL, etc.) and chair of its OpenCL working group is an Nvidia VP.

Here's a document outlining the parallels between CUDA and OpenCL (it's an OpenCL JumpStart Guide for existing CUDA developers):

NVIDIA OpenCL JumpStart Guide

I think they tried to make sure that OpenCL would fit their existing technologies, in order to give them an edge on delivering better support, sooner.

deanjo, quoting bit_user: "I think they tried to make sure that OpenCL would fit their existing technologies, in order to give them an edge on delivering better support, sooner."

Well, Nvidia did work very closely with Apple during the development of OpenCL.

nevertell: At last, an article to point to for people who love shoving a GTX 580 into the same box as a Celeron.

JPForums: Regarding your testing of the APU without a discrete GPU, you wrote:

However, the performance chart tells the second half of the story. Pushing CPU usage down is great at 480p, where host processing and graphics working together manage real-time rendering of six effects. But at 1080p, the two subsystems are collaboratively stuck at 29% of real-time. That's less than half of what the Radeon HD 5870 was able to do matched up to AMD's APU. For serious compute workloads, the sheer complexity of a discrete GPU is undeniably superior.

While the discrete GPU is superior, the architecture isn't all that different. I suspect the larger performance issue was stated earlier, in the interview:

TH: Specifically, what aspects of your software wouldn’t be possible without GPU-based acceleration?

NB: ...you are also solving a bandwidth bottleneck problem. ... It’s a very memory- or bandwidth-intensive problem to even a larger degree than it is a compute-bound problem. ... It’s almost an order of magnitude difference between the memory bandwidth on these two devices.

APUs may be bottlenecked simply because their graphics cores have to share system memory bandwidth with the CPU.

While an APU's memory bandwidth will never approach that of a discrete card, I am curious to see whether overclocking the APU's memory makes a noticeable difference in performance. Intuition says it won't close the gap, and given the APU's low-end compute performance, it may not make a difference at all. Still, it would help characterize the APU's performance balance a little better, i.e., does it make sense to put more GPU muscle on an APU, or is the GPU portion constrained by memory bandwidth?

In any case, this is a great article. I look forward to the rest of the series.
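The bandwidth gap discussed in the interview and in the comment above can be estimated on paper: peak bandwidth is bus width (in bytes) times transfer rate times channel count. The sketch below assumes dual-channel DDR3 at 1600 and 1866 MT/s for the APU's shared system memory (illustrative settings, not the configuration used in these tests) and the Radeon HD 5870's reference 256-bit GDDR5 at 4800 MT/s effective. The helper name `peak_bandwidth_gbs` is ours, and these are theoretical peaks; achieved bandwidth is lower, and on an APU the CPU cores draw from the same pool.

```python
def peak_bandwidth_gbs(bus_width_bits: int, transfers_mt_s: float, channels: int = 1) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes).

    bytes per transfer * million transfers per second = MB/s; divide by 1000 for GB/s.
    """
    bytes_per_transfer = bus_width_bits // 8
    return channels * bytes_per_transfer * transfers_mt_s / 1000.0


# Assumed APU memory: dual-channel DDR3 with 64-bit channels, shared by CPU and GPU.
apu_ddr3_1600 = peak_bandwidth_gbs(64, 1600, channels=2)
apu_ddr3_1866 = peak_bandwidth_gbs(64, 1866, channels=2)  # nominal rate of a faster/overclocked kit

# Radeon HD 5870 reference memory: 256-bit GDDR5 at 4800 MT/s effective.
hd5870 = peak_bandwidth_gbs(256, 4800)

for label, gbs in [("APU, DDR3-1600", apu_ddr3_1600),
                   ("APU, DDR3-1866", apu_ddr3_1866),
                   ("Radeon HD 5870", hd5870)]:
    print(f"{label}: {gbs:.1f} GB/s")
print(f"HD 5870 vs. DDR3-1866 APU: {hd5870 / apu_ddr3_1866:.1f}x")
```

On these assumptions, moving from DDR3-1600 to DDR3-1866 raises the APU's peak by about 17%, while the discrete card's peak stays roughly five to six times higher, so a memory overclock could show whether the APU's GPU is bandwidth-bound without coming close to discrete-card levels.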

What about power consumption? It's fine if we can lower CPU load, but that matters less if total power consumption increases.