Watch Dogs 2 Performance Benchmarks

Introduction & Benchmark Selection

Here's a fact: Watch Dogs 2 doesn’t run that well without decent hardware. Our focus today isn't on the story. Rather, we're trying to make the game look as attractive as possible. We knew this project would involve multiple Tom's Hardware labs, so we didn't want to simply run the same benchmarks as everyone else. Hopefully you find our extra effort worth the wait.

Once you complete the lengthy and completely linear intro level, it’s time to enter Watch Dogs 2’s open world. The game’s first outing with its far-reaching views from the Golden Gate Bridge is both beautiful and a real challenge for your hardware.

We actually played through the introduction twice based on different saved games. Multi-player adds variance that can't be controlled for, so our tests are run exclusively in offline mode. The benchmarks also benefit from a God Mode tool. Consistent numbers are a lot harder to achieve if you keep getting killed, after all.

Preset or Custom Settings?

Watch Dogs 2 has a ton of graphics settings to experiment with. If you love to tweak every last detail by playing with individual options and restarting the game a million times, then you’ll have a blast in the menu system.

Then again, not every player goes to the trouble of finding a perfect balance between performance and graphics quality for their specific hardware configuration. The game accommodates this as well by offering no fewer than five presets, in addition to the usual range of resolutions. A sixth preset is reserved for custom settings.

The Low and Medium presets really don’t look good. Use them only if every other combination of detail options is unplayable. Visual fidelity improves noticeably from the High preset up: the game world comes to life, and Very High and Ultra add only minor quality improvements on top. Those latter two modes also demand a lot more from your hardware.

Ultimately, your best bet is choosing the preset that yields frame rates you're comfortable with, and then fine-tuning from there. Everybody has their own preferences when it comes to graphics, and Watch Dogs 2 provides the tools to experiment and adjust everything to your specific tastes.

Anti-Aliasing & Performance

Beauty comes at a price. Watch Dogs 2’s open world looks fantastic and offers a lot of variety, but the detailed environments and high-resolution textures also suffer from flickering jagged edges that are on full display as soon as you start moving around. Fortunately, there are several anti-aliasing settings, some of which can be combined, to alleviate this issue.

Gamers with lower-end hardware or a relatively small amount of graphics memory won’t be able to get around SMAA or FXAA post-processing. Temporal filtering can be activated as well; it renders at a lower resolution and upscales the picture. The result is fairly muddy, but this setting is easier for your graphics card to handle and it does help combat aliasing artifacts. Just don't expect the best visual fidelity.

GeForce owners can use TXAA (2x/4x/8x), which practically eliminates the flickering. There’s a drawback though: the picture becomes a bit blurry. As usual, finding the right compromise is key. Dial in the lowest TXAA setting and adjust the sharpness to taste. Powerful hardware helps a lot, of course.

Another option is sticking with the old-school approach: MSAA (2x to 8x), though that comes with its own set of downsides. Really, there's no perfect solution. Perhaps enabling SMAA and calling it a day is preferable, which brings us back to the beginning of our discussion.

Shadows & Ambient Occlusion

Watch Dogs 2 makes liberal use of light, which casts a lot of shadows. And as is often the case with new games, Nvidia's GameWorks libraries aren't far behind. No matter how you feel about them, Watch Dogs 2 implements not one, but two GameWorks shadow features.

The first is called Hybrid Frustum Traced Shadows (HFTS), and it's Nvidia-exclusive. It’s supposed to combine different sharp and soft shadow geometries with each other in a more realistic manner. Be forewarned: it's a hardware-killer, and only available on second-generation Maxwell- and Pascal-based graphics cards.

With Percentage-Closer Soft Shadows (PCSS), the experience of seeing your GPU run out of steam isn't limited to GeForce owners. Anyone with a Radeon card can join in the fun as well. At least you get a more accurate shadow for your troubles, particularly when the geometries have different levels of softness. The feature isn’t perfect, which is to say that there are still errors, but it certainly does look nice.

If you don't feel the need to take realism to its extreme, stick with the Ultra quality preset. In some cases, it's actually better-looking since the picture quality can be sharper.

Watch Dogs 2’s shadow quality necessitates ambient occlusion that’s as realistic as possible. It makes the picture much more vivid and three-dimensional. This means that, as usual, this option should be turned on. The game offers several different versions. Apart from its proprietary ones, there’s HBAO+, which runs the same on Nvidia and AMD hardware and is a bit too strong for our taste. We prefer SSBC or HMSSAO, depending on your hardware and detail preferences.

Original Textures & Texture DLC

We decided to skip past the high-resolution texture DLC in our benchmarks for two reasons. First, it requires at least 6GB of graphics memory, which excludes a lot of very relevant cards. Second, we wanted to test a wide swathe of the graphics market, and including the DLC as an additional option would have added a ton of time to the benchmarking.

The original textures are decent, but they do get blurry up close. They lack a certain crispness. Just think twice about downloading the high-res texture pack if you aren't rocking at least an upper-mid-range GPU. Filling up the on-card GDDR5/HBM will cut into the quality options you can enable at playable frame rates.

The texture settings you get from each preset are perfect without the DLC. Adjust them only if you have headroom to spare.

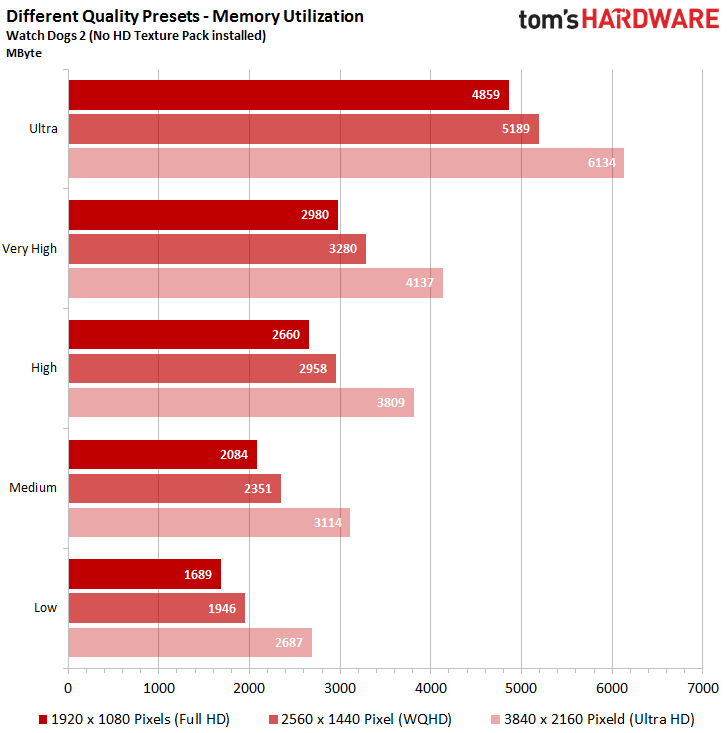

Memory Requirements

Add up all of the settings’ memory requirements, and you get Watch Dogs 2’s overall footprint. If you max out all of the options, which is to say you set the game to its Ultra preset and then manually crank up the settings not already maxed out, then you technically exceed the capacity of even Nvidia's mighty GeForce GTX 1080 with 8GB of GDDR5X.
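To make the "add up the settings" idea concrete, here is a minimal Python sketch. The setting names and megabyte figures are hypothetical placeholders chosen for illustration; they are not the values the game reports, and the function names are our own.

```python
# Hypothetical per-setting VRAM estimates in MB.
# These are illustrative placeholders, NOT figures taken from Watch Dogs 2.
SETTING_COST_MB = {
    "base (resolution and geometry)": 2500,
    "textures": 2000,
    "shadows": 900,
    "anti-aliasing": 1200,
    "ambient occlusion": 300,
}


def total_footprint_mb(costs):
    """Sum the estimated memory cost of every enabled setting."""
    return sum(costs.values())


def fits_on_card(costs, card_capacity_mb):
    """Return True if the summed footprint fits in the card's memory."""
    return total_footprint_mb(costs) <= card_capacity_mb


if __name__ == "__main__":
    total = total_footprint_mb(SETTING_COST_MB)
    print(f"Estimated footprint: {total} MB")
    print("Fits on an 8GB card:", fits_on_card(SETTING_COST_MB, 8 * 1024))
    print("Fits on a 6GB card:", fits_on_card(SETTING_COST_MB, 6 * 1024))
```

With real numbers read from the game's settings menu, the same comparison shows which options to dial back before a card's memory fills up.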

The table below is based on Watch Dogs 2’s own calculations. Initially, these seemed somewhat high compared to the results of several measurement tools, but it turned out that some of those were guessing too low for lengthy play sessions. These numbers give a good estimate of how much memory a graphics card should have.

Two Averaged Benchmarks Make for Optimal Results

We created and tested two benchmark sequences. Each of them took a while to complete, deliberately canceling out small fluctuations. We learned to use a methodology like this for open-world games from our experiences with GTA V. This also makes sense statistically, since variations tend to balance out over time. We did test a number of different benchmark times and found that anything under 1:30 wasn't consistent and couldn't be reproduced reliably.
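One way to judge whether a given benchmark length is reproducible is to repeat the run several times and compare the spread of the average frame rates against a tolerance. The following Python sketch illustrates that idea only; the 3% tolerance and the sample frame rates are assumptions made for the example, not values from our testing.

```python
from statistics import mean


def relative_spread(fps_runs):
    """Return (max - min) / mean for the average FPS of repeated runs."""
    return (max(fps_runs) - min(fps_runs)) / mean(fps_runs)


def is_reproducible(fps_runs, tolerance=0.03):
    """Treat a benchmark as reproducible if repeats stay within the tolerance.

    The 3% default is an assumed threshold for illustration.
    """
    return relative_spread(fps_runs) <= tolerance


if __name__ == "__main__":
    # Hypothetical average FPS from three repeats of a short and a long run.
    short_runs = [52.0, 58.0, 55.0]
    long_runs = [55.0, 55.5, 54.5]
    print("Short run reproducible:", is_reproducible(short_runs))
    print("Long run reproducible:", is_reproducible(long_runs))
```

In this made-up data, the short sequence varies by roughly 11% between repeats and fails the check, while the long sequence stays under 2% and passes.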

Our first benchmark run consists of a 1:50-minute sprint through the countryside. It's harder on the graphics card than originally anticipated; the load is actually similar to city driving. There are barely any NPCs or vehicles on this route, making the results easy to reproduce. CPU utilization is significantly lower than in places with a dense population or many vehicles. This means that graphics is the sole bottleneck.

We're using a 1:40-minute bike ride for our second benchmark run in order to gauge how the interaction between GPU, graphics driver, and CPU changes the outcome. We chose a bike instead of a car so that we’d have an easier time getting through traffic without any collisions. The CPU load was significantly higher than it was on the Golden Gate Bridge. It was also easy to determine which graphics drivers utilize CPU resources well.

For the mean results, we simply averaged the two individual frame rates. The minimum number represents the lower of the two runs (without exception, the high-traffic test was most taxing).
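The combination step can be sketched in a few lines of Python. This assumes each run reports its own average and minimum frame rate, and that the reported minimum is the lower of the two runs' minimums; the `RunResult` type, the `combine` function, and the sample numbers are hypothetical and only serve the illustration.

```python
from dataclasses import dataclass


@dataclass
class RunResult:
    name: str
    avg_fps: float
    min_fps: float


def combine(run_a, run_b):
    """Average the two runs' mean FPS and keep the lower of the two minimums."""
    mean_fps = (run_a.avg_fps + run_b.avg_fps) / 2
    min_fps = min(run_a.min_fps, run_b.min_fps)
    return mean_fps, min_fps


if __name__ == "__main__":
    # Hypothetical results, not measured values.
    countryside = RunResult("countryside sprint", avg_fps=60.0, min_fps=48.0)
    city = RunResult("city bike ride", avg_fps=50.0, min_fps=40.0)
    mean_fps, min_fps = combine(countryside, city)
    print(f"Mean: {mean_fps:.1f} FPS, minimum: {min_fps:.1f} FPS")
```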

amk-aka-Phantom: Great and thorough testing, thank you very much. But since you said yourself that High seems to be the sweet spot whereas Very High and Ultra barely look better yet demand a lot more from your hardware, why don't we get 1920x1080/High benchmarks then? I'd love to see how my 970 does. I can sort of deduce that it'll be alright considering it does 35-44 on Very High and VRAM consumption is within its limits, but a confirmation would be nice.

cwolf78: I agree. Please add the High preset benchmark including the 970. This is the setting I'd probably use if I were to purchase it.

Elysian890: Getting 60 w/ texture DLC on High setting preset, 290x Devil 13

stable 60 without DLC on very high to ultra, 1080p, vsync enabled

cknobman: Its a rather poorly optimized game TBH.

Digital Foundry had big time issues getting this game to play well at high settings even using a Titan X.

You can also see that this game relies on gfx memory more than anything by the RX 480 with 8GB Ram outpacing more powerful cards with less RAM (like the Fury X).

IceMyth: I don't understand one point, how Ultra settings have better FPS then Very high settings?

FormatC:

19077036 said: I don't understand one point, how Ultra settings have better FPS then Very high settings?

Take a look at the resolution ;)

19077316 said: Go test under Windows 7, you will get much better result.

Windows 7 is dead. Only for a few games the testing on two different systems makes no sense. This review here was alone a benchmark session over 19 working hours. I'm not testing only one run, but many - depending at the possible tolerance. I write per month around 8 reviews - from CPU, GPU and Workstation to PC-Audio and some investigative ones.

Windows 7 is not dead as performs better in gaming and supports everything. Also Windows 10 holds only 22% of the Windows Market Share, the rest for the most part is Windows 7. I think it is rather unprofessional not to include Windows 7 in test, and just to get that bullshit argument out of the way saying Win10 is better for the gaming. It would be fair to people to show that it is not what MS. claims as many f. up their systems doing upgrade to Win10 based on false advertisement.