Alan Wake 2 Requires Mesh Shaders, Excluding Most Older GPUs

RDNA 2, Turing, or Arc (or newer) required

The PC system requirements for Alan Wake 2 whipped up a storm when they were shared at the end of last week. Now a Remedy employee has offered some explanation for the minimum graphics card specs: the game requires a GPU with mesh shader support, meaning GeForce GTX 10-series and Radeon RX 5000-series graphics cards are antiques as far as the game is concerned.

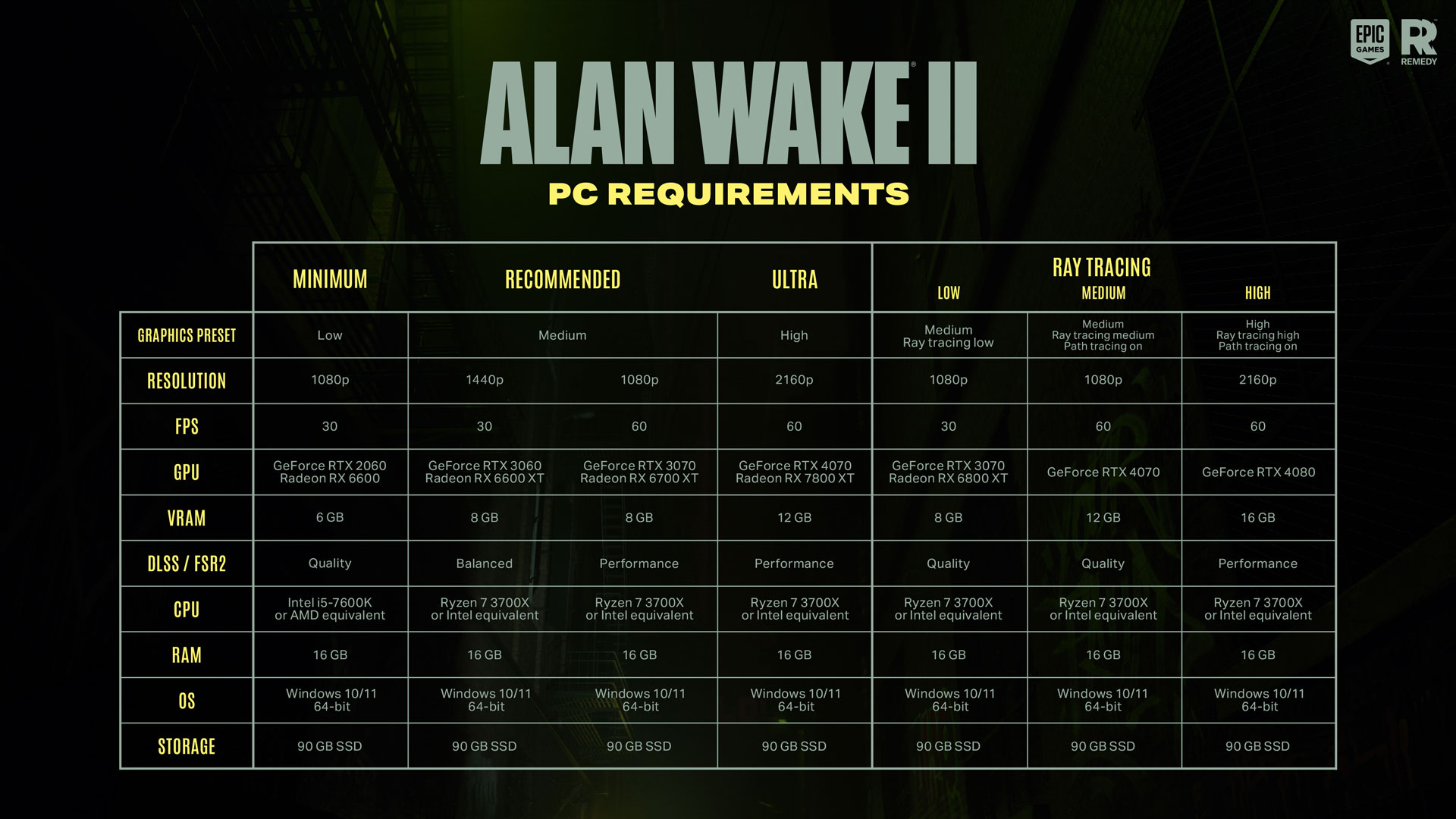

If you cast your eyes above at the official Alan Wake 2 PC requirements table, you will quickly see that the minimum-spec GPU for playing this game is the GeForce RTX 2060 or Radeon RX 6600. Packing such a GPU, along with an appropriate CPU, 16GB of RAM, and 90GB of SSD space, you should be able to enjoy the new game in 1080p, 30fps glory.

Even then, it looks like the game will automatically turn on DLSS or FSR2 upscaling in Quality mode. Whether the upscalers are truly necessary for playable performance isn't clear just yet. Opt for a higher resolution, or try to use ray tracing or path tracing, and the specs soon ramp up to higher-tier GPUs and CPUs. The upscaling options also step down to Balanced and Performance modes, though again that may not be strictly necessary.
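For a sense of what those upscaling modes mean in practice, the sketch below computes the internal render resolution for a given output resolution. It assumes the per-axis scale factors AMD publishes for FSR 2 (Quality 1.5x, Balanced 1.7x, Performance 2.0x, Ultra Performance 3.0x); DLSS uses very similar ratios. The `FSR2_SCALE` table and `render_resolution` helper are hypothetical names for illustration, not part of either SDK.

```python
# Per-axis scale factors (output resolution / render resolution) for the
# FSR 2 quality modes, per AMD's documentation. Assumption: DLSS 2 uses close
# enough ratios (Quality ~1.5x, Balanced ~1.72x, Performance 2x) to treat alike.
FSR2_SCALE = {
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
    "Ultra Performance": 3.0,
}


def render_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Hypothetical helper: internal resolution an upscaler renders at for a given output."""
    scale = FSR2_SCALE[mode]
    return round(out_w / scale), round(out_h / scale)


if __name__ == "__main__":
    for mode in ("Quality", "Balanced", "Performance"):
        w, h = render_resolution(1920, 1080, mode)
        print(f"1080p output, {mode} mode: renders at {w}x{h}")
```

By this arithmetic, the minimum-spec target of 1080p with Quality upscaling means the GPU actually renders around 1280x720 before the upscaler reconstructs a 1080p image.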

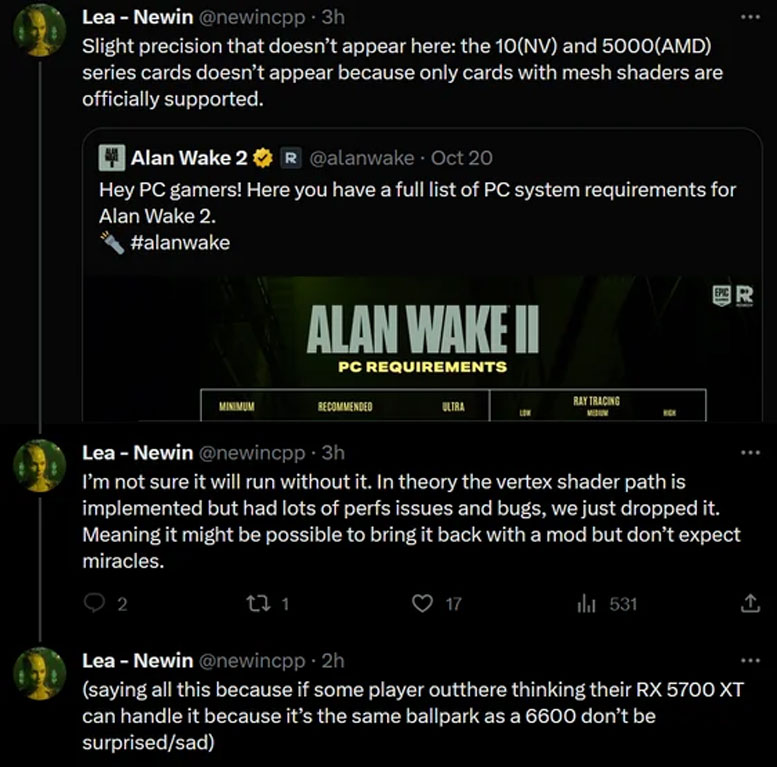

One of the key reasons that Alan Wake 2 only supports newer GPUs is that it uses a graphics engine that requires mesh shaders. Remedy employee Lea – Newin brought this information to light on Twitter / X this weekend but has since taken down the thread (captured on Reddit), due to people being incensed by the game’s upscaling requirements.

The employee highlighted mesh shader support as one of the graphics card requirements for Alan Wake 2. Later she mused, “I’m not sure it will run without it,” before adding, “In theory the vertex shader path is implemented but had lots of perf issues and bugs, [so] we just dropped it.” She didn’t rule out someone re-enabling that render path, but warned readers that any such mod wouldn’t deliver miracles.

On the topic of performance, we also have to remember that Alan Wake 2 is a cutting-edge title with Nvidia-promoted path tracing support, and it has been designed with DLSS 3.5 Ray Reconstruction in mind. It's no surprise, then, that it will benefit from the newest Nvidia hardware; it is partly designed as a showcase for the latest graphics technologies. Not that mesh shaders are all that new: they've been around since DirectX 12 Ultimate was detailed back in early 2020, and they're supported on Nvidia's RTX 20-series (Turing) architecture, and technically also on the GTX 16-series, though obviously without ray tracing in that case.

In some ways it's a bit surprising that it has taken this long for a game to launch with mesh shaders as a hardware requirement. Three and a half years after DX12 Ultimate arrived, we're finally set to get our first game that depends on the tech. Unreal Engine 5 and its Nanite technology can also take advantage of mesh shaders, though, so more games may be incoming.

Part of the difficulty with mesh shaders is that they replace the existing pipeline of vertex, geometry, hull, and domain shaders (hull and domain shaders being the tessellation stages) with a new path made up of amplification shaders and mesh shaders. As the Alan Wake 2 system requirements and tweets suggest, reproducing mesh shader behavior on older architectures may be neither easy nor practical.
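Mesh shaders process geometry as small, self-contained clusters (usually called meshlets) rather than one vertex at a time, with an optional amplification shader deciding how many mesh shader groups to launch. As a rough illustration of the data such a pipeline consumes, here is a minimal greedy meshlet builder in Python. It is a sketch under stated assumptions: the `Meshlet` type, the `build_meshlets` function, and the 64-vertex/126-triangle limits are hypothetical choices for illustration, not anything taken from Remedy's engine.

```python
from dataclasses import dataclass, field

# Hypothetical per-meshlet limits. Nvidia has suggested 64 vertices and 126
# triangles as a good fit for its hardware; D3D12 itself allows up to 256 of each.
MAX_VERTICES = 64
MAX_TRIANGLES = 126


@dataclass
class Meshlet:
    # Global vertex indices referenced by this meshlet.
    vertices: list[int] = field(default_factory=list)
    # Triangles expressed as local (meshlet-relative) vertex indices.
    triangles: list[tuple[int, int, int]] = field(default_factory=list)


def build_meshlets(indices: list[int], max_vertices: int = MAX_VERTICES,
                   max_triangles: int = MAX_TRIANGLES) -> list[Meshlet]:
    """Greedily pack an indexed triangle list into meshlets, in submission order."""
    meshlets: list[Meshlet] = []
    current = Meshlet()
    local: dict[int, int] = {}  # global vertex index -> position in current.vertices

    # Walk whole triangles only; any trailing partial triangle is ignored.
    for t in range(0, len(indices) - len(indices) % 3, 3):
        tri = indices[t:t + 3]
        new_verts = {v for v in tri if v not in local}
        # Close the current meshlet if this triangle would overflow either limit.
        if (len(current.vertices) + len(new_verts) > max_vertices
                or len(current.triangles) + 1 > max_triangles):
            meshlets.append(current)
            current = Meshlet()
            local = {}
        # Add any vertices this meshlet hasn't seen yet, then the triangle.
        for v in tri:
            if v not in local:
                local[v] = len(current.vertices)
                current.vertices.append(v)
        current.triangles.append((local[tri[0]], local[tri[1]], local[tri[2]]))

    if current.triangles:
        meshlets.append(current)
    return meshlets
```

Production tools such as the open-source meshoptimizer library also optimize meshlets for locality and culling; this sketch only packs triangles in the order they are given.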

It's a lot like ray tracing in that respect. We've had RTX cards for over five years now, but Alan Wake 2 will be the first game to ship with path tracing support as an option. (Unless you want to count Portal RTX.) Will the graphical upgrades be worth the cost? We'll find out soon enough, as Alan Wake 2 is set to launch in four days' time.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.

---

vanadiel007

It's about time we drop support for 5-year-old cards on top titles. All it does is add performance issues for newer cards, to accommodate older cards.

I get that it sucks for those with 1000-series cards or older, but at the same time there has to be a point when older hardware has served its life.

I am sure my opinion will not be popular for many with older hardware.

ThricebornPhoenix

> vanadiel007 said: I am sure my opinion will not be popular for many with older hardware.

A glance at the latest Steam hardware survey suggests that those ancient museum pieces account for about a quarter of the current PC games market. Given the state of the GPU market for the last five years, I'm actually surprised that isn't higher.

Makaveli

> vanadiel007 said: It's about time we drop support for 5-year-old cards on top titles. All it does is add performance issues for newer cards, to accommodate older cards.
>
> I get that it sucks for those with 1000-series cards or older, but at the same time there has to be a point when older hardware has served its life.
>
> I am sure my opinion will not be popular for many with older hardware.

I agree. Even if those old cards were supported, you'd probably be looking at a 720p 60fps or 1080p 30fps experience. Yuck!

Order 66

I really hope my RX 6800 can run this game at 1080p ultra without upscaling. I don't really like being forced to use upscaling to achieve a playable frame rate. The only game I use upscaling in is Minecraft RTX, because I want to get 60 fps, and upscaling also sharpens the image so it isn't blurry like it is at native. Not to mention, with how pixelated Minecraft already is, you can't really notice it anyway.

hotaru251

> vanadiel007 said: It's about time we drop support for 5-year-old cards on top titles. All it does is add performance issues for newer cards, to accommodate older cards.

...or devs can just do their job?

Also, even IF you cut off 5-year-old GPUs (which are still good GPUs, btw), that doesn't mean devs will optimize the game properly.

Devs cutting corners is a growing trend.

Imagine paying 4090 pricing and in 5 years being told "frick you, you're old, so we don't care."

> "In theory the vertex shader path is implemented but had lots of perf issues and bugs, we just dropped it."

That's a lazy reason, imho. Rather than fix it, they drop it.

They could have even implemented its buggy self as a "low" setting, letting people play even if it's not an ideal experience.

GPUs last more than 5 years.

The 1060 and 1080 Ti are proof of it.

The 1060 was the most popular GPU for years, while the 1080 Ti was a powerhouse even against the newer generation (2080).

Order 66

> hotaru251 said: ...or devs can just do their job?
>
> Also, even IF you cut off 5-year-old GPUs (which are still good GPUs, btw), that doesn't mean devs will optimize the game properly.
>
> Devs cutting corners is a growing trend.
>
> That's a lazy reason, imho. Rather than fix it, they drop it.
>
> They could have even implemented its buggy self as a "low" setting, letting people play even if it's not an ideal experience.
>
> GPUs last more than 5 years.
>
> The 1060 and 1080 Ti are proof of it.
>
> The 1060 was the most popular GPU for years, while the 1080 Ti was a powerhouse even against the newer generation (2080).

Not necessarily lazy devs, but strict deadlines imposed by the studios don't give devs enough time to optimize their games.

hotaru251

> Order 66 said: but strict deadlines imposed by the studios don't give devs enough time to optimize their games.

Fair.

Argolith

> Giroro said: Well, at least we'll finally have a new game worth benchmarking.

Very useful for the 20 or so people interested.

USAFRet

> vanadiel007 said: It's about time we drop support for 5-year-old cards on top titles. All it does is add performance issues for newer cards, to accommodate older cards.
>
> I get that it sucks for those with 1000-series cards or older, but at the same time there has to be a point when older hardware has served its life.
>
> I am sure my opinion will not be popular for many with older hardware.

And yet when Microsoft does this for the OS (Windows 11), people bring out the pitchforks.