AMD Claims World’s Fastest Per-Core Performance With New EPYC Rome 7Fx2 CPUs

Dial up the clocks, cache, and TDP

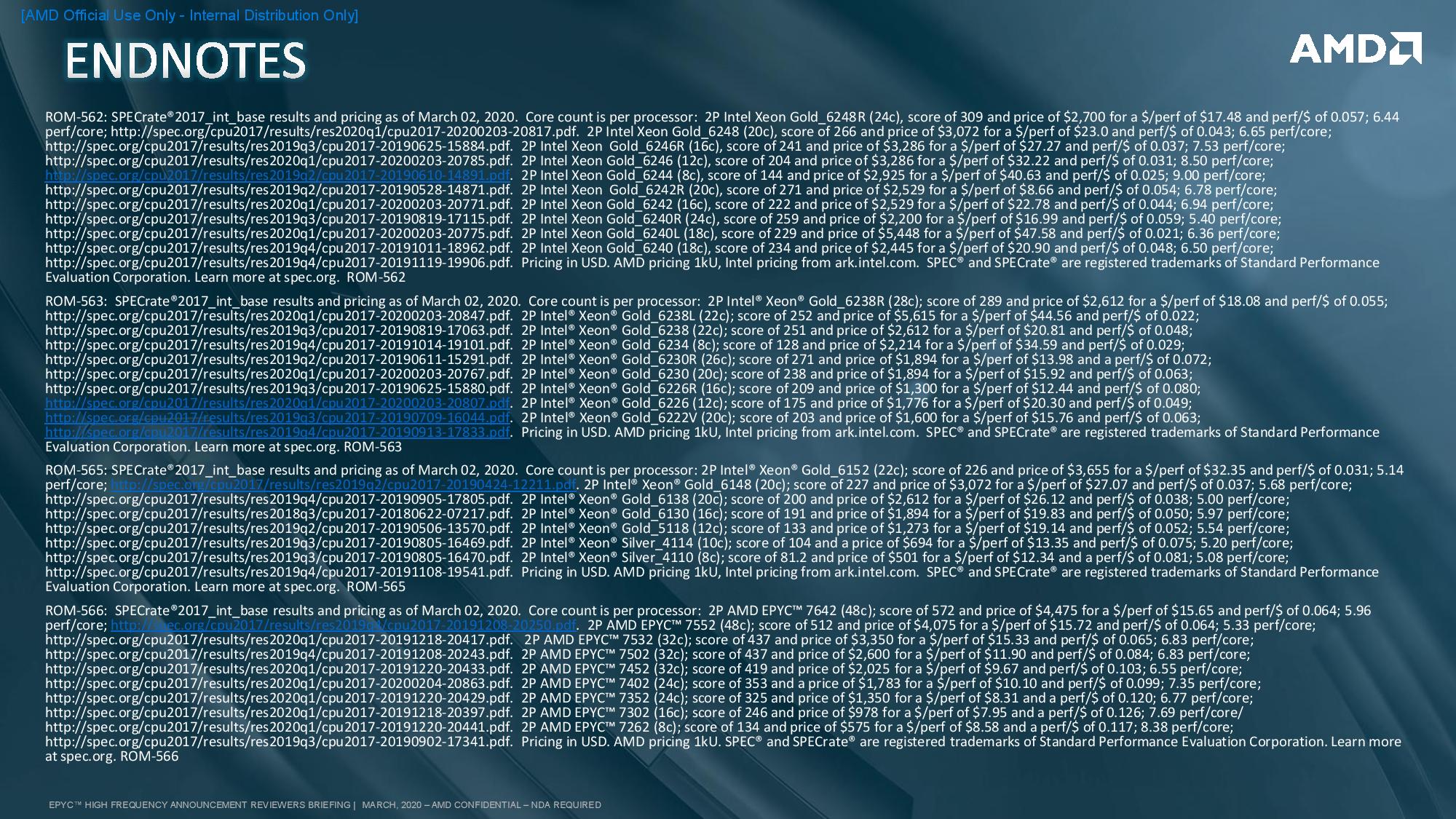

Intel recently resigned itself to a price war with AMD's EPYC lineup, slashing gen-on-gen pricing for its Cascade Lake Refresh processors. Now AMD is taking the battle in an entirely different direction: it is unveiling three new workload-optimized processors that strike at the heart of the mid-range market, but at higher prices than the models that preceded them.

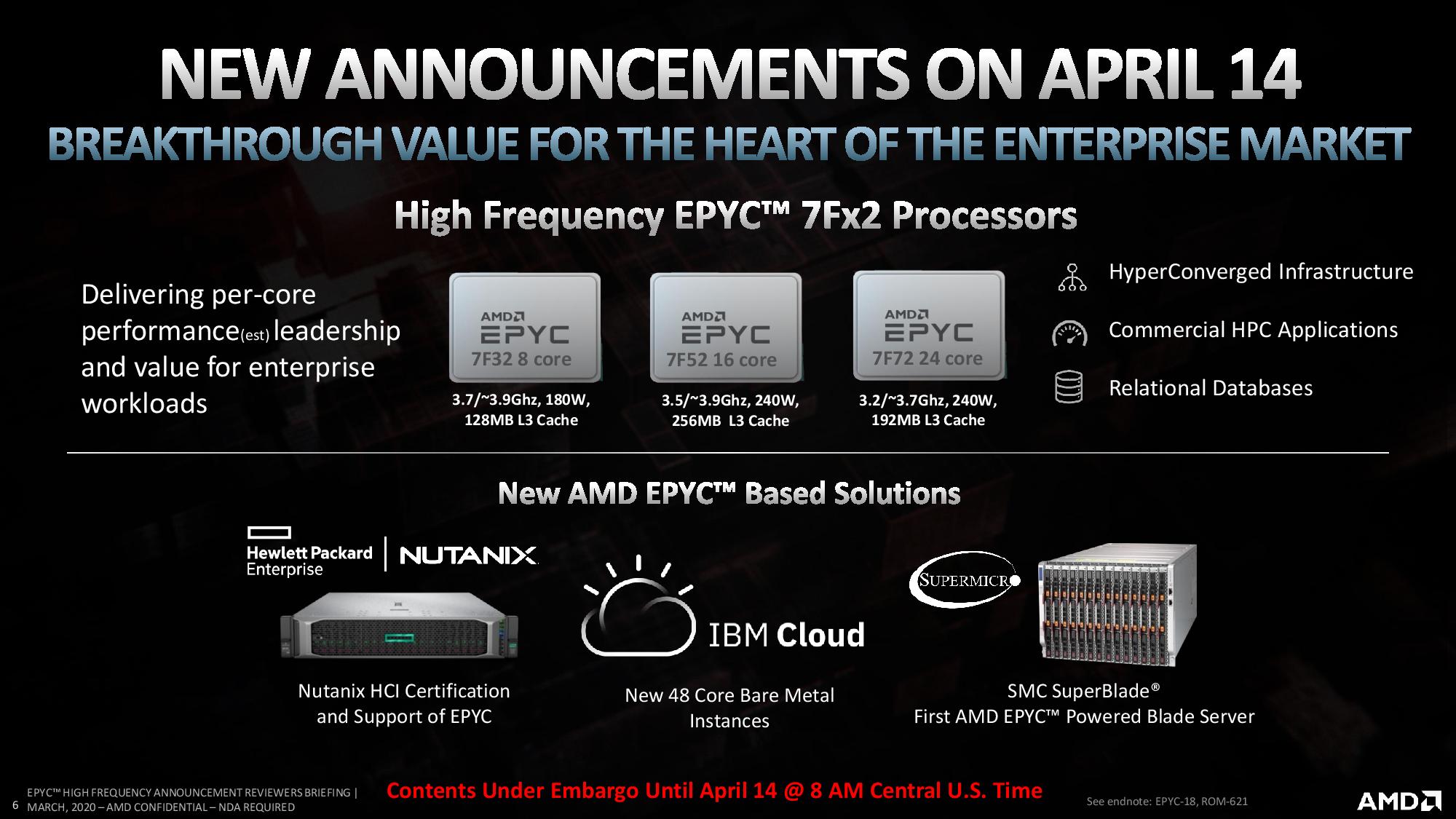

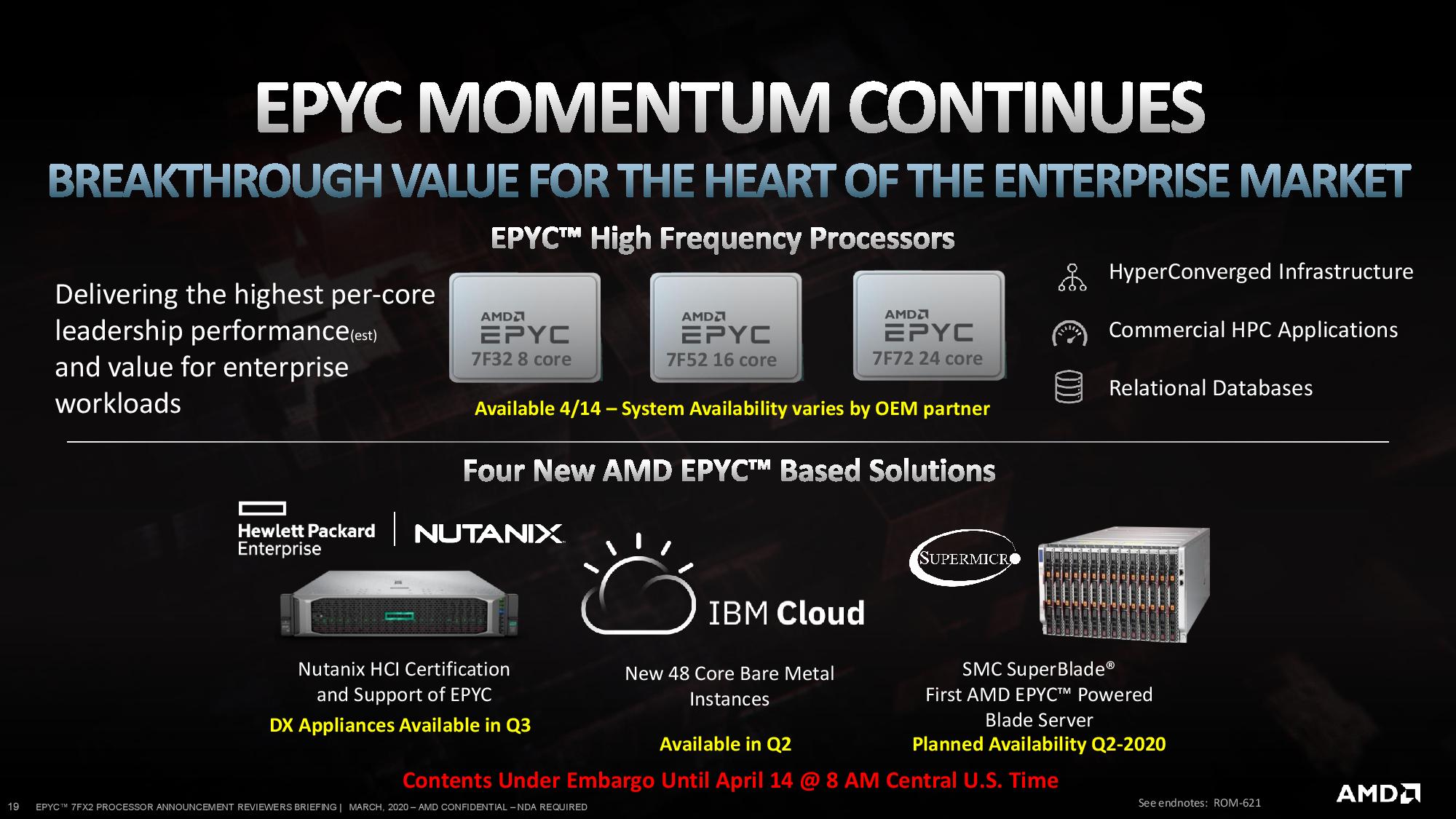

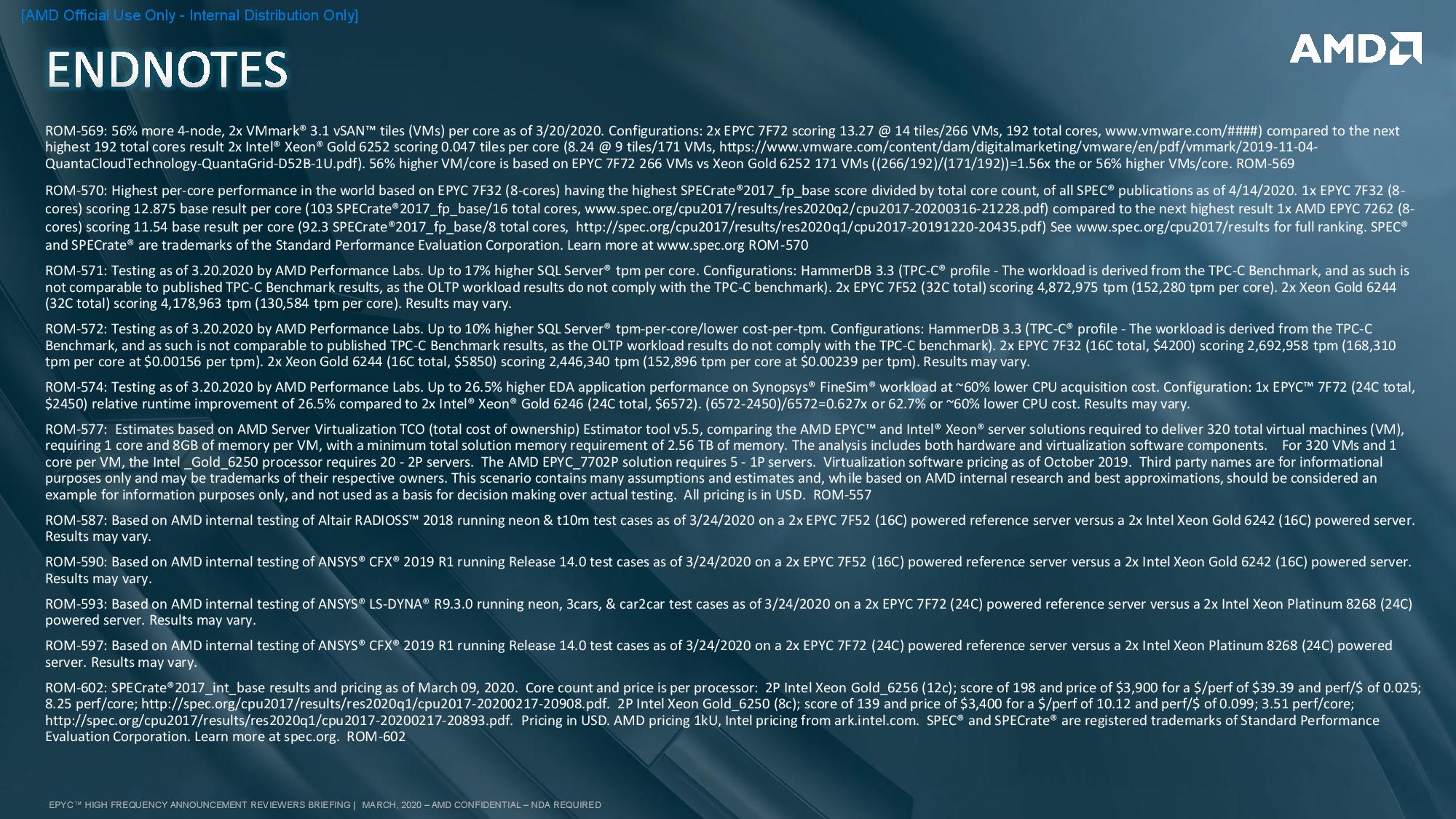

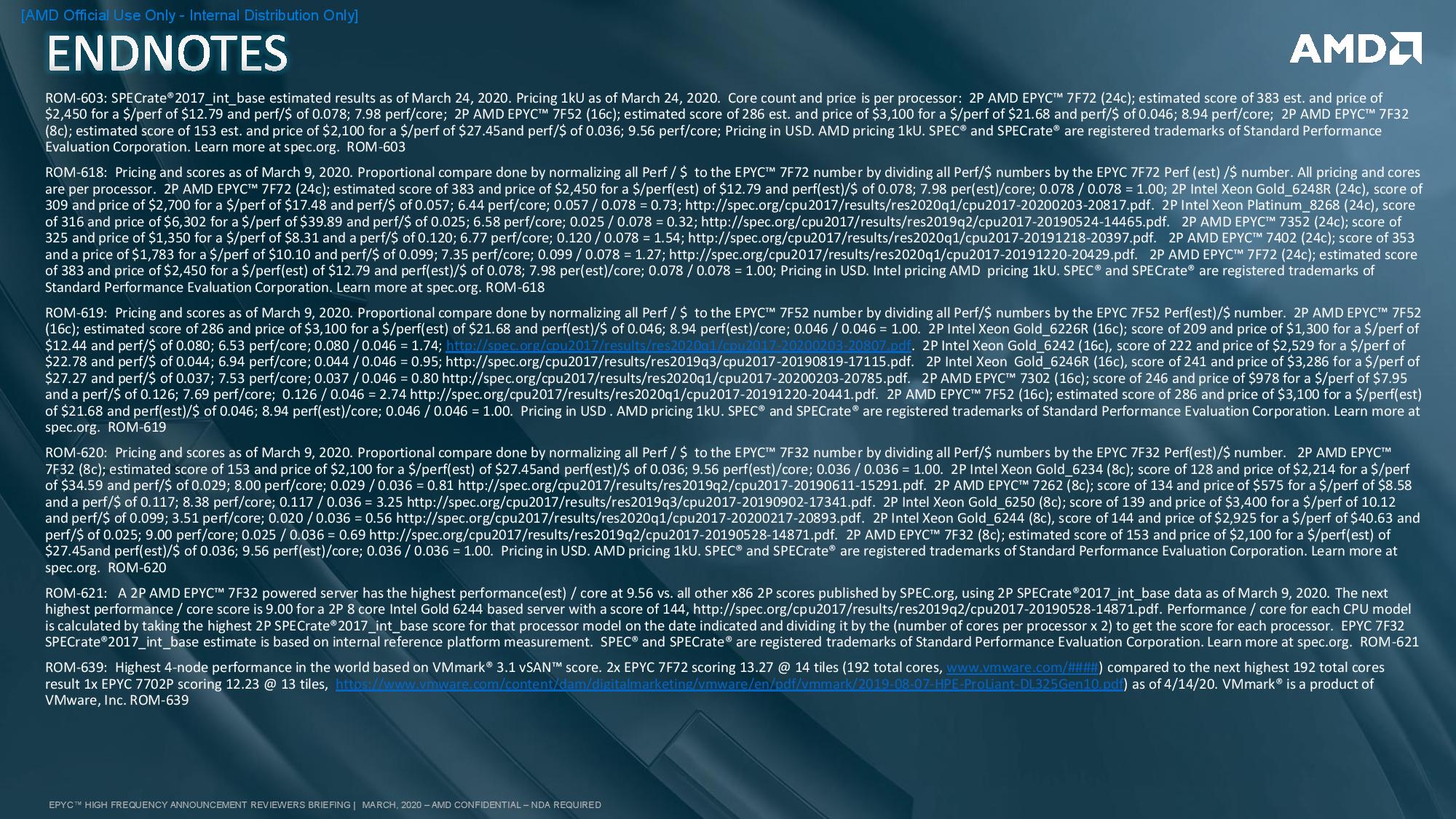

AMD says the new frequency-optimized 7Fx2 processors offer the world's fastest per-core performance among x86 server CPUs, a direct challenge to Intel's traditional leadership in per-core performance. The boost comes from base frequencies up to 500 MHz higher than AMD's comparable models, paired on two of the three chips with a healthy dollop of extra L3 cache. That cache helps workloads that benefit from keeping data close to the execution cores, like relational databases, high performance computing (HPC), and hyperconverged infrastructure (HCI) applications.

The tradeoffs are higher TDP ratings, meaning the processors consume more power and generate more heat, and higher prices for the premium-binned silicon. AMD argues that the three new EPYC Rome 7Fx2 processors offset those drawbacks with much higher performance, which enables denser deployments. The company says that combination reduces up-front costs and cuts total cost of ownership (TCO) by 50% for the target markets, justifying the higher price points.

AMD continues to nibble away at Intel's data center market share and says that it is on track to reach mid-double-digit share by the second quarter of this year. The new 7Fx2-series processors look to broaden that attack into applications where Intel has traditionally held the advantage. Let's take a closer look.

AMD EPYC Rome 7F32, 7F52, and 7F72 Processors

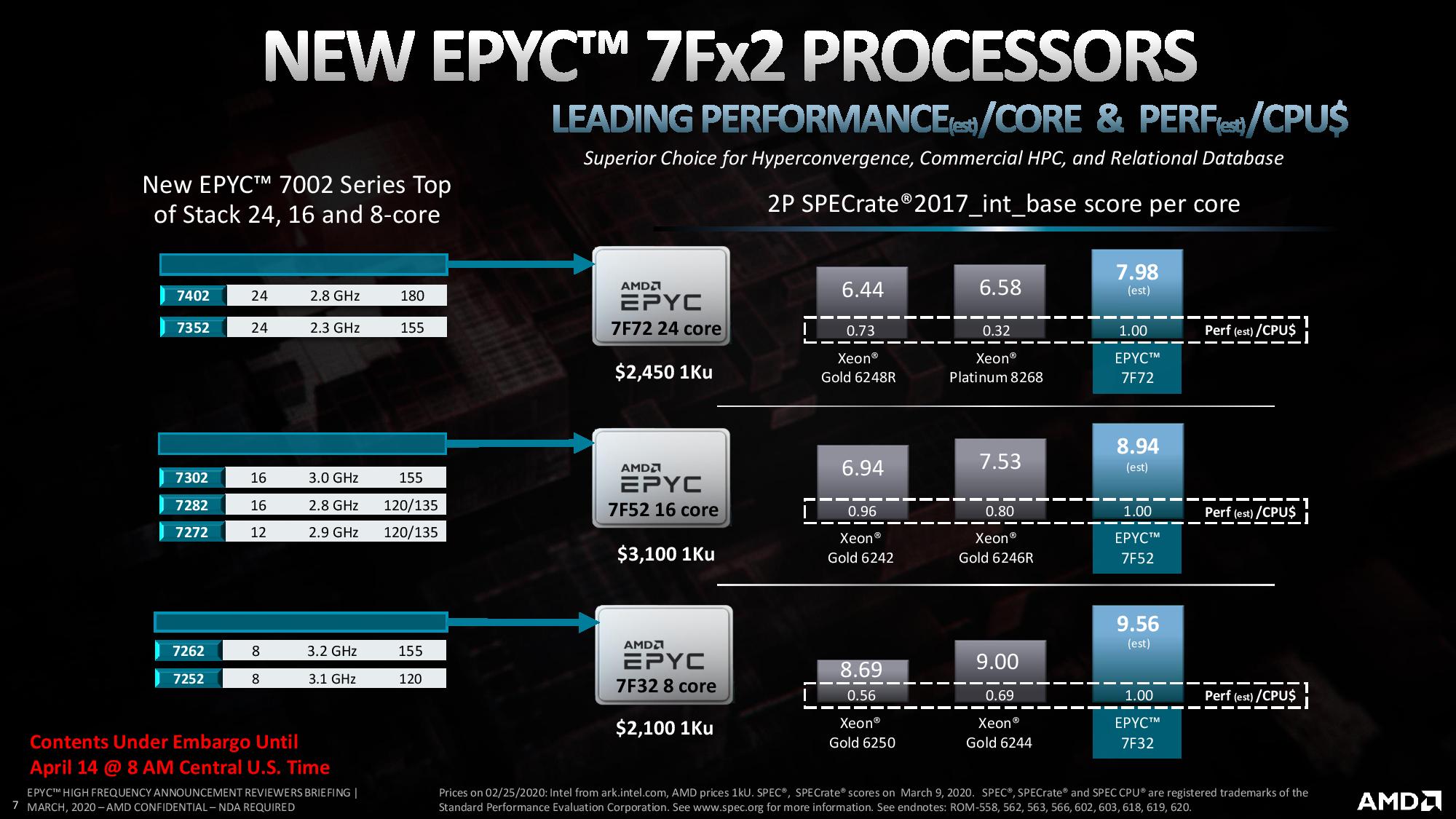

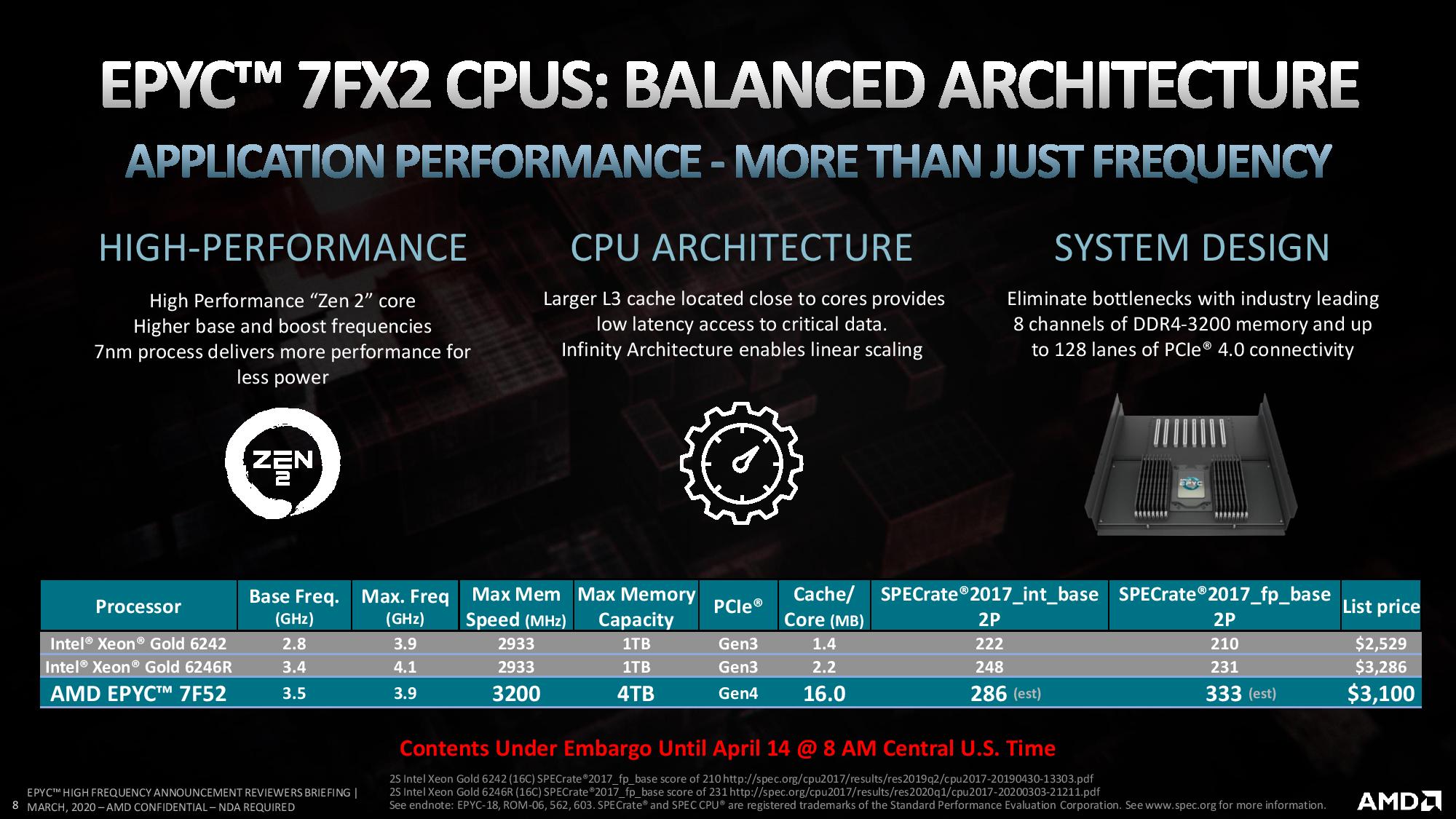

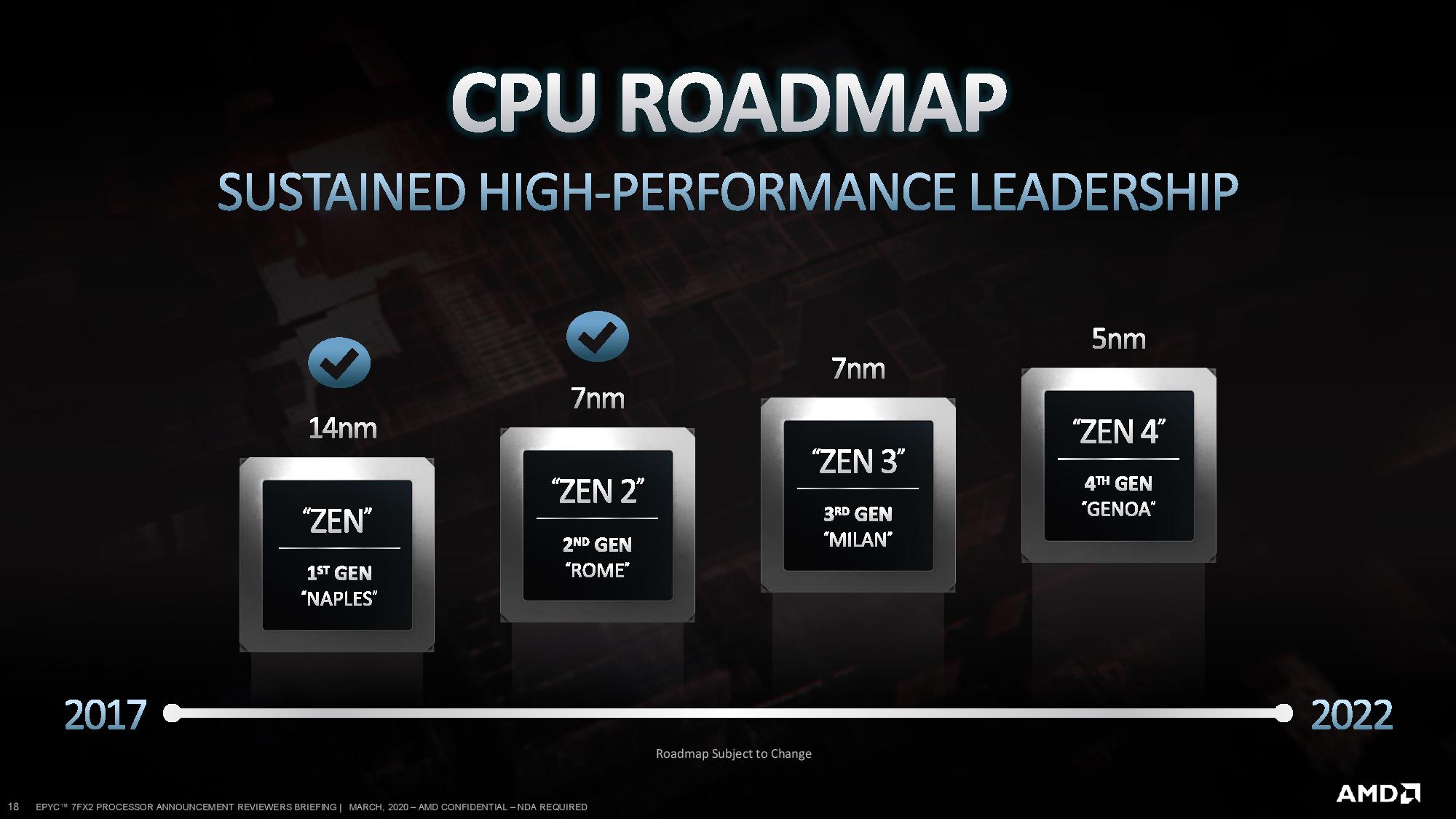

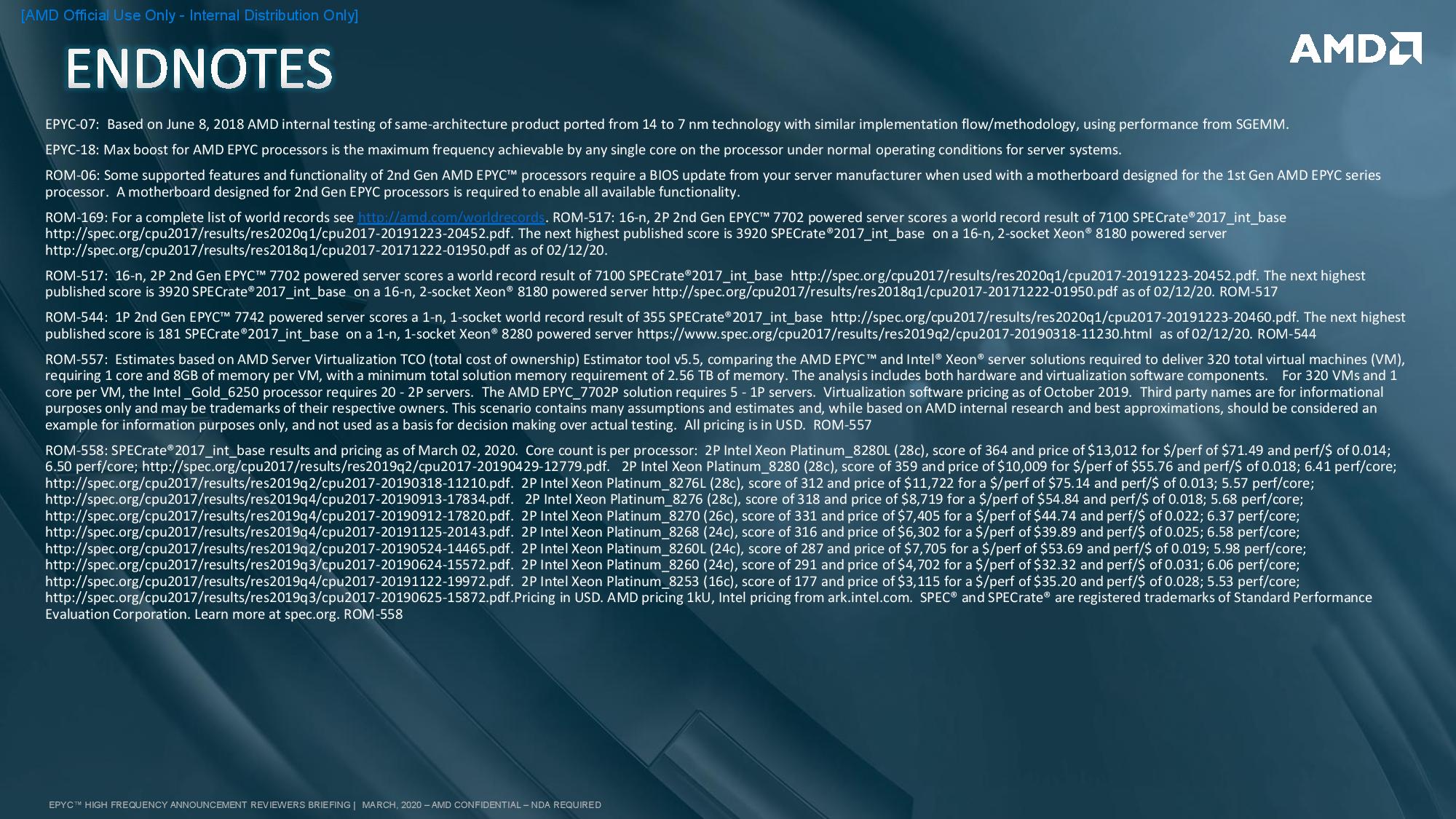

The new processors come in 8-, 16-, and 24-core flavors with notable increases in both base and boost frequencies. They use the same 7nm process and Zen 2 microarchitecture as other EPYC Rome processors, but AMD says its targeted refinements add up to a sizeable performance advantage over preceding models. AMD provided estimated SPEC2017_int_base benchmarks that show large gains in both performance-per-core and performance-per-dollar in lightly-threaded workloads, which have long been one of Intel Xeon's strongest selling points, particularly for software licensed on a per-core basis. Licenses for these applications carry high premiums that scale with the number of active cores, so per-core performance is paramount.
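
To see why per-core performance matters so much under per-core licensing, here is a minimal Python sketch of the arithmetic. It assumes throughput scales linearly with core count, and the target throughput, per-core scores, and $5,000 yearly per-core fee are hypothetical placeholders, not AMD or Intel data; `licensed_cores_needed` and `annual_license_cost` are illustrative helper names.

```python
import math

def licensed_cores_needed(target_throughput: float, per_core_score: float) -> int:
    """Smallest whole number of cores whose combined score meets the target."""
    return math.ceil(target_throughput / per_core_score)

def annual_license_cost(cores: int, fee_per_core: float) -> float:
    """Per-core licensing scales linearly with the number of active cores."""
    return cores * fee_per_core

# Hypothetical placeholders, not vendor data.
TARGET = 100.0        # required aggregate throughput, arbitrary units
FEE_PER_CORE = 5_000  # hypothetical yearly license fee per core, USD

for label, score in [("baseline core", 4.0), ("20% faster core", 4.8)]:
    cores = licensed_cores_needed(TARGET, score)
    cost = annual_license_cost(cores, FEE_PER_CORE)
    print(f"{label}: {cores} cores, ${cost:,.0f} per year")
```

With these made-up numbers, the 20% faster core trims the licensed core count from 25 to 21, and the yearly license bill falls from $125,000 to $105,000, before any hardware savings.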

As with all vendor-supplied benchmarks, not to mention estimates, take these with a grain of salt. However, we do have processors in for testing, and these benchmarks align with our basic expectations for the improved specifications. AMD also has the advantage of more memory channels (eight vs. Intel's six) and faster supported memory speeds (DDR4-3200 vs. Intel's DDR4-2933). Couple that with a higher 4TB memory capacity (Intel tops out at 2TB without additional fees) and 128 lanes of PCIe 4.0 (Intel has 48 lanes of PCIe 3.0), and AMD has a compelling performance story.
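
Those memory and I/O advantages can be expressed as theoretical peak bandwidth per socket. This Python sketch assumes 64-bit (8-byte) DDR4 channels and 128b/130b PCIe encoding, and reports per-direction PCIe bandwidth; sustained real-world throughput will be lower, and the helper names are hypothetical.

```python
def peak_dram_bandwidth_gbs(channels: int, mega_transfers: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s: channels x MT/s x 8-byte (64-bit) channel."""
    return channels * mega_transfers * bytes_per_transfer / 1000  # MB/s -> GB/s

def pcie_bandwidth_gbs(lanes: int, gigatransfers: float) -> float:
    """Per-direction PCIe bandwidth in GB/s, assuming 128b/130b encoding (Gen3 and Gen4)."""
    return lanes * gigatransfers * (128 / 130) / 8  # payload Gb/s -> GB/s

print(f"EPYC, 8 x DDR4-3200:  {peak_dram_bandwidth_gbs(8, 3200):.1f} GB/s")  # 204.8
print(f"Xeon, 6 x DDR4-2933:  {peak_dram_bandwidth_gbs(6, 2933):.1f} GB/s")  # 140.8
print(f"EPYC, 128 x PCIe 4.0: {pcie_bandwidth_gbs(128, 16):.0f} GB/s")       # 252
print(f"Xeon, 48 x PCIe 3.0:  {pcie_bandwidth_gbs(48, 8):.0f} GB/s")         # 47
```

On paper that gives EPYC roughly 45% more peak memory bandwidth per socket and about five times the per-direction PCIe bandwidth.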

AMD EPYC Rome 7Fx2 Specifications and Pricing

| Model | Cores / Threads | Base / Boost (GHz) | L3 Cache (MB) | TDP (W) | 1K-Unit Price |
|---|---|---|---|---|---|
| EPYC Rome 7742 | 64 / 128 | 2.25 / 3.4 | 256 | 225 | $6,950 |
| Xeon Gold 6258R | 28 / 56 | 2.7 / 4.0 | 38.5 | 205 | $3,651 |
| Xeon Platinum 8280 | 28 / 56 | 2.7 / 4.0 | 38.5 | 205 | $10,009 |
| EPYC Rome 7F72 | 24 / 48 | 3.2 / ~3.7 | 192 | 240 | $2,450 |
| Xeon Gold 6248R | 24 / 48 | 3.0 / 4.0 | 35.75 | 205 | $2,700 |
| Xeon Platinum 8268 | 24 / 48 | 2.9 / 3.9 | 35.75 | 205 | $6,302 |
| EPYC Rome 7402 | 24 / 48 | 2.8 / 3.35 | 128 | 180 | $1,783 |
| EPYC Rome 7F52 | 16 / 32 | 3.5 / ~3.9 | 256 | 240 | $3,100 |
| Xeon Gold 6246R | 16 / 32 | 3.4 / 4.1 | 35.75 | 205 | $3,286 |
| Xeon Gold 6242 | 16 / 32 | 2.8 / 3.9 | 22 | 150 | $2,529 |
| EPYC Rome 7302 | 16 / 32 | 3.0 / 3.3 | 128 | 155 | $978 |
| EPYC Rome 7282 | 16 / 32 | 2.8 / 3.2 | 64 | 120 | $650 |
| EPYC Rome 7F32 | 8 / 16 | 3.7 / ~3.9 | 128 | 180 | $2,100 |
| Xeon Gold 6250 | 8 / 16 | 3.9 / 4.5 | 35.75 | 185 | $3,400 |
| Xeon Gold 6244 | 8 / 16 | 3.6 / 4.4 | 24.75 | 150 | $2,925 |
| EPYC Rome 7262 | 8 / 16 | 3.2 / 3.4 | 128 | 155 | $575 |
| EPYC Rome 7252 | 8 / 16 | 3.1 / 3.2 | 64 | 120 | $475 |

As we can see, the top of the stack remains unchanged. But while those models tend to grab the flashbulbs, the real volume resides in the mid-range dual-socket server market.

AMD's new processors slot in below the 32-core threshold of VMware's new licensing model, which doubles pricing for processors with more than 32 cores. The increased per-core performance also benefits systems where per-core licensing costs often outweigh the price of the hardware: getting more work out of each licensed core ultimately reduces cost. AMD tackles that mission with base frequencies up to 500 MHz higher than its comparable models. That comes with a 60W TDP increase for the 24-core 48-thread EPYC 7F72 (vs. the 7402), an 85W increase for the 16-core 32-thread EPYC 7F52 (vs. the 7302), and a 25W increase for the 8-core 16-thread EPYC 7F32 (vs. the 7262).
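
VMware's per-CPU rule boils down to a ceiling division. This sketch assumes the policy VMware announced in early 2020, in which one per-CPU license covers up to 32 physical cores and each additional block of up to 32 cores needs another license; `vsphere_licenses` is a hypothetical helper, not a VMware API.

```python
import math

# Assumption: VMware's 2020 per-CPU policy, one license per 32 physical cores.
CORES_PER_LICENSE = 32

def vsphere_licenses(cores_per_socket: int, sockets: int = 1) -> int:
    """Per-CPU licenses needed when each license covers up to 32 cores."""
    return sockets * math.ceil(cores_per_socket / CORES_PER_LICENSE)

for model, cores in [("EPYC 7F72", 24), ("EPYC 7F52", 16), ("EPYC 7742", 64)]:
    print(f"{model}: {vsphere_licenses(cores, sockets=2)} licenses for a dual-socket server")
```

In a dual-socket server the 7F72 and 7F52 each need two licenses, while the 64-core 7742 needs four, which is why parts at or below 32 cores sidestep the doubling.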

AMD also increased the boost frequency to 3.7 GHz for its 24-core model, and 3.9 GHz for its 16- and 8-core variants. These boost frequencies adhere to AMD's standard practice of guaranteeing the rating for one physical core only, which is then complemented by thread-targeting that ensures the fastest core receives the brunt of single-threaded workloads.
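
On Linux, firmware that supports ACPI CPPC exposes a per-core `highest_perf` value under `/sys/devices/system/cpu/cpuN/acpi_cppc/`, which operating systems can use to rank cores. The Python sketch below reads that ranking if it is present and pins the current process to the top-ranked core with `os.sched_setaffinity`. It only illustrates the thread-targeting idea under those assumptions; it is not how AMD or any particular kernel implements it, and the helper names are hypothetical. If all cores report the same value, it simply picks the lowest-numbered one.

```python
from __future__ import annotations

import os
from pathlib import Path

def read_cppc_highest_perf(sysfs_root: str = "/sys/devices/system/cpu") -> dict[int, int]:
    """Map CPU id -> ACPI CPPC highest_perf; empty if the files are not exposed."""
    ranks: dict[int, int] = {}
    for entry in Path(sysfs_root).glob("cpu[0-9]*/acpi_cppc/highest_perf"):
        cpu_dir = entry.parent.parent.name  # e.g. "cpu12"
        try:
            ranks[int(cpu_dir[3:])] = int(entry.read_text().strip())
        except (OSError, ValueError):
            continue  # unreadable or unexpected entry
    return ranks

def pick_preferred_core(ranks: dict[int, int]) -> int | None:
    """Return the highest-ranked CPU id, breaking ties toward the lowest id."""
    if not ranks:
        return None
    return max(sorted(ranks), key=lambda cpu: ranks[cpu])

if __name__ == "__main__":
    ranks = read_cppc_highest_perf()
    if hasattr(os, "sched_getaffinity"):
        allowed = os.sched_getaffinity(0)  # CPUs this process is allowed to use
        ranks = {cpu: r for cpu, r in ranks.items() if cpu in allowed}
    core = pick_preferred_core(ranks)
    if core is not None and hasattr(os, "sched_setaffinity"):
        os.sched_setaffinity(0, {core})  # Linux only: pin this process to that core
        print(f"pinned to CPU {core}")
    else:
        print("no CPPC ranking available; leaving placement to the OS scheduler")
```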

The increased L3 cache capacities also pop off the page: the 24-core model sports 192MB of L3, a 64MB increase, while the 16-core model weighs in with a whopping 256MB, double the 128MB of the 7302. The 8-core 7F32 keeps the same 128MB as the 7262. That beefy cache is particularly potent in the target applications and contributes to the increased per-core performance.
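
The generational deltas discussed above can be recomputed from the specifications table. In this Python sketch, pairing each 7Fx2 part with the 7402, 7302, and 7262 is our assumption about which models AMD is comparing against; the `Sku` type and `deltas` helper are illustrative.

```python
from __future__ import annotations

from typing import NamedTuple

class Sku(NamedTuple):
    name: str
    base_ghz: float
    l3_mb: int
    tdp_w: int
    price_usd: int

# Figures copied from the specifications table. Pairing each 7Fx2 part with an
# existing Rome model is an assumption about which comparisons AMD intends.
PAIRS = [
    (Sku("EPYC 7F72", 3.2, 192, 240, 2450), Sku("EPYC 7402", 2.8, 128, 180, 1783)),
    (Sku("EPYC 7F52", 3.5, 256, 240, 3100), Sku("EPYC 7302", 3.0, 128, 155, 978)),
    (Sku("EPYC 7F32", 3.7, 128, 180, 2100), Sku("EPYC 7262", 3.2, 128, 155, 575)),
]

def deltas(new: Sku, old: Sku) -> dict[str, int]:
    """Generation-on-generation differences, with base clock converted to MHz."""
    return {
        "base_mhz": round((new.base_ghz - old.base_ghz) * 1000),
        "l3_mb": new.l3_mb - old.l3_mb,
        "tdp_w": new.tdp_w - old.tdp_w,
        "price_usd": new.price_usd - old.price_usd,
    }

for new, old in PAIRS:
    print(f"{new.name} vs. {old.name}: {deltas(new, old)}")
```

With those pairings, the base-clock gains work out to 400, 500, and 500 MHz, the TDP increases to 60W, 85W, and 25W, and the 7F32 gets no additional L3 cache.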

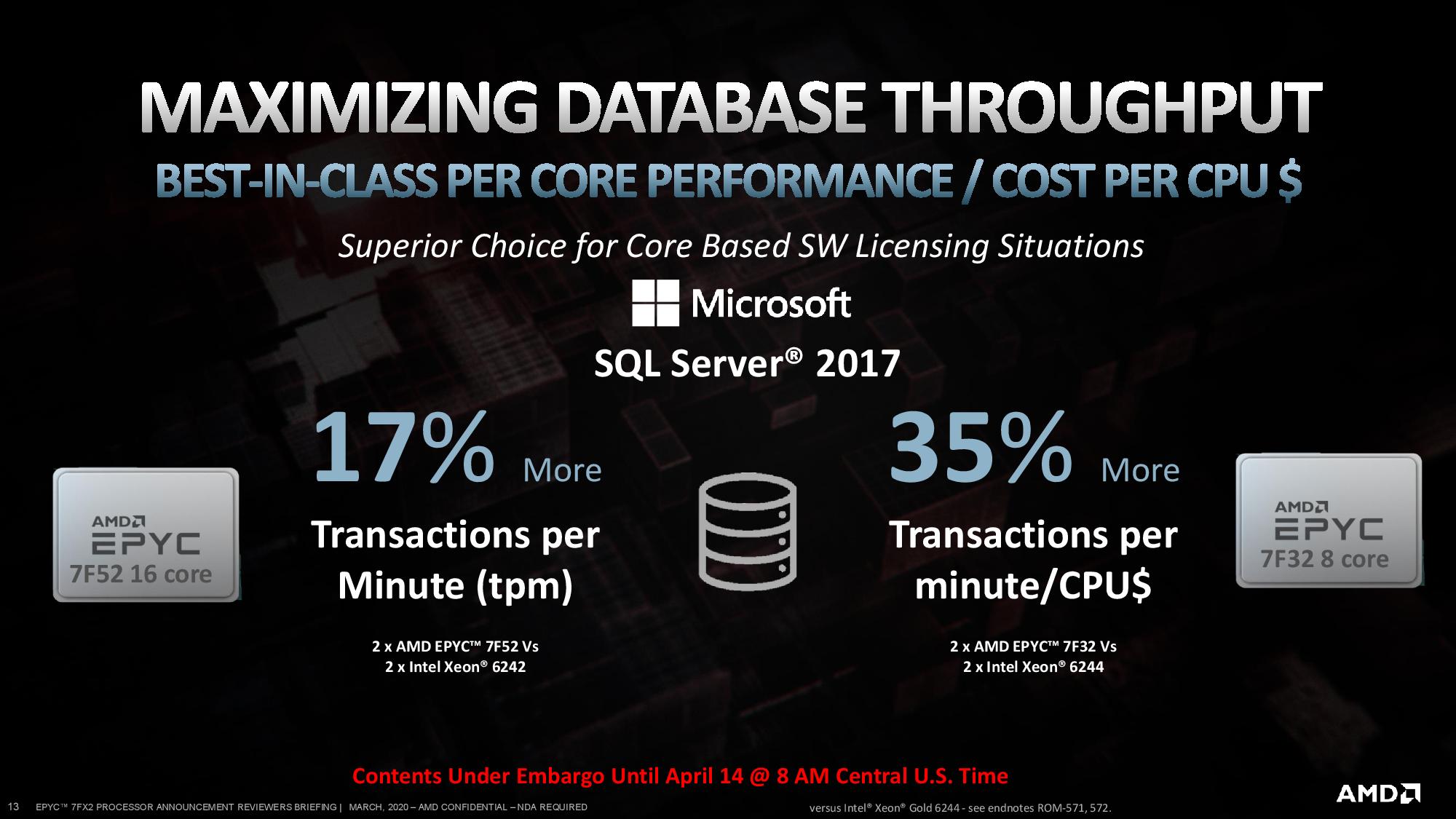

The chips come with higher prices to match the specifications. The 24-core model costs $2,450, $667 more than the comparable EPYC 7402 but still less than Intel's $2,700 Gold 6248R. The 16-core model retails for $3,100, a $2,122 markup over the 7302, which lands it only slightly below Intel's $3,286 Gold 6246R and underlines AMD's confidence that it has the faster chip. The 6246R has the higher boost frequency, but AMD is counting on its 256MB of L3 cache, versus Intel's 35.75MB, to more than make up the difference.
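
Dividing the table's list prices and cache capacities by core count gives a rough per-core comparison against the Intel parts AMD is targeting. This Python sketch uses only the 1K-unit prices and L3 sizes from the table, says nothing about actual per-core performance, and pairs each EPYC model with a same-core-count Xeon by our own choice; `per_core` is an illustrative helper.

```python
from __future__ import annotations

# (model, cores, L3 cache in MB, 1K-unit price in USD), copied from the table
SKUS = [
    ("EPYC 7F72", 24, 192, 2450),
    ("Xeon Gold 6248R", 24, 35.75, 2700),
    ("EPYC 7F52", 16, 256, 3100),
    ("Xeon Gold 6246R", 16, 35.75, 3286),
    ("EPYC 7F32", 8, 128, 2100),
    ("Xeon Gold 6250", 8, 35.75, 3400),
]

def per_core(cores: int, l3_mb: float, price_usd: float) -> tuple[float, float]:
    """Return (list price per core in USD, L3 cache per core in MB)."""
    return price_usd / cores, l3_mb / cores

for name, cores, l3, price in SKUS:
    usd, cache = per_core(cores, l3, price)
    print(f"{name:<16} ${usd:>7.2f}/core  {cache:>5.2f} MB L3/core")
```

By this crude measure, the 7F52 costs less per core than the 6246R ($193.75 vs. about $205) while carrying roughly seven times as much L3 per core (16MB vs. about 2.2MB).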

AMD EPYC Rome 7Fx2 Performance and Systems

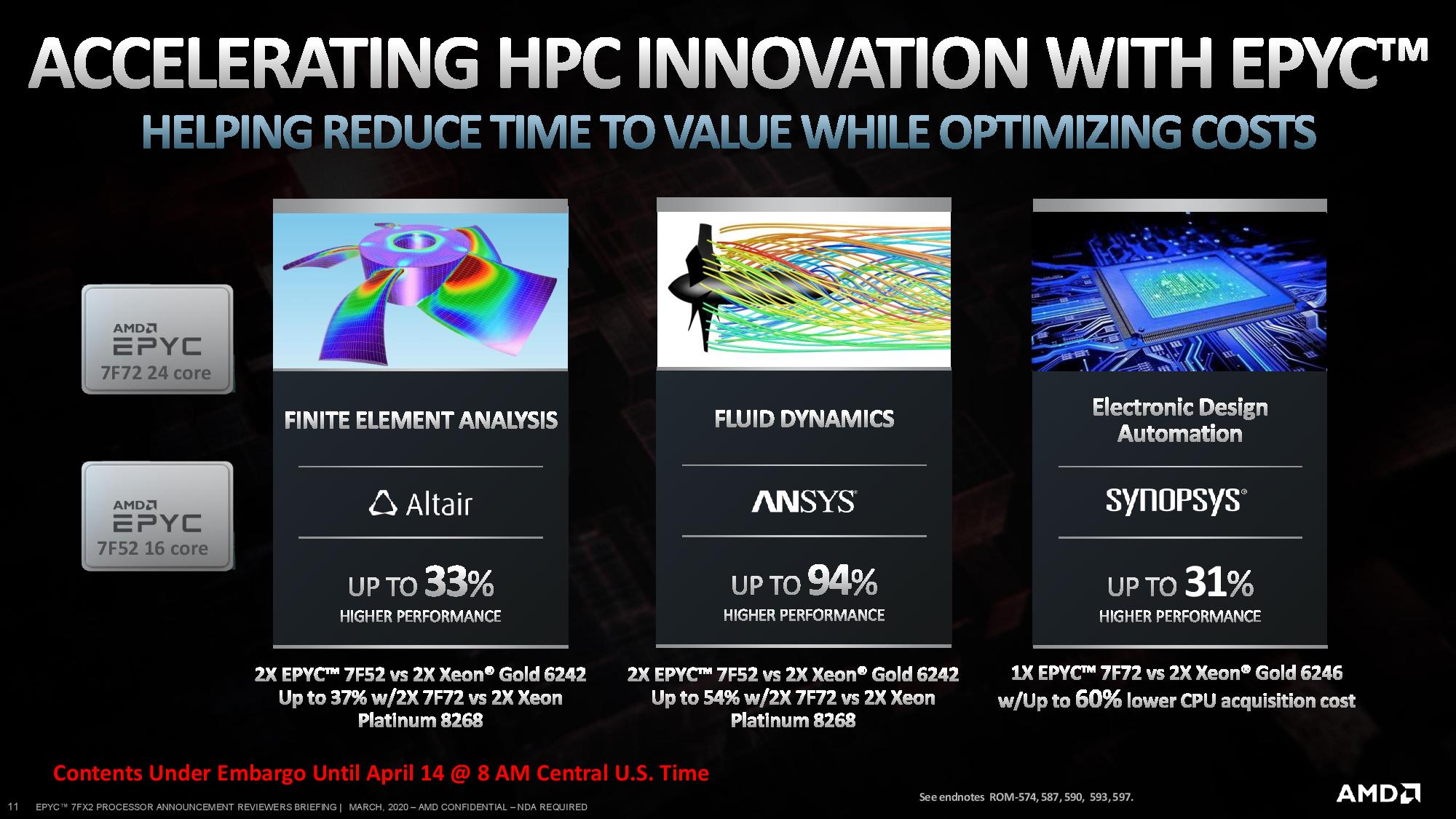

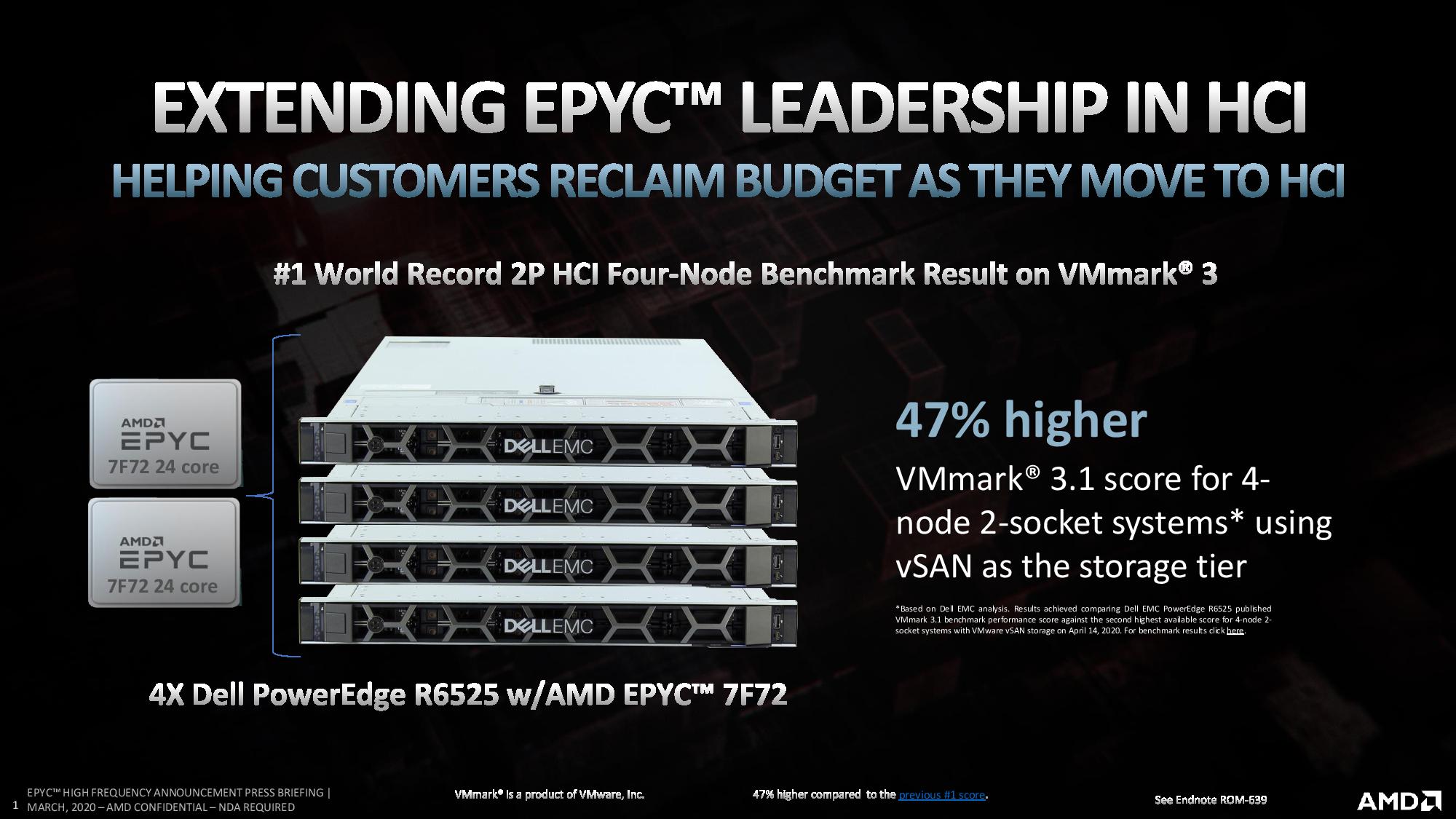

AMD cited performance benchmarks in a few applications that typically come with per-core licensing models. That includes a surprising claim of 31% more performance from a single 7F72 than from two of Intel's Xeon Gold 6246 chips.

Improved performance is great, but it won't enjoy broad uptake without a robust set of qualified solutions from reputable vendors. As such, AMD has been busy building out its ecosystem of platforms. The company now has 110 platforms on the market from the likes of Dell, HPE, and Lenovo, among others, and plans to expand to more than 140 by the end of 2020.

AMD also continues to expand the roster of instances available through cloud service providers, with 106 available now in all flavors, from compute to balanced types, and plans for more than 150 by the end of the year. These instances come from industry leaders like AWS, Microsoft, Google, IBM, Oracle, Tencent, and Baidu.

AMD also announced today that EPYC Rome has been certified on Nutanix platforms from HPE, and that Supermicro has launched its first blade-based EPYC Rome server in its SuperBlade lineup.

AMD's move marks a starkly different tack from the one Intel has taken lately. Intel has cut pricing across the board to placate customers who now have a less expensive x86 alternative, but despite those price adjustments, AMD still holds the value crown, and its expansion into the frequency-optimized category gives it yet another angle of attack on the lucrative server market.

We're working on a full review of the new models as we pit them against Intel's Cascade Lake Refresh models. Stay tuned.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

TCA_ChinChin Glad to see AMD introducing more options into the frequency-optimized areas of the server market. I think sacrificing some efficiency and some of the low pricing to challenge Intel on frequency is worth it, since customers then simply have more AMD options to choose from.

hannibal Intel's room gets tighter and tighter.

Well played, AMD. You most likely woke the sleeping dragon, but that is a good thing. The dragon was sleeping too long...

JayNor Intel's Ice Lake Server is on the roadmap for the fall, with up to 38 cores, 64 PCIe 4.0 lanes, eight channels of DDR4-3200, AVX-512, and DL Boost, according to a leak from last October.

https://hothardware.com/news/intel-10nm-ice-lake-sp-q3-2020-38-cores-76-threads

Paul Alcorn

jthill said: "rotational databases".

Ugh! Thanks. It's supposed to be relational. Fixed.

jeremyj_83

JayNor said: Intel's Ice Lake Server is on the roadmap for the fall, with up to 38 cores, 64 PCIE4 lanes, 8 channels DDR4 3200, AVX512, dlboost according to leak from last Oct.

Just in time to go up against the Epyc 7003 series. I can just about guarantee you that no one will buy a 38-core CPU for a vSphere environment due to the licensing changes. That will make that CPU pretty much irrelevant, much the same way as AMD's 48-core CPU is pretty irrelevant due to current vSphere licensing.

spongiemaster 240W TDP for a 16-core CPU? 180W for an 8-core on the most advanced 7nm node? Not so easy to hit the top-end clock speeds that Intel has managed on old 14nm, is it?

msroadkill612

spongiemaster said: 240W TDP for a 16 core CPU? 180W for an 8 core on the most advanced 7nm node? Not so easy to hit those topend clock speeds that Intel has done on old 14nm is it?

I think it's normal for clocks to REDUCE with node?

bit_user

spongiemaster said: 240W TDP for a 16 core CPU? 180W for an 8 core on the most advanced 7nm node? Not so easy to hit those topend clock speeds that Intel has done on old 14nm is it?

Apples vs. oranges.

Compared with desktop Ryzens, Epyc's extra cache, PCIe lanes, memory controllers, and onboard south bridge logic all burn additional power. Compared with Intel Cascade Lake, the Zen 2 cores are architecturally wider (in addition to the other stuff I just mentioned).

Basically, don't get distracted by shiny clock speed numbers. Just pay attention to the actual benchmarks. You can find loads more here:

https://www.phoronix.com/scan.php?page=article&item=amd-epyc-7f52

bit_user

msroadkill612 said: I think it's normal for clocks to REDUCE with node?

Perhaps it's becoming that way. I think we're getting into territory where leakage is increasing faster than it can be offset by the other benefits of ever-smaller nodes.

Or maybe the issue is more that Zen 2 prioritized IPC over clock speed and architecturally can't clock as high.