AMD’s RX 6000 GPUs to Boost Perf With Ryzen 5000 CPUs via Smart Access Memory

Big Navi + Ryzen 5000 = Win

AMD’s Radeon RX 6000 series launch revealed one exciting and somewhat unexpected new technology: Radeon RX 6000 GPUs will operate in tandem with AMD’s Ryzen 5000 processors (with the caveat that you need a 500-series motherboard) through a new Smart Access Memory feature, which AMD says will boost gaming performance by enhancing data transfer between the CPU and GPU.

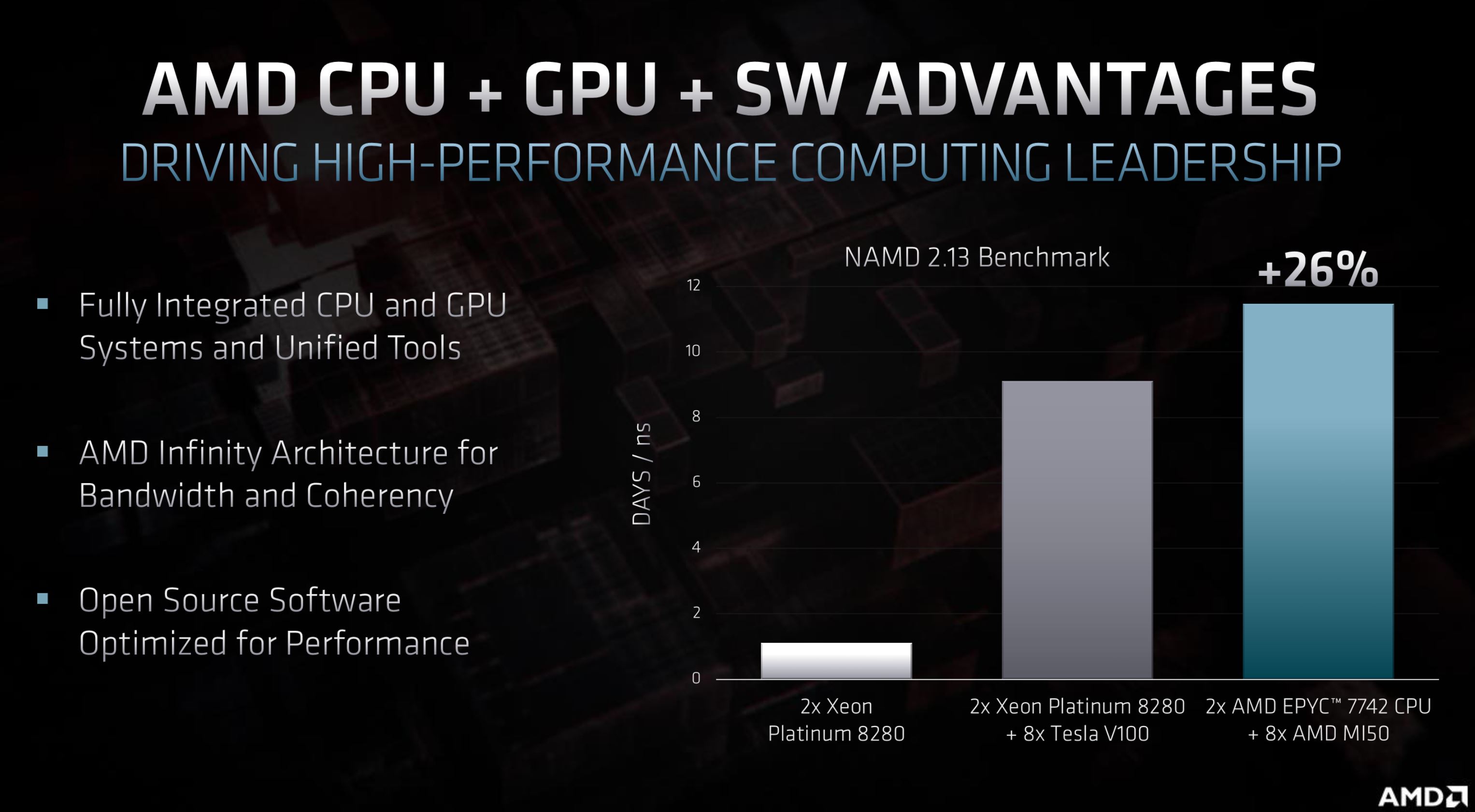

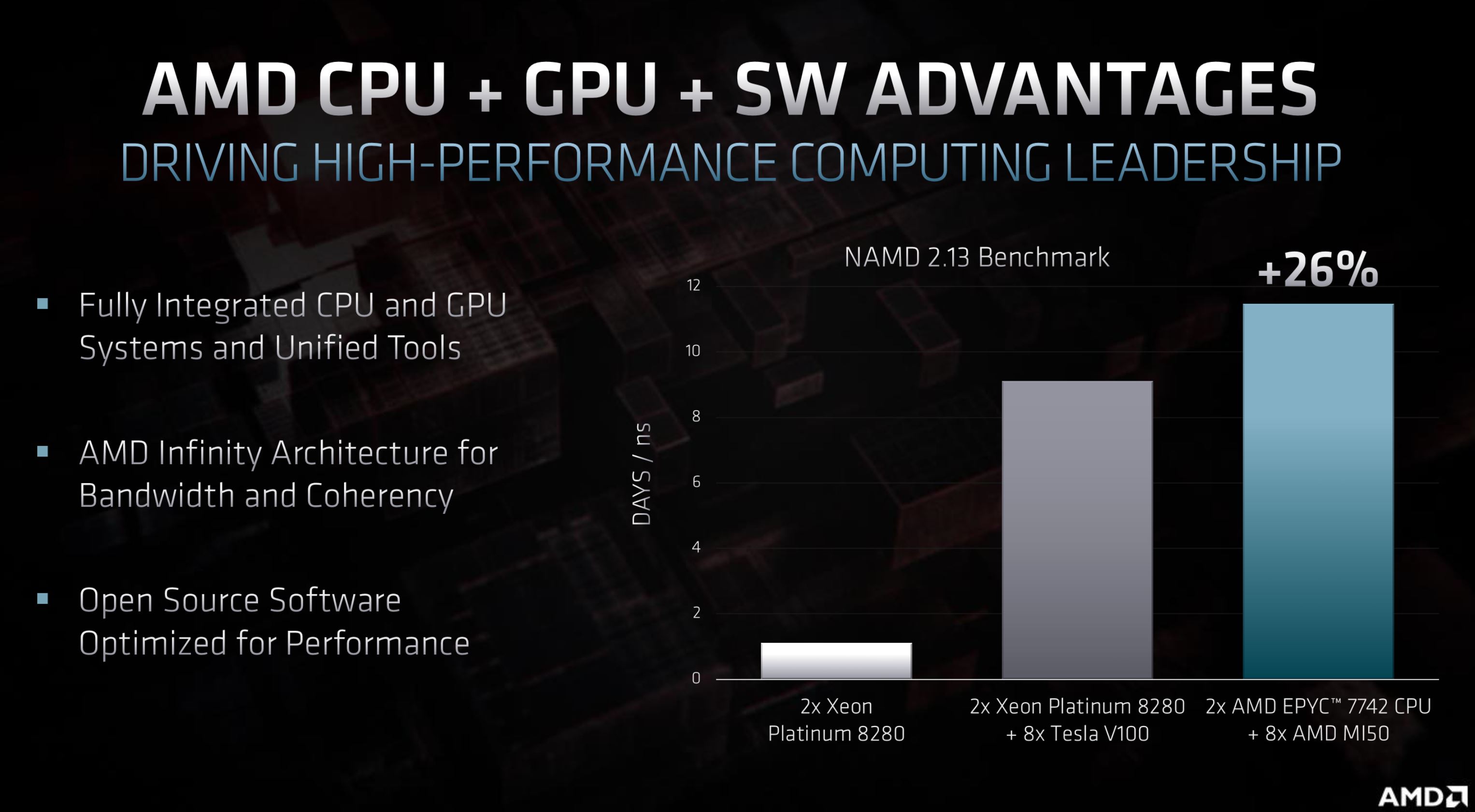

The announcement brings one of AMD’s key advantages into full focus: AMD is the only company that produces x86 processors and has a line of discrete gaming GPUs currently on the market. That affords some advantages, specifically in terms of optimizing both the GPU and CPU to offer the best possible performance when they operate in tandem. But now AMD is taking the concept to an entirely new level with its Smart Access Memory.

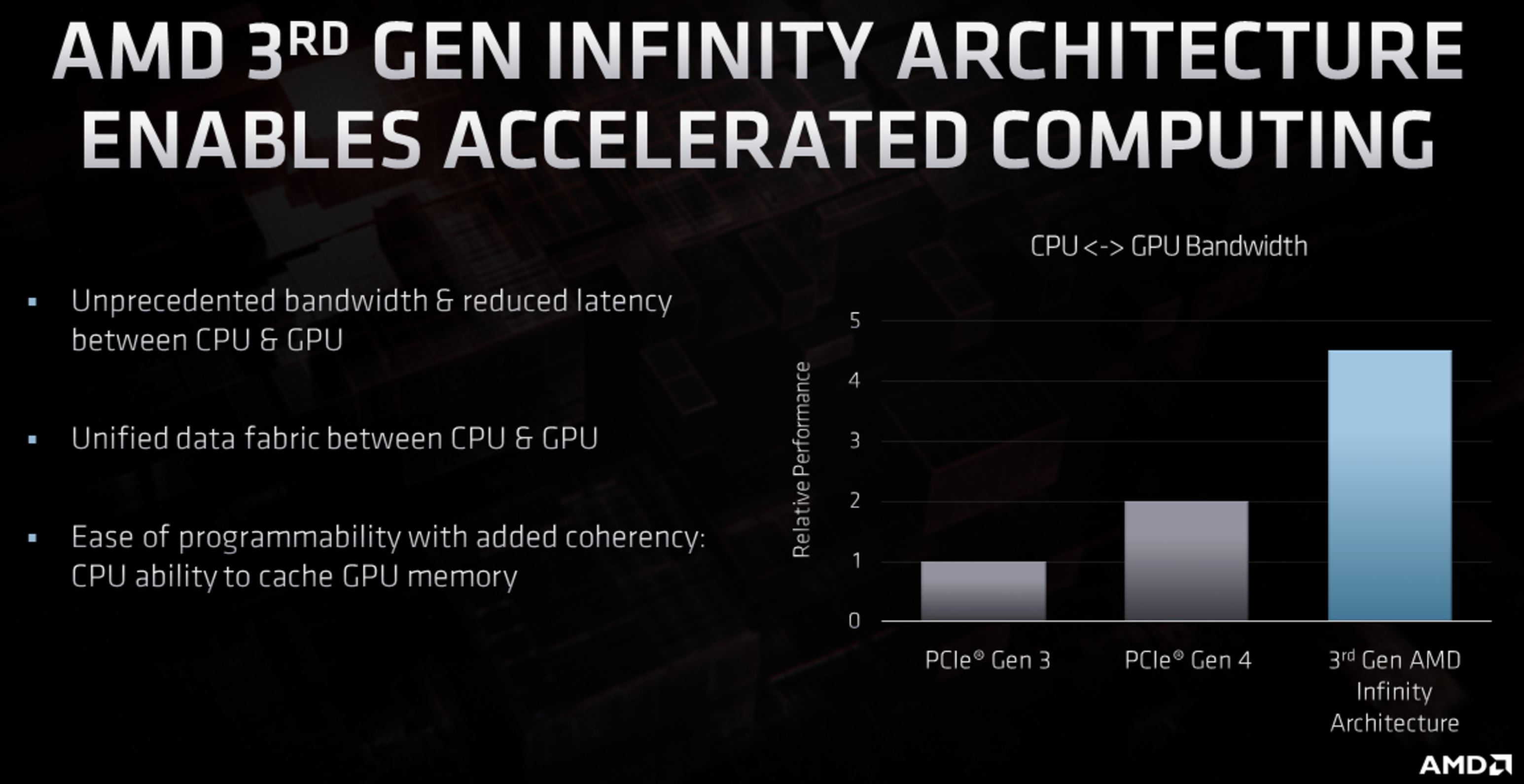

AMD isn’t sharing the new tech's full details, but we do know the broad strokes. By enabling the Smart Access Memory feature in the Radeon RX 6000’s vBIOS and the motherboard BIOS, the CPU and GPU will gain unprecedented full access to each other’s memory, which maximizes data transfer performance between the CPU and the GPU’s on-card 16GB of VRAM.

As a basic explainer (we’ll learn more details at an upcoming Tech Day), AMD says that the CPU and GPU are usually constrained to a 256MB ‘aperture’ for data transfers. That limits game developers and requires frequent trips between the CPU and main memory if the data set exceeds that size, causing inefficiencies and capping performance. Smart Access Memory removes that limitation, thus boosting performance due to faster data transfer speeds between the CPU and GPU.
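To put the aperture limit in perspective, here is a minimal back-of-the-envelope sketch in Python. It assumes, purely for illustration, that the CPU reaches VRAM through a fixed-size window that has to be repositioned to cover a larger data set. The helper name `windows_needed` and the sliding-window model are our own simplification, not AMD’s description of how its driver works.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3


def windows_needed(data_bytes: int, aperture_bytes: int = 256 * MIB) -> int:
    """Return how many aperture-sized windows are needed to cover data_bytes.

    Hypothetical helper for illustration only; real drivers are more involved.
    """
    if data_bytes < 0 or aperture_bytes <= 0:
        raise ValueError("sizes must be non-negative, aperture must be positive")
    # Ceiling division without floating point.
    return -(-data_bytes // aperture_bytes)


# Covering all 16GB of VRAM through a 256MB aperture.
print(windows_needed(16 * GIB))  # 64

# With the whole 16GB visible at once, a single mapping is enough.
print(windows_needed(16 * GIB, aperture_bytes=16 * GIB))  # 1
```

Under this simplified model, the full 16GB of VRAM takes 64 separate 256MB windows to cover, which is the kind of overhead a full-size mapping avoids.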

It’s a simple equation: moving data generally costs more energy than performing compute operations on it, so streamlining the process results in improved performance and higher power efficiency.

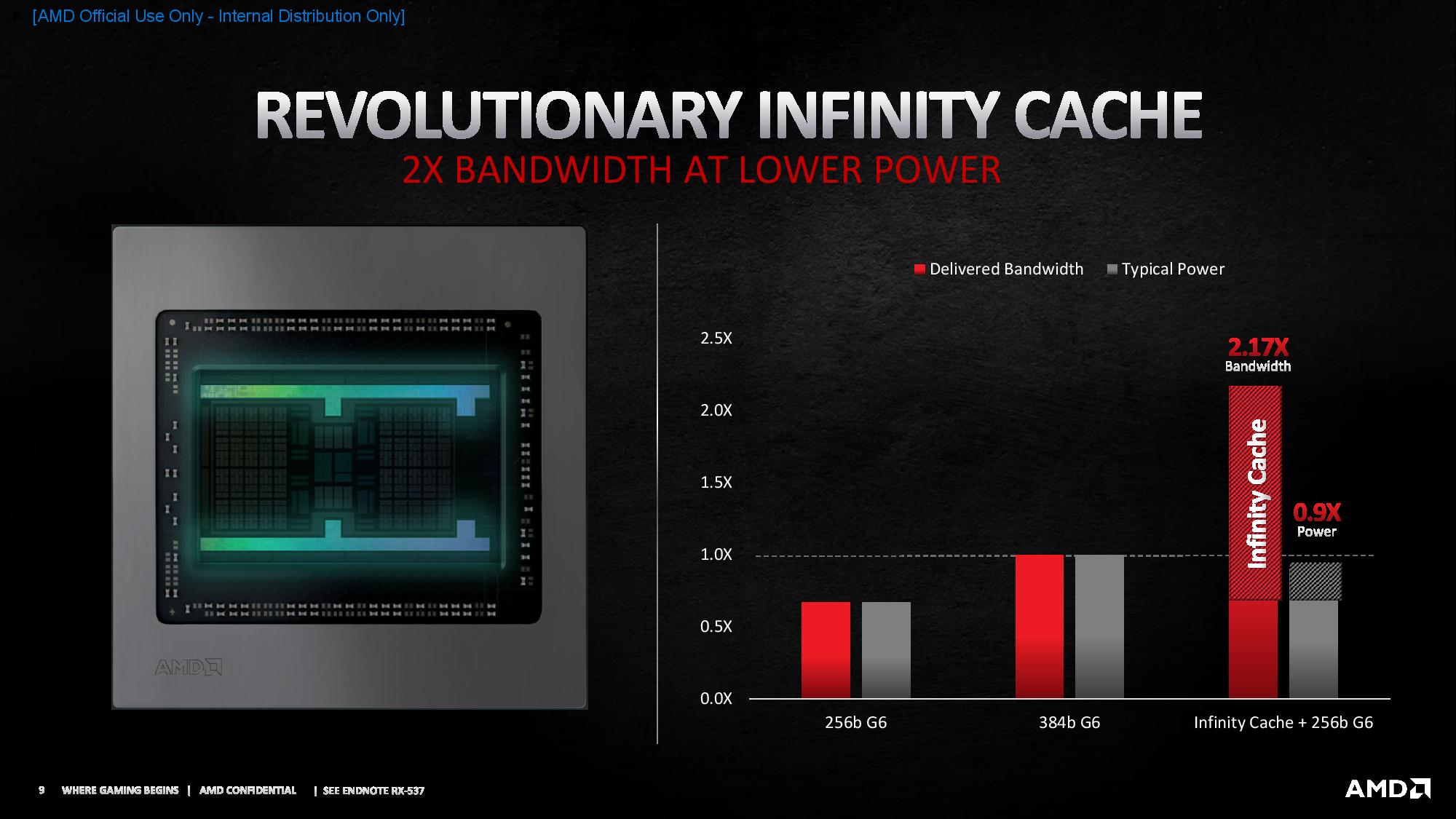

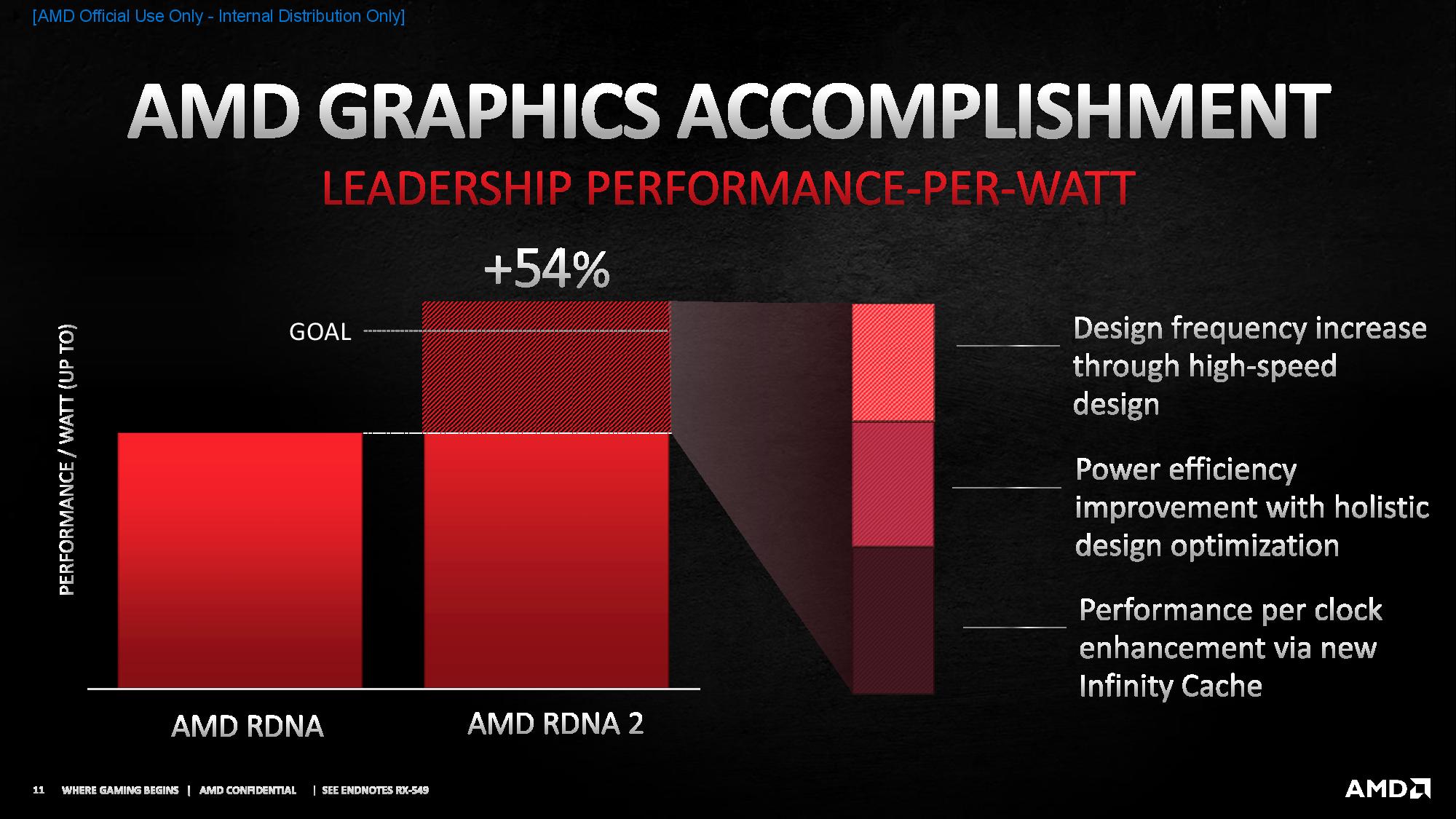

Pairing this enhanced data transfer performance with the new 128MB Infinity Cache could yield a massive boost to throughput between the CPU and GPU. AMD also isn’t sharing the fine-grained details of its Infinity Cache, but we do know that the 128MB cache essentially serves as a large on-die frame buffer that is transparent to developers. It isn’t clear if the new cache is implemented in an L3 or L4-esque manner, but AMD does say the high-speed, high-density memory holds more data close to the compute units, thus increasing hit rates, which then boosts performance-per-clock.

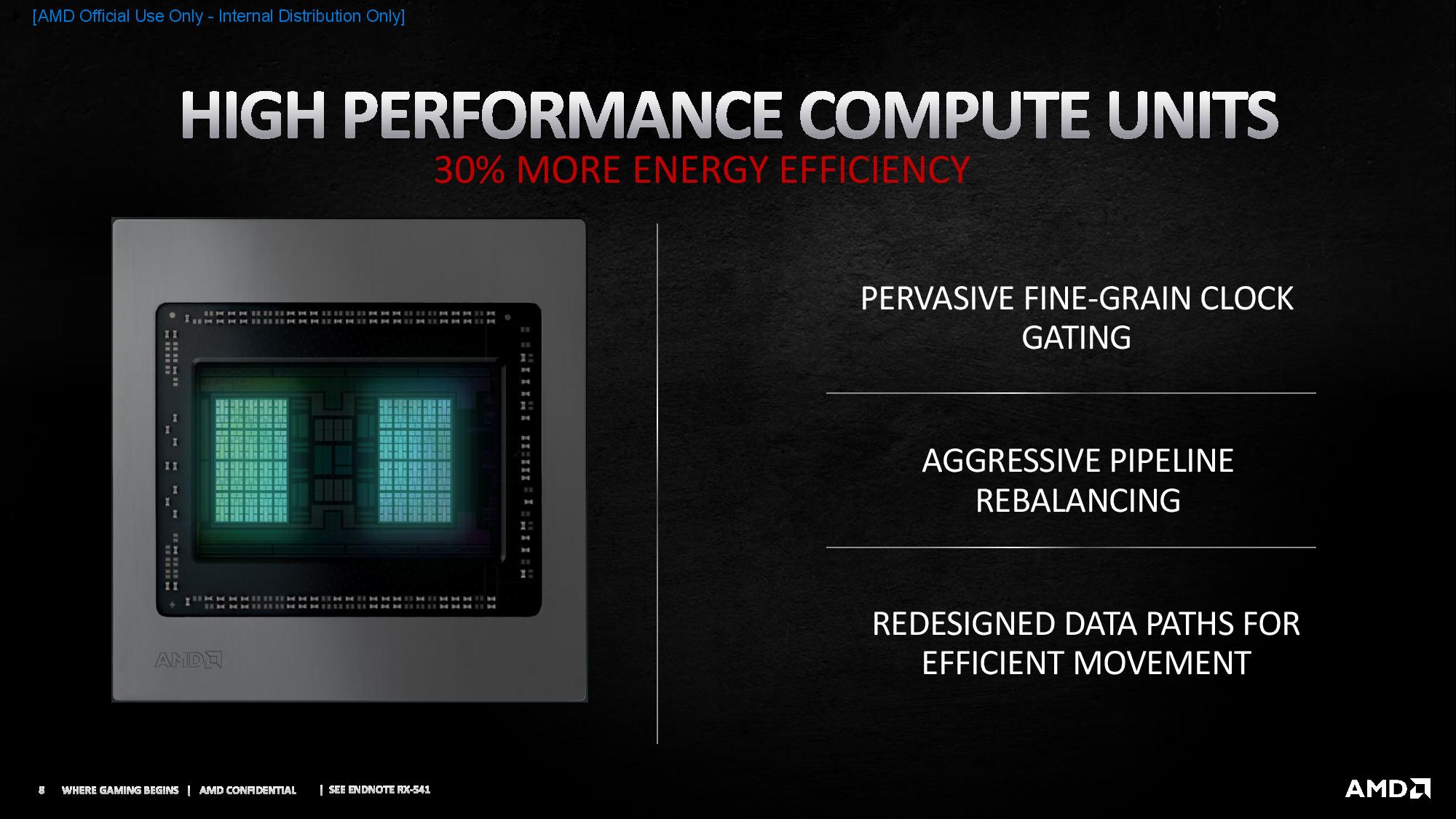

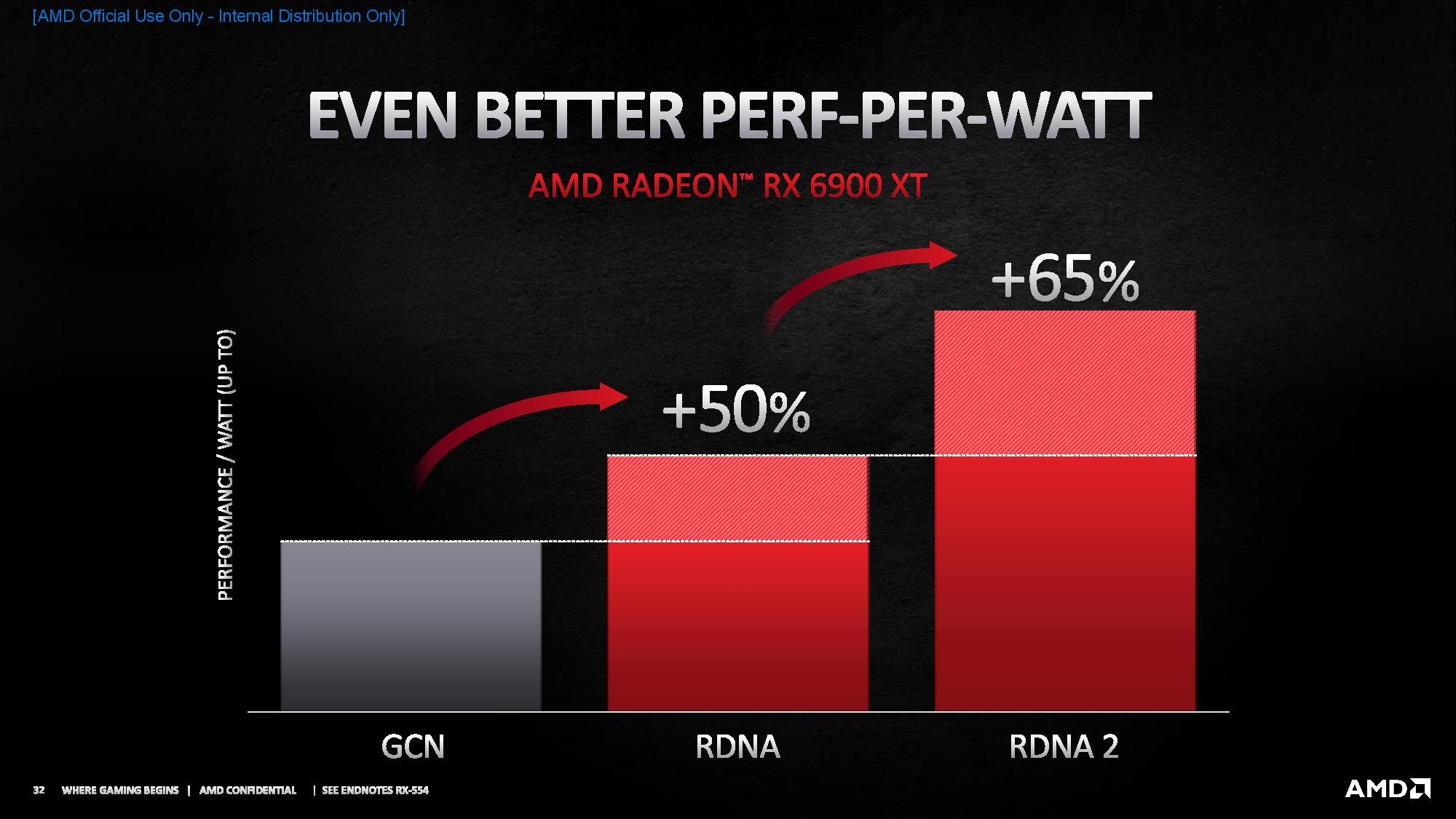

The new Infinity Cache leverages the GPU's redesigned data paths to aggressively maximize performance while minimizing data movement and power within the GPU. Overall, AMD says the Infinity Cache equates to a 10% increase in power efficiency and more than doubles the bandwidth (a 117% increase, or roughly 2.17x) at lower power than traditional memory.


Pairing that large cache with the Smart Access Memory feature, and using it as a landing pad for data flowing in from the CPU, should yield big throughput benefits. Surprisingly, AMD tells us that the Infinity Cache is based on the Zen CPU’s L3 cache design, which makes it a fruit of the cross-pollination between AMD’s CPU and GPU teams. AMD feels the Infinity Cache is a better engineering investment than going with a more expensive solution, like using wider and faster memory (e.g., HBM).

AMD says the Infinity Cache boosts performance-per-clock scaling as frequency increases largely because the GPU is less constrained by external memory bandwidth limits.
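One way to see why higher hit rates ease the external memory bottleneck is a simple traffic model. The sketch below assumes that only cache misses go out to VRAM and that the cache itself is never the limiting factor. The function name and the 500 GB/s and hit-rate figures are hypothetical values chosen for illustration, not AMD’s numbers.

```python
def sustainable_demand(vram_bandwidth_gbs: float, hit_rate: float) -> float:
    """Total memory demand (GB/s) the GPU can sustain before VRAM saturates.

    Toy model: a fraction `hit_rate` of requests is served from the on-die
    cache, so only (1 - hit_rate) of the demand reaches external memory.
    """
    if not 0.0 <= hit_rate < 1.0:
        raise ValueError("hit_rate must be in [0, 1)")
    return vram_bandwidth_gbs / (1.0 - hit_rate)


# Hypothetical 500 GB/s external memory, swept across a few hit rates.
for hit_rate in (0.0, 0.25, 0.5, 0.75):
    demand = sustainable_demand(500.0, hit_rate)
    print(f"hit rate {hit_rate:.0%}: up to {demand:.0f} GB/s of demand")
```

In this model, every extra point of hit rate raises the ceiling on how much data the compute units can consume, which is consistent with AMD’s claim that the GPU scales better with clock speed when it is less constrained by external memory bandwidth.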

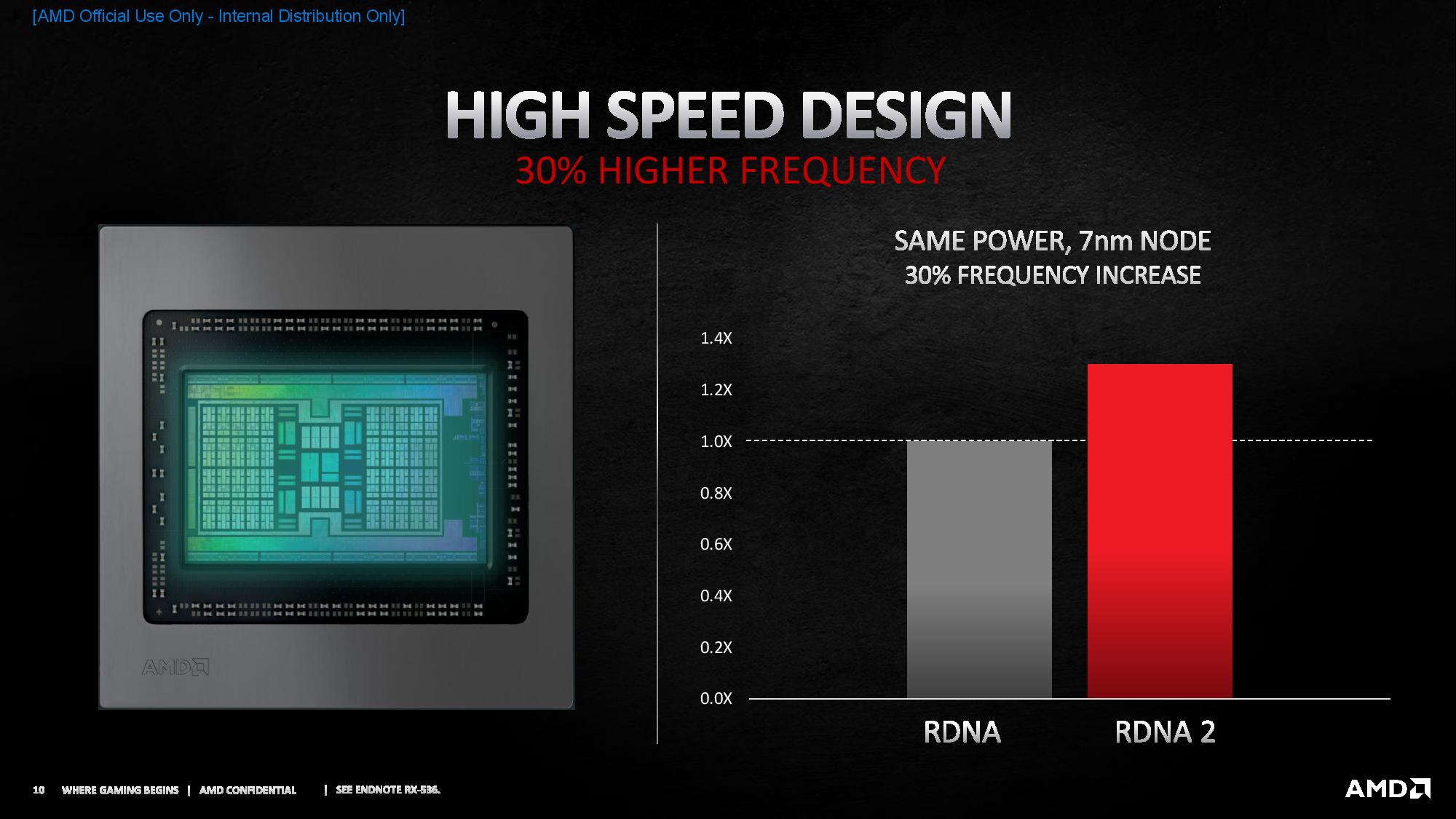

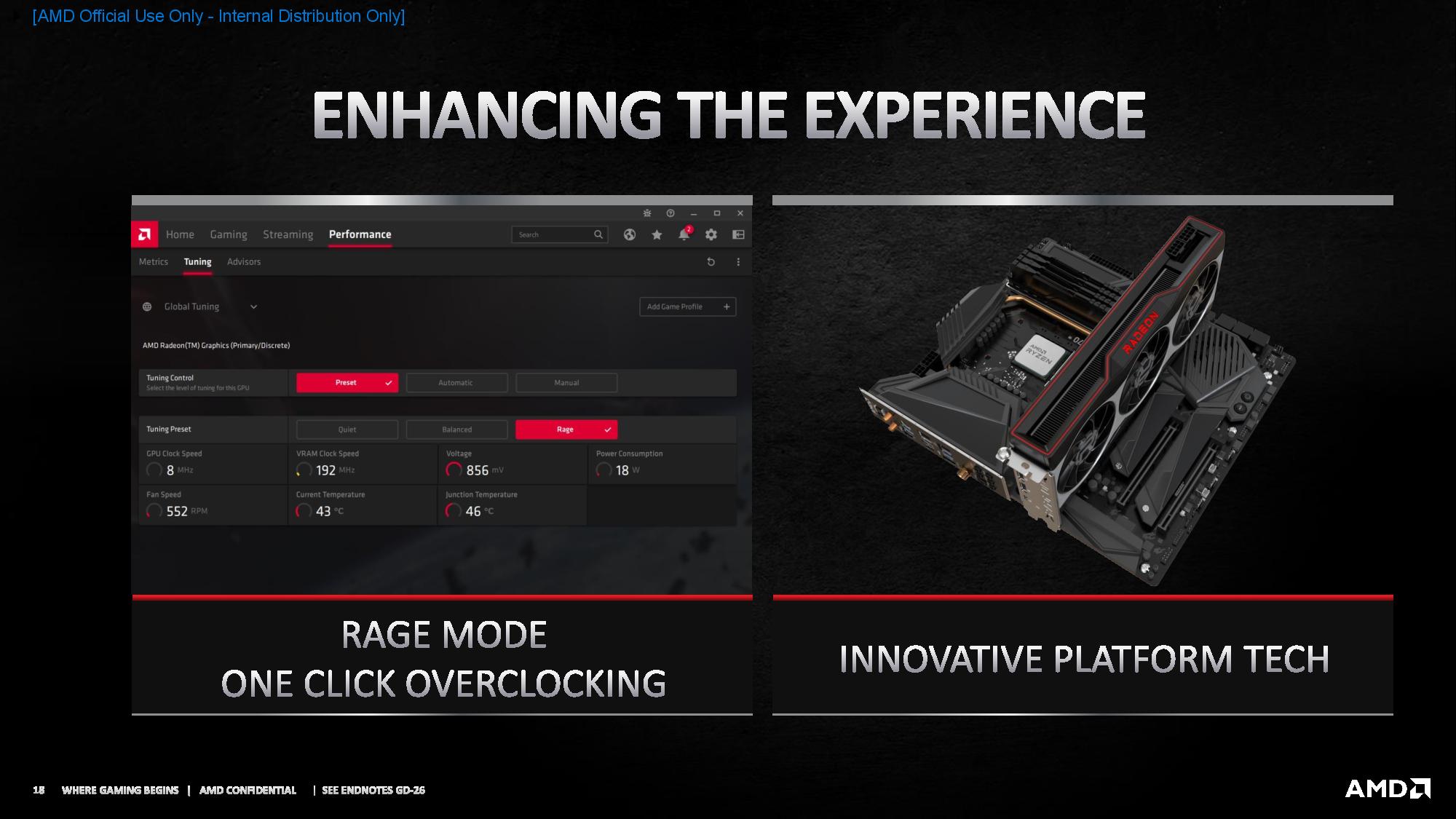

Unlocking better performance scaling with higher clock speeds means one big thing to enthusiasts: Bigger gains from overclocking, which is where AMD’s new Rage Mode auto-overclocking software comes in.

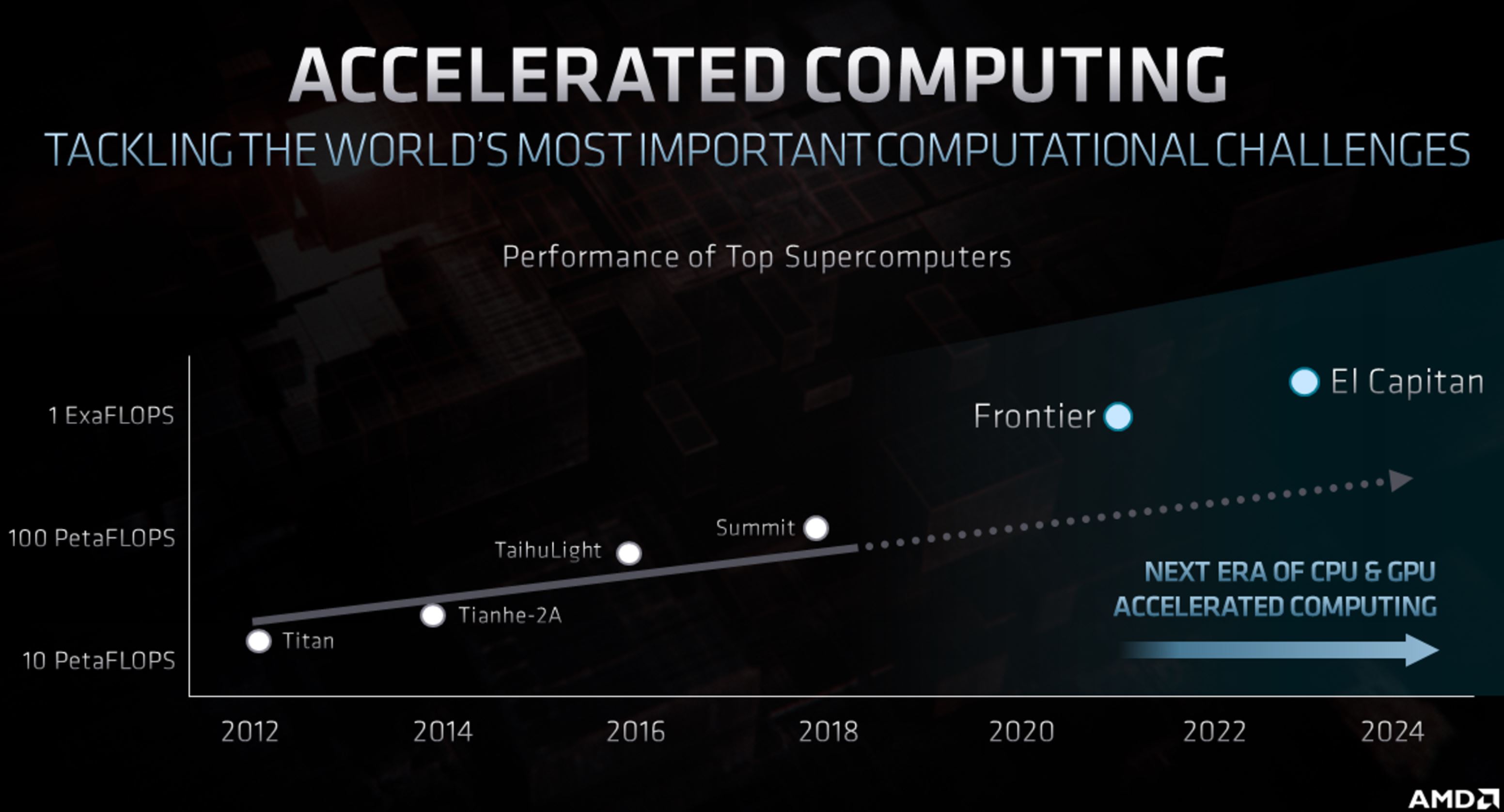

AMD sees the new Smart Access Memory and Infinity Cache tech as meeting the growing need for data throughput driven by the shift to higher resolutions, like 4K gaming. The Infinity Cache also boosts ray tracing performance considerably, as more of the working data set is kept closer to the compute units to ‘feed the beast,’ as it were.

AMD’s Radeon RX 6000 will also support the DirectStorage API, which reduces game load times, and it’s possible that this latency-reducing storage tech could benefit from the Smart Access Memory feature, too, giving AMD yet another advantage. But we’ll have to wait to learn more details.

AMD says that game developers will have to optimize for the Smart Access Memory feature, which means it could take six to twelve months before we see games optimized for the new tech. The company does expect to benefit from some of the shared performance tuning efforts between PC and the new consoles, namely the Sony PS5 and Microsoft Xbox Series X.

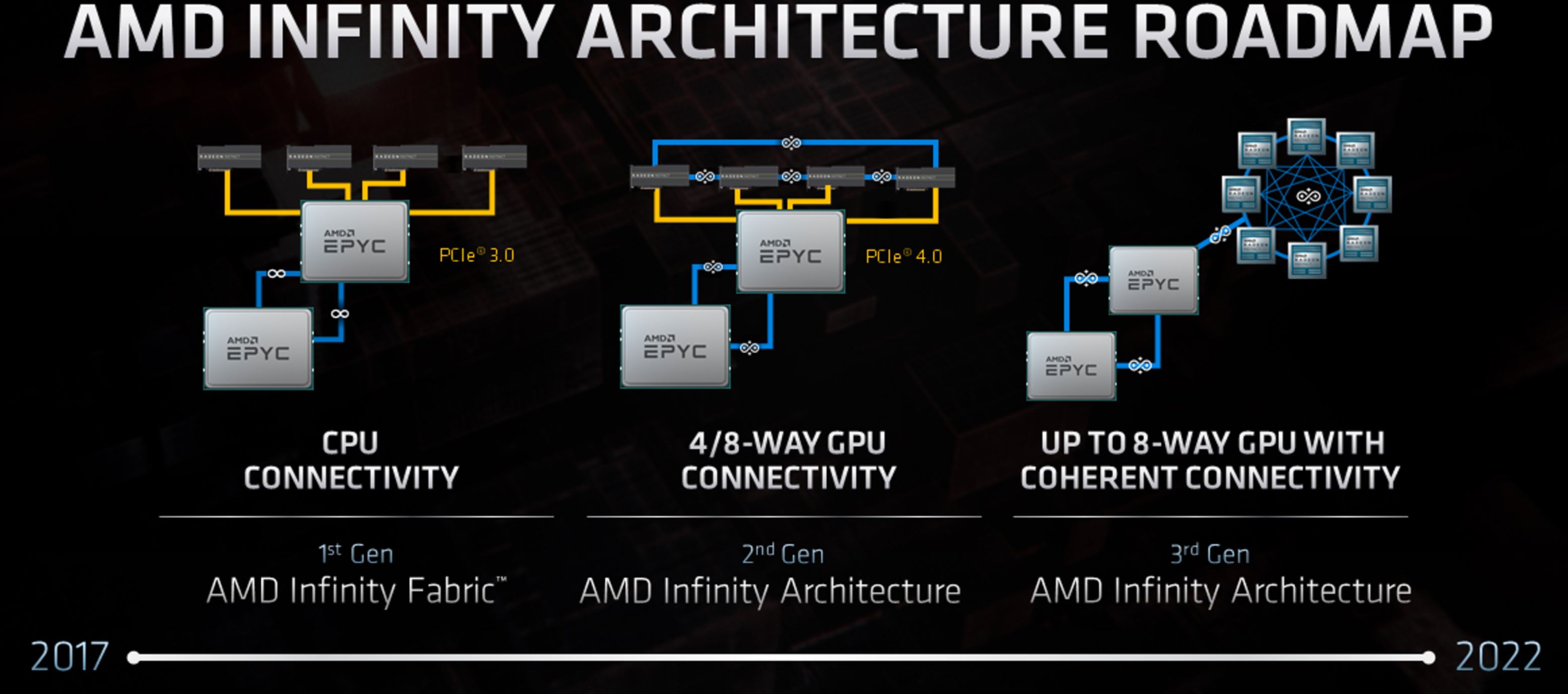

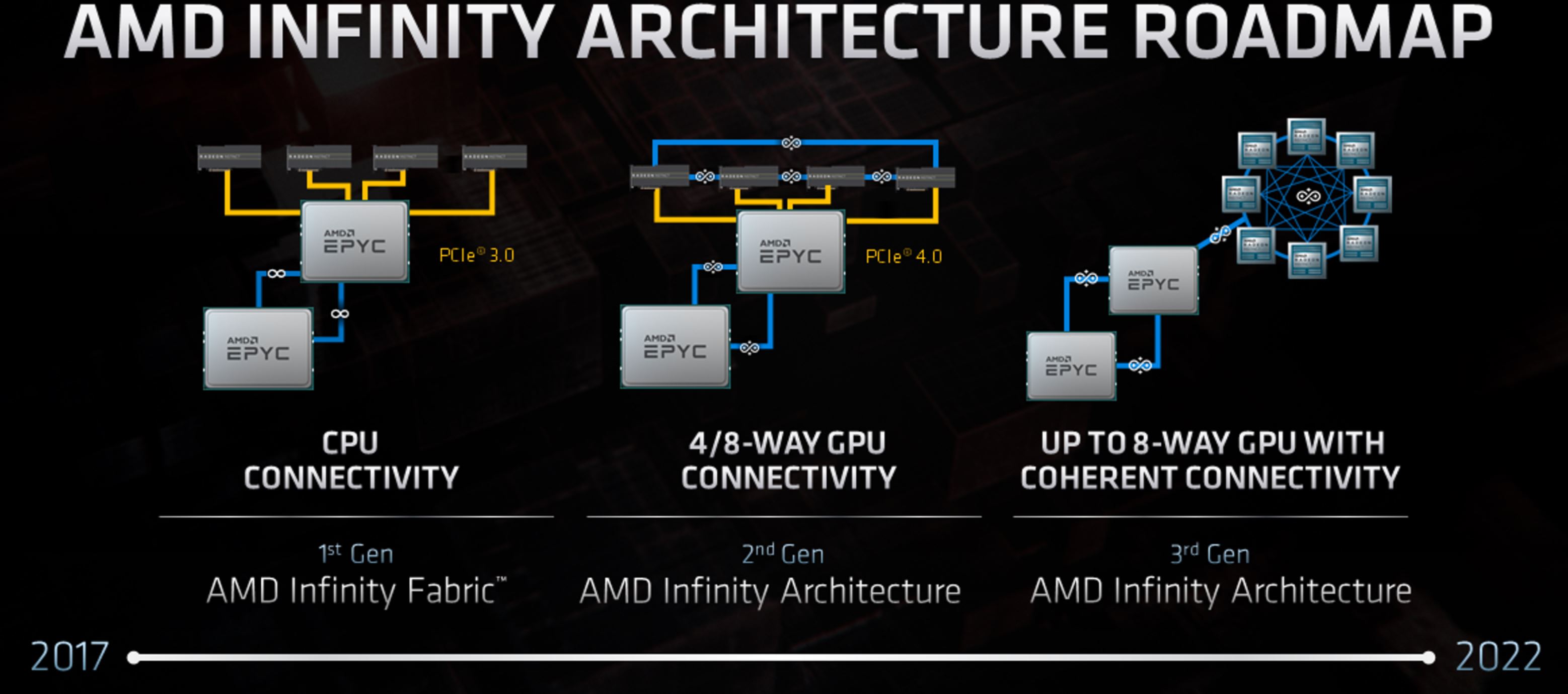

Naturally, developer support will hinge on how easy it is to enable the new feature, but if past indicators are any guide, it could actually simplify coding. AMD’s new Smart Access Memory feels very similar to the Infinity Fabric 3.0 tech on AMD’s enterprise products, which enables cache coherency between the CPU and GPU. Leveraging cache coherency, like the company does with its Ryzen APUs, unifies the data and provides a "simple on-ramp to CPU+GPU for all codes."

AMD outlined that tech in a presentation last year, saying that shared memory allows the GPU to access the same memory the CPU uses, thus reducing and simplifying the software stack. AMD also showed examples of the code required to use a GPU without unified memory and contrasted them with a unified memory architecture, which alleviates much of the coding burden. It will be interesting to learn if there are any similarities between the two approaches.
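To give a feel for that contrast, here is a toy Python sketch. It is not AMD’s example code and involves no real GPU: the `ToyDevice` class and `scale_in_place` function are hypothetical stand-ins. The discrete model keeps a private copy of the data and needs explicit transfers in both directions, while the unified model lets the "kernel" work directly on the buffer the CPU already owns.

```python
from array import array


class ToyDevice:
    """Hypothetical stand-in for a GPU with private memory (no real GPU used)."""

    def __init__(self):
        self.memory = {}
        self.copies = 0  # counts explicit host<->device transfers

    def upload(self, name, host_data):
        self.memory[name] = array("d", host_data)  # host-to-device copy
        self.copies += 1

    def download(self, name):
        self.copies += 1
        return array("d", self.memory[name])  # device-to-host copy

    def scale(self, name, factor):
        buf = self.memory[name]
        for i in range(len(buf)):
            buf[i] *= factor


def scale_in_place(buf, factor):
    """'Kernel' for the unified model: operates on the caller's own buffer."""
    for i in range(len(buf)):
        buf[i] *= factor


# Without unified memory: allocate on the device, copy in, compute, copy out.
host = array("d", [1.0, 2.0, 3.0])
device = ToyDevice()
device.upload("x", host)
device.scale("x", 2.0)
result_discrete = device.download("x")

# With unified memory: CPU and "GPU" share one buffer, so no copies are needed.
shared = array("d", [1.0, 2.0, 3.0])
scale_in_place(shared, 2.0)

print(list(result_discrete), "explicit copies:", device.copies)
print(list(shared), "explicit copies: 0")
```

Both paths produce the same result, but the unified version drops the allocation and transfer bookkeeping, which is the kind of simplification AMD’s presentation points to.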

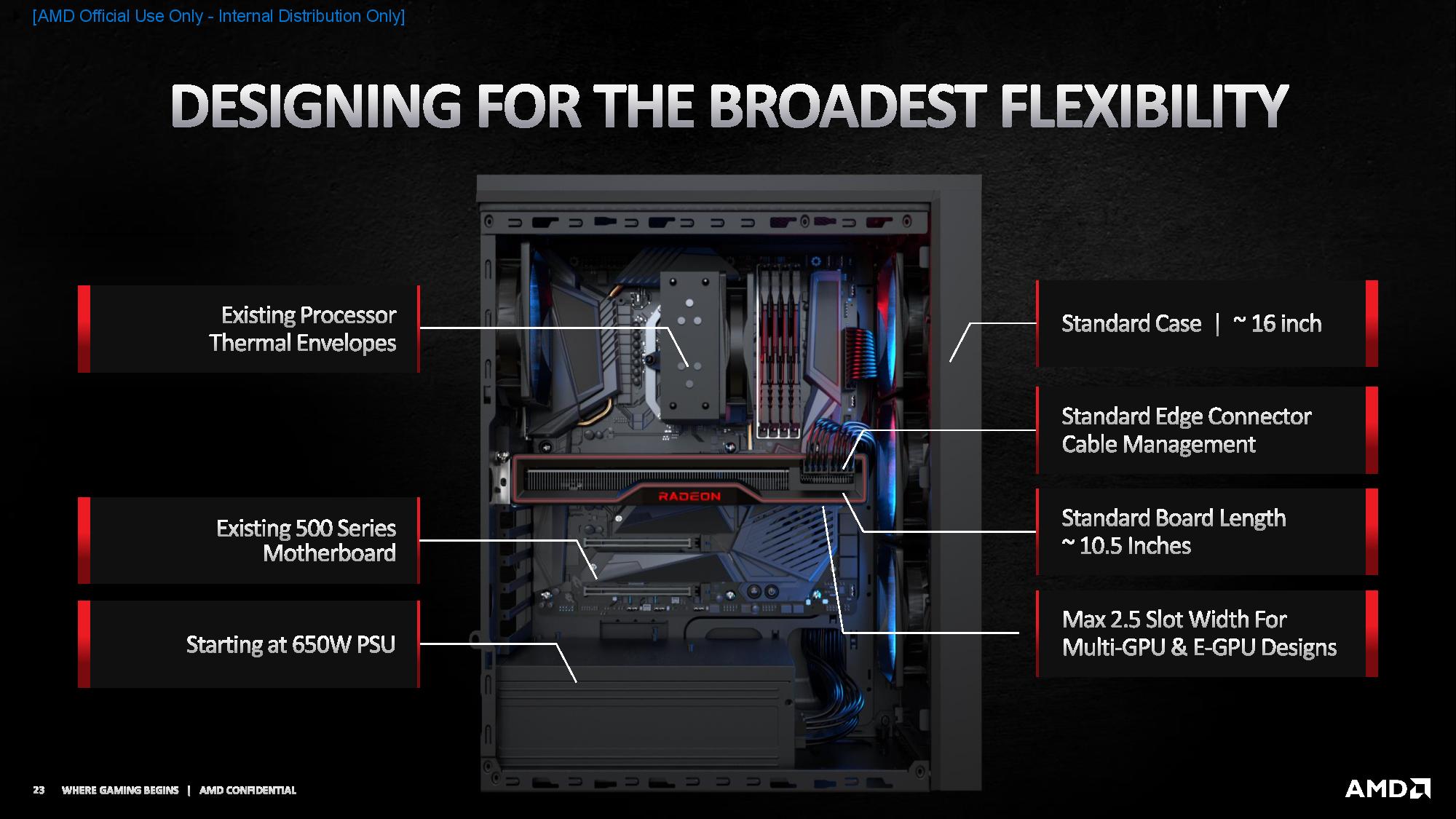

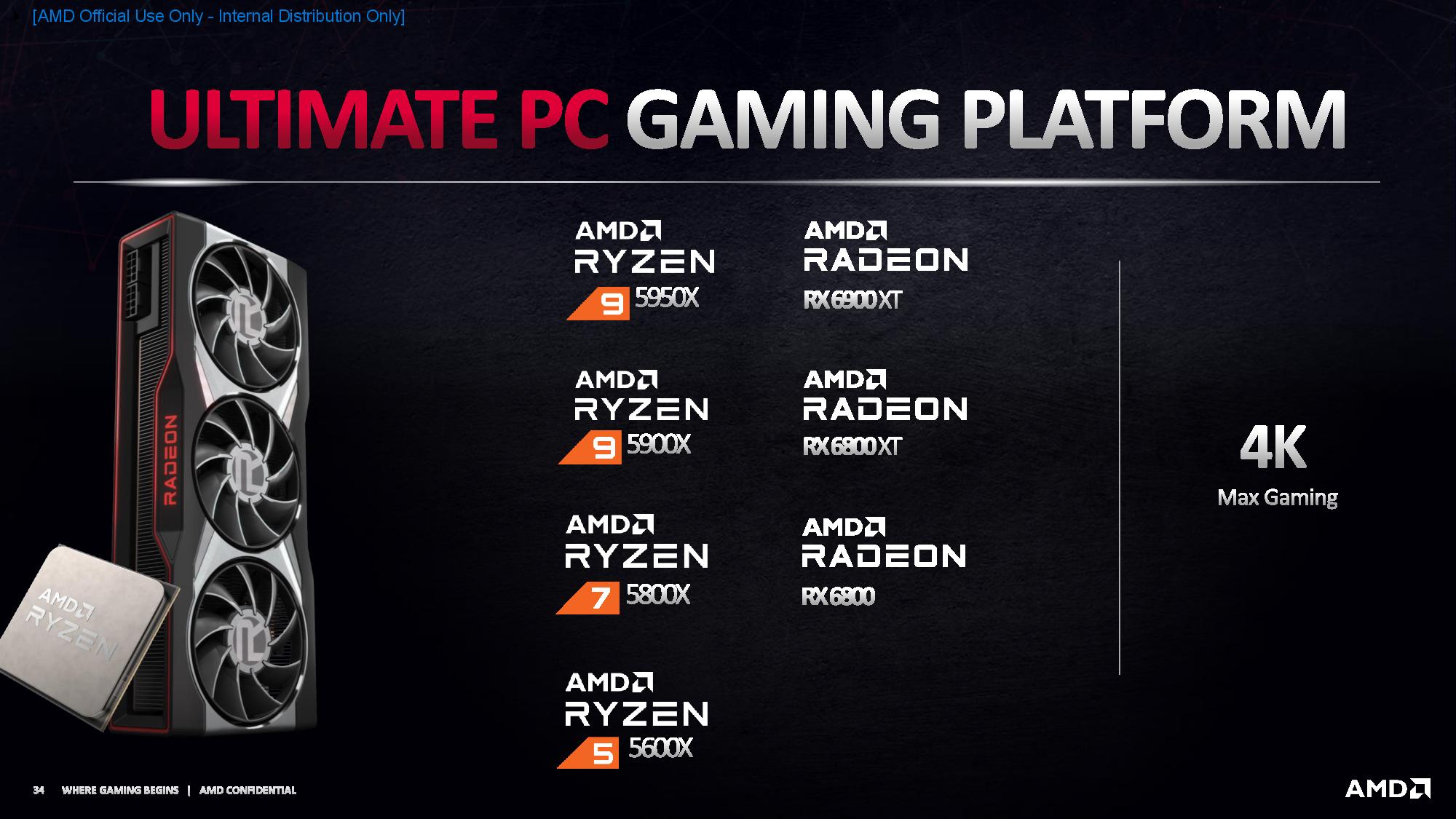

You’ll need three ingredients to unlock the Smart Access Memory feature: A Radeon RX 6000 GPU (all models support it), a Ryzen 5000 processor, and a standard 500-series motherboard. We asked AMD if the tech will come to its previous-gen CPUs and motherboards, but the company merely tells us that it would provide updates at a future time if there is more enablement.

In the end, whether or not this tech results in real-world advantages remains to be seen: Developers will have to support Smart Access Memory. We still have a lot to learn from AMD about this new tech, including whether there are any parallels with other approaches like Nvidia's NVLink. However, it does show that AMD's inherent advantage of producing both CPUs and gaming GPUs gives it a leg up on the competition, enabling features that its competitors, like Nvidia, probably can't match in the consumer market.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


GregoryDude: Exciting times to be a PC enthusiast. I love what AMD is doing and I love Nvidia's continued innovation. Competition, regardless of what camp you side with, makes all of us consumers the beneficiaries. Now we need Intel to get their act together and the PC environment will be balanced and more glorious!

atomicWAR: Finally AMD is going to try and compete at the high end GPU market with lower prices and mostly equivalent feature sets. The question is can their drivers compete? They are the number one reason after performance I have not stayed invested in an AMD/ATI GPU since the HD 3000 series. I have dipped my toe in now and again since then but the drivers always cause me to sell off or gift my AMD GPU parts to other people...Here's to hoping that is all about to change!!

gggplaya (replying to atomicWAR): I think their drivers and problems with crashing will get much better considering that Xbox Series X/S essentially uses Windows 10 core for its operating system and will be using DirectX 12 Ultimate exactly the same as PC, and NAVI is the same GPU architecture. So if a game is developed to run on console and PC, it'll likely be fairly solid with a NAVI GPU.

atomicWAR (replying to gggplaya): Except that didn't happen with their drivers after the launch of XB1/PS4/Wii U. So on the driver front, if anything, I am even more skeptical than I was last generation. They didn't fix things last time; they actually got worse since then, IMHO. Just look at the 5700 XT launch for confirmation. I hope all is well, as we really need AMD competitive in the GPU market. Nvidia's antics have only gotten worse over the last 6 years...

larsv8 (quoted by gggplaya): I thought I read somewhere that the top card will not be made by AIBs, does anyone know?

gggplaya (replying to atomicWAR): I'm not sure what you're talking about. XB1 and PS4 aren't using NAVI graphics architecture. Neither did PC until the 5xxx series graphics cards launched. No one was developing for NAVI at the time. Consoles were on GCN architecture and I haven't had any more issues with my Vega card than I did with my GeForce 970.

Next month, Xbox Series X/S and PS5 will both launch with NAVI and within months millions of gamers will be using NAVI. Up until now, NAVI sales of the 5xxx cards haven't been that great. So there hasn't been a compelling reason to optimize for NAVI. After next month, there will be.

colonie: Do you guys think with SMA there will be a difference in performance for B550 vs. X570?

Maybe PCIe over CPU (B550) vs PCIe over PCH (X570) could show some improvements because of the "direct communication" between CPU and GPU.

What do you think?

atomicWAR: @gggplaya No, not Navi, and yes, GCN for the XB1/PS4/Wii U. But they did have GCN parts and drivers were constantly an issue. Now AMD does get some very very small credit for improving FPS via drivers over time, therefore getting more horsepower from their cards years later. But my issue with this is the drivers only got better FPS with time because of the far less than ideal state they dropped in. Second huge problem for me was by the time their drivers would have made a difference on a purchasing front for me in terms of FPS...I was already moving on to a new generation of cards for my systems. If a user held on to cards for greater than one generation (i.e. every other gen or older) I could see an argument being made, but with both my wife and I being PC gamers at least one rig is getting a new card if not two (we have 3 gaming rigs in the house not counting laptops) every generation that drops, as we pass down cards to her PC from mine and the game stream server gets one every other generation. As for you not having issues with Vega, I am happy to hear it. Thing is though plenty of folks did have issues. I can't speak for Vega personally as I avoided it like the plague after my experiences with the HD 3000s, HD 4000s, HD 6000s, HD 7000s, etc. on the driver front. I have had more driver issues with every AMD-released GPU core I personally used, even if short term due to frustration...compared to Nvidia's equivalent part.

gggplaya (replying to atomicWAR): Microsoft didn't start making the push for UWP (Universal Windows Platform) until 2015. Until then, consoles used different APIs than Windows because they were lower-level APIs, closer to the hardware. Even up to today, DirectX versions for Xbox and PC were developed in parallel but not used universally by game devs. Most games are still on DirectX 11 on PC, which came out in 2009. So the cross optimizations for PC and console aren't really universally there. Now with DirectX 12 Ultimate, devs will be on the same API for PC and console, finally: https://www.thurrott.com/games/xbox/232903/direct-x-12-ultimate-is-the-missing-xbox-series-x-link

atomicWAR (replying to gggplaya): I truly hope you are right...you are on UWP/DX11 and all that...I just want ATI I mean AMD back in the high end GPU market. I truly loved ATI cards...