Project Tango: Where We Are And What's To Come (Update: Video)

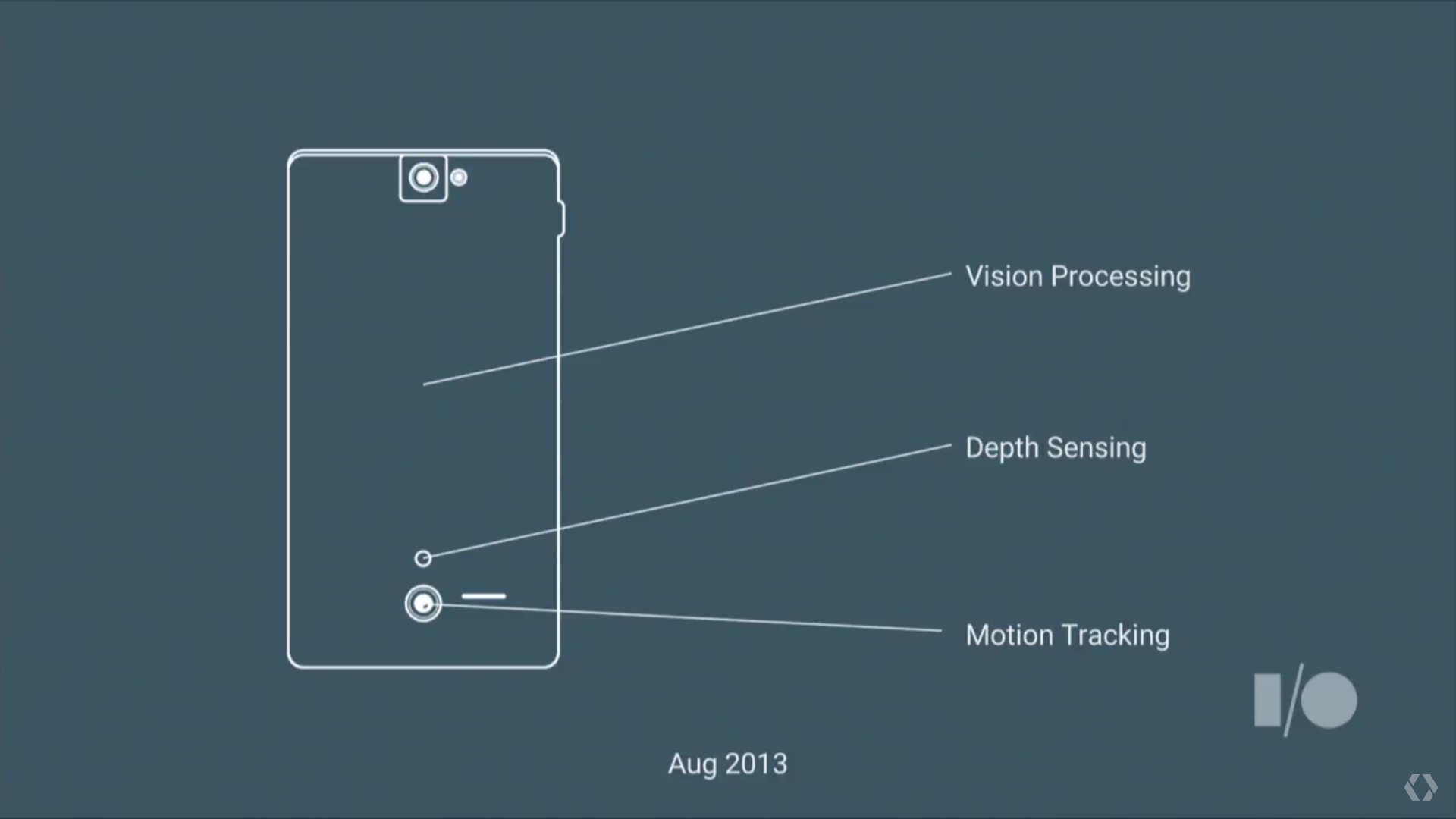

Every day, we use our eyes to judge light, shadows, shapes and distances, and we feel at home in the places we know best. Project Tango is intended to give devices a similar level of spatial perception through motion tracking, depth perception and area learning. It employs motion tracking sensors, depth sensors, and hardware-accelerated vision processing units (VPUs). The prototype tablet was clunky, but refinements allowed Google to shrink the hardware down to phone-class components while also maximizing processing power in tablets.

Over the past year, Google worked with Qualcomm to ensure that Qualcomm's Board Support Package (BSP) supports the components Project Tango requires. This means that OEMs opting for Snapdragon processors can build Project Tango-enabled devices. Lenovo's new smartphone, which we saw at CES this year, will be the first consumer device with these features. More information about the phone will be revealed at Lenovo's TechWorld show on June 9.

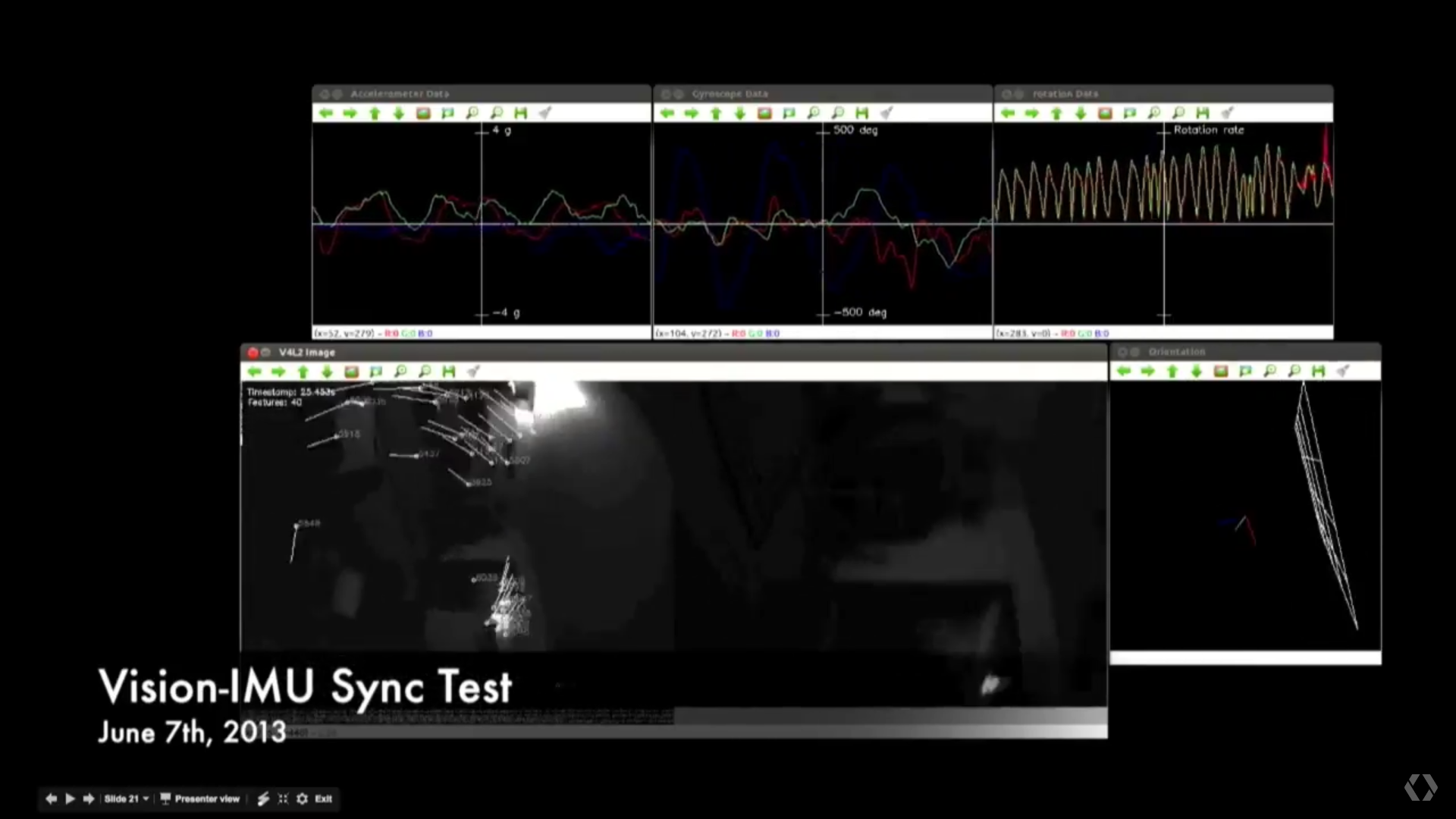

A short video illustrated the milestones the Project Tango team has reached since the technology's inception. The team put Project Tango through a variety of stress tests, including motion tracking on a roller coaster, tracking in cluttered environments, and tracking while biking downhill. Project Tango is now capable of room-scale localization, real-time on-device room capture, augmented reality overlays and more.

Project Tango’s basic APIs are now integrated into Android N, giving supported devices access to the 6DoF Pose Sensor and Depth Camera. With this, app developers can make motion-sensitive games and apps that measure the shape of the device's surroundings. Devices that meet all of Project Tango’s specifications will receive further support for area learning, depth, meshing and augmented reality.
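A "6DoF pose" combines three degrees of freedom for position with three for orientation. The sketch below is only an illustration of that idea, not Tango's or Android's API: the `Pose` class, the (x, y, z, w) quaternion ordering and meters as the unit are assumptions. It maps a point measured relative to the device into the world frame that the pose is expressed in.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (x, y, z, w); ordering is an assumption


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product of two 3-vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def quat_rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by the unit quaternion q.

    Uses v' = v + w*t + u x t with t = 2 (u x v), where u = (x, y, z).
    """
    x, y, z, w = q
    u = (x, y, z)
    t = tuple(2.0 * c for c in cross(u, v))
    ut = cross(u, t)
    return tuple(v[i] + w * t[i] + ut[i] for i in range(3))


@dataclass
class Pose:
    """Hypothetical 6DoF pose: 3 translational + 3 rotational degrees of freedom."""
    translation: Vec3  # device origin in world coordinates (meters, assumed)
    rotation: Quat     # device orientation relative to the world frame

    def transform_point(self, p_device: Vec3) -> Vec3:
        """Map a point from the device frame into the world frame: R p + t."""
        r = quat_rotate(self.rotation, p_device)
        return tuple(r[i] + self.translation[i] for i in range(3))


# Usage: a device 1 m along x and 2 m along y, turned 90 degrees about the z axis.
half = math.pi / 4  # half of the 90-degree rotation angle
pose = Pose(translation=(1.0, 2.0, 0.0),
            rotation=(0.0, 0.0, math.sin(half), math.cos(half)))
print(pose.transform_point((1.0, 0.0, 0.0)))  # approximately (1.0, 3.0, 0.0)
```

Storing orientation as a unit quaternion rather than three angles is a common choice because it avoids gimbal lock and is cheap to compose, but the sketch makes no claim about how any particular SDK represents it.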

The Project Tango team built an out-of-the-box experience for all Tango-enabled devices that constructs a virtual environment around the user, which can be explored by moving the device. Users can observe everything, including the shrubbery, the grass and plants below, and the animals roaming around.

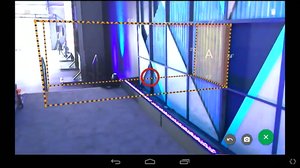

The live demos illustrated (literally) what Project Tango is capable of. For example, the Measure app lets the device measure real objects using distance and depth perception, and it can even create measured volumes. This type of application would be well suited to measuring spaces in a construction environment.
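Measuring with a depth camera comes down to turning two tapped pixels into 3D points and taking the straight-line distance between them. The sketch below assumes an ideal pinhole camera with no lens distortion and treats each depth reading as distance along the camera's optical axis; the function names and the intrinsic values in the usage line are hypothetical, not taken from the Measure app or the Tango SDK.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def back_project(u: float, v: float, depth: float,
                 fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Turn pixel (u, v) with a depth reading (meters) into a 3D camera-frame point.

    Assumes a pinhole model: focal lengths fx, fy and principal point (cx, cy),
    all in pixels.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def measure(p1_px, p2_px, d1: float, d2: float, intrinsics) -> float:
    """Distance in meters between two tapped pixels with known depths."""
    a = back_project(*p1_px, d1, *intrinsics)
    b = back_project(*p2_px, d2, *intrinsics)
    return math.dist(a, b)


# Usage with made-up intrinsics (fx, fy, cx, cy) for a 640x480 depth image:
intrinsics = (500.0, 500.0, 320.0, 240.0)
print(measure((320, 240), (570, 240), 2.0, 2.0, intrinsics))  # 1.0
```

Here two points 250 pixels apart, both 2 m away, come out 1 m apart; the same pixel gap at a greater depth would correspond to a longer real-world distance.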

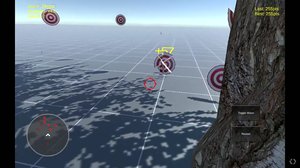

The Wayfair app allows users to place 3D furniture within an area, view it from different angles and move around within the space. The demo shows a dining room being placed on the floor and a virtual lamp being placed on a real table. Games can also use movement as input; the demo shows a first-person target practice game. The catch? The bullets ricochet, so players must move while they're shooting.


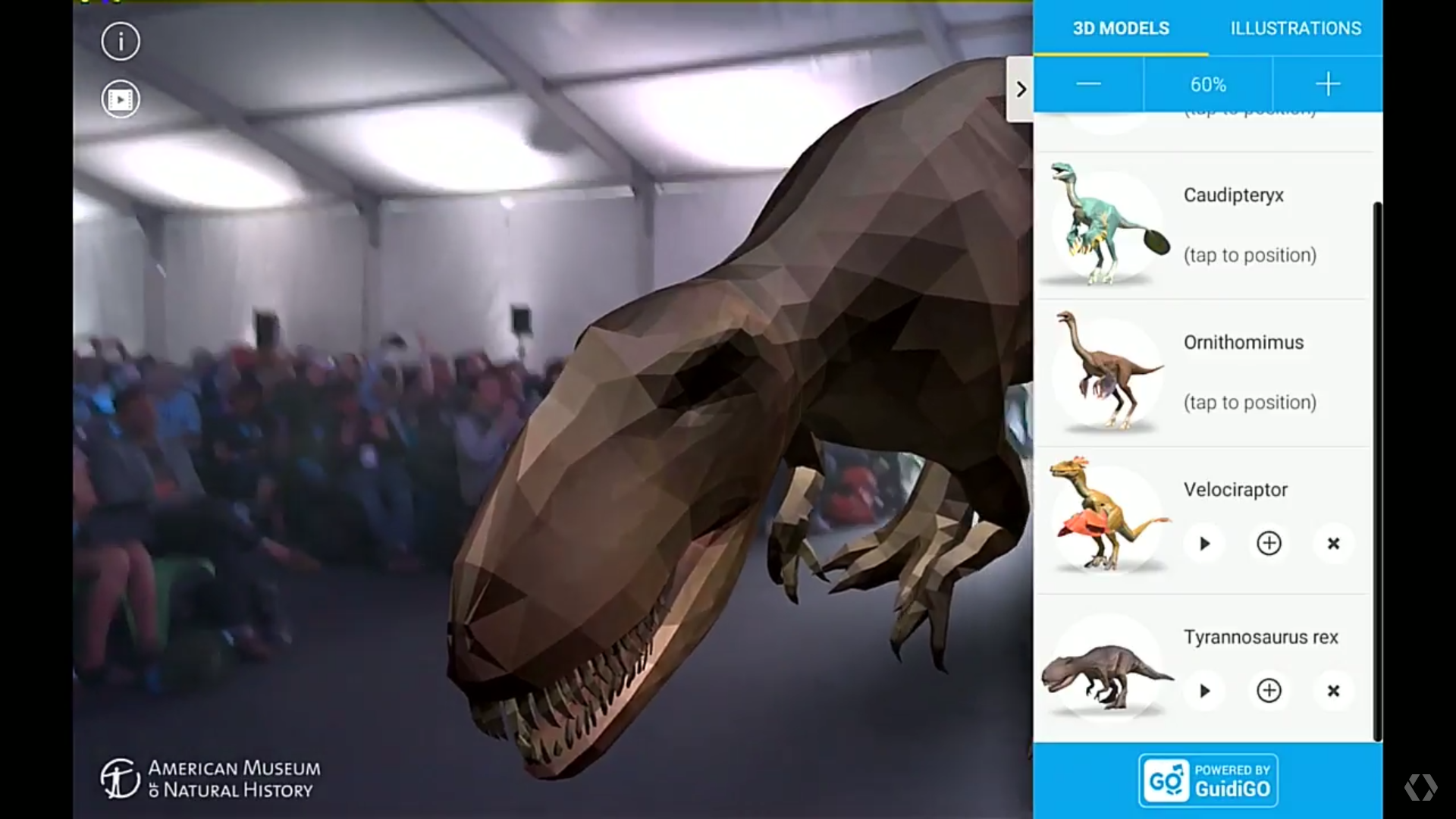

Finally, an app developed in conjunction with the American Museum of Natural History allows users to place dinosaurs in the area around them with accurate scaling, similar to the furniture in the Wayfair app. It feeds you facts about the dinosaurs, and you can observe the beasts' mannerisms. The app lets you scale the dinosaurs however you like, so a T-Rex, for example, can range from tiny to life-size.

These apps are just a handful of the many in development. Another video offered a glimpse of what's coming: we took note of a game with virtual assets such as dominos and toy aliens, a virtual pet, a car shopping app that provides an accurate virtual representation of the vehicle (including the interior), and an FPS game.

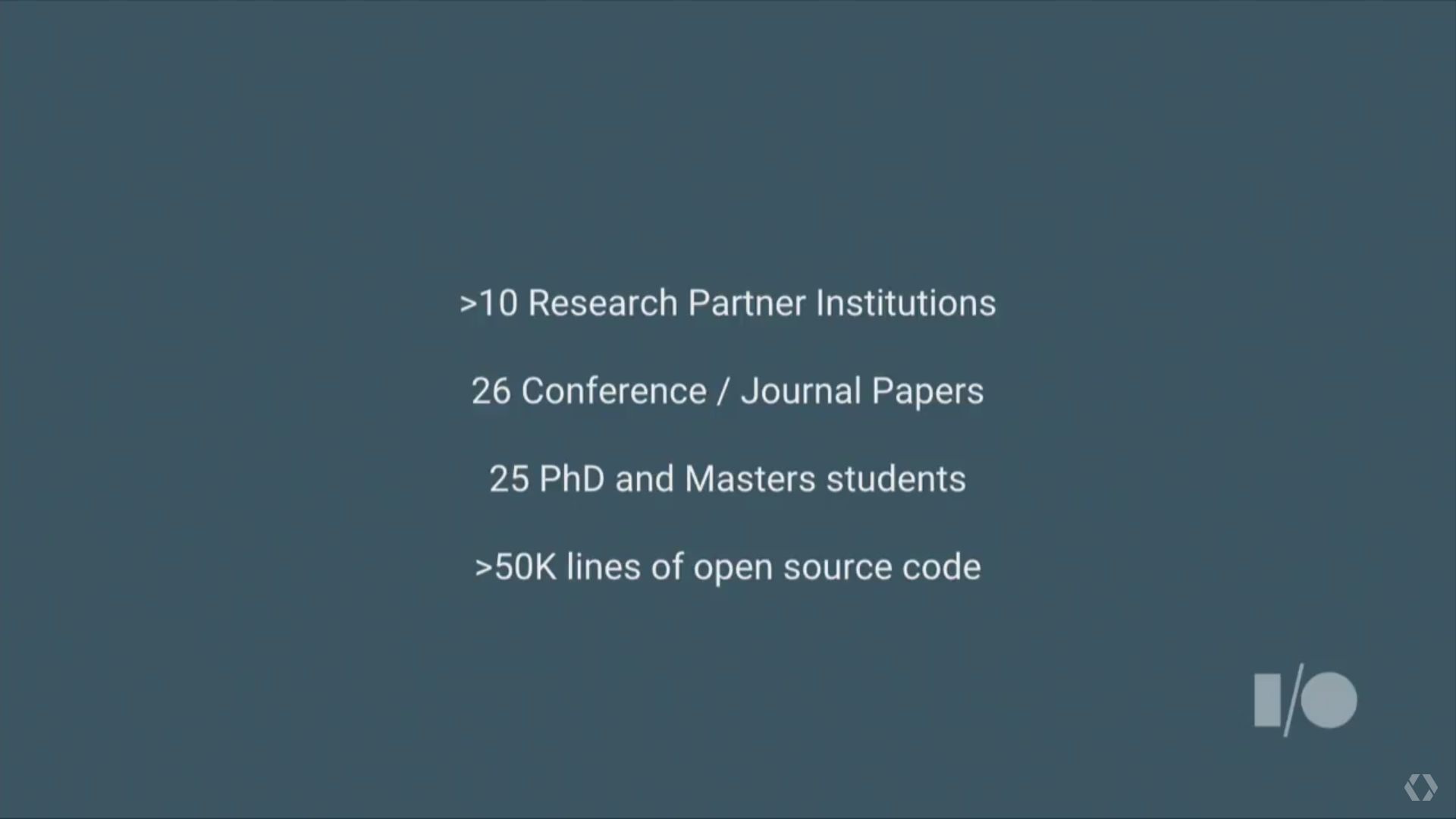

Project Tango is far from perfect, and the dev team recognizes this. For example, Project Tango can recognize an object, but it won’t know what it looks like from behind or from the inside. It won’t know what an environment will look like at night. These challenges can’t be solved in the next couple of years, either; it will take years and years before Project Tango can accurately simulate human perception. Thus, the Project Tango dev team openly collaborates with outside researchers to improve the software’s code. To date, outside researchers have contributed over 50,000 lines of open source code to the effort.

That isn’t to say we should be discouraged. The benefits Project Tango can offer are near and tangible. The demonstration concluded with a video illustrating several ongoing projects that are part of the research efforts. The video includes precise indoor navigation, outdoor 3D construction and micro-gravity testing.

Many fun and exciting developments will inevitably spring from Project Tango, but more importantly, we'll see Project Tango used in ways that redefine how we traditionally approach tasks. This technology can allow users to visit places they've never seen before, provide aid to the visually impaired, and help emergency workers approach disasters in a safer and more organized way.

Everything that was shown was accomplished through Google’s Project Tango tablets, which are available as a part of the Project Tango Development Kit for $512.

Alexander Quejado is an Associate Contributing Writer for Tom’s Hardware and Tom’s IT Pro. Follow Alexander Quejado on Twitter.


-

bit_user I think Tango is mostly a stepping stone for proper AR glasses.

It's difficult for me to see benefits that justify the added cost of depth sensors in the average phone. Even in high-end phones, I think consumers would opt to forgo them to reduce weight, size, cost, and battery drain.

-

bit_user

18006208 said: "So it's Hololens on a tablet??"

Part of it, I think. I don't have any experience with Hololens, nor have I used Tango's Unity interface. But, with those caveats, what Tango will do is:

■ Let you capture a depth image & RGB image.

■ Learn an area, and give you the absolute position & orientation within it (termed the tablet's "pose").

■ Capture & reconstruct scene geometry (only recently exposed).

The technique for doing the last two is called SLAM (Simultaneous Localization And Mapping), and it's not particularly new. What Tango does is package it up in a nice API and hardware platform, so that developers can start to build apps atop it, and independent device makers can make devices on which the apps will run. The API is fairly agnostic about which combination of sensors is used, so they could be structured light, ToF, stereo, or (typically) some combination.

This is a natural first step towards building a generalized AR platform. I am waiting for some AR glasses to support it, whether it's Google Glass 2, Magic Leap, Sulon, or somebody else.

In fact, Intel has a Tango Phone (which may never see the light of day), and has demo'd a GearVR-style face mount, in order to use it as non-transparent AR glasses. I think Google even has a version of Cardboard for use with their tablet.

BTW, one difference between Tango and Hololens might be how easily you can insert objects in the scene. From what I've seen, Tango doesn't (yet) make it easy to place a 3D object in the physical world and have it automatically rendered whenever that location is visible. Maybe in the Unity API, but not (yet) in the C or Java APIs.