G.Skill Brought Its Blazing Fast DDR4-4800 To Computex

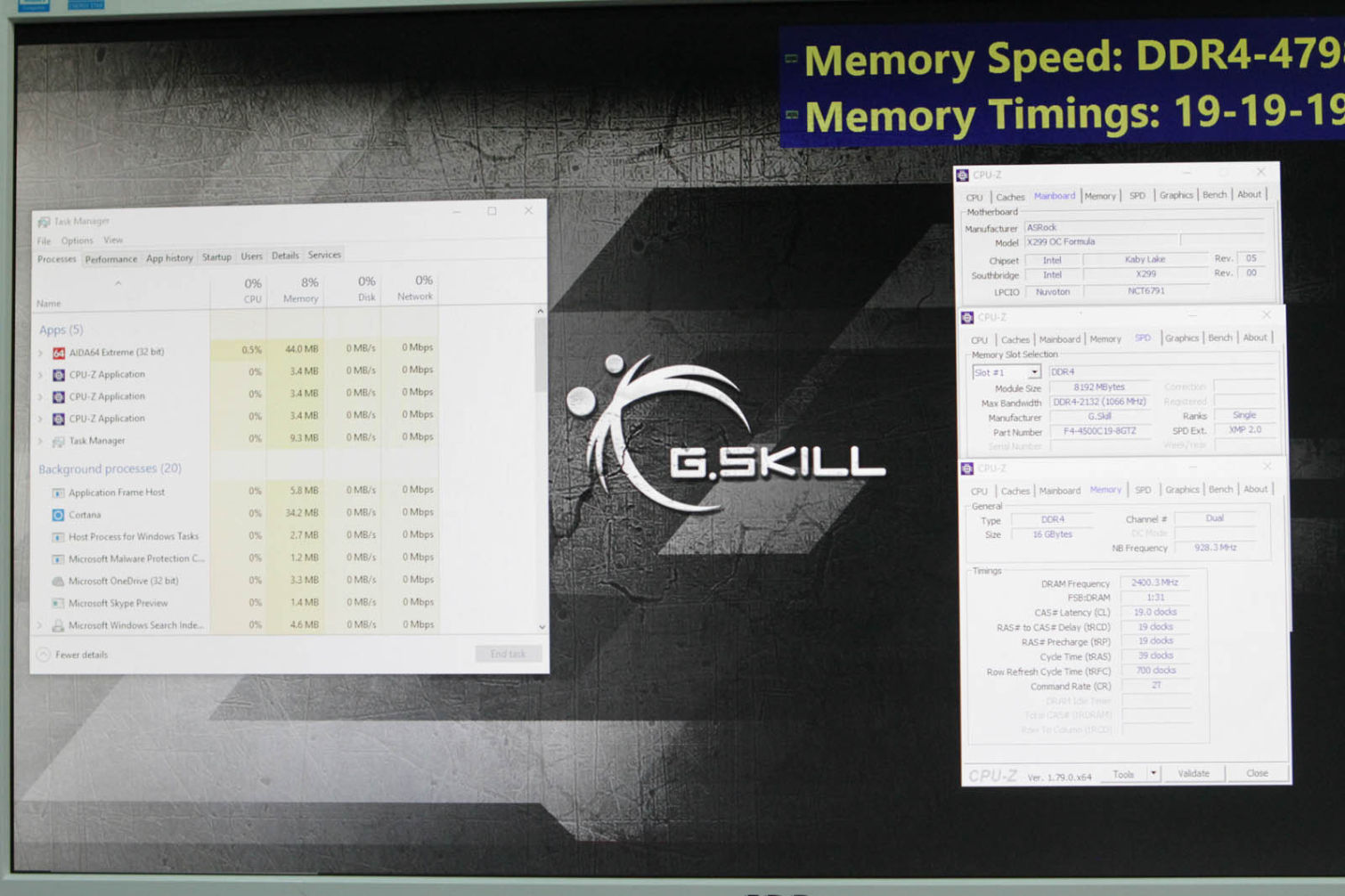

G.Skill didn't just make good on its promise to bring DDR4-4500 to market this year; it upped the ante by announcing DDR4-4800 at Computex. The company showcased everything from a 32GB DDR4-3466 SO-DIMM memory kit (2 x 16GB) at CL 16-18-18-43 timings all the way up to its Trident Z DDR4-4800 dual-channel desktop memory kit (2 x 8GB) at CL 19-19-19-39.

G.Skill gave its complete line of Trident Z RGB memory a speed boost, starting with DDR4-3600 16GB kits (2 x 8GB) at CL 16-16-16-36 for AMD’s Ryzen-based platform and going all the way up to DDR4-4400 16GB kits (2 x 8GB) at CL 19-19-19-39 for use with Intel’s new X-Series processors. There’s also a 64GB (8 x 8GB) DDR4-4400 kit at CL 19-21-21-41 for X299 motherboard owners.

Those of you who are more interested in top speed than in RGB lighting will be happy to know the company also had a Trident Z DDR4-4800 16GB kit (2 x 8GB) at CL 19-19-19-39 on display for use with Intel-based HEDT motherboards.
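To put those timings in perspective, here is a minimal Python sketch that converts the first timing value (CAS latency, in clock cycles) into nanoseconds and estimates theoretical peak bandwidth. The speeds and timings come from the kits named above; the `Kit` class, its method names, and the dual-channel assumption (based on the two-module kits) are illustrative only, and real-world throughput will be lower than the theoretical peak.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Kit:
    """Hypothetical helper type; not part of any vendor tool."""
    name: str
    mts: int            # transfer rate in MT/s (the number after "DDR4-")
    cas: int            # CAS latency in clock cycles (first timing value)
    channels: int = 2   # assumption: two modules running in dual-channel mode

    def cas_latency_ns(self) -> float:
        # The memory clock is half the transfer rate, so one cycle
        # lasts 2000 / (MT/s) nanoseconds.
        return self.cas * 2000 / self.mts

    def peak_bandwidth_gbs(self) -> float:
        # Each 64-bit channel moves 8 bytes per transfer.
        return self.mts * 8 * self.channels / 1000


KITS = [
    Kit("Trident Z RGB DDR4-3600 CL16", 3600, 16),
    Kit("Trident Z RGB DDR4-4400 CL19", 4400, 19),
    Kit("Trident Z DDR4-4800 CL19", 4800, 19),
]

for kit in KITS:
    print(f"{kit.name}: {kit.cas_latency_ns():.2f} ns CAS, "
          f"{kit.peak_bandwidth_gbs():.1f} GB/s theoretical peak")
```

Under these assumptions, the DDR4-4800 kit has both the shortest CAS latency in nanoseconds and the highest theoretical bandwidth of the three, even though its cycle count (19) is higher than the DDR4-3600 kit's (16).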

During an overclocking event held at the G.Skill booth in Taipei, one competitor even managed to set a DDR4 memory speed world record. Using the company's DDR4 memory built with Samsung 8Gb ICs, professional overclocker Toppc managed to hit DDR4-5500 speeds using liquid nitrogen cooling.

G.Skill’s booth at Computex featured a number of custom water-cooled systems, all running the aforementioned memory kits in a variety of configurations. Information on pricing and availability was not available at press time.

Steven Lynch is a contributor for Tom’s Hardware, primarily covering case reviews and news.

-

the nerd 389 It's too bad that Intel's new X-series uses thermal paste as the TIM. It really puts a damper on overclocking.

On the S-series, you could get a few CPUs and take the risk of replacing the TIM. That's a lot less likely when the CPU costs $1000 (for the i9-7900X) to start with, and uses a much larger die.

Still, it's good to know you can push RAM to DDR4-4800, even if the i9-7900X only goes to 4500 MHz on a good day. -

RomeoReject Everything I've read says that the new AMD stuff does better with fast memory. Would something like this help them even more? Or do we hit a certain point where added speed becomes pointless? -

JimmiG

The highest speed divider that you can dial in on Ryzen is 4000 MHz, with AGESA 1.0.0.6. However I don't think anyone has achieved 4000 MHz with any stability.

With the highest quality RAM modules and the most expensive motherboards, it seems 3466 MHz is about the limit of what you can achieve without encountering memory errors and stability issues. A small number of users might have achieved 3600 MHz, though I have to wonder whether that's completely stable running 24/7 stress tests (memory errors can take many hours of stress testing to manifest themselves).

There are diminishing returns above 3200 MHz anyway so the sweet spot for Ryzen is still a good set of 3200 C14 sticks. -

That was mostly true with the chips that had integrated graphics. However, it's not really that true with this generation.

IMO, a big problem is that the JEDEC standards, and the industry in general, had no idea the MT/s would increase so fast. With DDR3, the standard went from 800 to 2133 MT/s over a 10-year period. With DDR4, the standard goes from 2133 MT/s to 3200 MT/s. Well, about 2-3 years in, we are already at 4800 MT/s speeds.

We are outside of standards and outside of specs. It's a wonder it works at all. I expect it will be a really bad situation in a couple years, for several reasons. -

jasonelmore @andy chow, that's where you're wrong. Ryzen's cache and CCX fabric benefit greatly from fast memory; it's what determines how fast the two CCXs communicate with each other. -

Really? Have any proof? I've read that, but doubt it. Show me actual benchmarks in real world scenarios (I consider compiling real-world), and I'll believe it. -

the nerd 389

See:

http://www.tomshardware.com/reviews/overclocking-amd-ryzen,5011-7.html

http://www.tomshardware.com/reviews/amd-ryzen-5-1500x-cpu,5025-2.html

http://www.guru3d.com/articles_pages/amd_ryzen_7_memory_and_tweaking_analysis_review,1.html

The TH reviews don't explicitly cover application performance in the memory sections, but the guru3D article is quite thorough. If you need more, I'd be happy to oblige. Not many reviewers cover compiling, so you'll have to make do with the more standard benchmarks, such as compression. Compression serves as a decent gauge of performance across a number of workloads.

It's also worth mentioning that compilers are updated so frequently that it's difficult to use them as a benchmarking tool. I'm sure you've seen a number of sites try to do so, but they normally give up pretty quickly. You'll have to find another application to serve as your go-to for benchmarking. -

Your links mostly confirmed my suspicions. Between 2133 and 3200 RAM, you have a 3% increase in performance, and that's pure compression, which is valid, but artificial at the same time (the same algorithm over and over with no heterogeneity).

Compiling is a very valid and standard benchmark. Phoronix has been running them for years, a decade at least. Comparing multi-platform benchmarks for compilations of various software, with various GCC and clang versions, is an industry standard and very common. Some of us work for a living; it's not niche.

If you pay a QMO guy 85k a year, and he can run 3 or 4 tests a day, then that's a benchmark which is very important. -

the nerd 389

It's true that if you have the time to rerun benchmarks with each update, compiling is the best way to gauge performance in compiling workloads (naturally). Unfortunately, very few people outside of the IT industry have time to parse through 3 or 4 tests per day, and it's practically impossible to write articles at that rate. The impracticality of using compilers as a general purpose benchmark should be self-evident from your response.

If that's all you're paid to do, and it's the only software you'll ever use, that's one thing. For those of us that work in other areas, it's not an option. The funny part is that using information in general reviews can give estimates that are very close to the accuracy of actual workload-specific testing if you do your homework.

Lastly, improving the inter-CCX latency isn't going to give you dramatic gains like a clock speed boost would. 3% is quite significant as far as that factor is concerned. After all, there were times when that was the performance difference between consecutive generations of Intel CPUs. Will it change how you work? Probably not. Will it be noticeable in heavy workloads? Definitely.
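For readers following the numbers in this exchange, the arithmetic can be sketched in a few lines of Python. This is a rough illustration, not a benchmark: it assumes, as widely reported for first-generation Ryzen, that the fabric linking the two CCXs runs at the memory clock (half the DDR4 transfer rate), and it takes the roughly 3% compression gain quoted in the thread at face value. The function and variable names are hypothetical.

```python
def fabric_clock_mhz(mts: float) -> float:
    """Assumed fabric clock for first-gen Ryzen: equal to the memory
    clock, which is half the DDR4 transfer rate in MT/s."""
    return mts / 2


slow_mts, fast_mts = 2133, 3200   # the two memory speeds compared in the thread
observed_gain = 0.03              # ~3% figure quoted by the commenters

# Relative increase in fabric (and memory) clock between the two settings.
clock_gain = fabric_clock_mhz(fast_mts) / fabric_clock_mhz(slow_mts) - 1

# Fraction of the clock increase that shows up as application speedup.
scaling = observed_gain / clock_gain

print(f"Fabric clock: {fabric_clock_mhz(slow_mts):.0f} MHz -> "
      f"{fabric_clock_mhz(fast_mts):.0f} MHz ({clock_gain:.0%} higher)")
print(f"Observed gain of {observed_gain:.0%} is about {scaling:.0%} "
      f"of the clock increase")
```

Both commenters can read this the way they prefer: a memory and fabric clock that is about half again as fast yields only a few percent in that workload, yet a few percent from a memory swap alone is comparable to some generational CPU gains.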