Intel Gen11 Graphics Will Support Integer Scaling

Intel Vice President Lisa Pearce has revealed in a video tweet that Intel will add integer scaling support by the end of August. The bad news is that only Intel processors with Gen11 or newer graphics will have access to the feature.

Modern monitors are constantly pushing the resolution limit. Unfortunately, not all games, especially older titles, were designed to work with higher resolutions. The most common example is when you try to play a game that only supports the 1280x720 resolution on a 4K gaming monitor (3840x2160). Although there are multiple techniques to scale the image up to the monitor's native resolution, the result isn't always pleasant.
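Whether integer scaling can fill a screen comes down to simple division. 3840/1280 and 2160/720 are both exactly 3, so each 720p pixel maps onto a 3x3 block of 4K pixels. The Python sketch below checks that condition. The function name is hypothetical, and the code only illustrates the arithmetic, not how any driver decides when to apply the feature.

```python
def exact_integer_factor(src_w, src_h, dst_w, dst_h):
    """Return k if every source pixel maps onto exactly k x k display
    pixels with nothing left over; otherwise return None."""
    if dst_w % src_w or dst_h % src_h:
        return None                    # at least one axis does not divide evenly
    kx, ky = dst_w // src_w, dst_h // src_h
    return kx if kx == ky else None    # unequal factors would stretch the pixels

print(exact_integer_factor(1280, 720, 3840, 2160))   # 3
print(exact_integer_factor(1920, 1080, 3840, 2160))  # 2
print(exact_integer_factor(1600, 900, 3840, 2160))   # None (2.4x on both axes)
```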

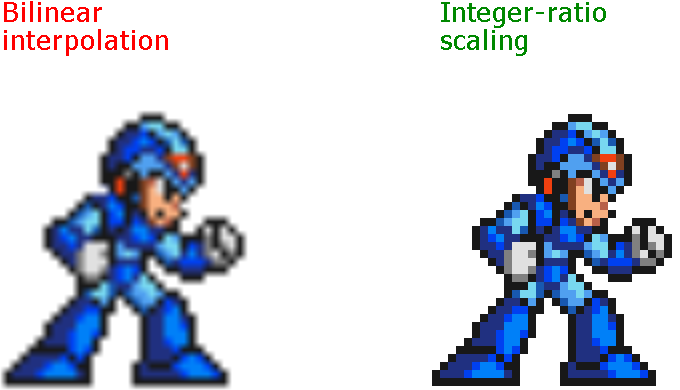

AMD and Nvidia employ bilinear or bicubic interpolation methods for image scaling. However, the final image often turns out blurry, too smooth or too soft. The integer scaling method should help preserve sharpness and jagged edges. Pixel-graphic game and emulator aficionados will surely love the sound of that. The benefits of integer scaling extend far beyond that, though, as it lets you play old games at their native resolution with lossless scaling on high-resolution monitors.
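To see why filtered scaling softens edges, compare the two approaches on a single row of pixels that contains a hard black-to-white edge. This is a simplified 1D sketch. It uses plain linear interpolation as a stand-in for bilinear filtering (bicubic would blend differently), and the helper names are hypothetical.

```python
def nearest_neighbor_row(row, k):
    """Integer (nearest-neighbor) upscale: repeat each pixel k times."""
    return [p for p in row for _ in range(k)]

def linear_row(row, out_len):
    """Linearly interpolate one row to out_len samples (a 1D stand-in for
    bilinear filtering), aligning pixel centres."""
    n = len(row)
    out = []
    for i in range(out_len):
        # Map the centre of output pixel i back into source coordinates.
        x = (i + 0.5) * n / out_len - 0.5
        x = min(max(x, 0.0), n - 1.0)   # clamp at the image edges
        x0 = int(x)
        x1 = min(x0 + 1, n - 1)
        t = x - x0
        out.append(round(row[x0] * (1 - t) + row[x1] * t))
    return out

edge = [0, 0, 255, 255]   # a hard black-to-white edge
print(nearest_neighbor_row(edge, 3))  # [0, 0, 0, 0, 0, 0, 255, 255, 255, 255, 255, 255]
print(linear_row(edge, 12))           # [0, 0, 0, 0, 0, 85, 170, 255, 255, 255, 255, 255]
```

Nearest-neighbor scaling only repeats values that already exist, so the edge stays a single clean jump. The interpolated row introduces in-between greys (85 and 170), which is the softening described above.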

Despite constant petitions from AMD and Nvidia graphics card owners, neither chipmaker has implemented integer scaling up to this point. So Intel definitely deserves props for listening to users and taking the first step to add this feature. However, integer scaling is only compatible with Intel processors with Gen11 or newer graphics. The first candidate is Ice Lake, which should land before the end of the year.

According to Pearce, the older Intel parts with Gen9 graphics lack hardware support for nearest neighbor algorithms. While Intel did explore the possibility of a software solution, the chipmaker felt it wasn't viable due to the performance hit. Intel expects to have integer scaling up and running by the end of August. You can enable and disable the feature through the Intel Graphics Command Center software as long as you have a compatible processor.


Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.

-

koblongata Finally... Pixel-perfect 1080P content for 4K displays... Been asking for it for years...

Considering that 10nm Intel integrated graphics can run most new games at 1080P on normal settings just fine, AND play back 4K HDR video just fine, there's no rush, or even no need, for a seriously expensive gaming rig for my 4K TV anymore...

joeblowsmynose So this gives more jagged edges while upscaling? That doesn't sound useful ... at all! Unless you need a pixelated image - like the one they posted in the article? I don't get it ....

Edit: I guess if smoothing was applied after the upscaling ... but wouldn't that defeat the feature? Someone explain, maybe I'm just missing something ...

cryoburner

joeblowsmynose said: I don't get it ....

It's so that you can run a lower resolution that divides evenly into your screen's native resolution, while making it look as if that lower resolution were the display's native resolution. It effectively makes the pixels larger without needlessly blurring them in the process, which is arguably preferable for keeping the image sharp. Without integer scaling, if you run a game at 1080p on a 4K screen, for example, it will typically look a bit worse than it would on a 1080p screen of the same size, since pixels bleed into one another during the upscaling process. That blurring is necessary for resolutions that don't divide evenly into your screen's native resolution, but for those that do, you are probably better off without it.

Unlike the example image, the pixels are still going to be relatively small if you are running, for example, 1080p on a 4K screen. You can still use anti-aliasing routines as well, but you won't have everything getting blurred on top of that when the image is scaled to fit the screen.

bit_user

"AMD and Nvidia employ bilinear or bicubic interpolation methods for image scaling. However, the final image often turns out blurry, too smooth or too soft. The integer scaling method should help preserve sharpness and jagged edges. Pixel-graphic game and emulator aficionados will surely love the sound of that."

Actually, Nvidia recently added DLSS, which is fundamentally a very sophisticated image scaling algorithm.

However, one could argue that they got too ambitious with it, trying to make it output near-native quality, by requiring a game-specific model. What they should do is make a few generic DLSS filters that can be applied to different styles of games, including retro. Then, you could have sharp, crisp graphics, with minimal jaggies and blurring.

"According to Pearce, the older Intel parts with Gen9 graphics lack hardware support for nearest neighbor algorithms."

At some level, this is utter nonsense. All GPUs support nearest-neighbor sampling. The only difference is that they're probably doing the scaling while sending the video signal out to the monitor, whereas a software approach would need to add a post-processing pass after the framebuffer has been rendered.

However, since you get some performance benefit by rendering at a lower resolution, the benefits should still outweigh the added cost of this trivial step.
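The software post-processing pass bit_user describes can be illustrated with a CPU-side Python sketch of a nearest-neighbor integer upscale. It works on a frame stored as a list of rows. The frame format and function name are assumptions made for illustration, and this is not a description of Intel's implementation. A real driver or game would do this on the GPU, for example in a shader that samples with nearest (point) filtering.

```python
def integer_upscale(frame, k):
    """Nearest-neighbor integer upscale of a 2D frame (a list of rows).
    Each source pixel becomes a k x k block; no new colour values appear."""
    if k < 1:
        raise ValueError("scale factor must be a positive integer")
    out = []
    for row in frame:
        wide = [p for p in row for _ in range(k)]   # repeat each pixel horizontally
        out.extend(list(wide) for _ in range(k))    # repeat the widened row vertically
    return out

frame = [[1, 2],
         [3, 4]]
for row in integer_upscale(frame, 2):
    print(row)
# [1, 1, 2, 2]
# [1, 1, 2, 2]
# [3, 3, 4, 4]
# [3, 3, 4, 4]
```

Each output pixel is a plain copy of a source pixel. That is why this pass is cheap compared with rendering the frame at the higher resolution in the first place.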

koblongata

joeblowsmynose said: So this gives more jagged edges while upscaling? That doesn't sound useful ... at all! Unless you need a pixelated image - like the one they posted in the article? I don't get it ....

Edit: I guess if smoothing was applied after the upscaling ... but wouldn't that defeat the feature? Someone explain, maybe I'm just missing something ...

Err... No, even at 80 inches, it's not easy to tell "native" 1080P and 4K apart. BUT when displaying 1080P on a 4K screen, everything just becomes a blurry mess with no real contrast.

mitch074 So, this is a way to render downscaled images without performing mode switching? And the "missing hardware implementation" would be a way to reduce framebuffer size when doing so? What a non-event... Especially since the retro games and emulators that would be interested in that would implement nearest neighbor interpolation anyway.

And what about non-integer? Say, a game running in 800x600 rendered on a 4K screen? There's no way to do an integer upscale without getting black borders...

No, the only use case I see for this "feature" is when plugged into a buggy screen that doesn't support mode switching to one's preferred rendering resolution, to allow GPU-side upscaling using a nearest-neighbor algorithm. And if Intel were dumb enough to implement only bilinear or bicubic interpolation algorithms in their chips up until now, then it's nothing to brag about; they should be ashamed instead.
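Take 800x600 on a 3840x2160 screen. The largest whole-number factor that fits is limited by the height (2160 // 600 = 3), which gives a 2400x1800 image surrounded by black borders. The sketch below computes the factor and the border sizes needed to center the image. The helper name is hypothetical, and the code assumes square pixels and simple centering.

```python
def integer_fit(src_w, src_h, dst_w, dst_h):
    """Largest whole-number scale that fits the display, the resulting
    image size, and the border widths needed to centre it."""
    k = min(dst_w // src_w, dst_h // src_h)   # the tighter axis limits the factor
    if k < 1:
        raise ValueError("source is larger than the display")
    out_w, out_h = src_w * k, src_h * k
    side = (dst_w - out_w) // 2   # left and right border, in display pixels
    top = (dst_h - out_h) // 2    # top and bottom border, in display pixels
    return k, (out_w, out_h), (side, top)

print(integer_fit(800, 600, 3840, 2160))   # (3, (2400, 1800), (720, 180))
```

Horizontally, 800 would fit four times (3200 pixels), but vertically it only fits three times. The 3x factor is therefore the largest one that keeps every source pixel a perfect square block.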

koblongata

mitch074 said: So, this is a way to render downscaled images without performing mode switching? And the "missing hardware implementation" would be a way to reduce framebuffer size when doing so? What a non-event... Especially since the retro games and emulators that would be interested in that would implement nearest neighbor interpolation anyway.

And what about non-integer? Say, a game running in 800x600 rendered on a 4K screen? There's no way to do an integer upscale without getting black borders...

No, the only use case I see for this "feature" is when plugged into a buggy screen that doesn't support mode switching to one's preferred rendering resolution, to allow GPU-side upscaling using a nearest-neighbor algorithm. And if Intel were dumb enough to implement only bilinear or bicubic interpolation algorithms in their chips up until now, then it's nothing to brag about; they should be ashamed instead.

Being able to play 800x600 content pixel-perfect on a TV is like a dream come true...

Say, even a perfect 2400x1800 display of 800x600 would be very nice, but remember, we are talking about 1080P here, which is what matters most.

A non-feature? Users have been asking for it since LCD displays first came out. Yes, interpolation is of course needed to go full screen at different aspect ratios. But the huge annoyance has been that even when the image can be scaled perfectly, which is what most users expect, it always ends up a blurry mess. It's truly one of those WTF moments. I think you really underestimate the significance of this.

TJ Hooker

bit_user said: Actually, Nvidia recently added DLSS, which is fundamentally a very sophisticated image scaling algorithm.

In theory, yes. In practice, from the comparisons I've seen, DLSS ends up looking similar to or worse than regular upscaling, e.g. https://www.techspot.com/article/1794-nvidia-rtx-dlss-battlefield/

joeblowsmynose

koblongata said: Err... No, even at 80 inches, it's not easy to tell "native" 1080P and 4K apart. BUT when displaying 1080P on a 4K screen, everything just becomes a blurry mess with no real contrast.

I don't have a 4K screen, so I guess I never noticed that all the upscaling done by GPUs and TVs/monitors is so shitty ... super simple fix, weird that this has ever been a problem ...

koblongata

joeblowsmynose said: I don't have a 4K screen, so I guess I never noticed that all the upscaling done by GPUs and TVs/monitors is so shitty ... super simple fix, weird that this has ever been a problem ...

Oh... well, if you have 1080P you can run games at 540P, if 1440P then 720P... perfectly sharp and vibrant.

My god, I mean, at 720P you can run the latest games with this tiny 10nm iGPU, FLUIDLY.