3D XPoint SSD Pictured, Performance And Endurance Revealed At FMS

Micron and Intel have been under fire due to the lack of details surrounding the 3D XPoint launch. The companies provided the now-infamous rough guidelines of "1000x faster and 1000X more endurant than NAND, with 10X the density of DRAM" but did not reveal the underlying technology, or anything else for that matter. Intel also embarked on a traveling roadshow of ambiguous 3D XPoint demonstrations, which didn't do much to quell the criticism.

Now, Micron has pulled back the veil on many of the details of its new line of 3D XPoint products at the Flash Memory Summit.

Micron branded its new 3D XPoint SSDs as QuantX (quantum leap), and unlike Intel with its Optane products, the company was surprisingly frank on many aspects of the technology. We still do not know the base materials or even the precise definition of the technology, but we learned many of the key specifications of the product at the show. Let's start with the hardware.

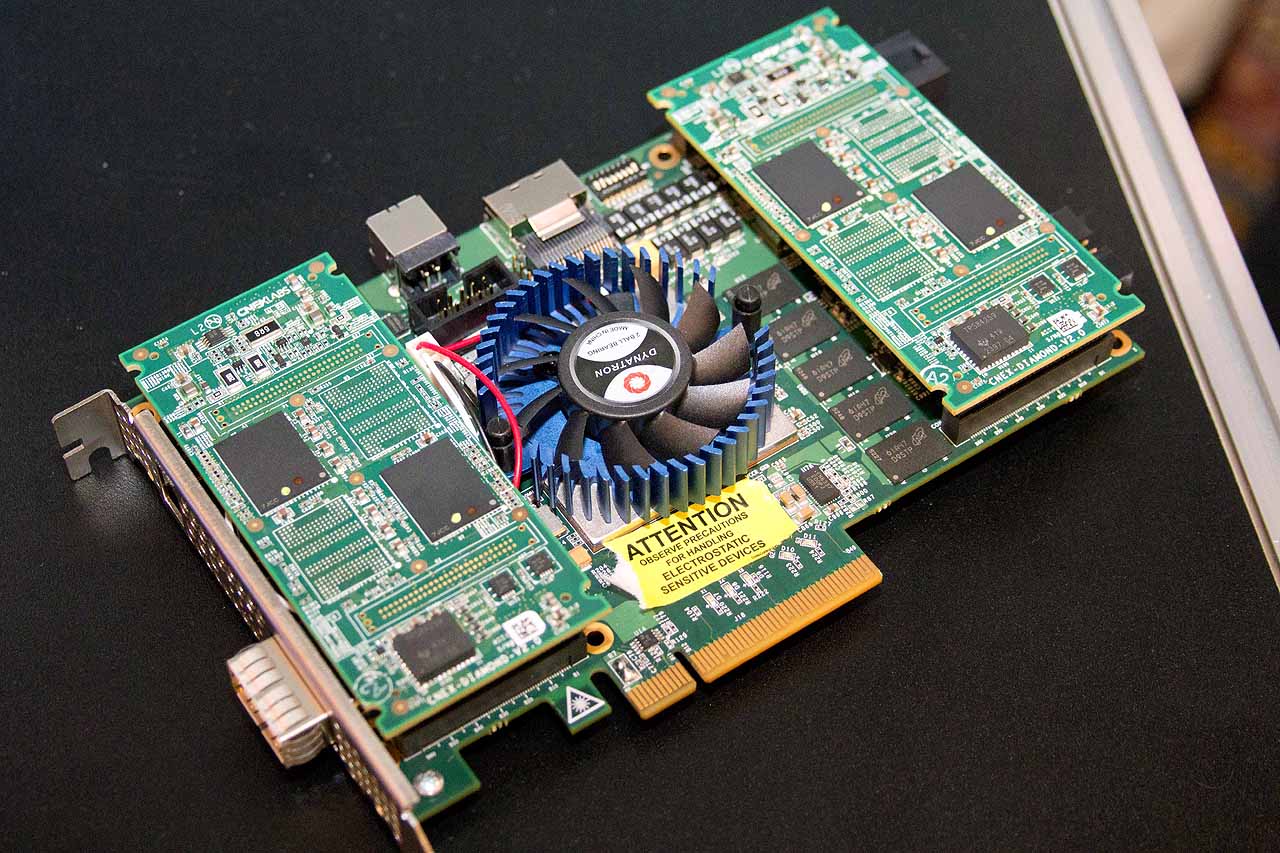

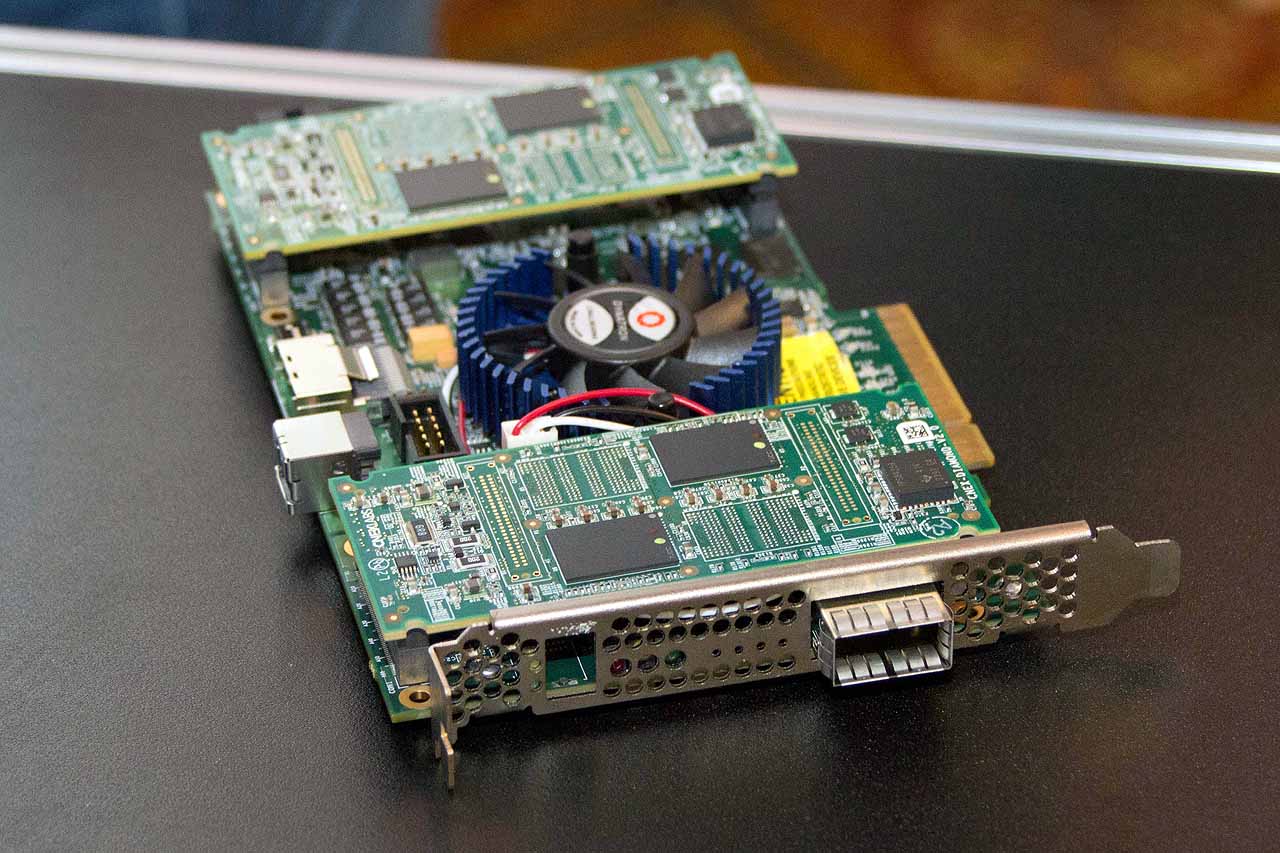

Micron QuantX Development Board

Micron designed the NVMe QuantX prototype SSD as block-based storage, and although both Intel and Micron have teased wafers before, the four chips on the two daughterboards are the first 3D XPoint packages we have seen in the wild. The packages are unmarked, but the overall capacity of the PCIe 3.0 x8 card weighs in at 128 GB. We are unsure if there are more packages on the board, as our time with the prototype was short.

If there were only four packages on the board, it would equate to 32 GB per package. Micron noted that 20nm 3D XPoint would feature a die density of 128 Gbit (16 GB) per die, so that implies that each of the four packages features a two-die stack. Achieving the ability to stack the die successfully is itself an important part of the maturation process.
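The package arithmetic above can be checked with a minimal sketch. The capacity and die density come from Micron's figures; the package count is our assumption from what was visible on the board, and the function names are purely illustrative.

```python
def gbit_to_gbyte(gbit: float) -> float:
    """Convert gigabits to gigabytes (8 bits per byte)."""
    return gbit / 8


def dies_per_package(card_gb: float, packages: int, die_gbit: float) -> float:
    """Number of dies each package must hold to reach the card capacity."""
    per_package_gb = card_gb / packages
    return per_package_gb / gbit_to_gbyte(die_gbit)


# 128 GB card and 128 Gbit die are from the article; the package count is assumed.
print(dies_per_package(card_gb=128, packages=4, die_gbit=128))  # 2.0
# If four more packages were hidden elsewhere on the board, no stacking would be needed.
print(dies_per_package(card_gb=128, packages=8, die_gbit=128))  # 1.0
```

The second call shows why the package count matters: the two-die stack only follows if the four visible packages are the only ones on the card.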

The 128 Gbit capacity is a far cry from the 384 Gbit (48 GB) density of Micron's 3D TLC NAND. Micron's 3D NAND does have a higher capacity per die than competing NAND types, but it features 32 layers. 3D XPoint is currently a two-layer design, and the company indicated that it expects the density to increase as the technology matures.

Micron used the yellow sticker below the fan to hide the name of a partner that the company wasn't ready to reveal, and the large fan in the middle sits over a large as-of-yet undisclosed FPGA. Five Micron DRAM packages flank the FPGA, which is interesting because it indicates that Micron is likely using some form of a standard FTL (Flash Translation Layer) that manages the L2P (Logical to Physical) address map. Micron may also be using the cache to absorb incoming write traffic, but that is doubtful considering the high-endurance nature of the underlying 3D XPoint. It shouldn't require as much data massaging as regular SSDs to defray endurance issues.


There are also two visible rows of capacitors. Micron likely uses the capacitors to flush the data held in the DRAM cache to the 3D XPoint in the event of an unexpected power loss. 3D XPoint itself is a persistent storage medium, so data already written to it does not need capacitor backup.

The end of the card reveals a QSFP+ connector for NVMe over Fabrics and a row of status LEDs. There also appears to be an Ethernet port (possibly for out-of-band management) and a traditional SAS connection on the top of the card, but with prototype boards, it is common to find a wide variety of ports that don't make it to production.

Performance

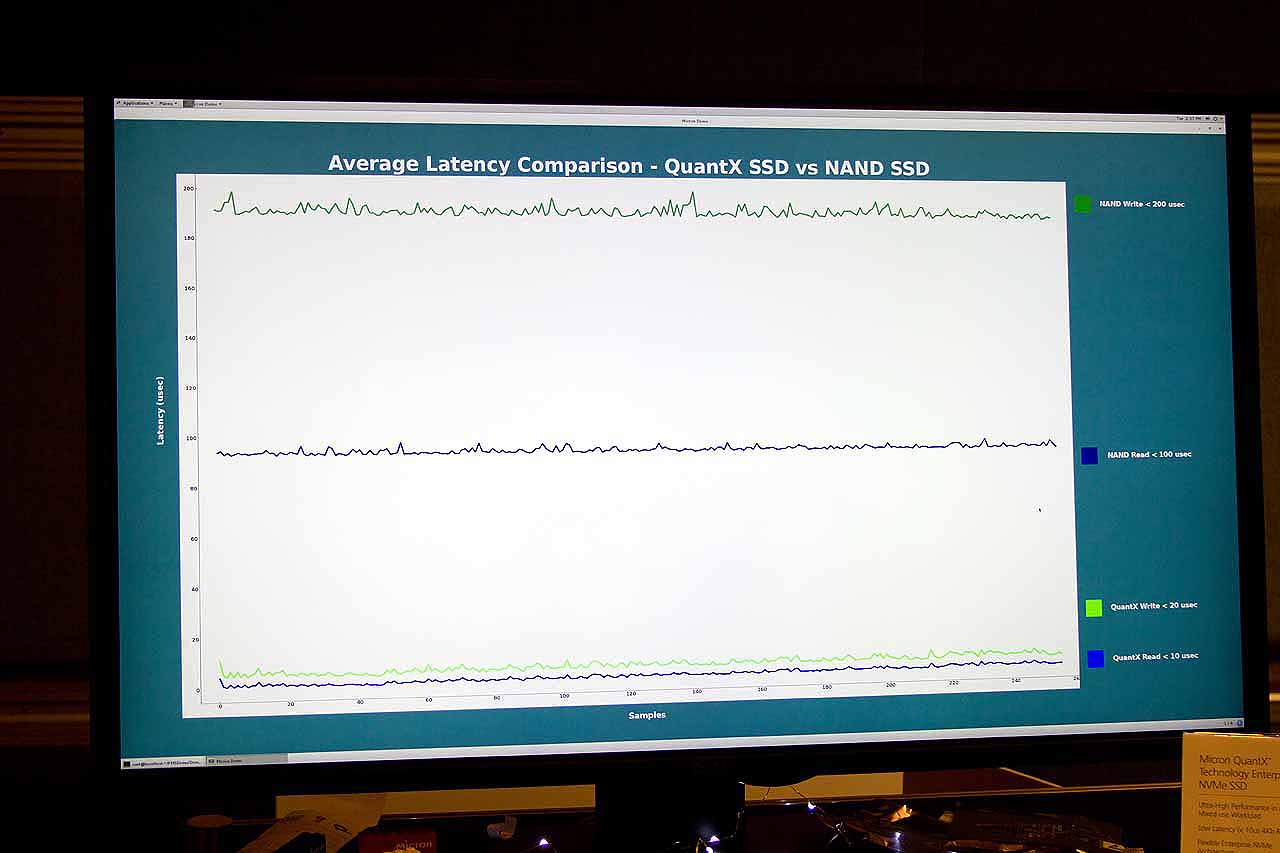

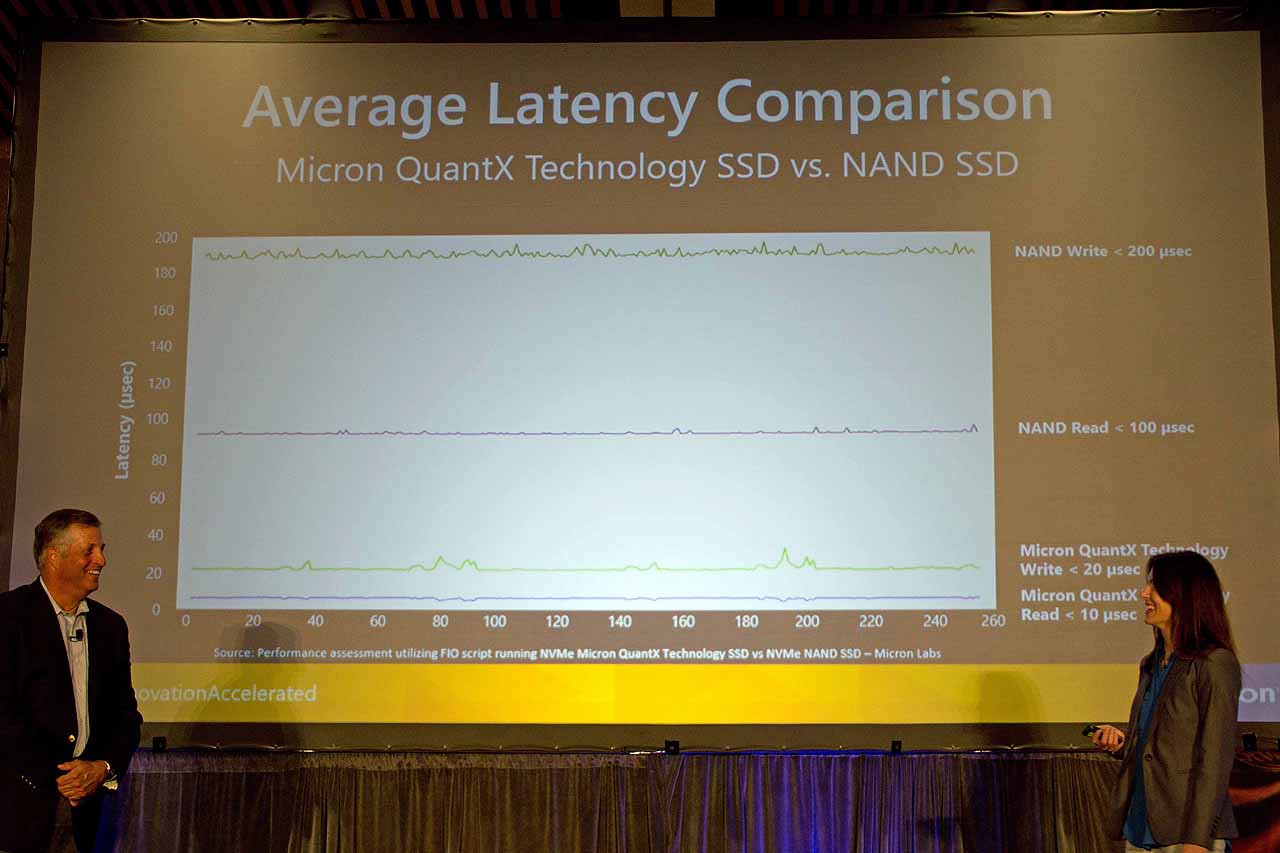

Micron noted that the QuantX SSD, which it designed to be a normal storage volume, provides 10X+ performance of NAND at low queue depths, a 10X+ faster response time than NAND, and 4X+ the memory capacity per CPU than DRAM.

Micron's first real-time test compared the read and write latency of a NAND-based SSD, which occupies the top of the chart, with that of the QuantX SSD at the bottom. The NAND write operations require 200 microseconds, and the read operations require an average of 100 microseconds. QuantX write latency weighs in at 20 microseconds, while reads require only 10 microseconds.

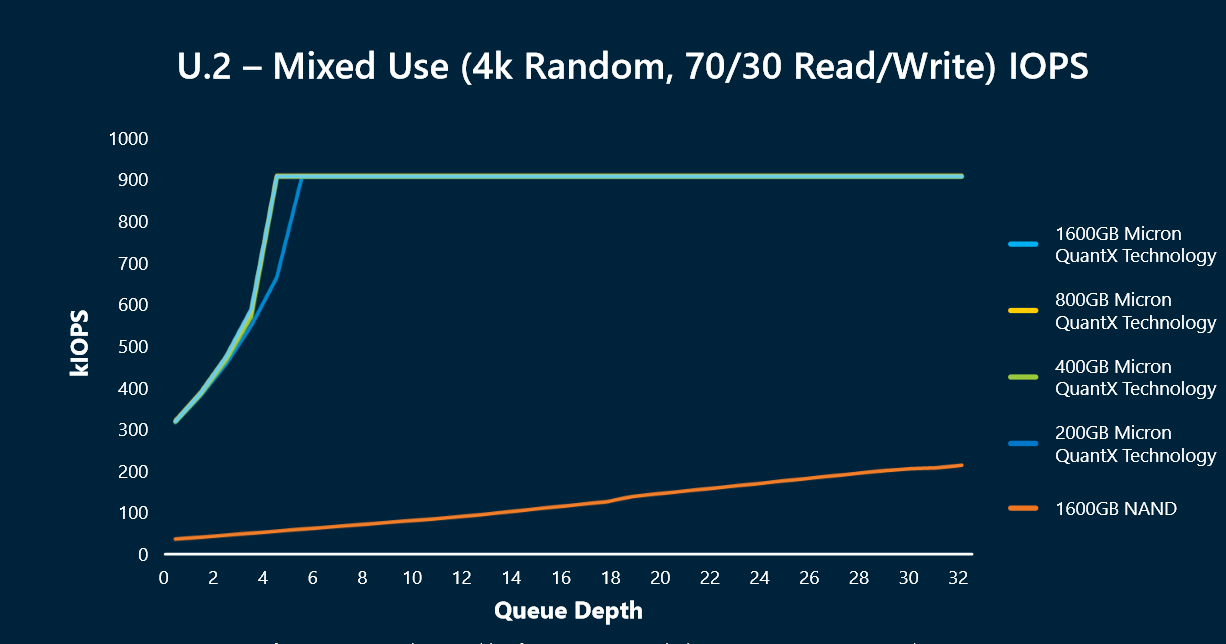

Micron presented a few charts that revealed stunning performance and scaling under light workloads. The first chart shows a PCIe 3.0 x4 connection with several QuantX SSDs. The workload consisted of a 70/30 mixed read/write 4K random workload. The yellow line along the bottom of the chart highlights the performance with a typical 1.6 TB NAND-based enterprise NVMe SSD, which topped out at a "mere" 200,000 IOPS at QD32. The 3D XPoint SSD positively blows that performance away with 900,000 IOPS during the same workload, but the main takeaway lies in the incredible scaling under light load; the 3D XPoint SSDs saturate the x4 connection at QD4.

We love to see the performance of SSDs under heavy load, but the overwhelming majority of workloads, even in heavy-duty enterprise environments, rarely exceed QD8. NAND-based SSDs require a heavy load to reach their top speed, which means that most applications utilize only a fraction of the actual performance available because the workload is not intense enough to tease out the full performance. We focus on low QD performance in our enterprise SSD tests because delivering the full performance under light loads provides a real performance boost to normal use cases.

Another interesting trend is that NAND-based SSDs typically offer higher performance at higher capacities, because larger drives expose more NAND packages to the incoming commands (parallelism). The chart actually includes the test results of four QuantX SSDs with varying capacities, but they all provide the full performance under light loads, so the results overlay each other. Normal NAND-based SSDs have less performance at smaller capacity points, so many users purchase unneeded capacity on large SSDs simply to reach the higher level of performance. Even a 200 GB QuantX SSD saturates the x4 bus at QD4, which means users will get more performance with less capacity, which reduces cost.

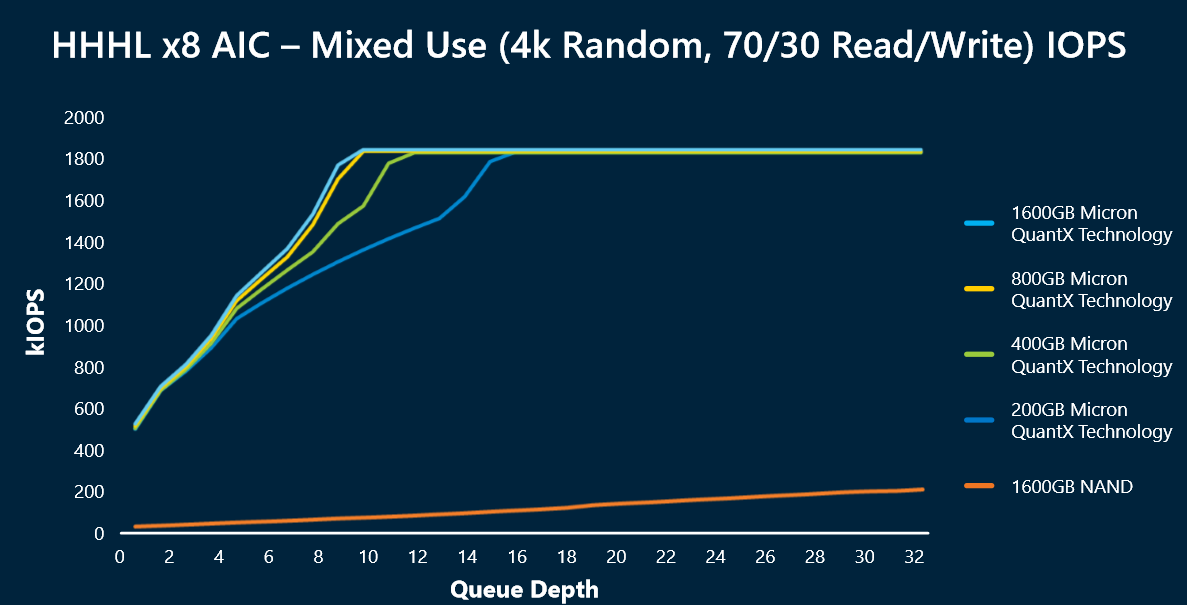

The second chart is an identical test with an x8 connection. The wider PCIe pipe provides more throughput, so the QuantX SSDs require more load to saturate the interface at 1.8 million IOPS. The QuantX SSDs exhibit a similar scaling trend during the test but reach maximum performance between QD8 and QD12 for the 1.6 TB, 800 and 400 GB capacities, whereas the 200 GB SSD tops out at QD16.
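To see why 900,000 and 1.8 million IOPS count as saturating the x4 and x8 links, here is a rough back-of-the-envelope sketch. It assumes that "4K" means 4,096 bytes and uses the standard PCIe 3.0 figures of 8 GT/s per lane with 128b/130b encoding; it ignores packet headers and other protocol overhead, so the real ceiling is somewhat lower than the number it computes. The function names are ours.

```python
def pcie3_bytes_per_s(lanes: int) -> float:
    """Raw PCIe 3.0 link bandwidth: 8 GT/s per lane, 128b/130b encoding."""
    return lanes * 8e9 * (128 / 130) / 8


def payload_bytes_per_s(iops: int, block_bytes: int = 4096) -> float:
    """Data moved per second at a given IOPS rate and block size."""
    return iops * block_bytes


# IOPS figures are the saturation points quoted from Micron's charts.
for lanes, iops in ((4, 900_000), (8, 1_800_000)):
    link = pcie3_bytes_per_s(lanes)
    payload = payload_bytes_per_s(iops)
    print(f"x{lanes}: {payload / 1e9:.2f} GB/s of {link / 1e9:.2f} GB/s "
          f"({payload / link:.0%})")
```

Under these assumptions both configurations land at roughly 94% of the raw link rate, which is about as close to the ceiling as a real device gets once protocol overhead is accounted for.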

Facebook Provides Real World Results

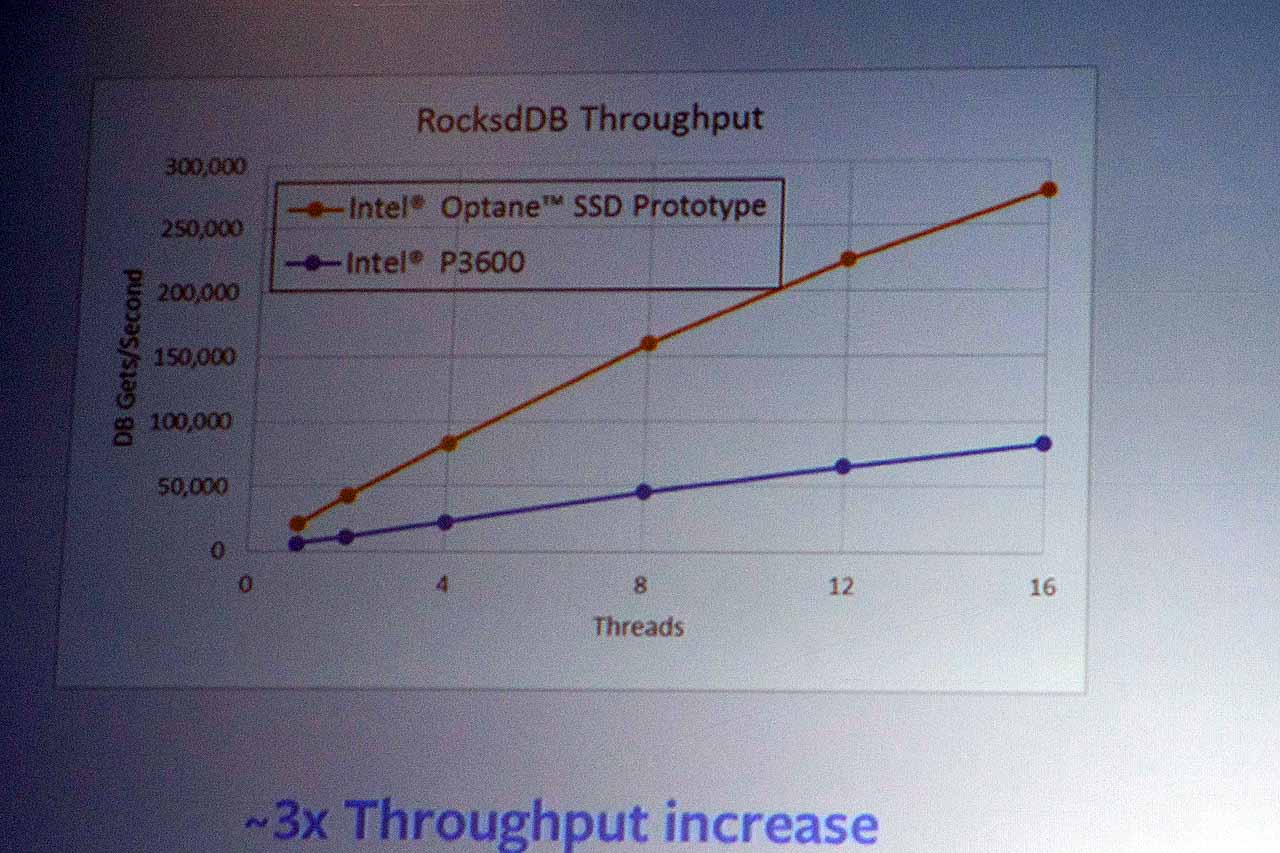

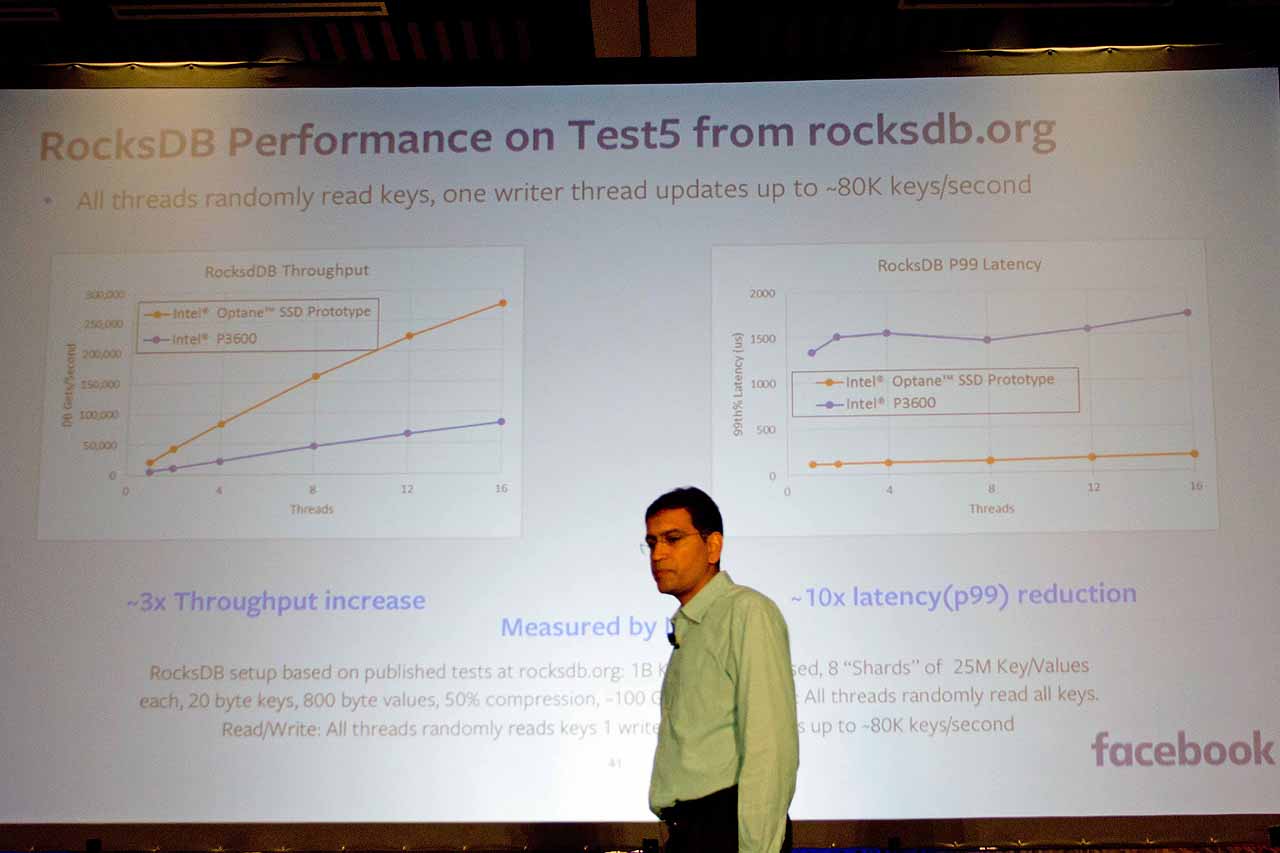

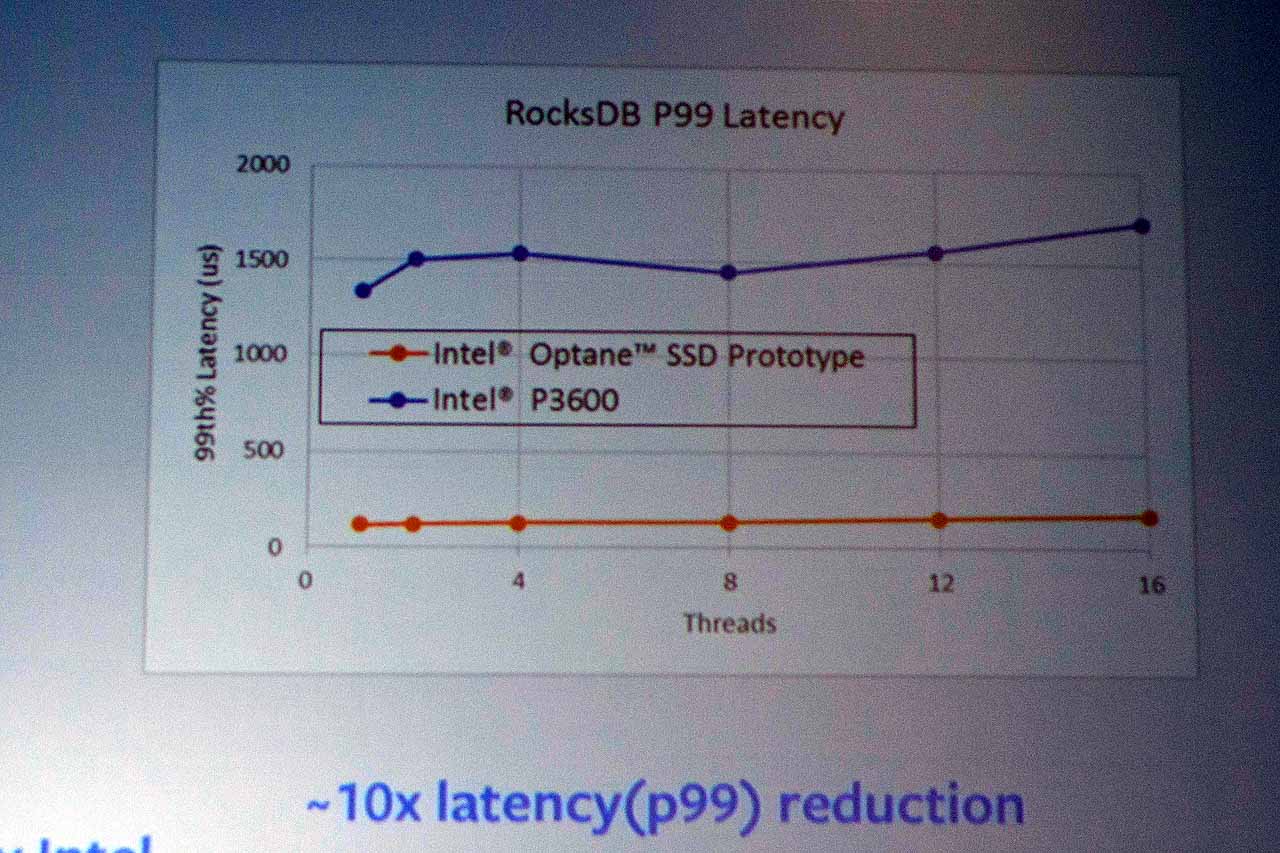

Facebook, which is already working to deploy 3D XPoint into its architecture, also joined in the benchmarking party by revealing its performance results with an Intel Optane SSD prototype. The Optane SSD tripled the number of transactions that the company achieved with a normal P3600 NAND SSD during a RocksDB throughput test, but more importantly, Optane reduced the 99.99th percentile results (worst-case latency) by more than 10X. The increase in performance is impressive, but the drastic reduction of errant I/Os will be a boon for intense transactional workloads. Facebook noted that Intel measured the results.

How Are They Doing It?

We posed a few questions to Micron during a Q&A session to determine the root of the high performance, particularly under light load. NAND manufacturers boost performance by splitting the die into several planes, which are separate sections of the NAND that respond independently to incoming program/erase commands. Planes increase performance by enhancing parallelism. NAND vendors often split die into two planes, whereas Micron split its 3D NAND into four planes.

Micron confirmed our suspicions and responded that, although the 16 GB 3D XPoint die isn't split into what would be considered "normal" planes, each die features 64 regions that can independently respond to program/erase commands, which is analogous to a 64-plane architecture. This built-in parallelism allows each 3D XPoint die to respond to data requests with roughly the parallelism of 16 entire four-plane NAND dies (64 regions divided by four planes per die).

Why So Slow?

The performance specifications, though impressive, are far below the promised "1000X the performance of NAND," but Micron representatives indicated that the company based these statements on the speed of the actual storage medium (at the cell level) before it is placed behind the SSD controller, firmware, software stack and drivers. These factors conspire to increase latency and decrease the performance of the final solution (i.e., the SSD).

Much of the leading-edge developmental work to increase performance is focusing on peeling back these layers, and some emerging techniques, such as reducing interrupts by utilizing polling drivers (found in Intel's Storage Performance Development Kit, or SPDK), can offer drastic performance increases. In many cases, fully utilizing 3D XPoint will require rearchitecting the entire stack, and moving 3D XPoint to the memory bus will unlock much higher performance because it is a faster bus that removes the performance-killing interrupts.
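The difference between interrupt-driven and polled completion can be illustrated with a toy sketch. This is not SPDK code and uses none of its API; the "device" is a hypothetical background thread, and all of the function names are invented for illustration. It only shows the control flow: one waiter sleeps until it is woken, the other spins on a completion flag.

```python
import threading
import time


def submit_io(done: threading.Event, service_time_s: float) -> None:
    """Pretend device: marks the request complete after service_time_s."""
    def device() -> None:
        time.sleep(service_time_s)
        done.set()

    threading.Thread(target=device, daemon=True).start()


def wait_interrupt_style(done: threading.Event) -> None:
    """Block until signalled; the scheduler has to wake this thread up."""
    done.wait()


def wait_polling_style(done: threading.Event) -> int:
    """Spin on the completion flag and return how many checks were made."""
    polls = 0
    while not done.is_set():
        polls += 1
    return polls


# Interrupt-style wait: the caller sleeps and is woken on completion.
first = threading.Event()
submit_io(first, service_time_s=0.01)
wait_interrupt_style(first)

# Polling-style wait: the caller burns CPU but never has to be woken.
second = threading.Event()
submit_io(second, service_time_s=0.01)
checks = wait_polling_style(second)
print(f"completed after {checks} polls")
```

Polling trades CPU cycles for the removal of the wake-up path, which only pays off when the device answers in microseconds, as 3D XPoint does. Timings from a Python sketch like this are not meaningful and should not be read as a benchmark.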

Endurance

Micron also revealed that its first QuantX SSDs would feature 25 DWPD (Drive Writes Per Day) of endurance over a five-year period with the first generation of 3D XPoint. In comparison, some enterprise SSDs based on MLC NAND provide up to 10 DWPD, whereas TLC NAND SSDs provide anywhere from less than 1 DWPD up to 5 DWPD of endurance.
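To put the DWPD ratings in absolute terms, the following sketch converts them into total data written over the five-year period. The 128 GB capacity is borrowed from the prototype card purely for illustration; Micron did not tie the 25 DWPD rating to that capacity, and the function name is ours.

```python
DAYS_PER_YEAR = 365  # leap days ignored for simplicity


def lifetime_writes_tb(dwpd: float, capacity_gb: float, years: float = 5) -> float:
    """Total terabytes written over the rating period at a given DWPD."""
    return dwpd * capacity_gb * DAYS_PER_YEAR * years / 1000


capacity_gb = 128  # illustrative assumption, not a Micron specification
for label, dwpd in (("QuantX", 25), ("MLC NAND", 10), ("TLC NAND", 5)):
    print(f"{label}: {lifetime_writes_tb(dwpd, capacity_gb):,.0f} TB written")
```

At that assumed capacity, 25 DWPD works out to 5,840 TB over five years, against 2,336 TB at 10 DWPD and 1,168 TB at 5 DWPD.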

Twenty-five DWPD is hardly an earth-shaking endurance specification, and it obviously pales compared to the 1000x increase in endurance touted during the product launch. Micron noted that it is currently tuning 3D XPoint for use as a storage device, which doesn't require as much endurance as it will when it's used as a memory device (NVDIMMs on a RAM slot).

Endurance is defined by the tolerance for raw bit error rates, and according to Micron, the 3D XPoint bit error rate is much lower than that of flash. The addition of sophisticated ECC algorithms reduces performance, and the company is balancing ECC capabilities and performance to find the "sweet spot" for storage applications. Further, 3D XPoint doesn't have to play by the normal internal SSD management rules, such as garbage collection and wear leveling, to the same extent that a NAND-based SSD does. Micron can dial back all of these processes to increase performance, which also helps to provide the impressive worst-case latency results in the Facebook tests.

Micron and Intel are designing NVDIMMs for memory applications, and the companies will tune those solutions to provide much more endurance than the storage-based products. The controllers are the real secret sauce of the development initiative, and for now, Micron is utilizing FPGAs, but one would expect the company to switch to ASICs once the design matures.

3D XPoint As Memory

The biggest advances will come when we move 3D XPoint to the memory bus, but it also will require the most change. The first step is to build support in operating systems, and that work is well underway due to the increasing use of NAND-backed DRAM, and even NAND, on the memory bus with NVDIMM form factors.

Software developers will begin to add in support for the new functionality by rewriting applications once the operating system vendors solidify support, but this process could take years. It took nearly ten years for the industry to begin using flash to its fullest potential, but Micron and Intel are both working to build a robust support ecosystem to speed the process with 3D XPoint.

Using 3D XPoint as memory also produces a number of interesting challenges. 3D XPoint is persistent, so if someone removed a DIMM from a server, they could simply plug it into another server to retrieve the data. This is a serious security concern, so all data held in the memory will require encryption. QuantX SSDs will also feature the full suite of TCG OPAL and FIPS 140-2 encryption.

For now, the best route to market is to simply provide 3D XPoint solutions as storage, because it's a drop-in replacement that will help build production volume. Shrinking lithography and stacking higher layers will increase density, which also reduces cost, but it all starts with volume, which will help reduce cost and foment widespread adoption.

Power and Heat

Power consumption is an overriding concern in data center applications, and that is where many of the leading-edge products will land. Micron revealed that 3D XPoint has power consumption metrics similar to NAND's, largely because even light workloads will "light up all of the die." However, the device delivers much more performance than NAND, so it will deliver superior efficiency (IOPS-per-Watt). Micron is also testing the device to ensure that it meets JEDEC's stringent heat requirements for SSDs. JEDEC has much higher heat thresholds for DRAM, and it remains to be seen if 3D XPoint DIMMs will fit within the normal DRAM thermal envelope.

Where To Go From Here

The bad news is that Micron is not specifying a release date for its QuantX products, but Intel stated that it intends to ship its 3D XPoint-powered Optane SSDs this year. There are still a number of unanswered questions, such as what the material actually is. Price is another looming question, as it will be the key to the success or demise of 3D XPoint solutions. We expect more news to come from IDF next week.

However, the amount of information trickling out of Micron shows that although 3D XPoint may not be as impressive as some expected, it would still enable a sea change in the computing paradigm. The performance on display was impressive, but one can only imagine what it is capable of with a PCIe 3.0 x16 connection, or better yet, PCIe 4.0.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

Zaxx420 Some may be not so impressed but this is just the very beginning of a tech thats just in its infancy (just 2 layers...lol)...give it 2-3 years and I bet it'll be a mind blow. -

manleysteele http://www.guru3d.com/news-story/micron-3d-xpoint-storage-is-four-to-five-times-as-expensive-as-nand.html

This little blurb gives me pause. I'm waiting for Z-270 MB's in anticipation of the 3D X-point memory spec, but 4-5 times more expensive makes my head hurt. -

MRFS Allyn Malventano at pcper.com writes about the U.2 chart:

http://www.pcper.com/news/Storage/FMS-2016-Micron-Keynote-Teases-XPoint-QuantX-Real-World-Performance

"These are the performance figures from an U.2 device with a PCIe 3.0 x4 link. Note the outstanding ramp up to full saturation of the bus at a QD of only 4."

If the bus can be saturated that easily, it's time to up the clock rate on PCIe lanes, at a minimum to 12 Gbps just like 12G SAS.

I'm optimistic that these non-volatile memory technologies will get refined with time, and get cheaper with mass production. Also, the PCIe 4.0 clock will oscillate at 16G, so it's time the industry embraced the concept of "overclocking" storage a/k/a variable channel bandwidth. -

anbello262 Well, 4-5 times more expensive should be expected, and even more. New technology, and when it comes to market the performance leap could be as big as the jump from HDD to SSD, and SSDs were 10 times more expensive at the beginning.

I hope this continues, but I'm also afraid of a scenario were all XPoint technology belongs to just 1 or 2 big companies, which would be awful for pricing and compatibility. -

ammaross 18415596 said: This little blurb gives me pause. I'm waiting for Z-270 MB's in anticipation of the 3D X-point memory spec, but 4-5 times more expensive makes my head hurt.

Rather than 4-5 times more expensive than NAND, you should be thinking of it as half the price of DRAM (as it's closer to DRAM specs than it is NAND specs). The charts show it being crippled by the interface, not the tech. -

MRFS https://forums.servethehome.com/index.php?threads/highpoint-has-done-it-rocketraid-3800a-series-nvme-raid-host-bus.10847/

-

anonymous1000 "Much of the leading-edge developmental work to increase performance is focusing on peeling back these layers... such as reducing interrupts by utilizing polling drivers ... can offer drastic performance increases" - I really don't know how realistic this idea is, but maybe its worth trying using pcie entries (cards, cables) inside big data (fastest nand etc..) just as SAS controllers are used in SCSI /SATA/ethernet. Does that make sense? If many pcie connections to fast data can work simultaneously and manipulate the data, then we can drop Ethernet style switches with load balancing, latency and other last millennium concerns .. no? -

hannibal I expect it to be at least 10 times or even more expensive during the release than normal nand. In the longer run 4 times more than normal nand may sounds plausible.

First devices will go to big corporates that handle a lot of data, like Google and Facebook who has more money, than is needed to get very high speed storage.

So first devices are like luxury sport cars, fast and expensive. -

MRFS > I really don't know how realistic this idea is

From an analytical point of view, I share your concern.

Here's why:

When doing optimization research, we focus on where a system is spending most of its time.

For example, if the time spent is distributed 90% / 10%, cutting the 90% in half is MUCH more effective than cutting the 10% in half.

Now, modern 6G SSDs are reaching 560 MB/second on channels oscillating at 6 GHz ("6G").

The theoretical maximum is 6G / 10 bits per byte = 600 MB/second (1 start bit + 8 data bits + 1 stop bit = "legacy frame")

So, what percentage of that ceiling is overhead?

Answer: (600 - 560) / 600 = 40 / 600 = 6.7%

WHAT IF we merely increase the channel clock to 8 GHz?

Then, 8G / 10 bits per byte = 800 MB/second

That alone raises the ceiling by 800/600 = 33.3%

Now, add PCIe 3.0's 128b/130b jumbo frame (130 bits / 16 bytes).

Then, 8G / 8.125 bits per byte = 984.6 MB/second

984.6 / 600 = 64% improvement

And, what if we increase the channel clock to 12G (like SAS) -and- we add jumbo frames too:

Then, 12G / 8.125 bits per byte = 1,476.9 MB/second

1,476.9 / 600 = 146% improvement

I submit to you that the clock rate AND the frame size are much more sensitive factors.

Yes, latencies are also a factor, but we must be very realistic about "how sensitive" each factor is, in fact, and be empirical about this question, NOT allowing theories to "morph" into fact without experimental proof.

-

manleysteele 18416571 said: 18415596 said: This little blurb gives me pause. I'm waiting for Z-270 MB's in anticipation of the 3D X-point memory spec, but 4-5 times more expensive makes my head hurt.

Rather than 4-5 times more expensive than NAND, you should be thinking of it as half the price of DRAM (as it's closer to DRAM specs than it is NAND specs). The charts show it being crippled by the interface, not the tech.

I'm thinking of the usage, not the specs. In the end, no matter where it's deployed, it's still memory as storage, like NAND. It's a NAND replacement on a memory bus. The early adapters will pay a premium. I accept that. What I won't accept is 32-64GByte of storage, for the price of 2TBytes of storage in my precious memory slot. I'll just populate with memory and go on about my business in that case. Unless and until Intel and Micron get this price structure under control, I'll wait.