Intel To Launch Spring Crest, Its First Neural Network Processor, In 2019

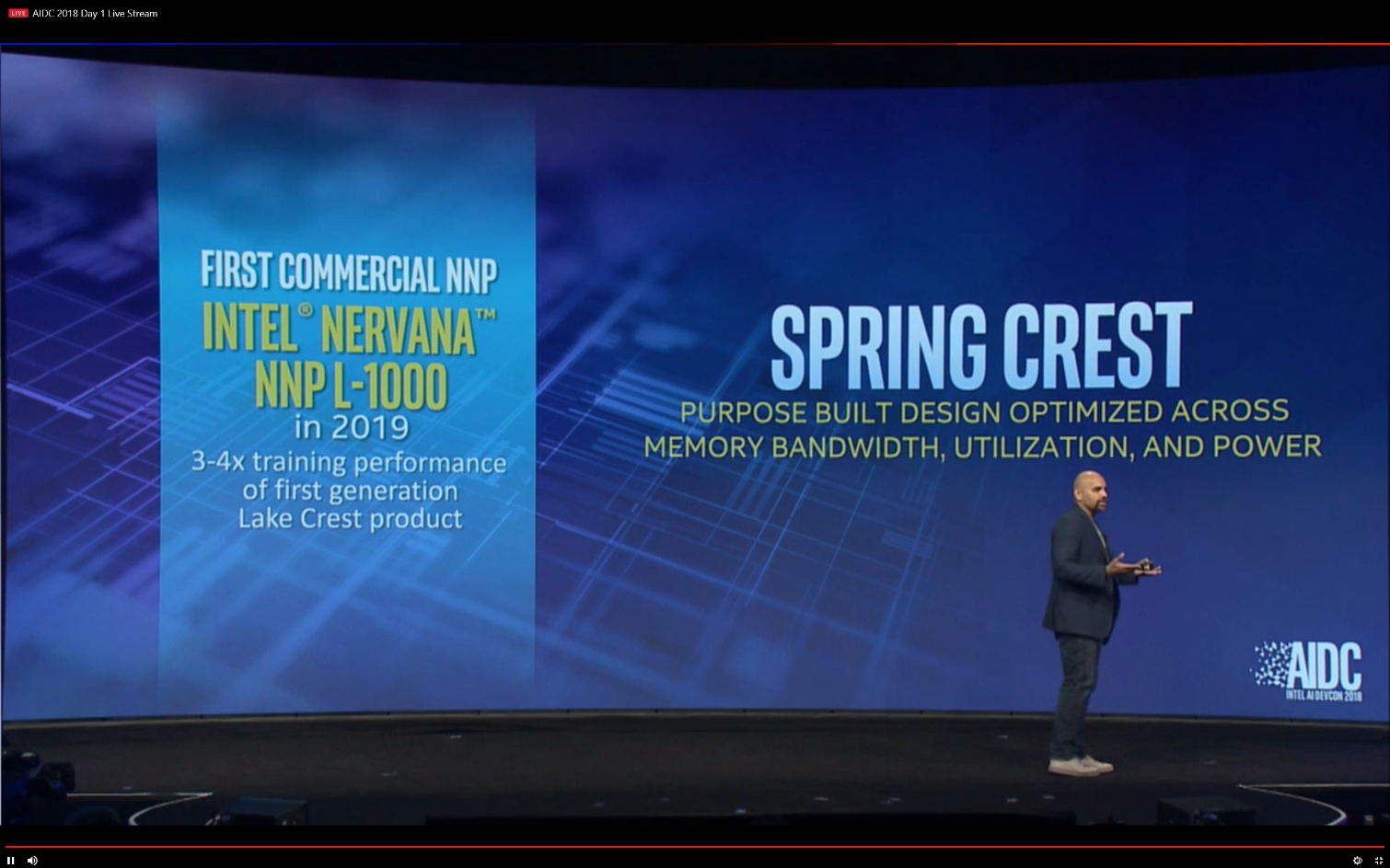

At its first AI Developer Conference, Intel announced the Nervana NNP-L1000, which is the first neural network processor (NNP) to come out of the Nervana acquisition. The chip will prioritize memory bandwidth and compute utilization over theoretical peak performance.

Intel’s New Attempt To Succeed In The ML Market

Initially, Intel competed with Nvidia in the machine learning (ML) chip market using its Xeon Phi architecture, which used dozens of Atom-derived cores to “accelerate” ML tasks. However, Intel must have realized that Phi alone wouldn’t let it catch up to Nvidia, which seems to make significant performance leaps every year.

As such, the company began looking for other options, which led it to buy Altera for its field programmable gate arrays (FPGAs), Movidius for its embedded vision processors, Mobileye for its self-driving chips, and Nervana for its specialized neural network processor. Intel has also started working on its own dedicated GPU, as well as on neuromorphic and quantum computing chips.

Intel calls all of these options a “holistic approach” to artificial intelligence. However, the company may also want to avoid betting everything on a single architecture again, as it did with Phi, and falling even farther behind Nvidia in the ML chip market. On the other hand, this scattered ML strategy may also confuse developers, who won’t know which technology Intel will back most in the long term (and Intel may not know yet, either).

Intel’s Nervana NNP-L1000

For now, Intel seems to be focusing on pushing its Nervana chips to ML researchers, possibly because Nervana’s architecture may be the one that competes most directly with Nvidia (at least until Intel’s dedicated GPUs arrive).

The Nervana NNP-L1000, code-named Spring Crest, seems to emphasize high memory bandwidth and low latency rather than peak trillions of operations per second (TOPS).
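One common way to see why a chip might favor memory bandwidth over peak TOPS is the roofline model (our illustration, not Intel’s own analysis): attainable throughput is the lesser of peak compute and memory bandwidth multiplied by arithmetic intensity (operations per byte moved). The minimal Python sketch below assumes 2-byte (bfloat16-sized) elements and an idealized GEMM that reads each input matrix and writes the output once. PEAK_TOPS and MEM_BW_TBPS are hypothetical placeholders, not Spring Crest specifications.

```python
# Roofline sketch: throughput is capped either by peak compute or by how fast
# memory can feed the compute units.
# PEAK_TOPS and MEM_BW_TBPS are HYPOTHETICAL placeholders, not Intel specs.
PEAK_TOPS = 40.0     # hypothetical peak compute, tera-ops per second
MEM_BW_TBPS = 1.0    # hypothetical memory bandwidth, terabytes per second


def gemm_intensity(m: int, k: int, n: int, bytes_per_elem: int = 2) -> float:
    """Ops per byte for C = A(m, k) @ B(k, n), assuming A and B are read once
    and C is written once (an idealized lower bound on memory traffic)."""
    ops = 2 * m * k * n  # one multiply + one add per inner-product term
    traffic = bytes_per_elem * (m * k + k * n + m * n)
    return ops / traffic


def attainable_tops(intensity: float, peak: float = PEAK_TOPS,
                    bw: float = MEM_BW_TBPS) -> float:
    """Roofline bound: min(peak compute, bandwidth * arithmetic intensity).
    TB/s multiplied by ops/byte gives tera-ops per second."""
    return min(peak, bw * intensity)


# A large GEMM (Intel's single-chip shape) versus a hypothetical
# matrix-vector product that reuses almost none of the data it loads.
for m, k, n in [(1536, 2048, 1536), (1, 2048, 1536)]:
    ai = gemm_intensity(m, k, n)
    print(f"A({m}, {k}) x B({k}, {n}): {ai:.1f} ops/byte -> "
          f"{attainable_tops(ai):.2f} TOP/s attainable")
```

With these placeholder numbers, the large GEMM hits the compute ceiling, while the matrix-vector case is limited by memory bandwidth to about 1 TOP/s no matter how high the peak is. Workloads of the second kind are where extra bandwidth, rather than extra peak TOPS, pays off.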

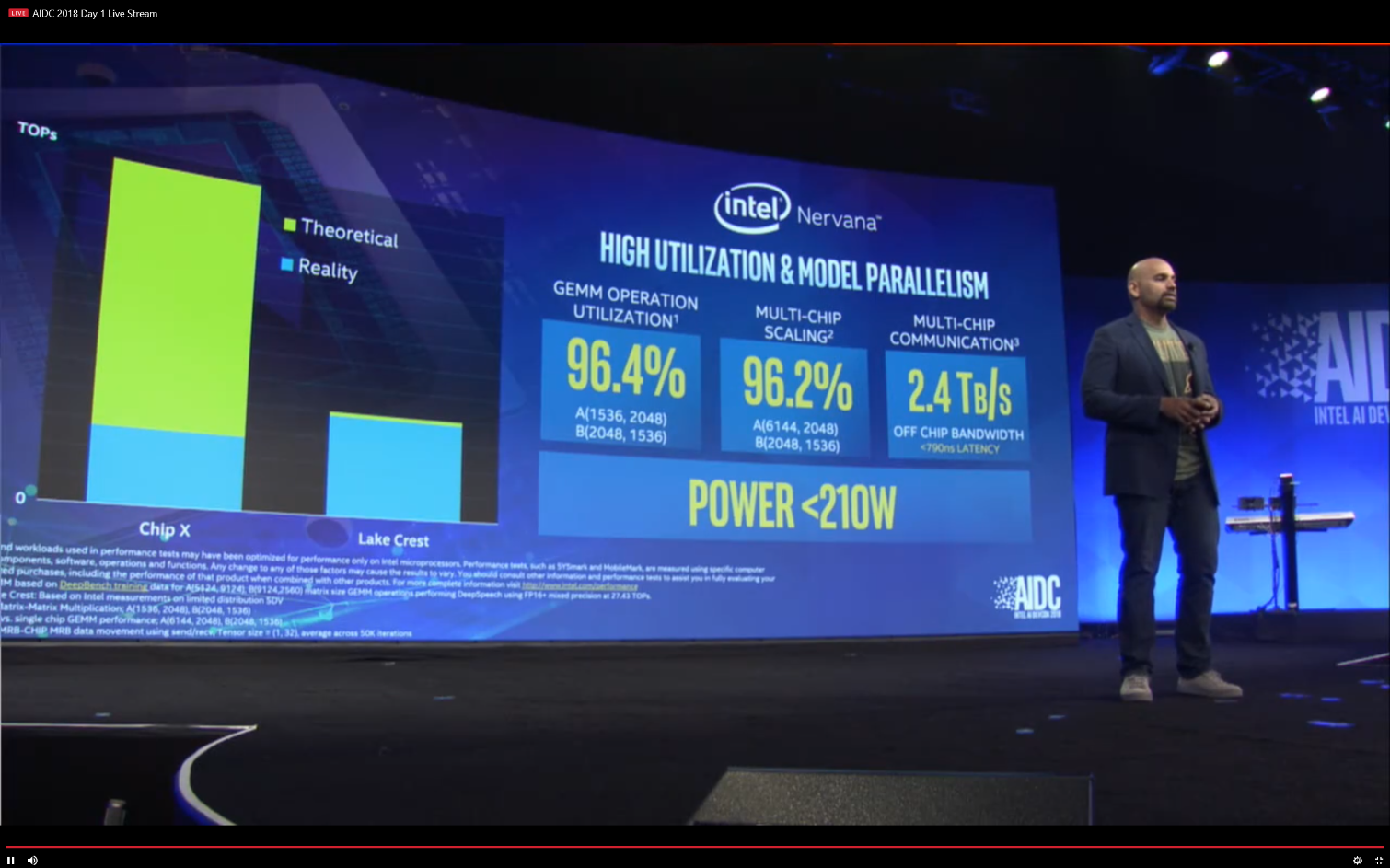

Intel showed the following performance numbers for its Lake Crest prototype, which is currently being demoed to some partners:


General Matrix to Matrix Multiplication (GEMM) operations using A(1536, 2048) and B(2048, 1536) matrix sizes have achieved more than 96.4 percent compute utilization on a single chip. This represents around 38 TOP/s of actual (not theoretical) performance on a single chip.

Multichip distributed GEMM operations that support model parallel training are realizing nearly linear scaling and 96.2 percent scaling efficiency for A(6144, 2048) and B(2048, 1536) matrix sizes – enabling multiple NNPs to be connected together and freeing us from memory constraints of other architectures.

We are measuring 89.4 percent of unidirectional chip-to-chip efficiency of theoretical bandwidth at less than 790ns (nanoseconds) of latency and are excited to apply this to the 2.4Tb/s (terabits per second) of high bandwidth, low-latency interconnects.

All of this is happening within a single chip total power envelope of under 210 watts. And this is just the prototype of our Intel Nervana NNP-L1000 (Lake Crest) from which we are gathering feedback from our early partners.
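Intel’s figures support some quick back-of-the-envelope arithmetic. The Python sketch below is our own estimate, not Intel’s math. It assumes each multiply-accumulate counts as two operations (the quote doesn’t say how Intel counted), treats “under 210 watts” as an upper bound, and assumes, hypothetically, that the measured 89.4 percent link efficiency would also hold for the full 2.4Tb/s interconnect. The four-chip scaling example is illustrative only, since Intel gave no chip count.

```python
# Inputs are taken from Intel's quote; everything derived is our own estimate.
MEASURED_TOPS = 38.0      # "around 38 TOP/s of actual ... performance"
UTILIZATION = 0.964       # "more than 96.4 percent compute utilization"
POWER_W = 210.0           # "under 210 watts", so treated as an upper bound
LINK_EFFICIENCY = 0.894   # "89.4 percent ... of theoretical bandwidth"
INTERCONNECT_TBPS = 2.4   # "2.4Tb/s (terabits per second)"


def gemm_ops(m: int, k: int, n: int) -> int:
    """Ops for A(m, k) @ B(k, n), counting each multiply-accumulate as 2 ops
    (an assumed convention; the quote doesn't say how Intel counted)."""
    return 2 * m * k * n


def scaling_efficiency(speedup: float, chips: int) -> float:
    """Achieved multichip speedup divided by the ideal (linear) speedup."""
    return speedup / chips


implied_peak = MEASURED_TOPS / UTILIZATION            # peak, if 38 TOP/s is 96.4% of it
ops = gemm_ops(1536, 2048, 1536)                      # single-chip benchmark shape
time_us = ops / (MEASURED_TOPS * 1e12) * 1e6          # one GEMM at 38 TOP/s, microseconds
tops_per_watt = MEASURED_TOPS / POWER_W               # a floor, since power is below 210 W
effective_tbps = INTERCONNECT_TBPS * LINK_EFFICIENCY  # ASSUMES 89.4% holds at 2.4 Tb/s

print(f"implied peak:        {implied_peak:.1f} TOP/s")
print(f"ops per GEMM:        {ops / 1e9:.2f} billion")
print(f"time per GEMM:       {time_us:.1f} us")
print(f"efficiency (floor):  {tops_per_watt:.2f} TOP/s per watt")
print(f"usable interconnect: {effective_tbps:.2f} Tb/s")
# HYPOTHETICAL: 4 chips delivering a 3.848x speedup would be 96.2% efficient.
print(f"scaling efficiency:  {scaling_efficiency(3.848, 4):.3f}")
```

Taken at face value, the quoted numbers imply a theoretical peak of roughly 39.4 TOP/s, about 254 microseconds per single-chip GEMM of that size, and at least 0.18 TOP/s per watt. Treat these as rough estimates rather than Intel specifications.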

According to Intel, the Nervana NNP-L1000, which will be the first Nervana product to ship to customers, promises 3-4x the neural network training performance of Lake Crest.

Intel said that the NNP-L1000 would also support bfloat16, a numerical format that’s being adopted by all the ML industry players for neural networks. The company will also support bfloat16 in its FPGAs, Xeons, and other ML products. The Nervana NNP-L1000 is scheduled for release in 2019.
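bfloat16 keeps float32’s sign bit and 8-bit exponent but only 7 explicit mantissa bits, so it covers roughly the same range as float32 with much less precision. The minimal Python sketch below converts by simply dropping the low 16 bits of a float32 encoding (plain truncation). Real hardware may round to nearest even instead, and the helper names are hypothetical, used only for illustration.

```python
import struct


def to_bfloat16_bits(x: float) -> int:
    """Keep the top 16 bits of x's float32 encoding (truncation, no rounding).
    x must fit in float32, otherwise struct raises OverflowError."""
    f32_bits = struct.unpack(">I", struct.pack(">f", x))[0]
    return f32_bits >> 16


def from_bfloat16_bits(b: int) -> float:
    """Widen back to float32 by zero-filling the 16 dropped mantissa bits."""
    return struct.unpack(">f", struct.pack(">I", b << 16))[0]


def bf16_truncate(x: float) -> float:
    """Round-trip x through the truncated 16-bit format."""
    return from_bfloat16_bits(to_bfloat16_bits(x))


for value in [1.0, 1.0 + 2**-7, 1.0 + 2**-8, 3.0e38]:
    print(value, "->", bf16_truncate(value))
```

With 7 mantissa bits, the gap between 1.0 and the next bfloat16 value is 2^-7 (0.0078125), so 1 + 2^-8 collapses to 1.0. Meanwhile, a value like 3e38, far beyond IEEE float16’s maximum of 65504, still survives with under 1 percent relative error. That is the high-range, low-precision trade-off that suits neural network training.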

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.

-

hixbot My cpu is a neural net processor, a learning computer. https://www.youtube.com/watch?v=xcgVztdMrX4

bit_user (quoting the article): “Intel said that the NNP-L1000 would also support bfloat16, a numerical format that’s being adopted by all the ML industry players for neural networks. The company will also support bfloat16 in its FPGAs, Xeons, and other ML products.”

https://stackoverflow.com/questions/44873802/what-is-tf-bfloat16-truncated-16-bit-floating-point?

In other words, high-range and low-precision. Good for ML. For everything else, mind the epsilon!

stdragon Basically, it will be leveraged for analytic processing and AI assistance based on that via fuzzy logic.

Personally, I see it as a "spyware accelerator" used for background tasks that aren't explicitly yours. Call it "Shadow Computing", where the analytics rely on greater data collection, processing capability, and data resolution.

Whatever CPU will have this built-in, I want it turned off.

bit_user (replying to stdragon): “Whatever CPU will have this built-in, I want it turned off.”

Dude, you already have a neural network accelerator in your PC. It's called a GPU.

So far, the specialized chips & engines are only for cloud and embedded use.

It's hard to see a good case for putting embedded neural processing engines in a desktop CPU. To be worthwhile, they require a substantial amount of die area (i.e. added cost). Furthermore, they have a lot of functional overlap with GPUs, yet they don't offer a large enough performance advantage over GPUs to justify the added cost for mass-market products.

derekullo (quoting the article): “The chip will prioritize memory bandwidth ...”

All I hear is potential Ethereum miner