Intel Grabs AMD's Raja Koduri, Outlines Broad GPU Vision

On the heels of his exit from AMD, Raja Koduri has found work at Intel, heading a newly formed division called the Core and Visual Computing Group. This new group's purpose is to develop new Intel high-end discrete graphics cards.

The news comes less than 24 hours after AMD confirmed Koduri’s departure, and many speculated about what would be next for the former AMD GPU figurehead. With Intel’s recent shocking announcement of Kaby Lake-H chips featuring AMD GPUs, the idea that Koduri would be trading in his red shirt for a blue one wasn’t out of the realm of possibility. Now, Intel has confirmed that Koduri will indeed join the company in its quest to drive its iGPU and discrete GPU segments.

“Intel today announced the appointment of Raja Koduri as Intel chief architect, senior vice president of the newly formed Core and Visual Computing Group, and general manager of a new initiative to drive edge computing solutions,” stated the press release. “In this position, Koduri will expand Intel’s leading position in integrated graphics for the PC market with high-end discrete graphics solutions for a broad range of computing segments.”

The announcement is as significant as it is vague. It points to an even bigger Intel investment in the GPU market, and Koduri could have a beefier budget than he had at AMD, but it's unclear exactly what this group will be making.

The release mentions that the Core and Visual Computing Group is a "new initiative to drive edge computing solutions" and that it will "unify and expand differentiated IP across computing, graphics, media, imaging and machine intelligence capabilities for the client and data center segments, artificial intelligence, and emerging opportunities like edge computing." That's incredibly broad and could mean almost anything; the language is certainly inclusive (and vague) enough to hint at (or at least not rule out) GPUs for gaming-oriented PC graphics cards or for AI data centers.

One also wonders if and how Koduri will poach his best brains from AMD and bring them to Intel. "If," because it's unclear whether he needs to build a team or whether Intel already has a bevy of engineers ready to execute on the complete picture; and "how" because, presumably, there are non-compete agreements littering the halls of both companies.

There are many questions left unanswered. We've reached out to AMD for further comment, and to Intel to see what other immediate details the company is ready to provide, if any. What is certain is that AMD lost one of its big names to one of its two toughest competitors.


Zooming Out

One thing that we can speculate about is why Intel was an attractive avenue for Koduri, and what Intel is banging on about in its release regarding its new prize talent—something it has clearly been after for some time.

Intel's push into the client GPU market is almost a given as it works to build its new discrete GPUs, particularly because it will allow the company to build scale and whittle away at its competitors' market share. It goes without saying that Intel's integrated graphics will also stand to benefit from the new GPU focus. A new entry in the high-end desktop graphics market—and that's one key area Intel is aiming for—is important, but as we've seen with Nvidia's shifting focus, AI will generate even more revenue over the coming years.

Intel is nine months into its three-year restructuring that began with a bang in the form of 11,000 layoffs. Intel is diversifying into data center adjacencies and other new areas, such as IoT and automated driving, as it reduces its reliance on its bread and butter desktop PC market, which continues to decline steadily. Even though all of these new focus areas feature profoundly different silicon use-cases, AI is one of the technologies that touches all of them. Perhaps most important to Intel, AI-driven architectures are taking over the data center at an amazing rate. And CPUs aren't the preferred solution.

That means Intel has to address the AI elephant in the room in one form or another, and the company actually chose several. AI workloads can execute on several different forms of compute, such as FPGAs, ASICs, CPUs, and GPUs. Each form of compute has its own advantages and disadvantages, but GPUs are widely viewed as the most flexible route for machine learning and inference.

CPUs can execute AI workloads, but they aren't as efficient from a performance-per-watt perspective, a critical aspect in the data center, and their performance lags woefully far behind other solutions. No amount of AVX-512 optimization will suddenly push CPUs into contention as the best solution for AI workloads, although processors are useful in data centers that can run AI workloads, such as AI-based analytics, during off-peak hours.

Intel also has other home-grown solutions for AI workloads, such as Xeon Phi Knights Landing and Knights Mill, which sprang up from the ashes of Larrabee (Intel's second failed discrete GPU attempt). Again, these solutions have very specific tasks, so they aren't one-size-fits-all.

FPGAs are also a good fit for tightly integrated low-latency solutions, so Intel's recent Altera acquisition is a key component to the AI puzzle. Diving further into customizable solutions, ASICs offer the best performance-per-watt for AI workloads, but they address relatively narrow market segments. In that vein, Intel purchased Nervana in 2016.

Intel is also pushing into other areas that can arguably benefit the most from GPUs, such as automated driving and IoT. Edge processing in IoT devices, which primarily consists of lightweight inference workloads, is a key part of Intel's strategy.

That gives Intel a solution in its AI portfolio for nearly every segment, with Xeons, Xeon Phi, FPGAs, and ASICs, but the lack of GPUs is a glaring deficiency, particularly given Nvidia's AI dominance in the data center. Intel is betting a big part of the company's future on data center-driven revenue, and addressing the need for GPUs in the AI market plays a key role. The company is also seeing tremendous revenue growth from its new IoT division, and because IoT devices push data back to the data center, it's a highly complementary segment to Intel's core revenue driver.

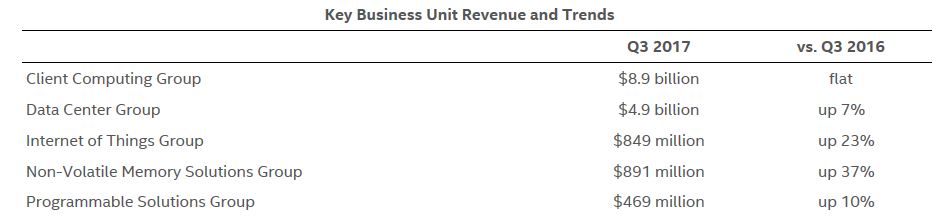

Intel has a plan, but it's going to take brains and heavy investments to bring it to reality. Koduri is obviously the beginning of solving the technical challenges, but, as evidenced by the formation of the new Core and Visual Computing Group, Intel is also clearly ready to invest heavily in bringing its new graphics solutions to market. Intel currently has five key revenue-generating business units, and we can get a sense of the heft of each unit just by observing the Q3 revenue of each of the segments. For perspective, AMD's entire Computing and Graphics group, which consists of both processors and graphics revenue, weighed in at $819 million last quarter.

Intel also has a bevy of complementary technologies, such as EMIB, that can help integrate GPUs tightly into a broad range of devices from the data center to the edge.

Intel's press release is quite clear that the company is going to make a scalable solution that will enhance revenue in each of these key segments, so the formation of the new Core and Visual Computing Group represents the last piece in a much larger puzzle for Intel.

For now, details are sparse. But we are heading into several important trade shows, and we can expect the company to release more details in due time.

Derek Forrest was a contributing freelance writer for Tom's Hardware. He covered hardware news and reviews, focusing on gaming desktops and laptops.


vern72 This is the one thing that baffles me about Intel: how come they can't make a decent graphics chip? I guess this will be put to rest in the near future now.

kinggremlin Raja looks really happy in that profile picture. That's the smile of someone moving to a company where his paycheck won't bounce any more and who won't have to hold bake sales every week to raise funds for his department.

Eliad Buchnik they did try it was called project larrabee, it failed to deliver by the time

it supposed to release a GPU that actually rivaled amd and Nvidia offerings (was around 2010) the are many reason why it couldn't achieve that, the main one is delays, in a market that rapidly improves overtime delay of your product could be fetal if its performance is not amazingly better.

Rob1C He'll probably be in charge of an Altera Knights Movidius Nervana - an AIPU.

They shouldn't take another kick at an uninteresting iGPU that would fail to compete on too many fronts and be a Patent Minefield.

motocros1 when i read his departure letter i smelled a thanks but someone else is going to pay better

hannibal Well Intel is not gonna pay long time for amd gpu for it new embedded system. Less than a year They have a substitute of their own...

mzadotcom Can't tell you how happy I am about this! I watched AMD's Vega development from day one and it can be said that this man shoulders a huge part, if not all, of it's overall design, performance and launch failure. He didn't even have the b@lls to actually admit the shoddy effort put in by him and his Radeon Technology Group division. When all was said and done, Koduri simply put out a laughable, undeserved pat on the back statement while announcing his 3-month "hiatus"...

https://videocardz.com/72498/raja-koduri-is-taking-a-3-month-break-from-rtg

So fantastic news for AMD! Good riddance 8^).

The completely incompetent job he did while having all the time in the world to produce something competitive vs. NVIDIA/Intel has proven his lack of leadership and vision. Now Intel, with it's underhanded dealings, general shady business practices and having manufacturing plants in apartheid entities, can choke on him.

To quote Powers Boothe/"Curly Bill Brocius" from "Tombstone" ~ "Well...................Bye."

Andrejs Sosnovskis at least the next vega will be on intel, hopefully AMD will get somebody who knows how to make good gaming gpus

-Fran- Well, Intel has the money to build a full "playground" for Mr. Raja. I'm pretty profanity sure he's very excited about it. I'm sure as profanity I'd be.

For all I want AMD to succeed, they are in a financial red zone still, so they don't have the massive R&D budget Apple nor Intel have to satisfy someone like Raja (this is my impression). He might have loved his time with ATI, but that Company is long gone now.

Let's see if Intel can now be competitive with whatever processor Raja ends up leading/creating. AI and GPU are most likely candidates.

Cheers!