Intel Shows Conceptual 2035 Xe Graphics Cards, Provides Updates

Intel's "Join the Odyssey" initiative is in full swing here at Computex 2019, with the company holding an Odyssey Taipei event to reveal its new Graphics Command Center and show off some conceptual fan art of what graphics cards may look like in the far future of 2035. The initiative is a far-ranging outreach program designed to keep enthusiasts up to date on the latest developments through an Intel Gaming Access newsletter, outreach events, and even a beta program. The information-sharing goes both ways, though, as the company also plans to use the program to gather feedback from gamers.

Intel's Gregory Bryant, the head of the company's client compute business, kicked off the event, with Lisa Pierce, the VP of the Graphics and Software team, later taking the stage to announce that a new version of Intel's Graphics Command Center is available today from the Microsoft Store. The software comes with six skins and 44 new games with one-click optimizations, and the power optimizations page is now active.

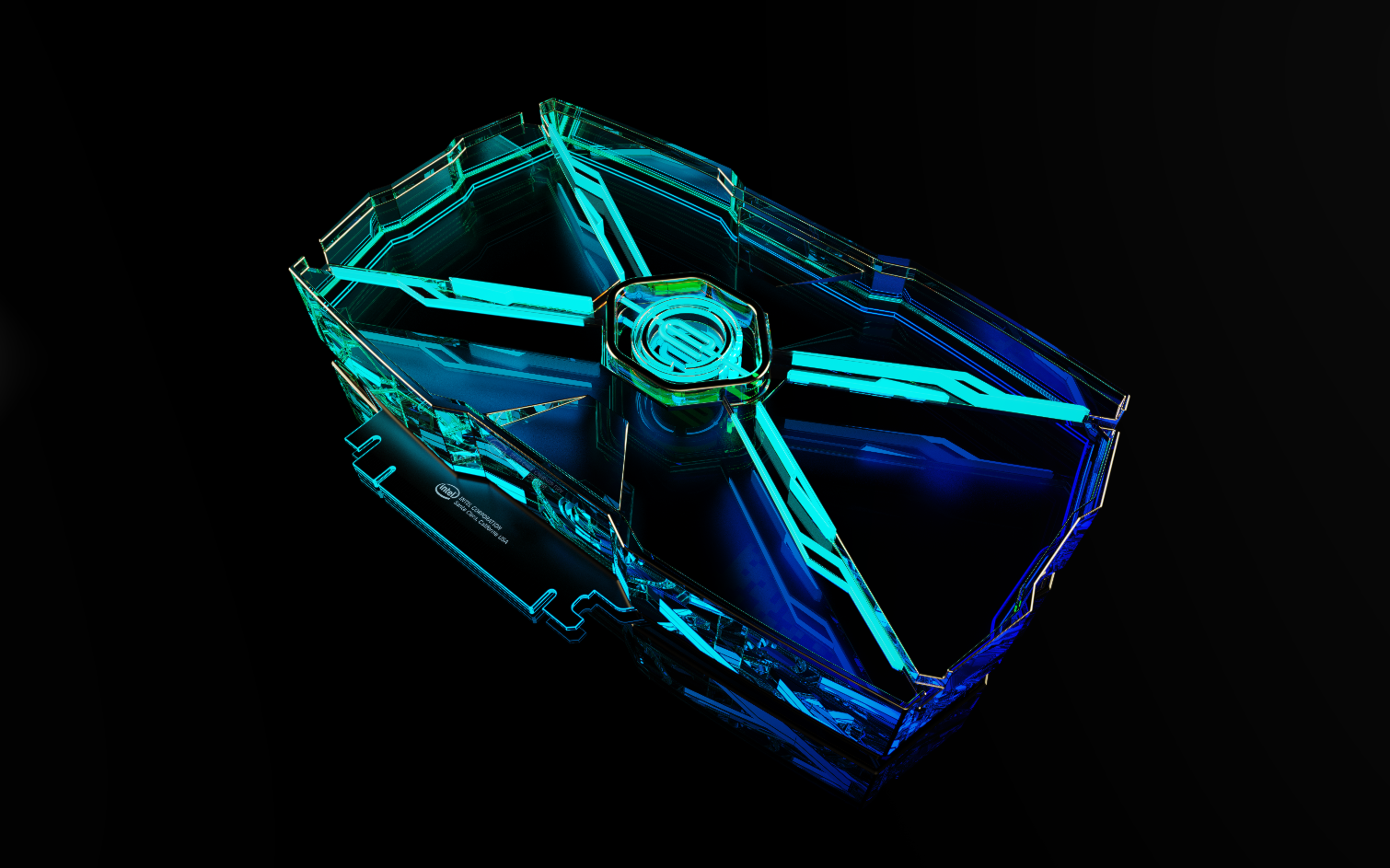

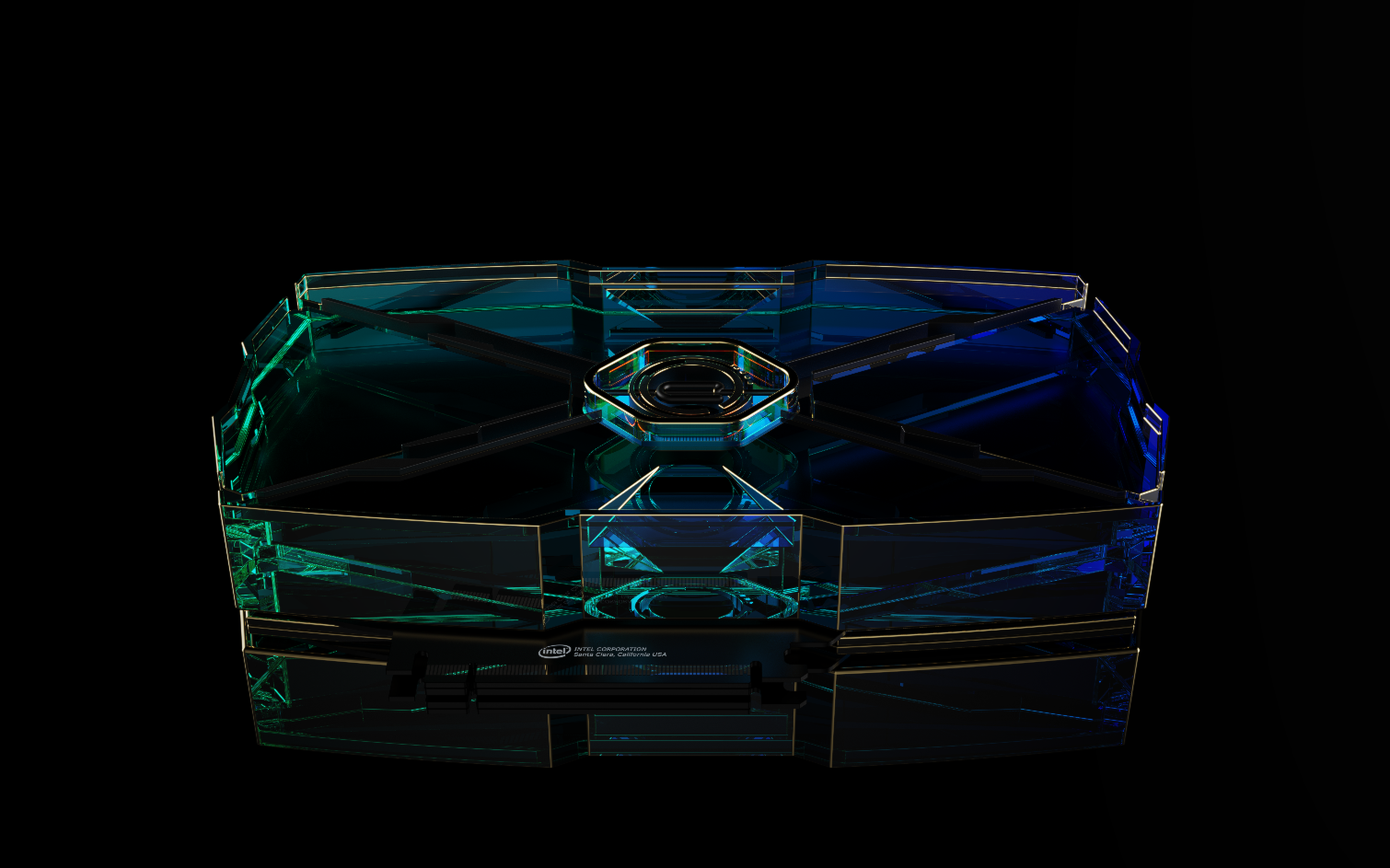

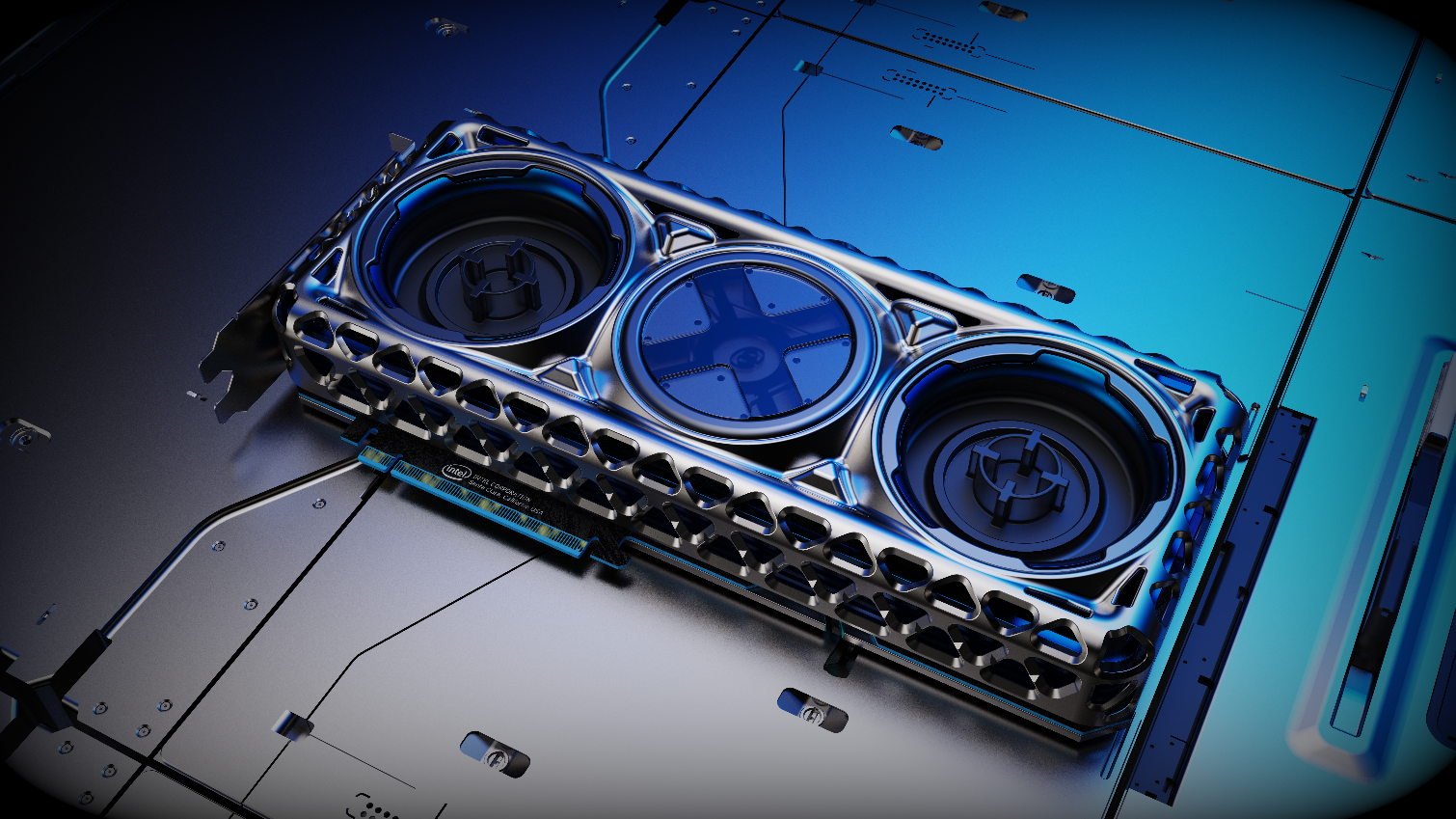

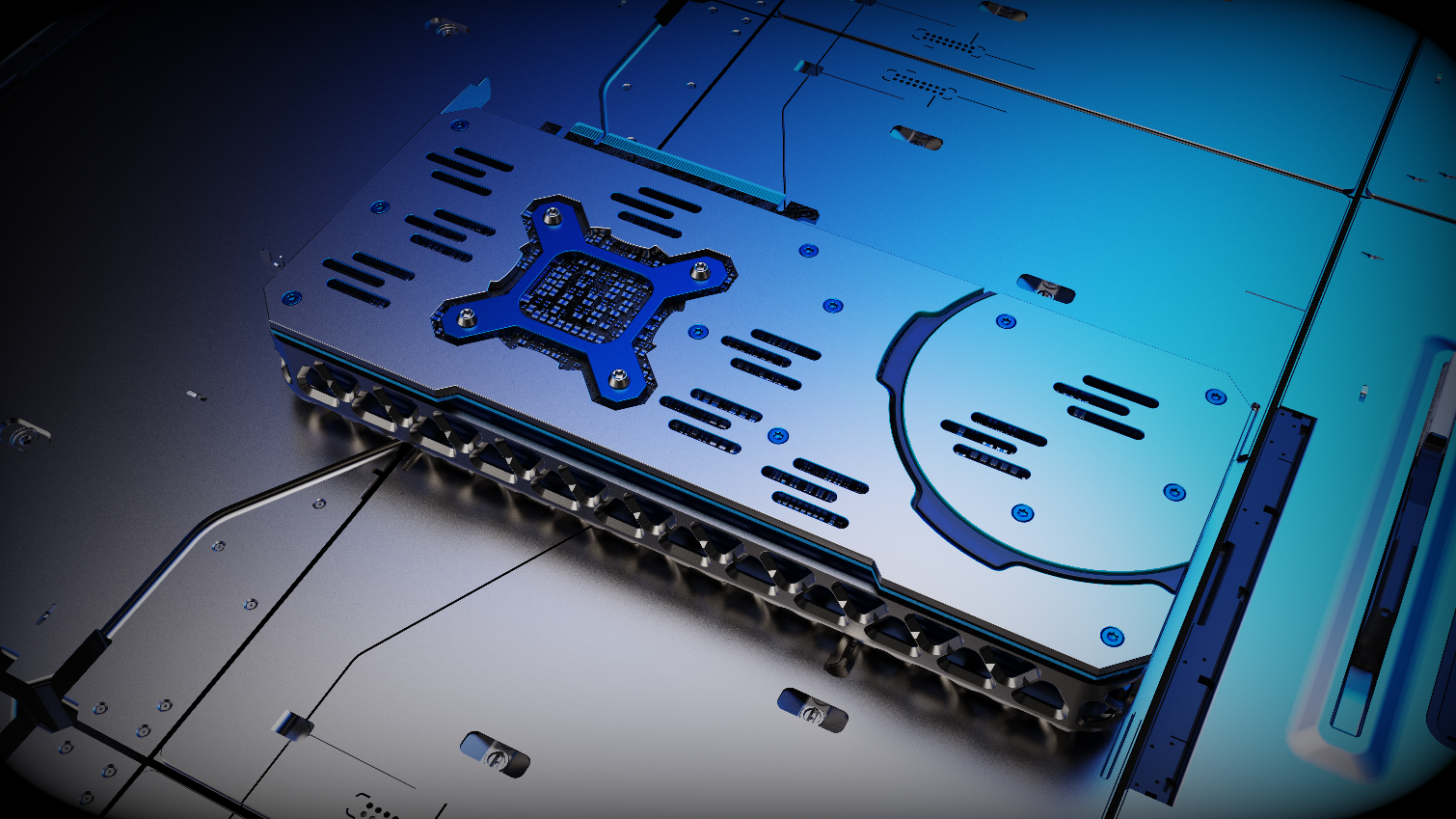

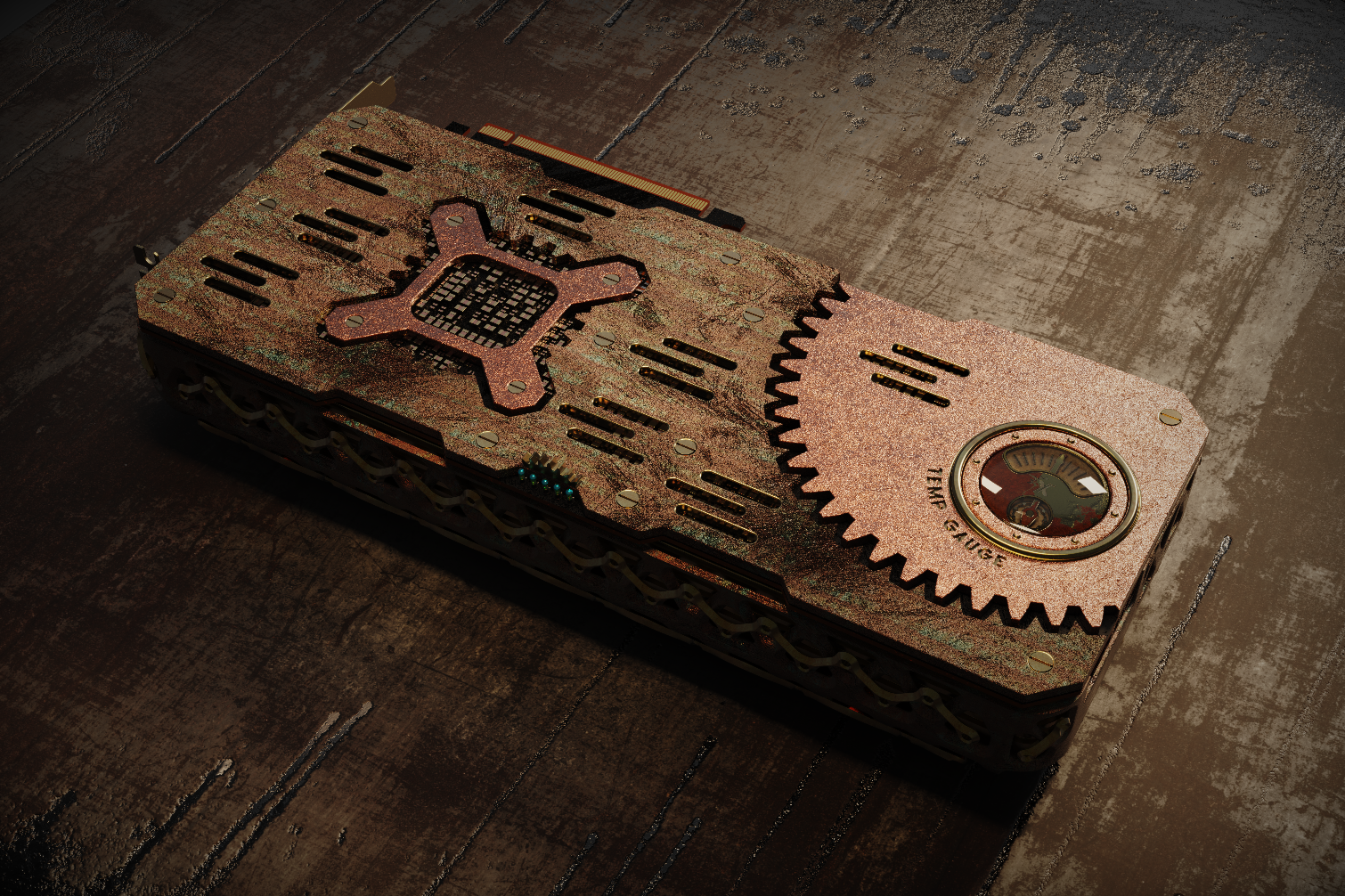

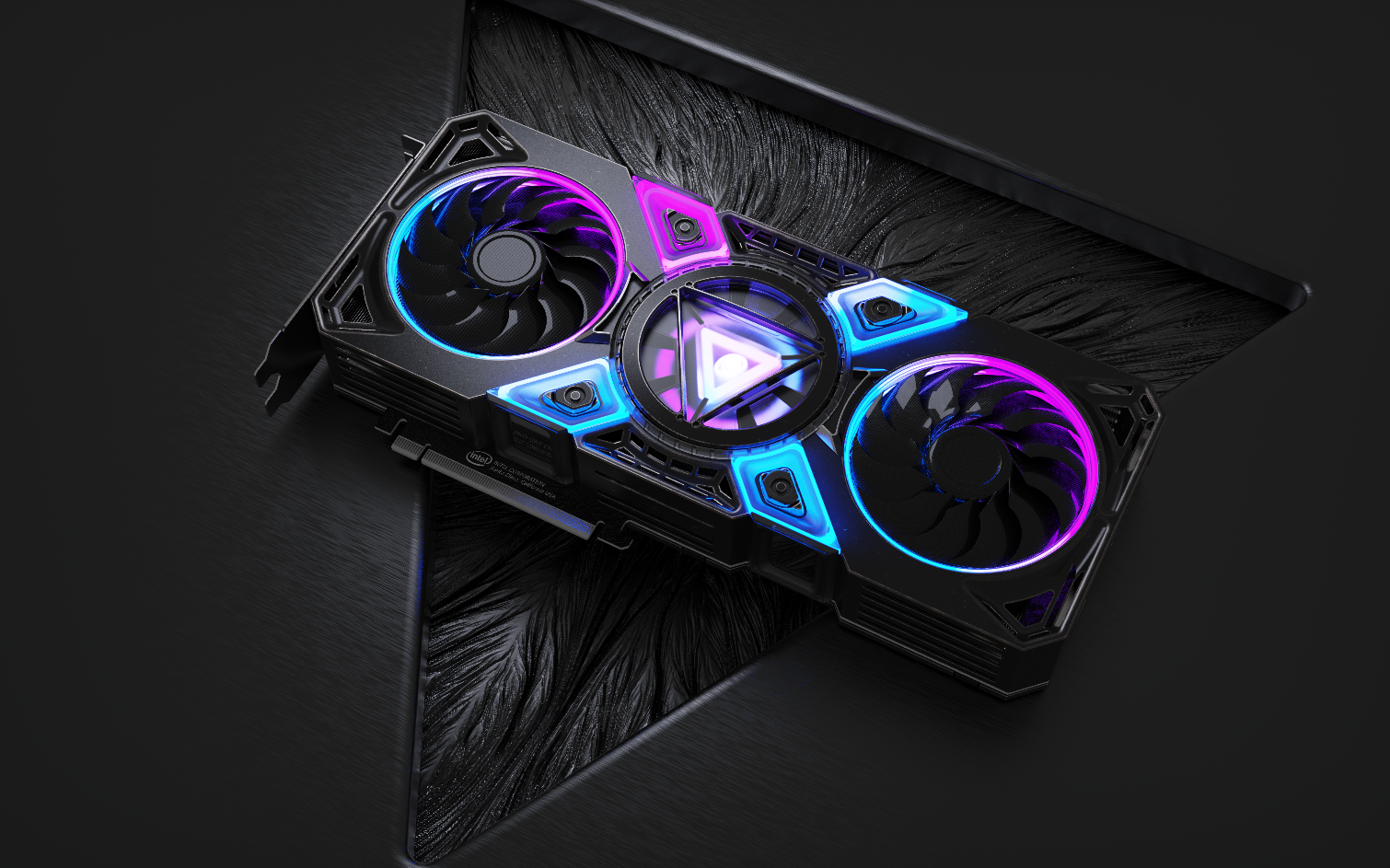

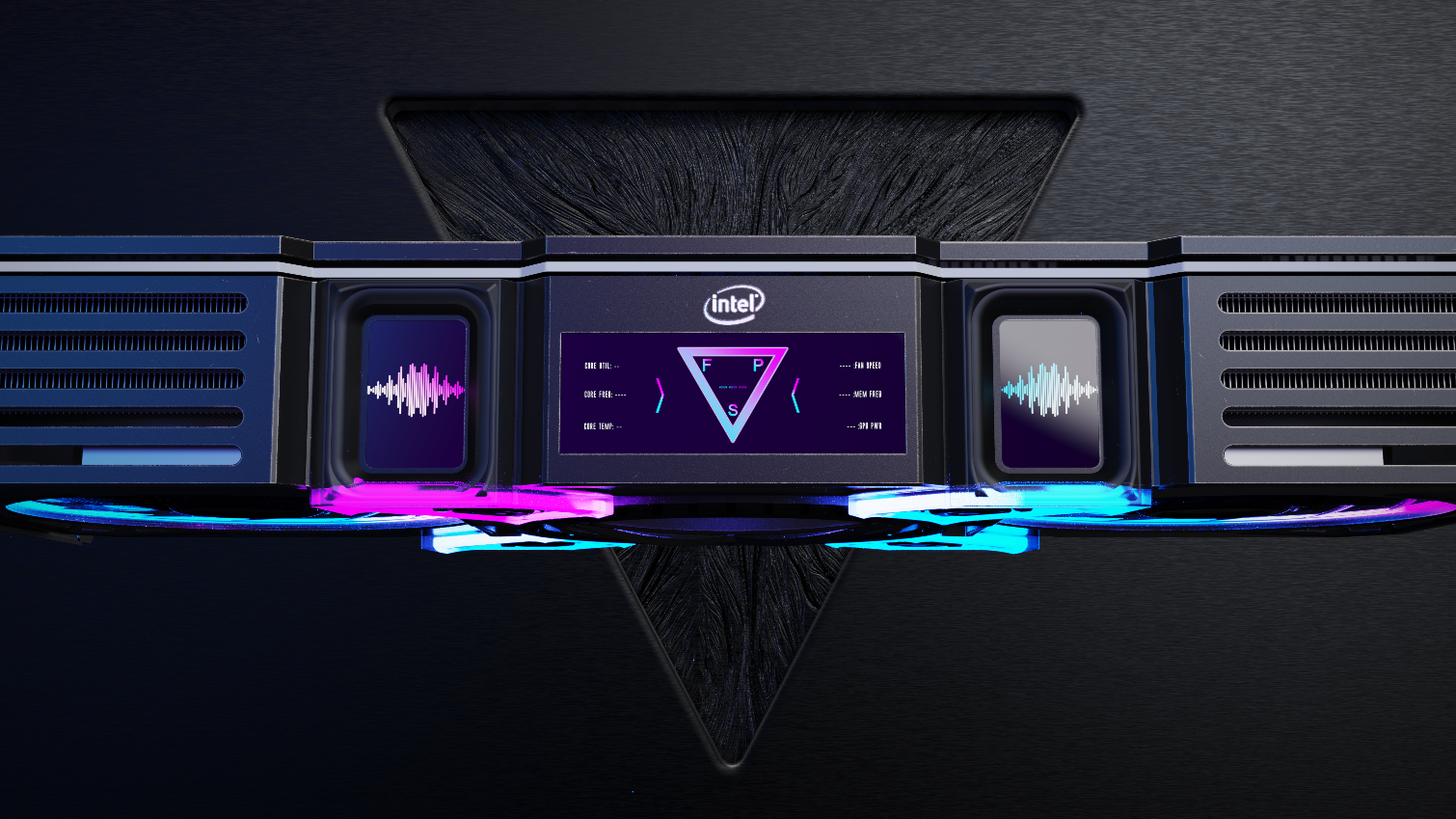

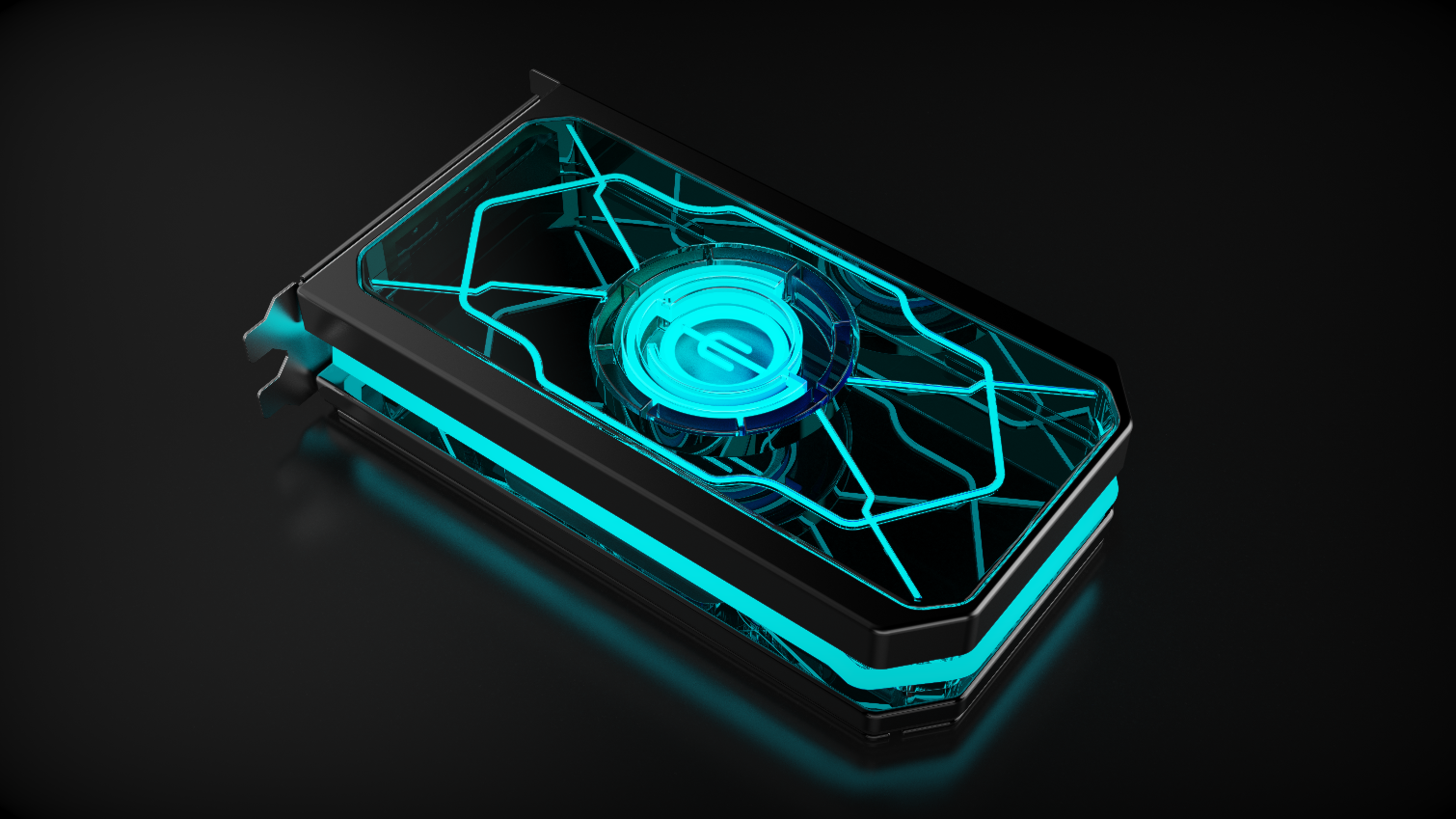

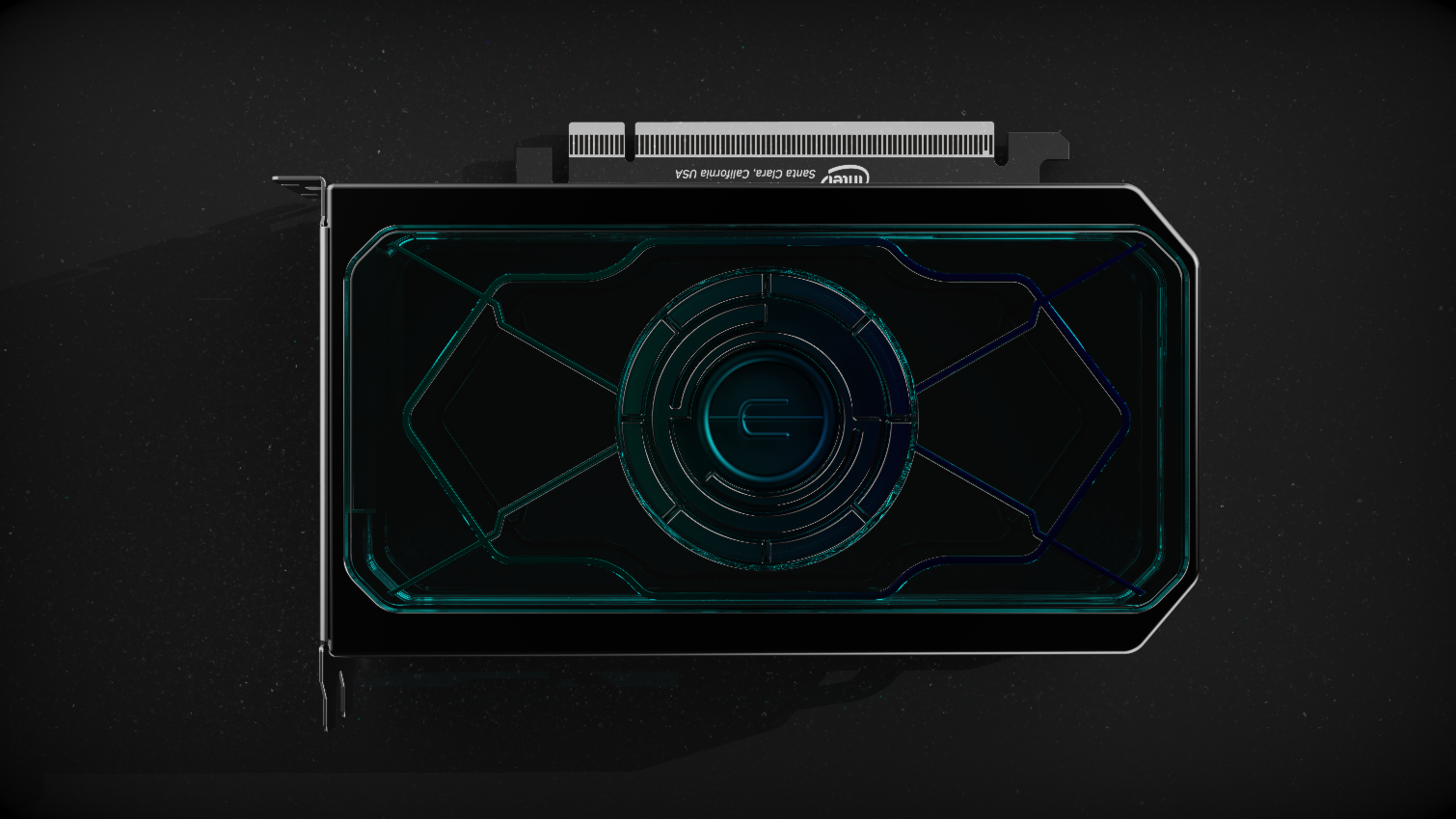

Intel also showed off several conceptual renders of what graphics cards might look like in the year 2035. To be clear, these aren't planned graphics cards, but they do give us an interesting look at what the future may hold. Check them out in the albums below.

Andromeda

Gemini

Oblivion

Prometheus

Sirius

If you want to learn more about Intel's upcoming Xe Graphics card, head to our roundup of all we know.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


southernshark 2035 Intel releases 7nm graphics cards....

Most of those designs look like graphics cards released today. 2035 doesn't seem very original.

hotaru251 Prometheus & Sirius are best.

Andromeda looks like... a completely translucent GPU?

Oblivion looks nice, but its thermals would suck ;|

Similar for Gemini.

That could be done by making a magnetic fan that's swappable... but again it's not actually doing the job of a fan and would have awful thermals.

thegriff Are these going to come out around the same time as Navi graphics? (OK, just a dig at AMD dragging it on forever, kinda like Intel dragging on its 10nm process forever) :)

cryoburner

southernshark said: Most of those designs look like graphics cards released today.

That is just what I was going to say. This concept art doesn't show much imagination. Graphics cards still connecting via a PCIe x16 slot, in pretty much the exact same form factor they have today? And RGB is still in style? Prometheus looks like it could be a card getting released this year. Sirius and Andromeda are a lot more vague about how they are actually intended to dissipate heat. Are they solid blocks of efficient crystal circuitry, perhaps? That still connect to a PCIe slot for some reason, since apparently motherboards don't have access to the same tech? Sirius even has its connector on the wrong side. Yes, I am being way too critical. :P

In any case, something tells me graphics cards in 2035 might be rather different from this. Unless there are some massive breakthroughs in chip design that bring far more efficiency, performance gains are going to continue to slow down. To gain much more performance, chips may need to get bigger, draw more power, and become more expensive, and it's questionable how practical that would be for consumer products. It's possible that upgradable graphics cards as we know them might even get phased out, in favor of APUs containing the CPU, GPU and memory all in one chip, or at least on the same board, as we see with consoles and laptops. If the performance gains slow enough, people may not be upgrading components often enough to support a market of dedicated cards like these.

ElectrO_90 Xe is based on AMD leasing its technologies to them, which was their biggest mistake.

Intel has enough money to invest in this and then overtake AMD and Nvidia in the long run (as long as they learn how to die shrink).

Giroro Based on the last 20 years of graphics card design progression:

A single GPU in 2035 will use approximately 120 Watts of power, occupy between 4 and 6 PCI slots, contain a minimum of 5 fans, be 22 inches long, and be built on a 5nm+++++ process.

The backplate will be accompanied by 2 'side plates' for stability, but they will do nothing to help dissipate heat, even though they easily could.

JQB45 Computers in the future will simply be terminals connected to a vast network of quantum computers. I agree that SoCs are more likely to take over than massive discrete graphics cards. PCIe and DDR standards will be replaced with new standards we haven't heard of yet. Everyone in the developed world will have a minimum of 1GBps wireless satellite internet. x86-64 processors will lose their 16- and 32-bit capabilities, if x86-64 is even a viable technology in 2035. This will make a lot of room available to focus on the core 64-bit technology.

And since cellphones are computers, they will see technological leaps as well, and will likely all be satellite phones; ground-based towers may finally disappear.

TJ Hooker

ElectrO_90 said: Xe is based on AMD leasing its technologies to them, to which was their biggest mistake.

Did that ever actually happen? It was rumored, but then IIRC all that ended up happening is that AMD just sold them finished GPU dies, rather than license the GPU IP to Intel for them to develop their own.

jimmysmitty

southernshark said: 2035 Intel releases 7nm graphics cards....

Most of those designs look like graphics cards released today. 2035 doesn't seem very original.

Considering that silicon is being stretched to its limits with 7nm, and even further with 5nm, we might actually still be on 7nm or 5nm. We might get to a point where we won't be looking at the nm anymore.

We may be able to go further but it will require moving beyond silicon and even then 1nm is probably the limit we will hit with physical materials. We will probably have to look into organic processors beyond that.

TJ Hooker said: Did that ever actually happen? It was rumored, but then IIRC all that ended up happening is that AMD just sold them finished GPU dies, rather than license the GPU IP to Intel for them to develop their own.

I heard similar to what you heard, that they did it to put some Vega chips into Intel CPUs.

https://www.extremetech.com/extreme/249581-intel-puts-kibosh-reports-will-license-amd-gpu-technology

From what I know, Intel has quite a few graphics patents; I have heard more than both AMD and Nvidia.