MIT's Superconducting Qubit Breakthrough Boosts Quantum Performance

A new MIT circuit based on an alternative qubit design promises significantly better performance through improved error-correction capabilities.

Science (like us) isn't always sure where the best possible future lies, and computing is no exception. Whether in classic semiconductor systems or in the forward-looking world of quantum computing, there are sometimes multiple paths forward (here's our primer on quantum computing if you want a refresher). Transmon superconducting qubits (such as the ones used by IBM, Google, and Alice&Bob) have gained traction as one of the most promising qubit types. But new MIT research could open the door to another type of superconducting qubit, one that is more stable and could enable more complex computation circuits: the fluxonium qubit.

Qubits are the quantum computing equivalent of transistors: put more of them together, and you get more computing performance (in theory). But while transistors are deterministic and can only represent a binary state (think of a coin that has landed on one side or the other, mapped to either 0 or 1), qubits are probabilistic and can represent the coin's different positions while it's still spinning in the air. This lets you explore a much larger space of possible solutions than can easily be represented in binary, which is why quantum computing can process certain problems much faster.
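The "spinning coin" can be made a little more precise. A single qubit's state is described by two complex amplitudes, and on measurement it collapses to 0 or 1 with probabilities given by the squared magnitudes of those amplitudes (the Born rule). The minimal Python sketch below simulates that on a classical computer; the function names are hypothetical, and sampling outcomes classically like this illustrates the probabilities only, not where quantum speedups come from.

```python
import cmath
import math
import random


def measurement_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Born rule for one qubit: P(0) = |alpha|^2 and P(1) = |beta|^2."""
    p0, p1 = abs(alpha) ** 2, abs(beta) ** 2
    if not math.isclose(p0 + p1, 1.0, rel_tol=1e-9):
        raise ValueError("amplitudes must be normalized")
    return p0, p1


def measure(alpha: complex, beta: complex, shots: int, seed: int = 0) -> dict[int, int]:
    """Simulate repeated measurements: each shot 'lands the coin' on 0 or 1."""
    p0, _ = measurement_probabilities(alpha, beta)
    rng = random.Random(seed)  # seeded so the run is reproducible
    counts = {0: 0, 1: 0}
    for _ in range(shots):
        counts[0 if rng.random() < p0 else 1] += 1
    return counts


# An equal superposition: the coin still spinning, with 50/50 odds.
alpha = 1 / math.sqrt(2)
beta = cmath.exp(1j * math.pi / 4) / math.sqrt(2)  # a relative phase leaves the odds unchanged
print(measurement_probabilities(alpha, beta))
print(measure(alpha, beta, shots=10_000))
```

Roughly half of the 10,000 simulated shots land on each side. The phase on `beta` only starts to matter once gates make amplitudes interfere, which this toy sampler does not model.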

One current limitation of quantum computing is the accuracy of its results. If you're looking for, say, new drug designs, it'd be an understatement to say you need the results to be correct, replicable, and demonstrable. But qubits are sensitive to external stressors such as temperature, magnetic fields, vibrations, collisions with fundamental particles, and other factors, which can introduce errors into a computation or collapse entangled states entirely. The fact that qubits are far more prone to external interference than transistors is one of the main roadblocks to quantum advantage, so improving the accuracy of computed results is key.

It's also not just a matter of applying error-correcting code to low-accuracy results and magically turning them into the correct results we want. IBM's recent breakthrough in this area (on transmon qubits) relied on modeling the environmental noise affecting the qubit system. Being able to predict that noise means you can account for its effects on the skewed results and compensate for them accordingly, arriving closer to the desired ground truth.
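One widely used way to "predict and compensate" for noise without full error correction is zero-noise extrapolation: run the same circuit at deliberately amplified noise levels, then extrapolate the measured results back to zero noise. The Python sketch below illustrates that general idea with Richardson (polynomial) extrapolation on a made-up exponential-decay noise model. The model, its constants, and the function names are hypothetical, and this is not a reproduction of IBM's actual method.

```python
import math

# Hypothetical toy model, not real hardware data: the ideal expectation value
# is 0.8, and noise damps it exponentially as the noise is amplified.
IDEAL_VALUE = 0.8
DECAY_PER_UNIT_NOISE = 0.1


def noisy_expectation(noise_scale: float) -> float:
    """Stand-in for running the same circuit with its noise scaled up."""
    return IDEAL_VALUE * math.exp(-DECAY_PER_UNIT_NOISE * noise_scale)


def richardson_zero_noise(scales: list[float], values: list[float]) -> float:
    """Evaluate the polynomial through (scale, value) points at scale = 0.

    Uses Lagrange weights L_i(0) = prod_{j != i} s_j / (s_j - s_i).
    """
    estimate = 0.0
    for i, (s_i, v_i) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, s_j in enumerate(scales):
            if j != i:
                weight *= s_j / (s_j - s_i)
        estimate += weight * v_i
    return estimate


scales = [1.0, 2.0, 3.0]  # 1.0 = the hardware's native noise level
values = [noisy_expectation(s) for s in scales]
raw = values[0]
mitigated = richardson_zero_noise(scales, values)
print(f"raw: {raw:.4f}  mitigated: {mitigated:.4f}  ideal: {IDEAL_VALUE}")
# raw: 0.7239  mitigated: 0.7993  ideal: 0.8
```

The extrapolated value lands much closer to the ideal than the raw measurement, but only because the toy noise model is smooth and well behaved; on real hardware the quality of the noise model is what makes or breaks this approach.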

But before error-correction codes can be applied usefully, the system has to clear a "fidelity threshold": a minimum operating accuracy. Below it, the extra qubits and operations that error correction requires introduce errors faster than the code can remove them; above it, error-correcting codes can suppress errors enough for us to extract predictably useful, accurate results from a quantum computer.
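The threshold idea can be illustrated with a common rule of thumb for the surface code, where the logical error rate scales roughly as $p_L \approx A\,(p/p_{\text{th}})^{(d+1)/2}$ for physical error rate $p$, threshold $p_{\text{th}}$, and code distance $d$ (a larger $d$ means a bigger code). In the sketch below, $A = 0.1$ and $p_{\text{th}} = 1\%$ are illustrative assumptions rather than figures from the MIT work, and the function name is hypothetical.

```python
def logical_error_rate(physical_error: float, distance: int,
                       threshold: float = 0.01, prefactor: float = 0.1) -> float:
    """Rule-of-thumb surface-code scaling: p_L ~ A * (p / p_th) ** ((d + 1) / 2).

    threshold (~1%) and prefactor (0.1) are illustrative assumptions. The
    approximation is only meaningful well below 1, so the result is capped at 1.
    """
    return min(1.0, prefactor * (physical_error / threshold) ** ((distance + 1) / 2))


# 0.1% physical error (99.9% fidelity) versus 1.5% physical error (98.5% fidelity)
for p in (0.001, 0.015):
    rates = [logical_error_rate(p, d) for d in (3, 5, 7)]
    print(p, ["%.1e" % r for r in rates])
```

Below the threshold, each step up in code size cuts the logical error rate by roughly another factor of ten here; above it, the same bigger code makes results worse, which is why clearing the threshold has to come first.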

Some qubit architectures, such as the fluxonium qubits this research is based on, are inherently more stable against external interference. This lets them stay coherent for longer; coherence time measures how long a qubit preserves its quantum information before that information is lost to the environment. Researchers are interested in fluxonium qubits because they've already achieved coherence times of more than a millisecond, around ten times longer than what can be achieved with transmon superconducting qubits.
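A quick way to see why longer coherence matters is to count how many operations fit into it. Assuming a simple exponential-decay model (real qubits have several distinct coherence times, such as T1 and T2) and a hypothetical 100 ns gate, the sketch below estimates how many back-to-back gates a qubit can run before decoherence alone costs more than 1% of its information. The gate time, the 1% budget, and the function names are all illustrative assumptions.

```python
import math


def survival_fraction(elapsed_s: float, coherence_s: float) -> float:
    """Simple exponential-decay model of how much coherence remains."""
    return math.exp(-elapsed_s / coherence_s)


def gates_within_budget(gate_time_s: float, coherence_s: float,
                        min_fraction: float = 0.99) -> int:
    """Largest n with exp(-n * gate_time / coherence) >= min_fraction."""
    # Solving for n gives n <= -coherence * ln(min_fraction) / gate_time.
    return math.floor(-coherence_s * math.log(min_fraction) / gate_time_s)


GATE_TIME_S = 100e-9  # hypothetical 100 ns gate
for label, coherence_s in (("0.1 ms", 1e-4), ("1 ms", 1e-3)):
    print(label, gates_within_budget(GATE_TIME_S, coherence_s))
```

Under these assumptions, going from 0.1 ms to 1 ms of coherence takes the budget from about 10 gates to about 100: a tenfold gain in coherence buys a tenfold longer circuit.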

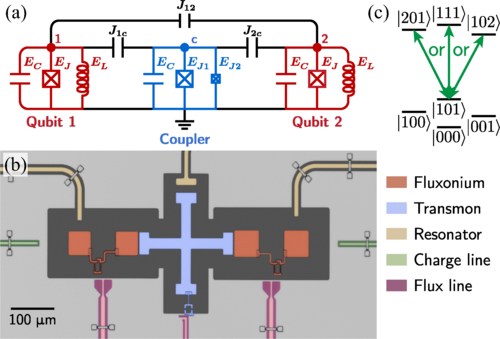

The new architecture allows operations between fluxonium qubits to be performed with high accuracy: the research team ran fluxonium-based two-qubit gates at 99.9% accuracy and single-qubit gates at a record 99.99% accuracy. The architecture and design were published under the title "High-Fidelity, Frequency-Flexible Two-Qubit Fluxonium Gates with a Transmon Coupler" in Physical Review X.
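Why chase that last decimal place? Gate errors compound across a circuit. Assuming errors are independent, a circuit's chance of running error-free is roughly the product of its gate fidelities. The sketch below, with a hypothetical helper name and made-up gate counts, shows how quickly small per-gate errors add up.

```python
def circuit_success_estimate(gates):
    """Estimate the chance a circuit runs error-free.

    gates: iterable of (fidelity, count) pairs. Assumes independent errors,
    so the overall success probability is the product of fidelity ** count.
    """
    success = 1.0
    for fidelity, count in gates:
        success *= fidelity ** count
    return success


# 1,000 two-qubit gates at 99.9% plus 1,000 single-qubit gates at 99.99%
print(circuit_success_estimate([(0.999, 1000), (0.9999, 1000)]))  # about 0.33
# The same circuit with 99% two-qubit gates almost never succeeds
print(circuit_success_estimate([(0.99, 1000), (0.9999, 1000)]))
```

Moving the two-qubit gates from 99% to 99.9% turns a circuit that essentially never finishes cleanly into one that does about a third of the time, which is also why fidelity thresholds matter so much for error correction.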


You could think of fluxonium qubits as an alternative qubit architecture with its own strengths and weaknesses, rather than as an evolution of the quantum computing that came before. Transmon qubits are made of a single Josephson junction shunted by a large capacitor, while fluxonium qubits are made of a small Josephson junction in series with an array of larger junctions or a high-kinetic-inductance material. This is partly why fluxonium qubits are harder to scale: they require more sophisticated coupling schemes between qubits, sometimes even using transmon qubits for that purpose. The design described in the paper does just that, in what's called a Fluxonium-Transmon-Fluxonium (FTF) architecture.
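For readers who want the physics behind that description, the standard textbook circuit Hamiltonians make the difference concrete. These are generic models from the literature, not equations taken from the MIT paper. Here $E_C$ is the charging energy, $E_J$ the Josephson energy of the (small) junction, $E_L$ the inductive energy of the junction array or high-kinetic-inductance material, $\hat{n}$ the Cooper-pair number operator, $\hat{\varphi}$ the superconducting phase across the junction, $n_g$ an offset charge, $\Phi_{\text{ext}}$ the external magnetic flux through the fluxonium loop, and $\Phi_0$ the magnetic flux quantum.

```latex
H_{\text{transmon}} = 4E_C\,(\hat{n} - n_g)^2 - E_J \cos\hat{\varphi}

H_{\text{fluxonium}} = 4E_C\,\hat{n}^2 - E_J \cos\!\left(\hat{\varphi} - \varphi_{\text{ext}}\right) + \tfrac{1}{2}E_L\,\hat{\varphi}^2,
\qquad \varphi_{\text{ext}} = 2\pi\,\Phi_{\text{ext}}/\Phi_0
```

In the transmon, the large shunt capacitor keeps $E_C$ small ($E_J/E_C \gg 1$), which suppresses its sensitivity to charge noise. In the fluxonium, the extra $\tfrac{1}{2}E_L\hat{\varphi}^2$ term is exactly what the array of larger junctions (or the high-kinetic-inductance material) contributes, and the external flux gives an additional knob for tuning the qubit.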

Transmon qubits such as the ones used by IBM and Google are relatively easier to scale into bigger qubit arrays (IBM's Osprey already has 433 qubits) and have faster operation times, performing fast, simple gate operations driven by microwave pulses. Fluxonium qubits, by contrast, make it possible to perform slower but more accurate gate operations using shaped pulses than a transmon-only approach would allow.
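"Shaped pulses" here means shaping the microwave drive's amplitude over time rather than switching it on and off abruptly. A common textbook choice is a Gaussian envelope; for a simple resonant drive, the qubit's rotation angle equals the area under the drive-strength curve $\Omega(t)$. The sketch below builds a Gaussian pulse that performs a π rotation (a bit flip) and shows that a longer pulse needs a lower peak drive for the same rotation. The function name, durations, and widths are illustrative assumptions, and the paper's actual pulse shapes may differ.

```python
import math


def gaussian_pulse(duration_ns: float, sigma_ns: float,
                   rotation_angle: float = math.pi, steps: int = 200):
    """Sample a Gaussian drive envelope Omega(t), in rad/ns, on a uniform grid.

    For a resonant drive, rotation angle = integral of Omega(t) dt, so the
    envelope is rescaled until its area equals rotation_angle.
    Returns (samples, dt_ns).
    """
    dt = duration_ns / steps
    center = duration_ns / 2
    # Midpoint sampling keeps the pulse symmetric about its center.
    raw = [math.exp(-(((k + 0.5) * dt - center) ** 2) / (2 * sigma_ns ** 2))
           for k in range(steps)]
    scale = rotation_angle / (sum(raw) * dt)
    return [scale * a for a in raw], dt


short_pulse, dt_short = gaussian_pulse(duration_ns=40, sigma_ns=10)
long_pulse, dt_long = gaussian_pulse(duration_ns=160, sigma_ns=40)

# Both pulses implement the same pi rotation...
print(sum(short_pulse) * dt_short, sum(long_pulse) * dt_long)
# ...but the slower pulse gets there with a much gentler peak drive.
print(max(short_pulse), max(long_pulse))
```

Spreading the same rotation over a longer, smoother pulse lowers the peak drive and narrows the pulse's frequency content, which generally makes it less likely to excite unwanted transitions; the price is a slower gate, the same speed-for-accuracy trade-off described above.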

There's no promise of an easy road to quantum advantage through any qubit architecture; that's why so many companies are pursuing different approaches. In this scenario, it may be useful to think of the current Noisy Intermediate-Scale Quantum (NISQ) era as the age in which multiple quantum architectures flourish. From topological superconductors (as pursued by Microsoft) through diamond vacancies, transmon superconducting qubits (IBM, Google, others), ion traps, and a myriad of other approaches, this is the age in which quantum computing will settle into certain patterns. All architectures may flourish, but it's perhaps more likely that only some will, which also explains why states and corporations aren't betting on a single qubit architecture.

The numerous, apparently viable approaches to quantum computing we're witnessing put us somewhere akin to the branching paths that preceded x86's rise as the premier architecture for binary computing. It remains to be seen whether the quantum computing world will readily (and peacefully) settle on a particular technology, and what a heterogeneous quantum future would look like.

Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.