Get Your Frame Buffers Ready: Nvidia Demos Upcoming Iray VR At GTC 2016

Nvidia announced Iray VR and Iray VR Lite at GTC 2016, and while neither will be out for some time yet, the demos were very impressive.

One of Nvidia’s big announcements at GTC 2016 is its new Iray VR, which is basically the VR-enabled version of its original Iray plugin for 3ds Max, Maya, and other 3D modelling software. The original plugin is what you’d use to render photorealistic images of your models, but of course that’s not sufficient in VR – in VR you want to be able to turn your head and look around, and Iray VR enables you to render still scenes built in 3D modelling applications for viewing through a VR headset.

For GTC, Nvidia has demos of Iray VR, Iray VR Lite, and a live version of the Iray VR Lite demo rendered in real time.

The simplest version, Iray VR Lite, renders a spherical stereoscopic panorama, and you can look all around from that one specific point. The benefit of Iray VR Lite is that post-rendering, it has relatively low requirements. The catch is that you can’t move your head around--that is, you cannot move your head through 3D space.

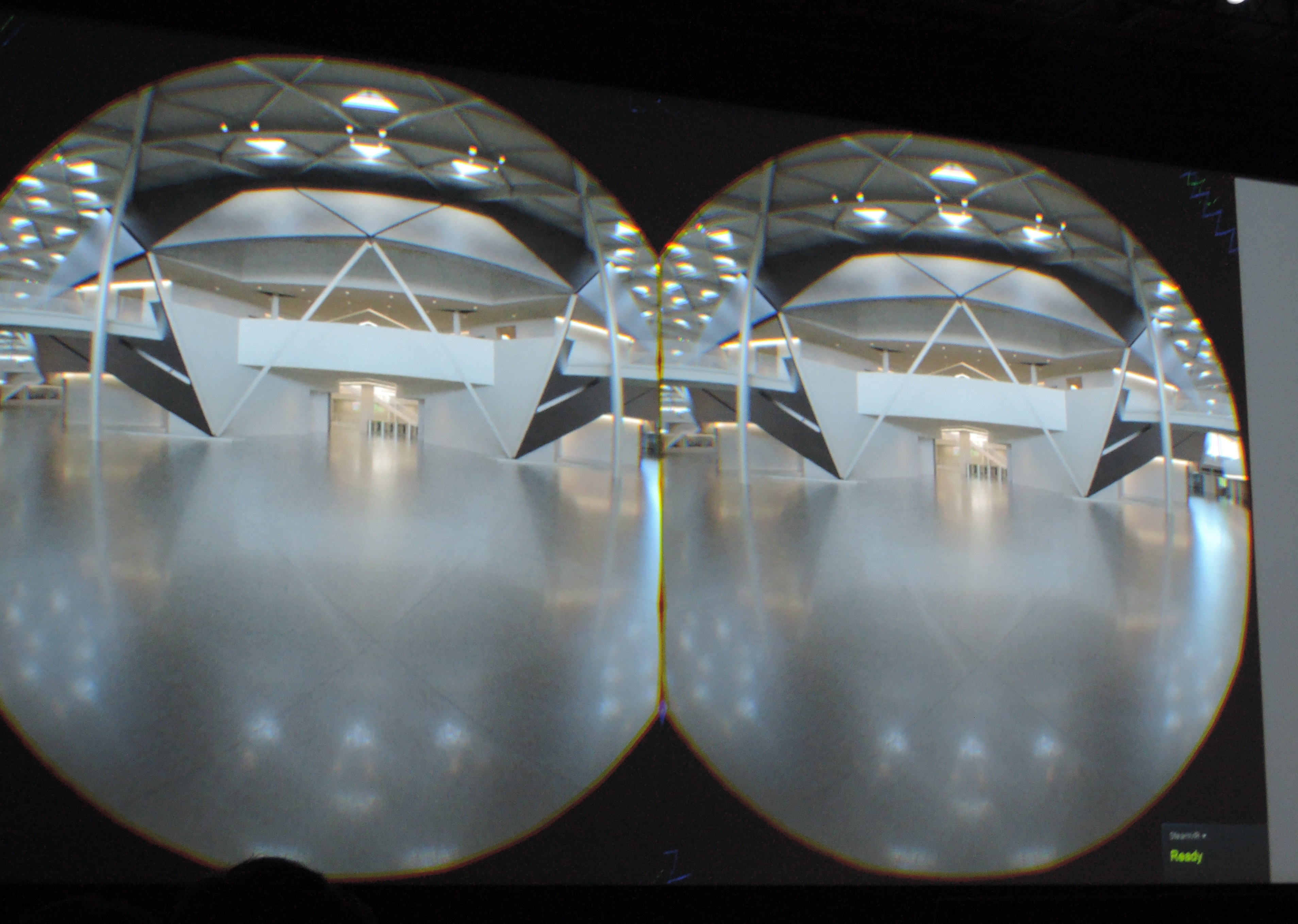

I got the chance to experience a demonstration of Iray VR Lite on an HTC Vive, and in this demo Nvidia had a handful of pre-defined head positions. I could look around, and in the distance there would be a glowing green orb. Using the controller, I could aim at it and trigger a teleport. With that, I was able to maneuver through Nvidia's upcoming headquarters, Endeavor. It was quite an impressive demo, but lacking the ability to move my head around did feel, well, lacking.

I asked how big a frame buffer such a demo required, and Nvidia explained that for the demo it is quite large, particularly because of the glowing orbs and the use of uncompressed images. When the final release comes, approximately an 8 GB frame buffer should be ample for most demos.
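To get a feel for why uncompressed images fill a frame buffer so quickly, here is a rough back-of-the-envelope sketch. Nvidia did not state the panorama resolution or pixel format, so the 4096x2048 RGBA figures and the function names below are purely illustrative assumptions, and the buffer is counted in GiB for simplicity.

```python
# Rough estimate only: the resolution and pixel format are assumptions,
# not figures from Nvidia.

def panorama_bytes(width, height, channels=4, bytes_per_channel=1, eyes=2):
    """Bytes needed for one uncompressed stereo panorama (one image per eye)."""
    return width * height * channels * bytes_per_channel * eyes


def viewpoints_that_fit(buffer_gib, width, height, **kwargs):
    """How many pre-rendered head positions fit in a buffer of buffer_gib GiB."""
    per_view = panorama_bytes(width, height, **kwargs)
    return (buffer_gib * 1024 ** 3) // per_view


# Hypothetical 4096x2048 panorama, 8-bit RGBA, two eyes.
per_view = panorama_bytes(4096, 2048)
print(per_view / 1024 ** 2)                # 64.0 (MiB per head position)
print(viewpoints_that_fit(8, 4096, 2048))  # 128
```

Under these assumptions each head position costs 64 MiB, so the footprint grows linearly with the number of teleport points, and higher resolutions or wider pixel formats would raise it further.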

Nvidia also showed a live version of the Iray VR Lite demo, although for all intents and purposes, this demo was very much of the “because we can” variety. It ran on Nvidia’s VCA (Visual Computing Appliance) with 32 nodes in Santa Clara, with the output transmitted to the convention center through a very fast Internet connection. The demo showed a BMW Z4, and I could change its color, interior detailing, rims, and the environment it was situated in. As in the VR Lite demo, the graphics were photorealistic, and it really did feel like I was in a BMW Z4 when I selected the driver's view.

In the demo, it was clear that the system in Santa Clara was working very hard to render the scene. As it first came up, the image was still quite fuzzy, and only sharpened after a few seconds. As I looked around, I could also see the scene being built up around me, with the areas not yet rendered shown simply as white space. Calling it "real-time" is a bit of a stretch.
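The fuzzy-then-sharp behavior is typical of renderers that refine an image by averaging more and more noisy samples per pixel. The sketch below is a generic illustration of that idea, not Iray's actual algorithm: the function name, the Gaussian noise model, and the flat gray "scene" are all assumptions made for the example.

```python
import random

# Generic illustration of progressive refinement; not Nvidia's implementation.

def progressive_render(true_image, passes, noise=0.2, seed=0):
    """Average noisy per-pixel samples and record the mean error after each pass."""
    rng = random.Random(seed)
    accum = [0.0] * len(true_image)
    history = []
    for n in range(1, passes + 1):
        for i, value in enumerate(true_image):
            sample = value + rng.gauss(0.0, noise)  # one noisy estimate of the pixel
            accum[i] += (sample - accum[i]) / n     # running mean over n samples
        error = sum(abs(a - t) for a, t in zip(accum, true_image)) / len(true_image)
        history.append(error)
    return accum, history


# A flat mid-gray "scene" of 200 pixels, refined over 64 passes.
image, errors = progressive_render([0.5] * 200, passes=64)
print(f"error after 1 pass:    {errors[0]:.3f}")
print(f"error after 64 passes: {errors[-1]:.3f}")
```

Each pass adds one sample per pixel, so the first frame is noisy and later frames converge, which matches the image sharpening over a few seconds.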


The full version, Iray VR, is more capable, but it has a serious performance cost. It enables you to move your head around within the scope of what Nvidia calls a "light field." This demo has five degrees of freedom: You can move your head through three dimensions, and you can look up and down or side to side (the other two degrees of freedom). Iray VR calculates the light rays you would see from each direction at every point in space within the light field. Additionally, for the demo, Nvidia enabled tone mapping, so as I moved from looking at a dark part inside the building to looking out of a window, the lighting changed.

Of course, you can see where this gets difficult: Rendering a scene like this generates tremendous amounts of data. Therefore, the demo works best on a graphics card with a huge frame buffer. For that reason, Nvidia used the 24 GB Quadro M6000 for the demos. Fortunately, you do not need a large amount of GPU compute power to display a rendered scene--just the enormous frame buffer.
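To illustrate how quickly the data grows, consider a naive light field that stores one color sample for every combination of head position and view direction. Nvidia has not described how Iray VR actually stores or compresses its light field, so the sampling grid, the 3-byte RGB sample, and the function name here are hypothetical.

```python
# Naive, uncompressed storage model; every number here is an assumption.

def light_field_bytes(nx, ny, nz, n_yaw, n_pitch, bytes_per_sample=3):
    """Bytes for one sample per (position, direction) pair in a 5D grid."""
    positions = nx * ny * nz        # three positional degrees of freedom
    directions = n_yaw * n_pitch    # two rotational degrees of freedom
    return positions * directions * bytes_per_sample


GIB = 1024 ** 3
for n in (16, 32, 64):
    size = light_field_bytes(n, n, n, n_yaw=512, n_pitch=256)
    print(f"{n}^3 positions: {size / GIB:.1f} GiB")
```

With these made-up numbers, the footprint goes from 1.5 GiB to 12 GiB to 96 GiB as the positional grid doubles per axis, which suggests why a very large frame buffer helps.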

Iray VR Lite will be available as early as June. Availability for the full version of Iray VR with 5 degrees of freedom will be announced this spring.

(Note that except for the camera-shot image, the renders shown above are from the existing version of Iray, not Iray VR.)


Niels Broekhuijsen is a Contributing Writer for Tom's Hardware US. He reviews cases, water cooling and PC builds.


lun471k: These are the things we need for this industry to become affordable and really, really mind-blowing.

randomizer, quoting the article: Calling it "real-time" is a bit of a stretch.

I don't think NVIDIA ever calls it "real time". The correct term for this sort of renderer is "progressive".

dstarr3: Is that BMW picture a photo or a 3D render? Because if that's not real, that's bloody incredible.

Deehomes, quoting dstarr3: Is that BMW picture a photo or a 3D render? Because if that's not real, that's bloody incredible.

It was rendered.

blackbit75: I think that VR should have come with raytracing. It would have given VR its own visual style. But I suppose we didn't have enough power.

The market doesn't demand raytracing in VR, either, because we can't want what we don't know exists. But once it is implemented, we will not want to go back, I believe.

Can't wait for it.

alextheblue, quoting dstarr3: Is that BMW picture a photo or a 3D render? Because if that's not real, that's bloody incredible.

Keep in mind that's a still render. In 10 years' time we'll be doing that at 60 FPS or better. :D

dstarr3, quoting alextheblue: Keep in mind that's a still render. In 10 years' time we'll be doing that at 60 FPS or better. :D

It's still bloody impressive as a still render. Just, the sheer amount of detail is staggering. The lighting, the reflections, the depth of field, etc. It is just completely indistinguishable from a photograph.

bit_user, quoting dstarr3: It's still bloody impressive as a still render. Just, the sheer amount of detail is staggering. The lighting, the reflections, the depth of field, etc. It is just completely indistinguishable from a photograph.

Still renders often look better than when you see it animated.

We also don't know how much of the background is rendered vs. a photograph.

That said, I'm as hopeful for realtime raytracing as anyone else. I wonder if NV will add any specialized hardware engines to their GPUs, along the lines of what Imagination is doing.