Nvidia Outs Grace CPU Superchip Arm Server Lineup, Ships in Early 2023

Nvidia's first Arm CPUs make a big splash in a wave of reference designs

Nvidia's decision to build its own lineup of Arm-based server CPUs, dubbed the Grace CPU Superchip and the Grace Hopper Superchip, charted the company's course to building out full systems with its CPUs and GPUs in the same box. That initiative moved closer to reality today as Nvidia announced at Computex 2022 that several major server OEMs will offer dozens of reference systems based on its new Arm CPUs and Hopper GPUs in the first half of 2023.

Nvidia tells us that these new systems, which we break down below, will co-exist with its existing lineup of reference servers, so the company will continue to support x86 processors from AMD and Intel for the foreseeable future.

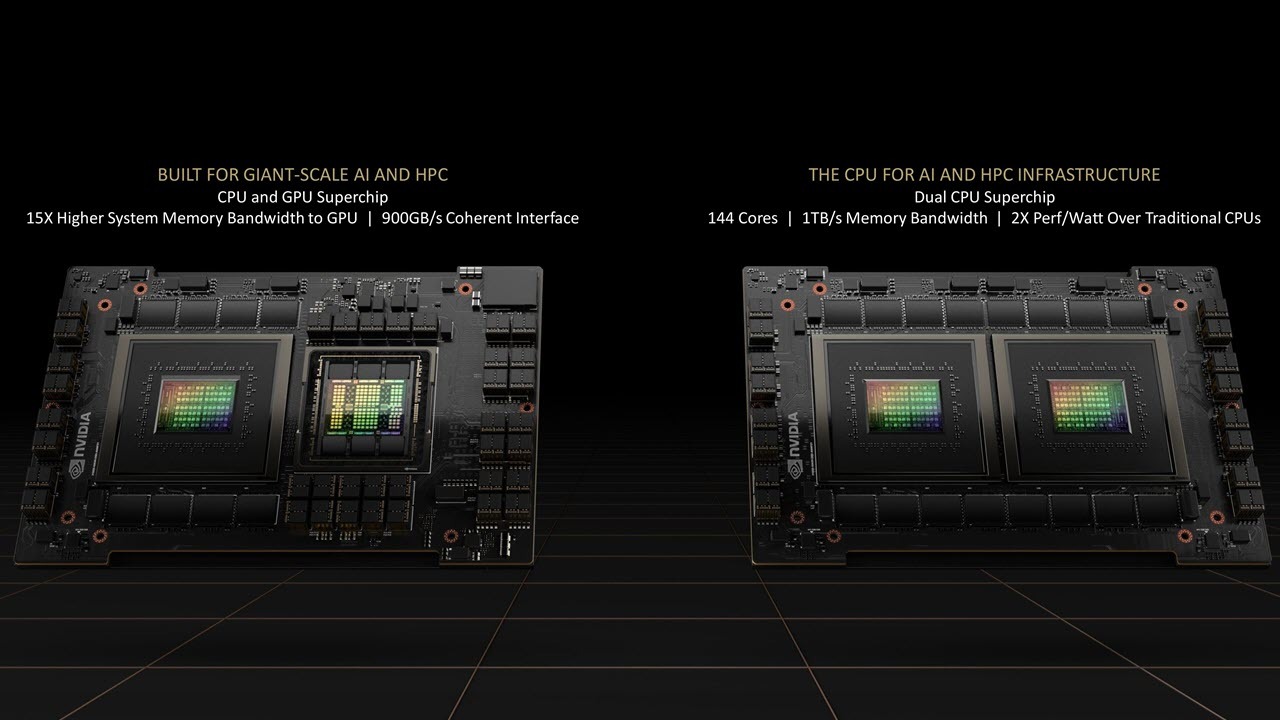

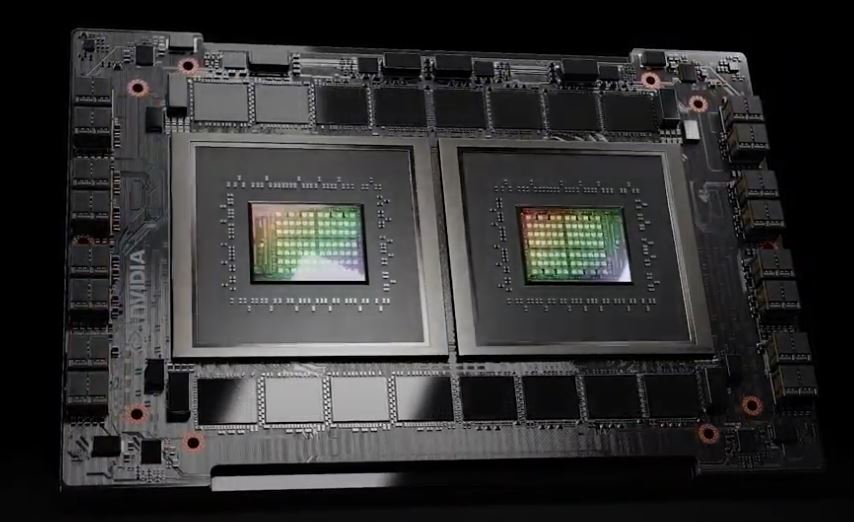

As a quick reminder, Nvidia's Grace CPU Superchip is the company's first CPU-only Arm chip designed for the data center and comes as two chips on one motherboard, while the Grace Hopper Superchip combines a Hopper GPU and the Grace CPU on the same board. The Neoverse-based CPUs support the Armv9 instruction set, and in both designs the two chips are fused together with Nvidia's newly branded NVLink-C2C interconnect tech.

Overall, Nvidia claims the Grace CPU Superchip will be the fastest processor on the market for a wide range of applications, like hyperscale computing, data analytics, and scientific computing, when it ships in early 2023.

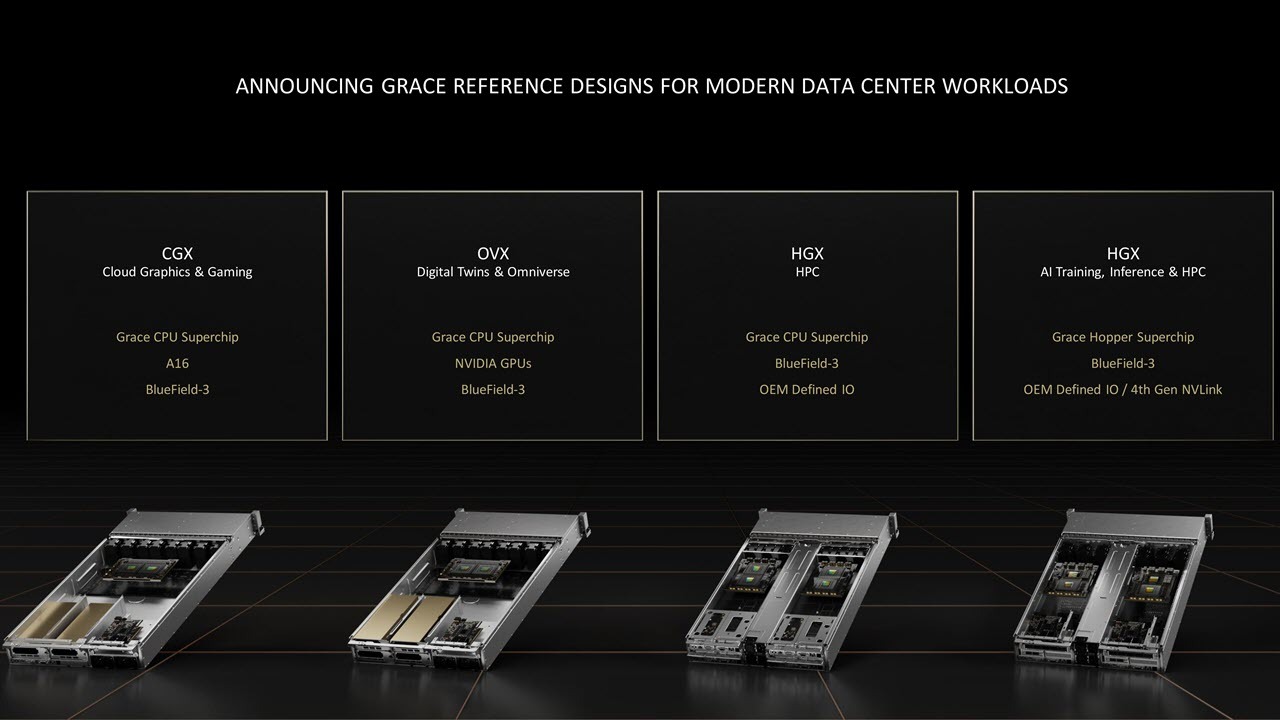

A slew of blue-chip OEM/ODMs, like Asus, Gigabyte, Supermicro, QCT, Wiwynn, and Foxconn, have dozens of new reference server designs planned for launch in the first half of 2023, indicating that Nvidia remains on track with its Grace CPU silicon. The OEMs will craft each of the server designs from one of Nvidia's four reference designs that include server and baseboard blueprints. These servers will be available in 1U and 2U form factors, with the former requiring liquid cooling.

The Nvidia CGX system for cloud graphics and gaming applications comes with the dual-CPU Grace Superchip paired with Nvidia's A16 GPUs. The Nvidia OVX servers are designed for digital twin and Omniverse applications and also come with the dual-CPU Grace, but they allow for more flexible pairings with many different Nvidia GPU models.

The Nvidia HGX platform comes in two flavors. The first is designed for HPC workloads and only comes with the dual-CPU Grace, no GPUs, and OEM-defined I/O options. Meanwhile, on the far right, we see the more full-featured HGX system for AI training, inference, and HPC workloads with the Grace CPU + Hopper GPU Superchip, OEM-defined I/O, and support for the option of fourth-gen NVLink for connections outside of the server via NVLink switches.

Notably, Nvidia will offer the NVLink option with its CPU+GPU Grace Hopper Superchip models, but not for systems powered by the dual-CPU Grace Superchip.

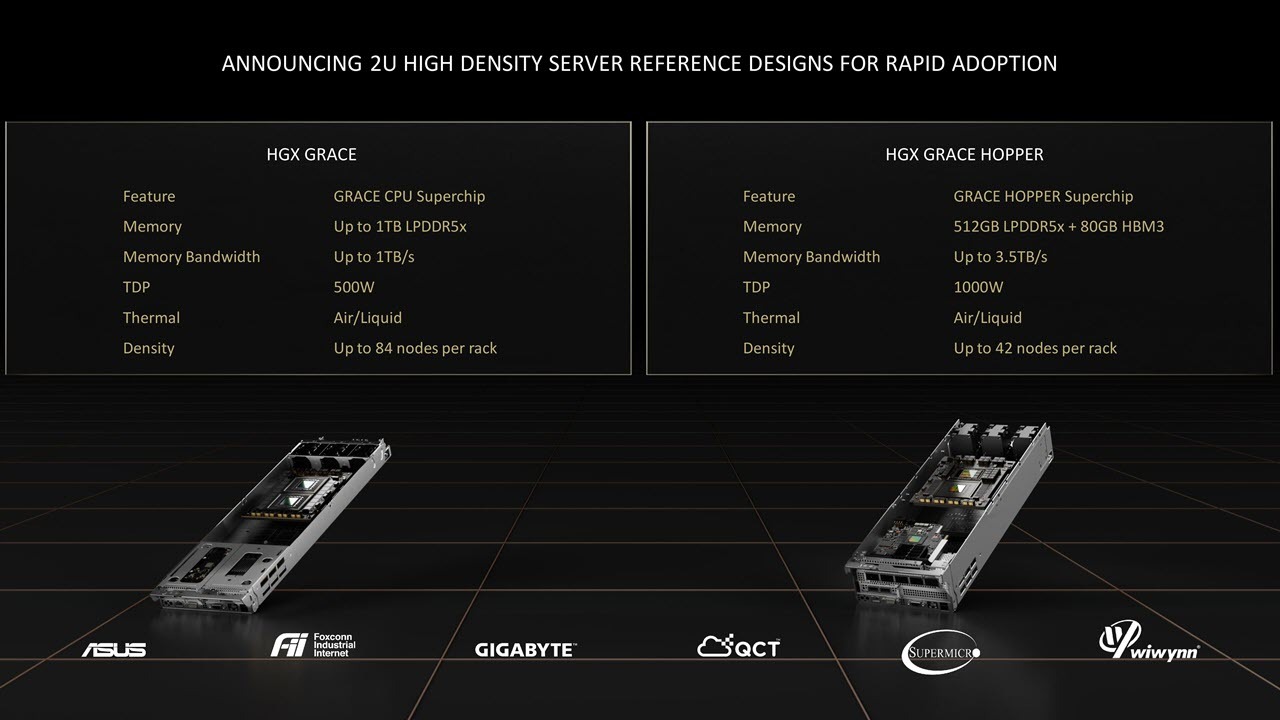

Here we can see the two different 2U blades that can power the HGX systems. The dual-CPU 'HGX Grace' CPU Superchip blade has up to 1 TB of LPDDR5x memory, provides up to 1 TB/s of memory bandwidth, adheres to a 500 W TDP envelope, can be cooled with either liquid or air, and supports two blades per node for up to 84 nodes per rack.

The HGX 'Grace Hopper' Superchip blade comes with a single Grace CPU paired with the Hopper GPU, providing 512 GB of LPDDR5x memory, 80 GB of HBM3, and up to a combined 3.5 TB/s of memory throughput. As you'd expect, given the addition of the GPU, this blade has a higher 1000 W TDP envelope and can be cooled with either air or liquid. This larger blade limits the HGX Grace Hopper systems to 42 nodes per rack.
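To put the two blades side by side, here is a minimal Python sketch that derives a few comparison figures from the numbers quoted above. The `Blade` class and its field names are hypothetical and exist only for illustration. The sketch also assumes that "1 TB" of LPDDR5x means 1024 GB and that the quoted "up to" figures can be treated as peak values. It does not attempt rack-level power totals, since the blade-to-node mapping is not fully specified here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Blade:
    """Hypothetical container for the per-blade figures quoted in the article."""
    name: str
    lpddr5x_gb: int       # LPDDR5x capacity (peak, "up to")
    hbm3_gb: int          # HBM3 capacity (0 for the CPU-only blade)
    mem_bw_tbps: float    # combined memory bandwidth in TB/s (peak)
    tdp_w: int            # TDP envelope in watts
    nodes_per_rack: int   # maximum nodes per rack

    @property
    def total_memory_gb(self) -> int:
        # Sum of CPU-attached and GPU-attached memory on the blade.
        return self.lpddr5x_gb + self.hbm3_gb

    @property
    def bw_gbps_per_watt(self) -> float:
        # Peak memory bandwidth (GB/s) per watt of TDP.
        return self.mem_bw_tbps * 1000 / self.tdp_w


# Assumption: 1 TB is treated as 1024 GB.
HGX_GRACE = Blade("HGX Grace", 1024, 0, 1.0, 500, 84)
HGX_GRACE_HOPPER = Blade("HGX Grace Hopper", 512, 80, 3.5, 1000, 42)

if __name__ == "__main__":
    for blade in (HGX_GRACE, HGX_GRACE_HOPPER):
        print(
            f"{blade.name}: {blade.total_memory_gb} GB total memory, "
            f"{blade.bw_gbps_per_watt:.1f} GB/s per watt, "
            f"{blade.nodes_per_rack} nodes per rack"
        )
```

On these quoted peak figures, the Grace Hopper blade trades half the rack density and double the TDP for 3.5 times the memory bandwidth. These are paper numbers from Nvidia's specifications, not measured results.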


Unsurprisingly, Nvidia offers all of these systems with the other key ingredient that is helping the company become a solutions provider: the 400 Gbps BlueField-3 Data Processing Units (DPUs) that are the fruits of its Mellanox acquisition. These chips offload critical work from the CPUs, allowing streamlined networking, security, storage, and virtualization/orchestration features.

Nvidia already has CGX, OVX, and HGX systems available with x86 CPUs from both Intel and AMD, and the company tells us that it plans to continue to provide those servers and develop newer revisions with Intel and AMD silicon.

"x86 is a very important CPU that is pretty much all of the market of Nvidia GPUs today. We'll continue to support x86, and we'll continue to support Arm-based CPUs, offering our customers in the market the choice of wherever they want to deploy accelerated computing," Paresh Kharya, Nvidia's Senior Director of Product Management and Marketing, told us.

That doesn't mean that Nvidia will pull performance punches, though. The company recently demoed its Grace CPU in a weather forecasting head-to-head with Intel's Ice Lake, claiming that its Arm chip is 2X faster and 2.3X more efficient. Those same Intel chips currently power Nvidia's OVX servers.

The company hasn't spared AMD, either. Nvidia also claims that its Grace CPU Superchip is 1.5X faster in the SPECrate_2017_int_base benchmark than the two previous-gen 64-core EPYC Rome 7742 processors it uses in its current DGX A100 systems.

We'll soon see if Nvidia's first foray into CPUs lives up to its claims. The reference CGX, OVX, and HGX systems will ship in the first half of 2023.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.